1. Introduction

Since almost all control systems are subject to random signals (such as those originating from system parameter variations and sensor noise, etc.), stochastic systems are widely encountered in control engineering design. Minimizing the randomness in the closed-loop system is one of the important practical issues in controller design. Therefore, minimum variance control [1] has attracted significant attention. Its purpose is to minimize the variations in the controlled system outputs or in the tracking errors. Indeed, even today, most stochastic control design methods have focused only on controlling the output mean and variance of stochastic systems. In general, these developments rely on the assumption that the system variables are Gaussian. Such assumptions, although strict, allow control engineers to use well-established stochastic theory to perform controller design and closed-loop system analysis.

In industrial processes, product quality data can be approximated by a Gaussian probability density function (PDF) when the system operates normally. However, when an abnormality occurs along the production line, the variability of these quality variables may no longer follow a Gaussian distribution. In this case, the manipulated variables need to be tuned to bring the quality variables back to their desired values. In fact, most industrial processes have difficulty meeting the Gaussian assumption because of mixtures of different disturbance sources, even Gaussian ones, or other factors. Moreover, nonlinearity in stochastic systems can lead to non-Gaussian randomness even if the disturbances follow a Gaussian distribution. Thus, the mean and variance of the system variables may be far from sufficient to characterize the statistical properties of the stochastic process. It is known that, in many cases, the behavior of a stochastic process can be completely characterized by the shape of its statistical distribution, represented by its PDF. Therefore, for analysis and design purposes, it is important to consider the entire PDF. PDF-shaping control addresses the issues mentioned above: by selecting a desired shape of the process PDF as the goal of the control design, it provides an accurate and flexible control strategy that can accommodate a wide class of objectives.

To solve the problems arising in paper-making processes, stochastic distribution control (SDC) theory was proposed by Wang (1996) [2]. This theory aims at controlling the shape of the output PDF, instead of only the mean and variance, of stochastic systems [3,4,5]. Since then, SDC has been used to handle stochastic systems with non-Gaussian disturbances, and linear-matrix-inequality-based convex optimization algorithms have been developed for control, filter design, and fault detection in non-Gaussian systems [6,7,8]. Nevertheless, the output PDF is not necessarily measurable; instead, a more general measure of uncertainty, namely entropy, has been used to characterize the uncertainty of the output tracking error. Compared with the PDF-shaping strategy of SDC, it is more straightforward to use a minimum error entropy (MEE) based stochastic control algorithm to design a controller for the tracking errors. The MEE criterion is also more appropriate than the traditional minimum mean square error (MSE) criterion when dealing with nonlinearities and non-Gaussian disturbances. Shannon entropy is the most important and commonly used measure in MEE-based stochastic control [9,10,11]. A well-known generalization of Shannon entropy is Renyi entropy, which reduces to Shannon entropy as its order approaches 1. The argument of the logarithm in Renyi entropy is called the information potential (IP); because the logarithm is monotonic, the IP can be used as an alternative entropy criterion. Puya et al. [12] applied a minimum Renyi entropy control scheme under an iterative learning control (ILC) framework to decrease the closed-loop randomness of the output for general nonlinear and unknown non-Gaussian stochastic systems; in addition, IP-based dynamic neural networks were used to model and control the plant. In [13], the quadratic IP of the tracking errors was employed to design controllers for nonlinear multivariate non-Gaussian systems.
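For reference, the order-α Renyi entropy of a density p is H_α = (1/(1−α)) log ∫ p^α(e) de, and its log argument V_α = ∫ p^α(e) de is the IP. The sketch below estimates the quadratic IP V_2 of a batch of tracking errors with the standard Parzen-window formula V̂_2 = (1/N²) Σ_i Σ_j G_{σ√2}(e_i − e_j), assuming Gaussian kernels with a user-chosen width `sigma` (a hypothetical setting, not taken from [12] or [13]). Because the estimate depends only on pairwise differences, it is shift-invariant, which is why MEE-type criteria add a bias term to the tracking error.

```python
import numpy as np

def gaussian_kernel(x, sigma):
    """Gaussian kernel G_sigma(x) = exp(-x^2 / (2 sigma^2)) / (sqrt(2 pi) sigma)."""
    return np.exp(-x**2 / (2.0 * sigma**2)) / (np.sqrt(2.0 * np.pi) * sigma)

def quadratic_ip(errors, sigma=0.5):
    """Parzen-window estimate of the quadratic information potential V_2.

    Convolving two Gaussian kernels of width sigma gives one of width sigma*sqrt(2).
    The kernel width sigma is a hypothetical tuning choice.
    """
    e = np.asarray(errors, dtype=float)
    diffs = e[:, None] - e[None, :]          # all pairwise differences e_i - e_j
    return float(gaussian_kernel(diffs, sigma * np.sqrt(2.0)).mean())

def quadratic_renyi_entropy(errors, sigma=0.5):
    """Order-2 Renyi entropy H_2 = -log V_2, so minimizing H_2 means maximizing V_2."""
    return float(-np.log(quadratic_ip(errors, sigma)))

rng = np.random.default_rng(0)
narrow = rng.normal(0.0, 0.1, 500)           # concentrated (hypothetical) tracking errors
wide = rng.normal(0.0, 1.0, 500)             # widely spread (hypothetical) tracking errors
print(quadratic_ip(narrow) > quadratic_ip(wide))  # True
```

Shifting `narrow` by a constant leaves `quadratic_ip` unchanged, so the IP alone cannot pull the error distribution toward zero.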

A further generalized entropy, regarded as the most general definition of entropy [14], has also been employed in stochastic control systems [15,16]. Ren et al. [15] proposed a new tracking control algorithm for a class of networked control systems (NCSs) with non-Gaussian random disturbances and delays. Zhang et al. [16] presented an improved single neuron controller for multivariable stochastic systems with non-Gaussianities and unmodeled dynamics by minimizing this entropy of the tracking errors.

The Shannon and Renyi entropies of a continuous random variable are both defined in terms of its probability density function, which leads to several drawbacks: (1) the definition is ill-suited to cases in which the PDF does not exist; (2) the entropy value can be negative; and (3) in general, the entropy cannot be approximated directly from the empirical distribution. In addition, the IP criterion is conservative: it should be maximized to achieve smaller errors only when the Renyi order exceeds 1. Several new definitions of entropy have been proposed to solve these problems. Rao et al. [17] proposed the cumulative residual entropy (CRE), which is defined based on the cumulative distribution function. In [18], Zografos and Nadarajah proposed the survival exponential and generalized survival exponential entropies, both of which are broad entropy definitions based on the survival function and include CRE as a special case.
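Drawback (3) can be made concrete with CRE. For a scalar random variable X, CRE is ε(X) = −∫_0^∞ P(|X| > x) log P(|X| > x) dx; because the empirical survival function is piecewise constant, it can be plugged in directly. The sketch below is a plain plug-in estimator written for illustration (the function name is hypothetical). It is non-negative by construction, and for an exponential variable with mean 2 the exact CRE is 2, so the estimate should land close to that value.

```python
import numpy as np

def empirical_cre(samples):
    """Plug-in CRE estimate from the empirical survival function of |X| (illustrative helper)."""
    x = np.sort(np.abs(np.asarray(samples, dtype=float)))
    n = x.size
    surv = (n - np.arange(n)) / n                   # P(|X| > x) on [x_(i-1), x_(i)), i = 1..N
    spacings = np.diff(np.concatenate(([0.0], x)))  # interval lengths x_(i) - x_(i-1), x_(0) = 0
    return float(-np.sum(surv * np.log(surv) * spacings))

rng = np.random.default_rng(0)
samples = rng.exponential(scale=2.0, size=20000)    # exponential with mean 2
print(empirical_cre(samples))                       # close to 2.0, the exact CRE
```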

In this paper, the survival information potential (SIP) proposed by Chen et al. [19] is used to construct the performance index of stochastic control systems. Unlike the MEE criterion, which requires a bias term to be added to the tracking error [6,9,10,13], the SIP needs no such term because of its shift-variance property. In previous work on MEE control [9,10,11,12,13,14,15,16], the control input was treated as a deterministic variable for simplicity, even though it is random in practice; this simplification is unsuitable and conservative. Therefore, this paper proposes a performance index that integrates the SIPs of both the control input and the tracking error.
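A minimal sketch of an empirical SIP, assuming the scalar form S_α(e) = ∫_0^∞ [P(|e| > x)]^α dx (our reading of the definition in [19], stated here as an assumption). Plugging in the empirical survival function gives Ŝ_α = Σ_{i=1}^N ((N−i+1)/N)^α (|e|_(i) − |e|_(i−1)) with sorted |e| and |e|_(0) = 0; for α = 1 it reduces to the mean absolute error. Because it is built from |e|, shifting the errors away from zero increases it, which is the shift-variance property mentioned above. The weighted sum `combined_index` and its weight `lam` are hypothetical and only indicate how SIPs of the tracking error and the control input might be combined; they are not the paper's performance function Equation (9).

```python
import numpy as np

def survival_ip(samples, alpha=2.0):
    """Empirical SIP: integral of the empirical survival function of |X| raised to alpha."""
    x = np.sort(np.abs(np.asarray(samples, dtype=float)))
    n = x.size
    surv = (n - np.arange(n)) / n                   # (N - i + 1) / N for i = 1..N
    spacings = np.diff(np.concatenate(([0.0], x)))  # x_(i) - x_(i-1), with x_(0) = 0
    return float(np.sum(surv**alpha * spacings))

def combined_index(errors, inputs, alpha=2.0, lam=0.1):
    """HYPOTHETICAL weighted sum of SIPs; not the paper's Equation (9)."""
    return survival_ip(errors, alpha) + lam * survival_ip(inputs, alpha)

rng = np.random.default_rng(0)
errors = rng.normal(0.0, 0.1, 1000)    # hypothetical zero-mean tracking errors
inputs = rng.normal(0.0, 0.5, 1000)    # hypothetical control-input samples
print(survival_ip(errors), survival_ip(errors + 3.0))  # the shifted errors give a much larger SIP
print(combined_index(errors, inputs))
```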

Predictive control was introduced as an industrial approach to process control in the 1970s, and today it is the most frequently applied advanced process control method in industry. Stochastic distribution control algorithms have been extended with such advanced control algorithms to improve control performance. So far, two main classes of algorithms have been developed for systems affected by stochastic noise and subject to probabilistic state and/or input constraints. (1) The randomized, or scenario-based, approach [20,21,22]: a very general methodology that can handle linear or nonlinear systems affected by noise with general distributions, possibly with unbounded and nonconvex support. (2) The probabilistic approximation approach [23,24,25,26,27,28]: it reformulates probabilistic or expectation constraints point-wise in deterministic terms so that they can be included in the MPC formulation. Reference [29] gave an overview of the main developments in stochastic model predictive control (SMPC) over the past decade, describing different SMPC algorithms and the key theoretical challenges without undue mathematical complexity. However, the above results were obtained under the assumption that the system variables are Gaussian, and only the mean and variance were considered. As discussed above, the PDF contains the complete characteristics of a random variable. From this perspective, SMPC approaches for a class of nonlinear systems with unbounded stochastic uncertainties were proposed in [30,31]. In [30], the Fokker–Planck equation was used to describe the dynamic evolution of the state PDFs, and closed-loop stability was ensured by a stability constraint expressed through a stochastic control Lyapunov function. In [31], polynomial chaos expansions were used to propagate probabilistic parametric uncertainties through the system model. Within the SDC framework, SMPC has been used to control the molecular weight distribution (MWD) in the presence of non-Gaussian noise [32,33]. As mentioned previously, formulating non-Gaussian SMPC within a statistical information framework may be an alternative and effective approach.
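To make the two classes of methods concrete, the sketch below uses a hypothetical scalar chance constraint P(μ + w ≤ b) ≥ 1 − ε, where μ is the nominal predicted output and w is zero-mean, unit-variance noise. The probabilistic approximation approach replaces the constraint with the deterministic condition μ ≤ b − σΦ⁻¹(1 − ε), which is exact only for Gaussian w. A scenario-based approach instead enforces μ + w_i ≤ b for all N sampled w_i, with N chosen so that (1 − ε)^N ≤ β, the scenario bound for a single decision variable. With skewed (shifted exponential) noise, the Gaussian reformulation violates the constraint more often than ε, which illustrates the limitation of Gaussian assumptions noted above. All numbers are illustrative.

```python
import numpy as np
from statistics import NormalDist

def gaussian_tightened_bound(b, sigma, eps):
    """Largest mu with mu + sigma * z_(1-eps) <= b (deterministic reformulation, Gaussian noise)."""
    return b - sigma * NormalDist().inv_cdf(1.0 - eps)

def scenario_sample_size(eps, beta):
    """Smallest N with (1 - eps)^N <= beta (scenario bound for one decision variable)."""
    return int(np.ceil(np.log(beta) / np.log(1.0 - eps)))

def scenario_bound(b, noise_samples):
    """Largest mu satisfying mu + w_i <= b for every sampled scenario w_i."""
    return b - np.max(noise_samples)

rng = np.random.default_rng(0)

def skewed_noise(size):
    """Hypothetical non-Gaussian noise: shifted exponential, zero mean and unit variance."""
    return rng.exponential(1.0, size) - 1.0

b, eps, beta = 0.0, 0.05, 0.01
mu_gauss = gaussian_tightened_bound(b, 1.0, eps)
N = scenario_sample_size(eps, beta)
mu_scen = scenario_bound(b, skewed_noise(N))

test_noise = skewed_noise(200_000)
viol_gauss = np.mean(mu_gauss + test_noise > b)   # about 0.07, i.e. above eps for this noise
viol_scen = np.mean(mu_scen + test_noise > b)     # at most eps with confidence 1 - beta
print(N, viol_gauss, viol_scen)
```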

Based on preliminary work [19,34,35,36,37], this paper proposes a single neuron stochastic predictive control method for nonlinear stochastic discrete systems affected by non-Gaussian noise. The rest of the paper is organized as follows. In Section 2, the models of the nonlinear stochastic system and the single neuron controller are first presented. Then a new SIP-based predictive criterion, which accounts for the randomness of both the tracking errors and the control input, is formulated. Based on the established models and the new criterion, the single neuron stochastic predictive control (SNSPC) algorithm is derived, and the online computation procedure is summarized. In Section 3, the energy conservation principle is used to analyze the convergence of the proposed control algorithm. In Section 4, a numerical simulation example illustrates the effectiveness of the proposed control strategy. The last section concludes the paper.

3. Mean-Square Stability

In order to analyze the convergence of the proposed control algorithm, the nonlinear stochastic system Equation (1) is first linearized around its equilibrium, and the linearized model is then written compactly in state-space form.
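Remark 5 relies on the fact that the nonlinear system behaves like its linearization near an equilibrium. Since Equation (1) is not reproduced here, the sketch assumes a generic discrete-time model x(k+1) = f(x(k), u(k)) and a hypothetical two-state plant `plant`, and computes the Jacobians A = ∂f/∂x and B = ∂f/∂u at an equilibrium by central differences. An output matrix could be obtained the same way from the output map.

```python
import numpy as np

def linearize(f, x0, u0, h=1e-6):
    """Central-difference Jacobians A = df/dx and B = df/du at the point (x0, u0)."""
    x0 = np.asarray(x0, dtype=float)
    u0 = np.asarray(u0, dtype=float)
    n, m = x0.size, u0.size
    A = np.zeros((n, n))
    B = np.zeros((n, m))
    for i in range(n):
        dx = np.zeros(n)
        dx[i] = h
        A[:, i] = (f(x0 + dx, u0) - f(x0 - dx, u0)) / (2.0 * h)
    for j in range(m):
        du = np.zeros(m)
        du[j] = h
        B[:, j] = (f(x0, u0 + du) - f(x0, u0 - du)) / (2.0 * h)
    return A, B

def plant(x, u):
    """Hypothetical nonlinear plant x(k+1) = f(x(k), u(k)); NOT Equation (1) of the paper."""
    return np.array([0.5 * x[0] + 0.2 * np.sin(x[1]),
                     0.3 * x[1] + u[0] + u[0] ** 2])

x_eq, u_eq = np.zeros(2), np.zeros(1)   # an equilibrium: plant(x_eq, u_eq) equals x_eq
A, B = linearize(plant, x_eq, u_eq)
print(A)   # approximately [[0.5, 0.2], [0.0, 0.3]]
print(B)   # approximately [[0.0], [1.0]]
```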

Based on the state-space representation, an extended state-space model can be formulated as in [36], with matrices obtained from Equation (14). Its composite noise term includes the external noises and the model-mismatch randomness, and it may be non-Gaussian.
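For orientation, a common way to build a multistep prediction model from a linear state-space model x(k+1) = A x(k) + B u(k), y(k) = C x(k) is to stack the predictions over a prediction horizon P as Y = F x(k) + G U, where U holds the M future inputs (control horizon M) and the input is held constant after M steps. The sketch below is this textbook construction for a single-input single-output model with hypothetical matrices; it omits the composite noise term and may differ from the extended model of [36] (for example, if that model is written in input increments).

```python
import numpy as np

def prediction_matrices(A, B, C, P, M):
    """Stack y(k+1..k+P) = F x(k) + G [u(k), ..., u(k+M-1)] for a SISO model (u held after M steps)."""
    n = A.shape[0]
    powers = [np.linalg.matrix_power(A, k) for k in range(P + 1)]
    F = np.zeros((P, n))
    G = np.zeros((P, M))
    for i in range(1, P + 1):
        F[i - 1] = (C @ powers[i]).ravel()          # free response C A^i x(k)
        for j in range(i):                          # u(k+j) reaches y(k+i) through C A^(i-1-j) B
            G[i - 1, min(j, M - 1)] += (C @ powers[i - 1 - j] @ B).item()
    return F, G

A = np.array([[0.9, 0.1], [0.0, 0.8]])   # hypothetical linearized model
B = np.array([[0.0], [1.0]])
C = np.array([[1.0, 0.0]])
F, G = prediction_matrices(A, B, C, P=4, M=2)
print(F)
print(G)
```

Subtracting a set-point trajectory from F x(k) + G U gives the predicted tracking-error vector over the horizon.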

From Equation (2), Equation (15) can be reformulated in terms of the input matrix of the single neuron network, which consists of tracking errors.
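Equation (2) is not reproduced here, so the sketch below uses a widely used single-neuron structure as an assumption: an incremental law u(k) = u(k−1) + K Σ_i w_i x_i(k) with PID-like inputs built from the tracking errors and normalized weights. The gain `gain`, the three inputs, and the normalization are hypothetical choices. In the proposed SNSPC the weights would be updated by the SIP-based rule derived in Section 2, which is not implemented here.

```python
import numpy as np

def neuron_inputs(e_k, e_k1, e_k2):
    """Hypothetical PID-like neuron inputs from the current and two past tracking errors."""
    return np.array([e_k - e_k1,               # proportional-like: error increment
                     e_k,                      # integral-like: current error
                     e_k - 2.0 * e_k1 + e_k2]) # derivative-like: second difference

def single_neuron_control(u_prev, weights, x, gain=1.0):
    """Incremental single-neuron law u(k) = u(k-1) + K * sum_i w_i x_i with normalized weights."""
    w = np.asarray(weights, dtype=float)
    w_norm = w / np.sum(np.abs(w))             # weight normalization (hypothetical choice)
    return u_prev + gain * float(w_norm @ np.asarray(x, dtype=float))

x = neuron_inputs(0.3, 0.4, 0.2)               # hypothetical error history e(k), e(k-1), e(k-2)
u = single_neuron_control(0.0, [0.2, 0.5, 0.1], x, gain=0.8)
print(u)
```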

Thus, the difference between the future predictions and the set-point trajectory can be written in terms of the change of the set-point at each time point and a new random vector, and Equation (17) can be rewritten accordingly.

Now the a priori error vector and the a posteriori error vector are defined; the two vectors are directly related. By incorporating Equation (11), a relation involving a symmetric matrix is obtained. Assuming this matrix is invertible (i.e., nonsingular), Equation (23) follows.

Both sides of Equation (23) should have the same energy. Expanding this energy equality, simplifying, adding the same term to both sides, and substituting the a priori and a posteriori error vectors gives the energy conservation relation, Equation (27). To study the mean-square behavior of the algorithm, one takes expectations of both sides of (27), which gives Equation (28). Substituting the relation between the a priori and a posteriori errors into Equation (28) then yields Equation (29).
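The generic form of this energy argument can be written out as follows. The notation is assumed for illustration and need not match the paper's symbols or sign conventions: w̃_k is the weight-error vector, H_k the neuron input matrix with R_k = H_kᵀH_k invertible, e_{a,k} = H_kᵀw̃_k and e_{p,k} = H_kᵀw̃_{k+1} are the a priori and a posteriori errors, and the weights are updated so that w̃_{k+1} = w̃_k − μH_k g_k for some error-dependent vector g_k.

```latex
\begin{aligned}
\tilde{w}_{k+1} &= \tilde{w}_k - \mu H_k g_k,
  \qquad e_{p,k} = e_{a,k} - \mu R_k g_k, \\
\tilde{w}_{k+1} + H_k R_k^{-1} e_{a,k} &= \tilde{w}_k + H_k R_k^{-1} e_{p,k}, \\
\|\tilde{w}_{k+1}\|^2 + e_{a,k}^{\top} R_k^{-1} e_{a,k}
  &= \|\tilde{w}_k\|^2 + e_{p,k}^{\top} R_k^{-1} e_{p,k}, \\
\mathbb{E}\|\tilde{w}_{k+1}\|^2
  &= \mathbb{E}\|\tilde{w}_k\|^2 - 2\mu\,\mathbb{E}\big[e_{a,k}^{\top} g_k\big]
     + \mu^2\,\mathbb{E}\big[g_k^{\top} R_k g_k\big].
\end{aligned}
```

The cross terms cancel in the third line because R_k is symmetric and H_kᵀH_k = R_k; the last line follows by substituting e_{p,k} = e_{a,k} − μR_k g_k and taking expectations, leaving two expectations to be evaluated.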

In order to evaluate the remaining expectations, the following assumptions [37] are used in this paper:

Assumption 1. The noise is independent and identically distributed (i.i.d.), and independent of the input.

Assumption 2. The a priori error vector is jointly Gaussian distributed.

Assumption 3. The input vectors are zero-mean independent, identically distributed (i.i.d.).

Assumption 4. , is independent of .

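Based on the above assumptions, the expectation can be written as an integral involving the probability density function of the relevant random variable. Therefore, it can be expressed as a function of the statistics of the a priori error, and defining an auxiliary function yields the corresponding expression.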

The second expectation is evaluated in a similar way. By substituting Equations (32) and (35) into Equation (29), a recursion describing the mean-square behavior of the algorithm is obtained.

From the resulting equation, the convergence condition for the sequence is obtained.
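A quick numerical check of the generic energy argument, under assumed notation (not the paper's symbols): with weight error w̃, input matrix H, R = HᵀH invertible, a priori error e_a = Hᵀw̃, update w̃⁺ = w̃ − μHg, and a posteriori error e_p = Hᵀw̃⁺, the identity ‖w̃⁺‖² + e_aᵀR⁻¹e_a = ‖w̃‖² + e_pᵀR⁻¹e_p holds for any g, and the mean-square weight error decreases when 0 < μ < 2E[e_aᵀg]/E[gᵀRg]. The sizes, the random data, and the choice g = e_a plus Laplace noise are hypothetical, so this does not reproduce the paper's SIP-specific condition.

```python
import numpy as np

rng = np.random.default_rng(1)
n_w, P, N = 5, 3, 20000            # hypothetical: weight count, horizon, Monte Carlo samples
H = rng.standard_normal((n_w, P))  # hypothetical input matrix; R = H^T H is P x P
R = H.T @ H
R_inv = np.linalg.inv(R)

def quad(X, M):
    """Row-wise quadratic form x^T M x for each row x of X."""
    return np.einsum("ni,ij,nj->n", X, M, X)

W = rng.standard_normal((N, n_w))          # rows: samples of the weight error w~
E_a = W @ H                                # rows: a priori errors e_a = H^T w~
G = E_a + 0.1 * rng.laplace(size=(N, P))   # hypothetical g: e_a plus non-Gaussian noise

a = np.mean(np.sum(E_a * G, axis=1))       # estimate of E[e_a^T g]
b = np.mean(quad(G, R))                    # estimate of E[g^T R g]
mu_max = 2.0 * a / b                       # mean-square energy decreases for 0 < mu < mu_max

mu = 0.5 * mu_max
W_next = W - mu * G @ H.T                  # rows: w~ - mu H g
E_p = W_next @ H                           # rows: a posteriori errors e_p = H^T w~_next

lhs = np.sum(W_next**2, axis=1) + quad(E_a, R_inv)
rhs = np.sum(W**2, axis=1) + quad(E_p, R_inv)
delta = np.mean(np.sum(W_next**2, axis=1) - np.sum(W**2, axis=1))
print(np.allclose(lhs, rhs), delta < 0)    # True True
```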

Remark 5. Since the nonlinear system can be approximated by a linear system near the equilibrium, the convergence of the nonlinear system Equation (1) is studied here through its linearization.

4. Simulation Results

In order to illustrate the effectiveness of the presented SNSPC algorithm, consider the nonlinear stochastic system Equation (38), in which the PDFs of the random variables are specified at each time point.

In this example, the set point of the system Equation (38) is set to be . The prediction horizon and control horizon, , are chosen. The sampling period is . The initial control input () and the state variable () are selected. The step size in Equation (12) is . In the performance function Equation (9), we choose , and .

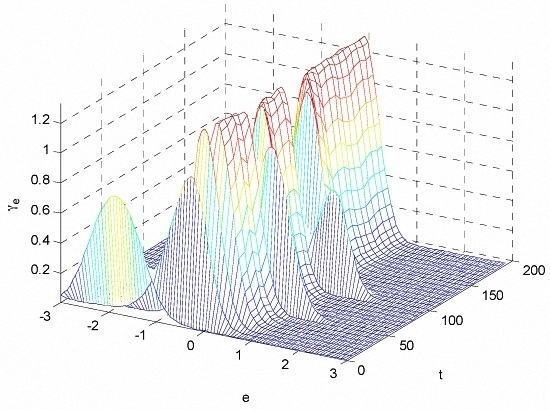

In this simulation, the control inputs obtained from the SIP and entropy criteria are each applied to the system Equation (38) at each time point, and comparative results are given to illustrate the advantages of the proposed SIP-based stochastic predictive tracking control algorithm. Figure 1 shows that the controller based on the SIP criterion performs better: the output fluctuates less and responds faster. Figure 2 presents the trend of the performance index Equation (9); the index decreases overall as time progresses, although some small variations can be observed. The variances of the single neuron weights are presented in Figure 3.

Figure 4 and Figure 5 show the PDFs of the output tracking errors under the entropy-based controller, while Figure 6 and Figure 7 show the PDFs of the tracking errors under the proposed SNSPC method. Compared with Figure 4, the tracking error PDF in Figure 6 becomes narrower and sharper over the control process, which indicates that the proposed SNSPC system achieves smaller uncertainty in the tracking error distribution. In addition, the peak of the tracking error PDF is located near zero under the proposed method. As Figure 8 shows, the final PDF of the tracking error under the proposed control law is sharper and narrower than that under the entropy-based control law.

5. Conclusions

In the past, several approaches, such as PDF-shaping control, minimum entropy control, and generalized minimum entropy control, have been proposed to solve the control problem for non-Gaussian stochastic systems. These strategies can achieve good performance, but two main issues remain: (1) the entropy value can be negative and is shift-invariant, so more suitable statistical measures for constructing the objective function are needed; (2) the control input of a stochastic system is also a random variable, and its randomness should be taken into account.

In this work, a convergent SNSPC algorithm is presented for controlled systems with non-Gaussian disturbances. The proposed SNSPC is obtained by minimizing a SIP-based predictive criterion that accounts for the randomness of the control input as well as that of the tracking error. Compared with entropy or IP, SIP as a randomness measure has several advantages, such as validity for a wide range of distributions, robustness, and computational simplicity. Moreover, a multistep predictive control strategy, rather than a single-step one, is developed in this paper because it is more robust to the disturbances and nonlinearities in the system. The convergence condition of the proposed SNSPC is also derived from the energy conservation principle. The proposed control strategy is applied to a nonlinear, non-Gaussian stochastic numerical example, and the simulation results confirm that the new SIP-based predictive control method achieves good tracking performance.

Compared with previous work in the field of stochastic distribution control, the contributions of this paper are threefold: (1) the randomness of the control input is considered for the first time; (2) instead of an instantaneous performance index, a novel SIP-based cumulative criterion is formulated; (3) a single neuron multistep predictive control algorithm is obtained, which performs better than the single-step control method. However, most practical industrial processes have multiple inputs and multiple outputs, and many constraints must be considered when the controller is designed. Future research should focus on such problems.