Monocular-Vision-Based Autonomous Hovering for a Miniature Flying Ball

Abstract

1. Introduction

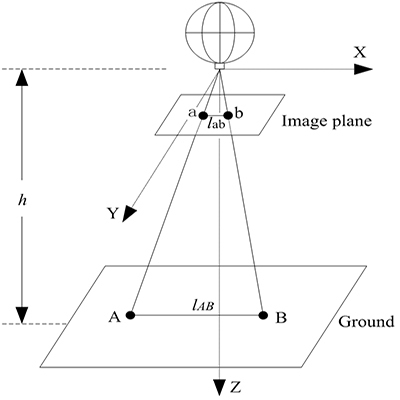

2. Vision-Based Position Estimation

2.1. Monocular Vision Analysis

2.2. Measurement from Images

2.2.1. Feature Extraction

2.2.2. Feature Matching

2.2.3. Distance of Frames

2.3. Kalman Filter

2.4. Controller Design

3. Materials

3.1. Hardware Description

3.1.1. MFB Aircraft

3.1.2. Ground Station

3.2. Software Architecture

4. Experiments

4.1. Evaluating the Solution of the System

4.2. Static Measurement Precision of the Vision System of MFB

4.3. Dynamic Hovering Precision of MFB

4.4. Testing the Robustness of the System

4.5. Discussion

5. Conclusions and Future Work

Author Contributions

Conflicts of Interest

© 2015 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/4.0/).

Lin, J.; Han, B.; Luo, Q. Monocular-Vision-Based Autonomous Hovering for a Miniature Flying Ball. Sensors 2015, 15, 13270–13287. https://doi.org/10.3390/s150613270
