1. Introduction

Rice is recognized as the most significant crop species worldwide, and the current annual production of rice grain is 590 million tons [1]. High yield has always been one of the most important objectives of rice breeding and cultivation. Rice breeding requires measuring the yield of a large number of candidate samples in different environments, so as to provide a basis for breeding high-yield, high-quality, stress-resistant rice varieties. The rice panicle is the organ on which rice grains grow and is directly related to final yield. It also plays an important role in pest detection, nutritional diagnosis, and growth-period detection [2]. Therefore, the accurate recognition of rice panicles is a key step to obtain panicle characteristics and to automate the detection of rice phenotypes. The appearance of panicles (shape, color, size, texture, and posture) varies strongly among rice varieties and growth stages. The edge of the rice panicle is very irregular, and the panicle color blends with that of the leaves. The complex field environment, mutual occlusion between different rice organs, and uneven and constantly changing natural solar illumination severely hinder efforts to automatically recognize rice panicles [3]. Early work on recognizing plant organs from images focused on indoor experiments and used ground-based vehicles to collect data. An example of such work is Phadikar et al., who describe an image-based system to detect rice disease [4]. They used a digital camera to image the infected plants and processed the images by using image-growing and segmentation techniques. They then used a neural network to separate infected leaves from normal leaves and thereby determined the number of diseased plants. In other work, Huang et al. measured the panicle length by using a dual-camera system equipped with a long-focus lens and a short-focus lens [5]. After co-registering and resampling a series of images, the panicle length was calculated as the sum of the distances between adjacent points on the path. More recently, Fernandez-Gallego et al. proposed a novel ear-counting algorithm to measure ear density under field conditions [6]. The algorithm comprises three steps: (i) remove low- and high-frequency elements appearing in an image by using a Laplacian frequency filter, (ii) reduce noise by using a median filter, and (iii) segment the images. After these treatments, they could accurately and efficiently deduce the number of ears. However, these color-based or machine-learning-based methods are susceptible to illumination and other factors [7]. They are thus only suitable for a specific growth period and are very sensitive to environmental noise.

In recent years, deep learning (DL) has been adopted as the method of choice for many image analysis applications, including a number of plant-phenotyping problems [8]. DL networks avoid the need for hand-engineered features by automatically learning feature representations that discriminately capture the data distribution. The hidden layers of the network extract features, and the feature information is reflected in the weights of the hidden-layer connections. The parallel structure of neural networks makes them insensitive to incomplete input information or defective features [9]. In one such study, Pound et al. recognized the characteristic parts of wheat by using a convolutional neural network (CNN) technique. Xiong et al. introduced a panicle-SEG algorithm based on super-pixel segmentation and a CNN [10]. The method involves several steps, including super-pixel region generation, convolutional neural network classification, and entropy rate super-pixel optimization. After training the model on 684 manually labeled images, the average precision of panicle-SEG reached 82%, which shows its reliability. In addition, Rahnemoonfar et al. used DL to accurately count the number of fruit in an unstructured environment. Their research included a fully convolutional network to extract candidate regions and a counting algorithm based on a second convolutional network to estimate the number of fruit in each region [11]. Most research that incorporated DL architectures took advantage of transfer learning, which leverages existing knowledge of a related task or domain to increase the learning efficiency of the problem under study by fine-tuning pre-trained models [12]. The main drawbacks of such models are (1) limitations in image data acquisition and (2) the requirement for large datasets.

Monitoring rice panicles by using images acquired from rotor light unmanned aerial vehicles (RL-UAVs) in the field is complicated by the following difficulties:

The shape, color, size, texture, and posture of panicles of different varieties and growth stages differ significantly. The panicle edge is very irregular, and the panicle color is similar to that of leaves.

The natural environment is complex and includes mutual occlusion of different rice organs and varying soil reflectance and light intensity.

Restrictions of RL-UAV flight altitude and sensor resolution affect the accuracy of automated identification and manual labeling, which can lead to false positives and false negatives.

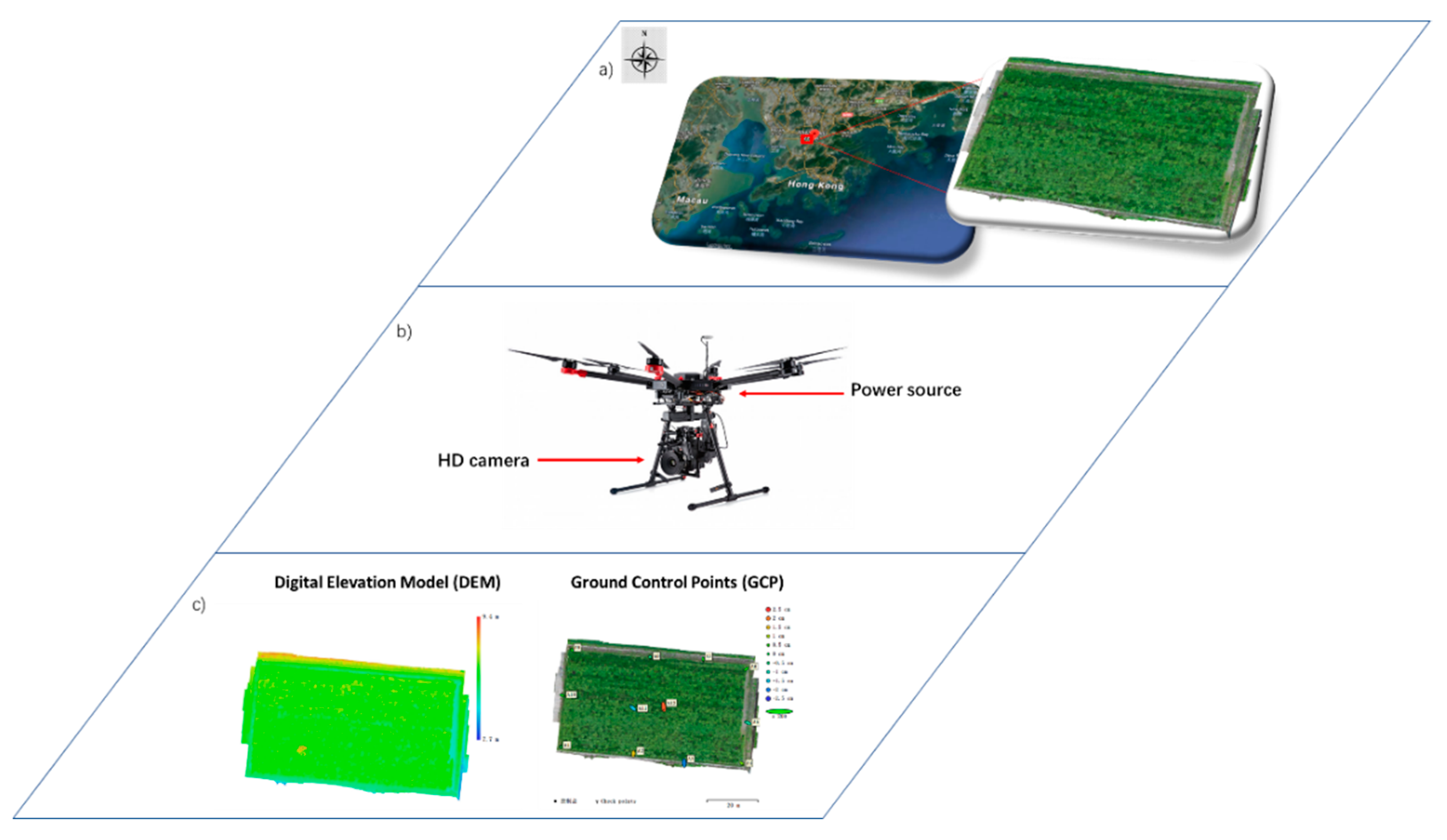

This paper proposes a method to detect rice panicles based on statistical treatment of digital images acquired from a UAV platform. The aim of this study is to train and apply a CNN-based model called the ‘improved region-based fully convolutional network’ (R-FCN), which can accurately count rice panicles in the field. The training process produces a series of bounding boxes that contain detected panicles and compares the number of panicles in each box with manual labeling results to determine the effectiveness of the proposed method. Moreover, we dynamically monitor the number of panicles of different rice varieties at different growth stages and analyze the error cases and limitations of the proposed DL-based model. The proposed method provides automated panicle recognition with accuracies above 90% in real time, which is superior to the results of several traditional training models. The improved R-FCN method offers the advantage of object recognition from image sets, which allows it to perform better than the other methods.

The outline of this article is as follows: The Materials and Methods section describes the study area and the image-acquisition system, and presents the preprocessing of the image dataset. Several DL models are then introduced, after which we describe in detail the proposed improved R-FCN method to monitor rice panicles. The Results and Discussion section analyzes the accuracy of each model by using four evaluation indexes and analyzes the number of panicles at different growth stages. Finally, the last section summarizes the work presented in this paper and presents the main conclusions.

3. Results and Discussion

The experiment was run on the Ubuntu 16.04 operating system (OMEN by HP Laptop with a 4-core i5 CPU, 2.3 GHz per CPU core, 8 GB of memory, and an NVIDIA GTX 960M video card). Using the Caffe deep-learning framework, we train and test the target-recognition network model in Python. We use the stochastic gradient descent method to train the network in a joint end-to-end manner. A model pre-trained on ImageNet is used to initialize the network parameters, and the validation interval is set to 1000 (i.e., the accuracy of the model is tested on the validation set once every 1000 training iterations).

3.1. Performance

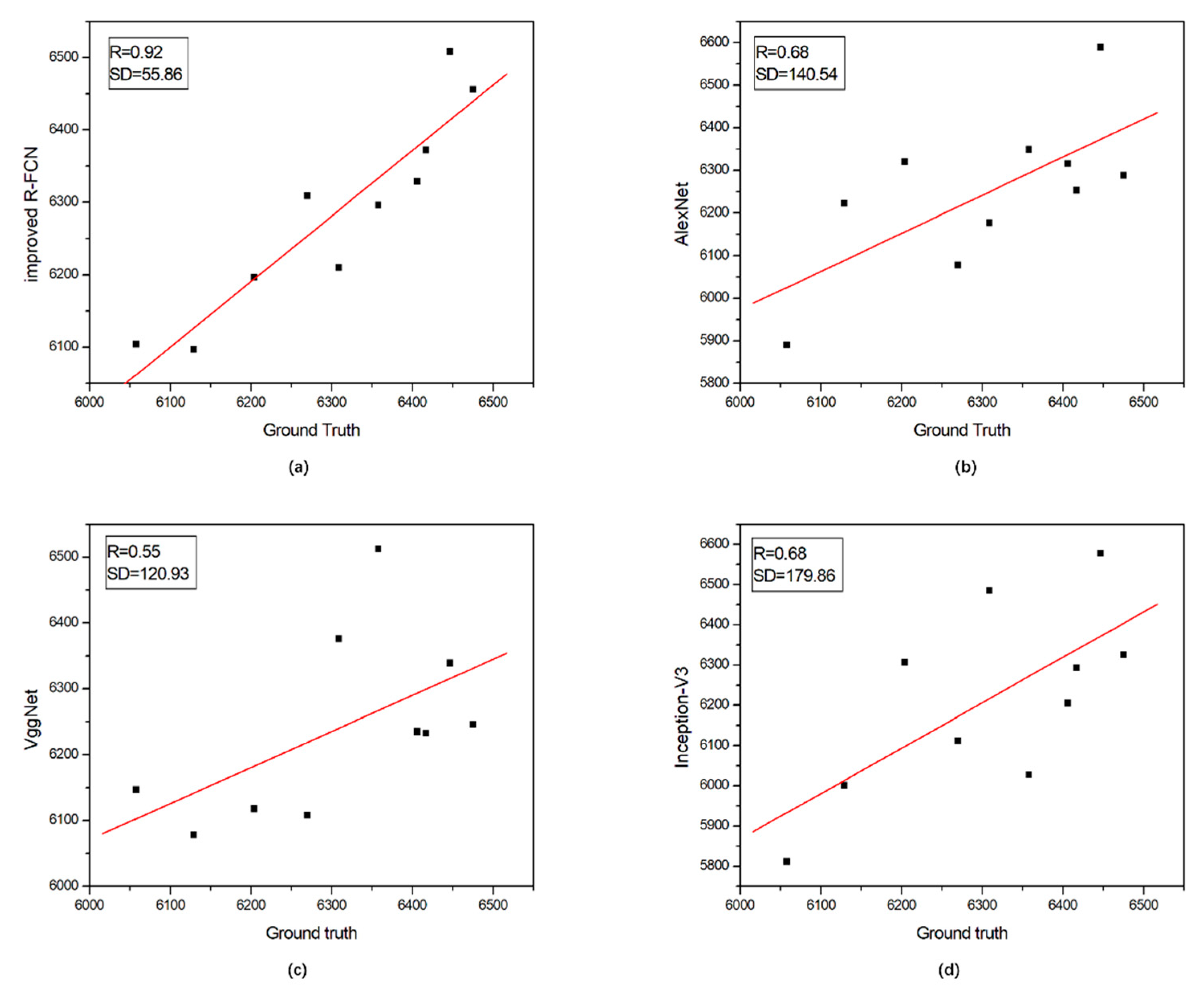

After processing the images in the testing set, each of the four models above returned the locations of the detected panicles and their total number; see Figure 3 (10 randomly selected plots). For each combination of proportions, Table 4 provides the following statistics: mean precision, mean recall, F-measure, and mIOU. Across all our experimental configurations, the overall precision obtained on the dataset varied from 0.592 (for 20–80 AlexNet) to 0.897 (for 80–20 improved R-FCN), which shows the strong potential of DL technology for such recognition problems. Specifically, AlexNet has relatively low precision (mean value 0.651); VggNet and Inception-V3 perform better, with mean precisions of 0.802 and 0.818 and recalls of 0.814 and 0.833, respectively. Of all these methods, the improved R-FCN method gives the highest mean precision (0.868), the highest recall (0.883), and the highest F-measure (0.874). For the mIOU index, for which a higher value indicates better agreement with the labeled regions, VggNet scores lowest (0.792) whereas the improved R-FCN method performs best (0.887). To check for overfitting, the dataset was split by using different ratios of training set to testing set, including the extreme case in which only 20% of the dataset was used for training and 80% for testing. As expected, all four models perform worse as the proportion of testing data increases, but the drop in performance is not as dramatic as would be expected if the models were overfitting (Figure 3).
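The evaluation indexes named above can be illustrated with a minimal sketch. It uses the standard definitions of precision, recall, F-measure, and intersection over union (IoU) for bounding boxes; the greedy matching rule, the 0.5 IoU threshold, and the function names are assumptions made for illustration and are not taken from the paper.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_detections(detected: List[Box], labeled: List[Box],
                     iou_threshold: float = 0.5) -> Tuple[int, int, int]:
    """Greedy one-to-one matching; returns (true pos., false pos., false neg.).

    The 0.5 threshold is an assumed, commonly used value.
    """
    unmatched = list(labeled)
    tp = 0
    for det in detected:
        best = max(unmatched, key=lambda gt: iou(det, gt), default=None)
        if best is not None and iou(det, best) >= iou_threshold:
            unmatched.remove(best)  # each labeled box is matched at most once
            tp += 1
    return tp, len(detected) - tp, len(unmatched)


def precision_recall_f(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall, and F-measure (harmonic mean of the two)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = precision + recall
    f_measure = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_measure
```

A mean IoU over an image set can then be taken as the average of `iou` over the matched pairs, although the paper's exact mIOU definition may differ.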

In addition to detection accuracy, another important performance index of a target-detection algorithm is speed. Real-time detection, which is extremely important for some application scenarios, can be achieved only at high speed. The average running time (ART) is the time that a model takes to process a given image on the same hardware; the shorter the time, the faster the model. Because the image acquisition and preprocessing steps are the same for all methods, we compare only the efficiency of the different methods and report the results in Table 5.
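As a rough illustration of how an average running time could be measured, the sketch below times a detection function over a set of images and averages the per-image durations. The `detect` callable and the image objects are placeholders (assumptions); the paper does not describe its timing procedure.

```python
import time
from typing import Any, Callable, Iterable


def average_running_time(detect: Callable[[Any], Any],
                         images: Iterable[Any]) -> float:
    """Mean wall-clock time in seconds that `detect` needs per image."""
    durations = []
    for image in images:
        start = time.perf_counter()  # monotonic, high-resolution clock
        detect(image)
        durations.append(time.perf_counter() - start)
    if not durations:
        raise ValueError("at least one image is required")
    return sum(durations) / len(durations)
```

For a fair comparison between models, every model would be timed on the same images and the same hardware, as the definition of ART requires.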

Table 5 shows that the time required for panicle detection is always within 0.5 s for all tested images. This is a satisfactory result given the intended future application on miniaturized devices. The training loss and error rate recorded while the model is learning can also be used to judge the efficiency of training. We define an epoch as one full forward and backward pass of the entire training set through the network during the learning stage. Over a series of epochs, the weights of the model move closer to a suitable range, which reduces the error rate and the training loss. Figure 4 shows the relationship between the loss metric and the number of epochs. The loss decreases over successive epochs of training, and the loss and error rate remain almost unchanged after 100 epochs. To avoid overfitting, the number of epochs is fixed at 300 to achieve a relatively accurate result (Figure 4).

The accuracy of the proposed model differs substantially for changes in training images, transfer learning, and data augmentation. Here, we analyze how these factors affect the accuracy of the recognition results.

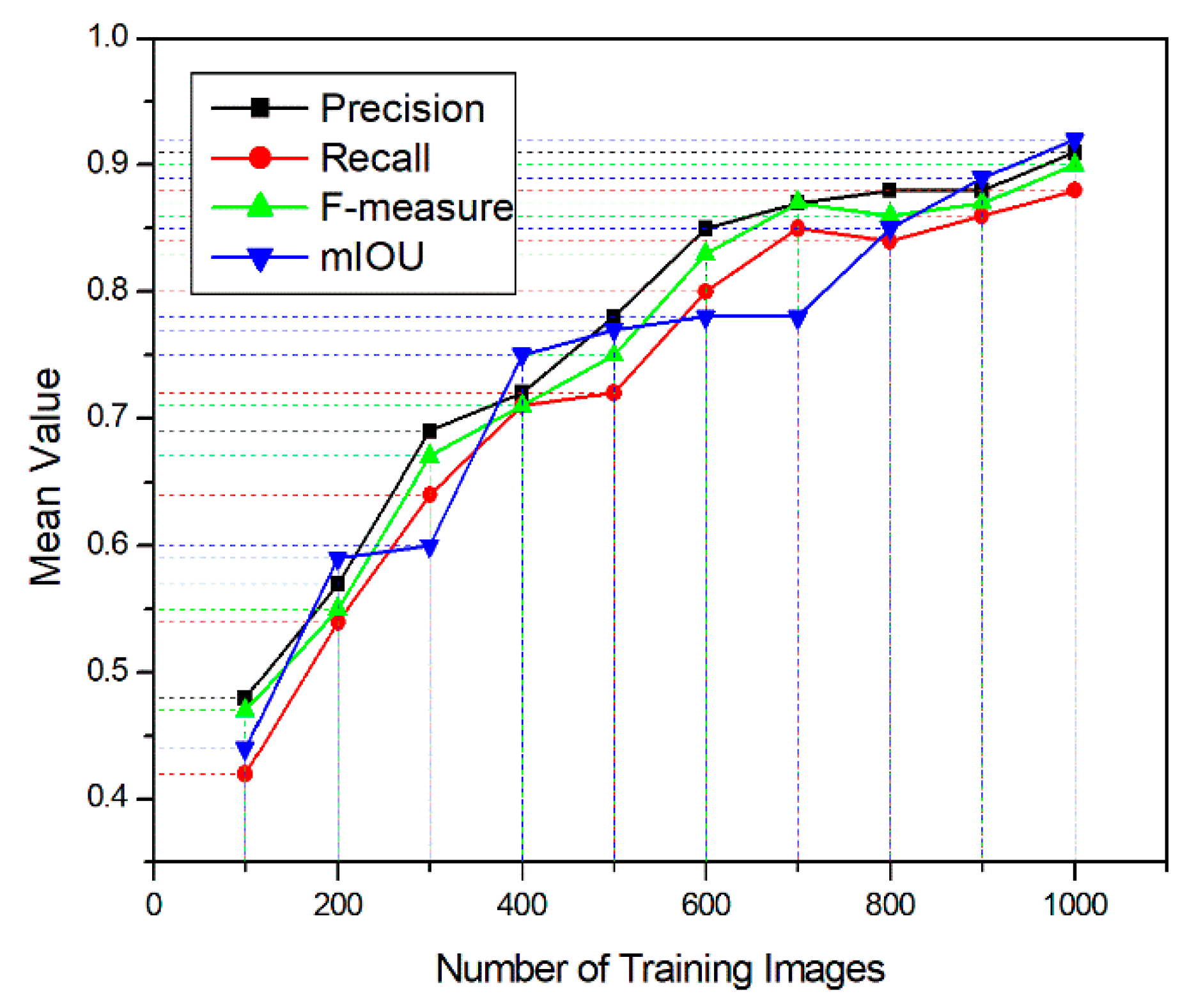

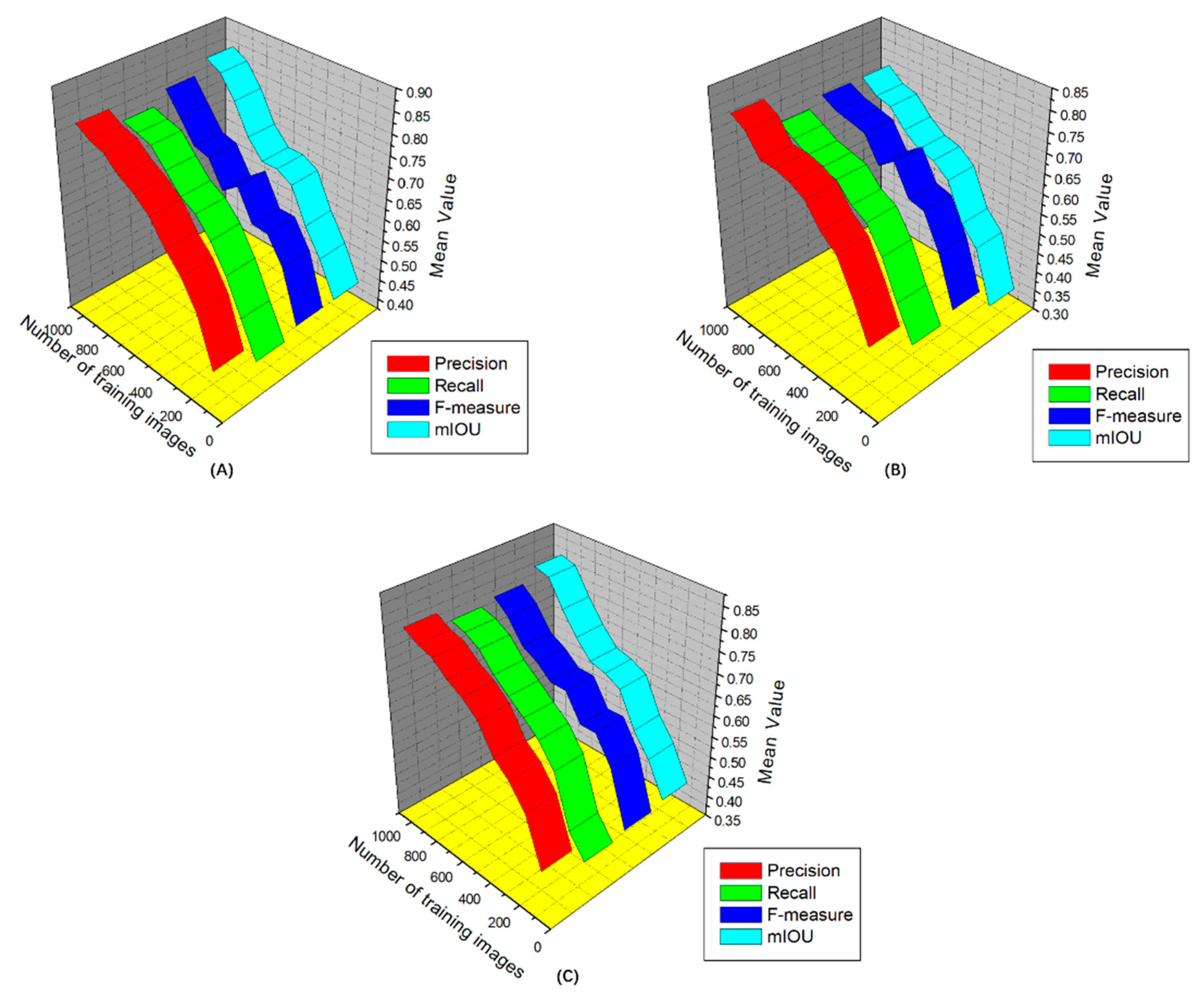

A. Number of Training Images

Figure 5 shows the relationship between the evaluation index and the number of training images. The index rises quickly with a small number of training images. For example, once the accuracy reaches a relatively high value (about 0.85), performance is close to convergence for all models, increasing by only 0.05 to 0.07 after the remaining training images are introduced (Figure 5).

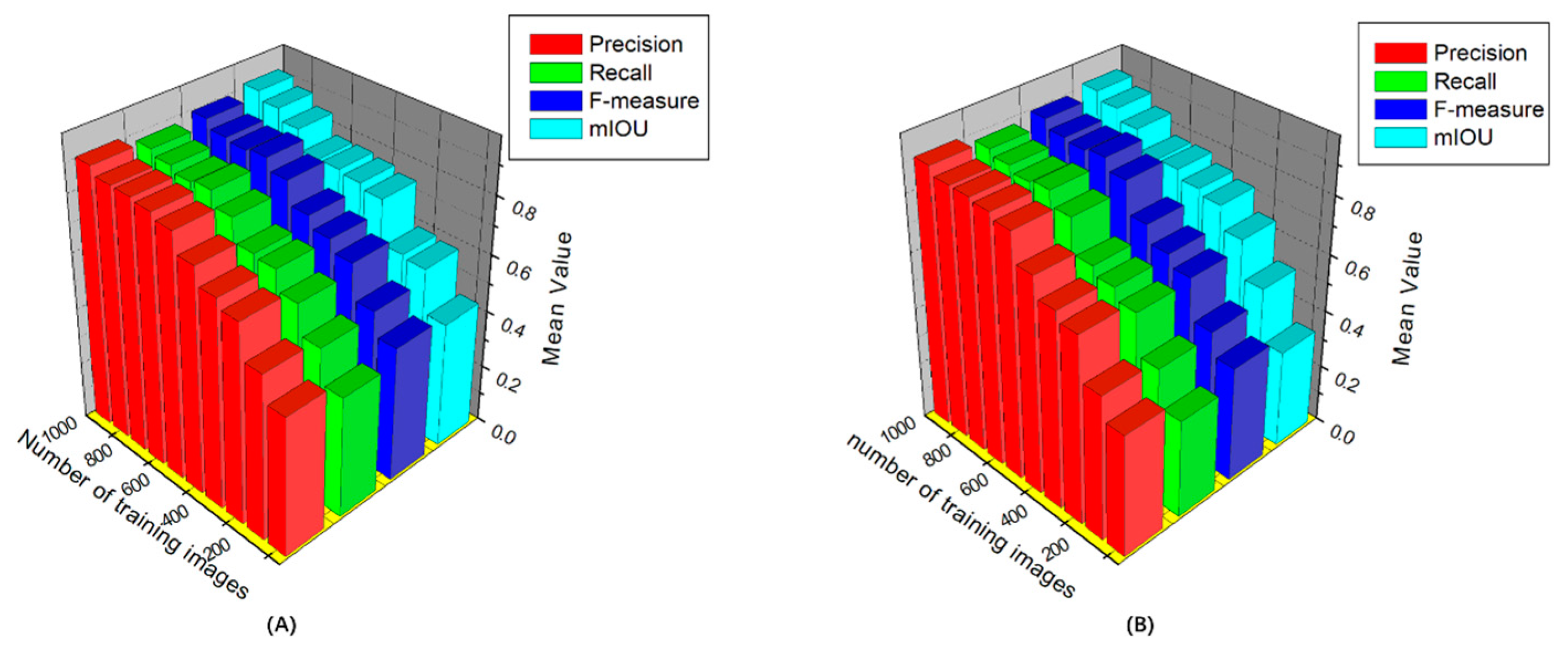

B. Transfer Learning

Transfer learning involves the migration of the original data domain and the original task to the target data domain and target task, respectively. The weight parameters are used to improve the prediction function for the target domain. In this study, most parameters in the training models were directly initialized from ImageNet. To examine the utility of transfer learning, we compare the accuracy of the proposed model by using pre-trained models from ImageNet and by training from scratch. The results reveal a certain difference in initial accuracy (from 5% to 15%). After a series of image training processes, the initial benefits diminish, with a difference of less than 1% remaining between the highest and the lowest accuracy (Figure 6).

C. Data Augmentation

The aim of data augmentation is to enlarge the number of training images, which can improve the overall learning procedure and performance. This process is important for DL training with small datasets and is especially important when training models on synthetic images and testing them on real images. Augmenting the training set helps the model generalize to real-world problems. We see from Figure 7 that, after various augmentations, the model becomes significantly more robust. Specifically, the color transformation improves the final accuracy by about 10%, and the mirror and rotation processes improve it by 5% to 7% compared with non-augmented data. These results suggest that the dataset contains more color variation than shape and scale variability (Figure 7).
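The three kinds of augmentation mentioned above (color transformation, mirror, and rotation) can be sketched on a toy grayscale image stored as nested lists. This is only an illustration: the function names, the brightness scaling used as the color transformation, and the factor 1.2 are assumptions, and the paper's actual augmentation parameters are not given in this section.

```python
from typing import List

Image = List[List[int]]  # toy grayscale image, row-major, pixel values 0-255


def mirror(image: Image) -> Image:
    """Flip the image horizontally (left-right)."""
    return [row[::-1] for row in image]


def rotate90(image: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(col) for col in zip(*image[::-1])]


def scale_brightness(image: Image, factor: float) -> Image:
    """Simple color transformation: scale pixel values and clip to 0-255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image: Image) -> List[Image]:
    """Return the original image plus three augmented variants."""
    return [image, mirror(image), rotate90(image), scale_brightness(image, 1.2)]
```

For a detection task, any bounding-box labels would have to be mirrored or rotated together with the image so that they still enclose the same panicles.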

3.2. Dynamic Monitoring of Panicle Number at Different Growth Stages

Rice yield can be decomposed into four elements: panicles per unit area, total grains per panicle, setting percentage, and grain weight. The number of panicles per unit area is the most active and basic factor among the yield components. To dynamically monitor rice panicle number during the different growth stages, the panicles per unit area of three varieties of rice grown under different fertilizer treatments (called no treatment, early treatment, and late treatment) were compared and analyzed. Two-thirds of the plots were treated at a standard rate of 139.5 kg/hm² N, 57.75 kg/hm² P₂O₅, and 120.0 kg/hm² K₂O, while the remaining plots received no treatment. For the early treatment, the fertilizers were applied on 24 June, 7 July, 21 July, and 11 August. For the late treatment, all fertilizers were applied together on 15 July. To verify that panicles are correctly detected and counted, we systematically tested 150 rice images (50 images per treatment plan). To reduce the effect of panicle overlap on counting accuracy, the thresholds of panicle confidence fraction P and overlap-area ratio I were selected through several experiments. Finally, P and I were set to 0.95 and 0.1, respectively. The reference counts of rice panicles in each image were obtained by manual counting. Because panicles in the edge region are incomplete, the automated count is greater than the manual count. To ensure the accuracy of the count and the rigor of the experiment, we applied a unified principle of rice panicle counting: five experts separately counted the panicles in each image, and the average of their counts is used as the number of panicles in the image to reduce the subjective error of manual counting. Table 6 gives both the manual counts and the automated counts.
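One plausible way to apply the two thresholds when counting is sketched below: detections with a confidence below P are discarded, and a remaining box is dropped when it overlaps an already accepted, higher-confidence box by more than I. This is an assumption-laden illustration, not the paper's procedure: the text does not define the overlap-area ratio, so intersection over union is assumed here, and the function names are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)
Detection = Tuple[Box, float]            # (bounding box, confidence score)


def overlap_ratio(a: Box, b: Box) -> float:
    """Overlap-area ratio, assumed here to be intersection over union."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def count_panicles(detections: List[Detection],
                   p_threshold: float = 0.95,
                   i_threshold: float = 0.1) -> int:
    """Count detections that pass the confidence and overlap thresholds."""
    confident = sorted((d for d in detections if d[1] >= p_threshold),
                       key=lambda d: d[1], reverse=True)
    kept: List[Box] = []
    for box, _score in confident:
        # Keep a box only if it barely overlaps every box accepted so far.
        if all(overlap_ratio(box, other) <= i_threshold for other in kept):
            kept.append(box)
    return len(kept)
```

With P = 0.95 and I = 0.1, two heavily overlapping boxes are counted as a single panicle, which is the effect on overlap that the thresholds are meant to control.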

We see from Table 6 that the untreated plants generally produced fewer panicles per square meter than the plants that had undergone either the early or late treatment. Each variety of rice shows a significant difference in panicle number between no treatment and treatment, which demonstrates that they were sensitive to the amount of nitrogen.

For nearly all rice varieties, significantly higher yields were obtained for early treatment than for late treatment. These results also show that the rice yield is quadratic in nitrogen uptake and in nitrogen application rate. As the application of nitrogen increases, plant nutrient content and nutrient uptake increase continuously. At present, few studies have concentrated on the characteristics of nitrogen uptake and utilization in rice under precise nitrogen application. In the future, the relationship between the timing and amount of nitrogen application and rice biomass needs to be studied further.

3.3. Error Case and Limitation

Target detection is an important subject in the field of computer vision. The main task is to locate the objects of interest in an image, accurately determine the category of each object, and give its bounding box. However, because traditional methods rely on hand-designed features, even the best nonlinear classifier can fail to make the right judgment. The performance of convolutional neural networks in object recognition and image classification has made tremendous progress in the past few years. Using a deep convolutional neural network architecture, we trained a model on images of the rice canopy with the goal of identifying the panicles and counting them. However, there are a number of error cases and limitations at the current stage that need to be addressed in future work.

3.3.1. Error Cases

A portion of the observed detection errors can be attributed to (1) the black-box nature of deep-learning models, whose results are almost impossible to explain from the technical and logical basis of the system, (2) a lack of high-quality labeled training samples, which leads to overfitting and poor model robustness, (3) the inability to detect overlapping panicles in an image, and (4) errors in manual labeling, which lead to errors in validation.

3.3.2. Limitations

Deep learning in the image field focuses mainly on CNNs, but the convolution operation demands a large amount of computation across the entire network, so network training takes an excessively long time. Changing the form of the convolution operation to reduce the computational complexity should be a major development direction. A second limitation is that we are currently constrained to the classification of single panicles, facing up, on a homogeneous background. Although these are straightforward conditions, a real-world application should be able to classify images of panicles as presented directly on the plant. At present, the effectiveness of CNNs is demonstrated experimentally. The training parameters are based mostly on experience and lack theoretical guidance and quantitative analysis. A more reasonable network structure should be designed for target detection, and detection efficiency should be improved by combining recurrent neural networks to achieve multi-scale and multi-category target detection.

4. Conclusions

Estimating the number of rice panicles in the field is a challenging task. However, it is an important index that plant breeders use to select high-yield varieties. Most previous work involving the analysis of panicle images was done in a controlled environment and was not suitable for practical applications. In this paper, we present a panicle detection system based on a state-of-the-art DL model applied to images acquired from a UAV platform. The model can accurately detect rice panicles in images acquired in a complex and changing outdoor environment. The analysis of transfer learning shows that transferring weights between orchards does not lead to significant performance gains over a network initialized directly from the highly generalized ImageNet features. Data augmentation techniques, such as flip and scale, improve performance, giving equivalent performance with less than half the number of training images. The model produced an average precision, recall, F-measure, and mIOU of 0.868, 0.883, 0.874, and 0.887, respectively, which indicates high detection accuracy. Also, the low ART of less than 0.5 s shows the great application potential of our model, trained on a UAV image dataset. The main contributions of this paper are (1) the design of an improved R-FCN model that not only accurately recognizes panicles, but also simplifies and accelerates network training, and (2) a model that accurately recognizes incomplete or small panicles in images and that can be used in real time. Although the results of this method are satisfactory, the model remains too large, and the training process needs to be improved. A more powerful central processing unit and graphics processing unit, such as new products of the Xeon series and NVIDIA GeForce, will greatly reduce the training time of our model. In the future, panicle detection will be further analyzed to better understand transfer learning between datasets representing a single rice variety but captured under different lighting conditions, sensor configurations, and seasons.