Investigating 2-D and 3-D Proximal Remote Sensing Techniques for Vineyard Yield Estimation

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Data Acquisition

- RGB and RGB-D imagery was acquired for the FC treatment at bunch level (n = 21; randomly selected and labelled bunches from the 31 vines) and plant level (n = 31; individual vines). This yielded four datasets: (i) RGB: bunch; (ii) RGB-D: bunch; (iii) RGB: vine; and (iv) RGB-D: vine.

- Manual manipulation of the canopy created the conditions for the four LR treatment datasets. These datasets had the same structure as the FC datasets and completed the in-situ data collection (datasets = 8). The 31 vines were then harvested.

- RGB and RGB-D imagery of the harvested bunches (n = 21), captured under laboratory conditions, made up the final two datasets. At this point, all datasets (datasets = 10) had been captured.

- Reference measurements, captured under laboratory conditions, included mass (g) and displacement (mL) for the individual bunches (n = 21) and individual vines (n = 31).
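The dataset count above can be checked with a short enumeration. This is only an illustrative sketch: the labels follow the text, while the tuple layout and the `LAB` label are assumptions made for the example.

```python
from itertools import product

# Labels follow the text; the tuple layout is an illustrative assumption.
sensors = ("RGB", "RGB-D")
scales = ("bunch", "vine")
treatments = ("FC", "LR")

# In-situ datasets: every sensor/scale combination under each canopy treatment.
in_situ = list(product(treatments, sensors, scales))

# Laboratory datasets: harvested bunches only, one dataset per sensor.
laboratory = [("LAB", sensor, "bunch") for sensor in sensors]

datasets = in_situ + laboratory
print(len(in_situ), len(laboratory), len(datasets))  # 8 2 10
```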

2.2.1. Reference Measurements

2.2.2. Experiment One: Individual Bunches under Laboratory Conditions

- (a) RGB imagery: The camera was placed parallel to the suspended bunch at a distance of 60 cm. A single image per bunch was captured for image processing. A ruler was included in each image for length reference (Figure 3b).

- (b) RGB-D (Kinect) imagery: The Kinect sensor was placed 60 cm from the target. Coupled with the Kinect Fusion software (part of Microsoft’s Software Development Kit 1.8 for Windows [25]) running on a laptop, it captured an individual mesh per bunch, resulting in a solid 3-D model of the bunch. Meshes were captured in .stl format at a resolution of 640 voxels/m, with a volume resolution of 256 × 256 × 256 voxels. A white surface was positioned directly behind the bunch to improve contrast and depth determination, as illustrated by the mesh in Figure 3c. During mesh capture, the entire bunch system was rotated to provide different angles of view.
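The two mesh settings reported above can be related with simple arithmetic. The sketch below assumes that 640 voxels/m is the sampling density along each axis and that 256 × 256 × 256 voxels is the size of the reconstruction volume; that reading is an interpretation of the reported values, not something stated in the text, and the variable names are illustrative.

```python
# Reported Kinect Fusion settings (values from the text).
VOXELS_PER_METRE = 640   # sampling density along each axis
VOXELS_PER_AXIS = 256    # voxels along each side of the reconstruction volume

# Edge length of a single voxel, in millimetres.
voxel_edge_mm = 1000.0 / VOXELS_PER_METRE

# Edge length of the whole cubic volume, in metres (assumed interpretation).
volume_edge_m = VOXELS_PER_AXIS / VOXELS_PER_METRE

print(f"voxel edge: {voxel_edge_mm:.2f} mm")  # voxel edge: 1.56 mm
print(f"volume edge: {volume_edge_m:.2f} m")  # volume edge: 0.40 m
```

Under this reading, the reconstructed space is a cube of roughly 0.4 m per side, which would comfortably contain a single suspended bunch.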

2.2.3. Experiment Two: Individual Bunches in Field Conditions

- (a) RGB imagery: The camera captured images of the individual bunches for both FC (Figure 4a) and LR (Figure 4b) treatments. The camera was positioned approximately 40 cm from the bunch being imaged, with the reference length (ruler) kept in each image. Image acquisition occurred between 12H00 and 13H00, under natural solar illumination.

- (b) RGB-D (Kinect) imagery: The same software setup from experiment one was used, which allowed the Kinect to capture an individual mesh per bunch for FC (Figure 4c) and LR (Figure 4d) treatments. The imagery was captured after sunset (approximately 20H00) with artificial illumination. The Kinect was held approximately 60 cm from the bunch and was moved around the bunch axis by hand. For the LR treatment, a board was placed behind the bunches, thereby creating an artificial background to improve volume extraction (Figure 4d).

2.2.4. Experiment Three: Individual Vines in Field Conditions

- (a)

- (b)

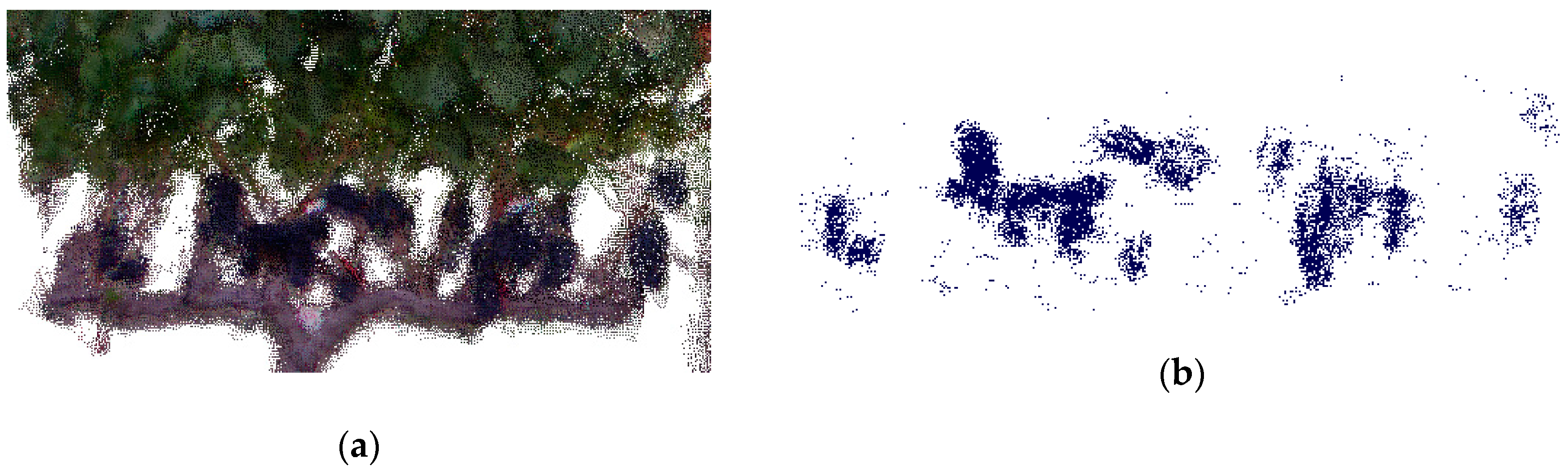

- RGB-D (Kinect) imagery: The Kinect sensor captured point clouds—instead of meshes—of the 31 vines, due to the scale difference. Point clouds consisted of thousands of individual points to create 3-D models; for both the FC and LR treatments (Figure 5c,d). The Real-Time Appearance-Based Mapping (RTAB-Map) software [26] was used for point-cloud modelling and regeneration. In RTAB-Map [26], the default filtering parameter and a 3-D cloud decimation (‘thinning’ of the point cloud) value of ‘2’ were used during exportation of the point clouds in .ply format. The point cloud was captured per individual row, repeated for both sides of the canopy. The Kinect sensor was hand-held approximately 2 m from the vines at a perpendicular angle and was moved along the row in a north to south direction. The imagery was collected at 19H00, immediately before sunset, using the last natural illumination of the day.

2.3. Data Analysis

2.3.1. RGB Imagery

- The reference length (obtained from the ruler) in each image was used to scale the image pixels, yielding a calibration value (cm² per pixel).

- Manual selection of the region of interest (ROI) containing the relevant bunch/bunches was undertaken. ROIs were strategically digitised so as to capture minimal background.

- The masked RGB images were then converted to the HSV (hue, saturation, value) colour space and segmented using MATLAB’s [27] Colour Thresholder app, part of the Image Processing Toolbox™. When selecting the threshold values, it was visually evident that the lighting conditions influenced them. Separate threshold values were therefore determined for the respective experiments. Threshold values were computed using a random training sample from the specific dataset, equivalent to 25% of the experiment’s dataset.

- After the image segmentation process, the number of segmented pixels was determined (an adaptation of the pixel count metric [9]) and converted into a pixel area (cm²) using the calibration value.
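The steps above can be sketched in a few lines. The authors worked in MATLAB's Colour Thresholder app; the Python sketch below is only an illustration of the same idea using the standard library. The function names, the toy pixel colours, the HSV bounds and the ruler measurements are all hypothetical and are not taken from the study.

```python
import colorsys

def pixel_area_cm2(ruler_length_cm, ruler_length_px):
    """Area represented by one pixel (cm^2), from a reference length in the image."""
    cm_per_px = ruler_length_cm / ruler_length_px
    return cm_per_px ** 2

def in_hsv_range(rgb, lower, upper):
    """True if an 8-bit RGB pixel lies inside the HSV box [lower, upper] (0..1 scale)."""
    r, g, b = (channel / 255.0 for channel in rgb)
    hsv = colorsys.rgb_to_hsv(r, g, b)
    return all(lo <= value <= hi for value, lo, hi in zip(hsv, lower, upper))

def bunch_area_cm2(roi_pixels, lower, upper, ruler_length_cm, ruler_length_px):
    """Count segmented pixels in the ROI and convert the count to an area in cm^2."""
    count = sum(in_hsv_range(p, lower, upper) for row in roi_pixels for p in row)
    return count, count * pixel_area_cm2(ruler_length_cm, ruler_length_px)

# Toy 2 x 3 ROI with hypothetical colours (not measured values).
BUNCH = (180, 200, 90)       # yellow-green pixel standing in for a berry
BACKGROUND = (120, 90, 60)   # brown pixel standing in for background
roi = [[BUNCH, BUNCH, BACKGROUND],
       [BACKGROUND, BUNCH, BACKGROUND]]

# Hypothetical HSV thresholds and ruler reading (10 cm spanning 200 pixels).
count, area = bunch_area_cm2(roi, (0.15, 0.3, 0.4), (0.30, 1.0, 1.0), 10.0, 200.0)
print(count, f"{area:.4f}")  # 3 0.0075
```

Because the thresholds depend on illumination, a real workflow would fit separate bounds per experiment, as the text describes.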

2.3.2. RGB-D (Kinect) Imagery

- Point clouds were imported into CloudCompare [28], and subsequently cleaned and sectioned to individual vines, focusing on the bunch zone.

- Bunches were segmented from the point cloud using their colour properties. CloudCompare’s [28] Filter Points by Value tool applies user-defined thresholds to the RGB colour values of the point cloud. Threshold values were determined on a random sample (25%) of the LR dataset.

- A custom-built script in R statistical software [31] was used for calculating the segmented point cloud’s volume, representing the vine’s bunches.
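The colour filtering and volume steps above can be illustrated with a toy example. The authors' script was written in R, and the heading of Section 3.2.2 together with the alphashape3d reference suggests an alpha-shape method; the sketch below does not reproduce that. It swaps in a much coarser voxel-occupancy estimate purely to show the data flow. The function names, colour bounds, voxel size and point coordinates are all hypothetical.

```python
def filter_by_colour(points, lower_rgb, upper_rgb):
    """Keep (x, y, z, r, g, b) points whose colour lies inside an RGB box."""
    return [
        p for p in points
        if all(lo <= c <= hi for c, lo, hi in zip(p[3:6], lower_rgb, upper_rgb))
    ]

def voxel_volume_ml(points, voxel_edge_m):
    """Approximate volume as (number of occupied voxels) x (voxel volume), in mL.

    This is a stand-in for an alpha-shape volume, not an implementation of one.
    """
    occupied = {
        (int(x // voxel_edge_m), int(y // voxel_edge_m), int(z // voxel_edge_m))
        for x, y, z, *_ in points
    }
    return len(occupied) * voxel_edge_m ** 3 * 1e6  # 1 m^3 = 1e6 mL

# Toy point cloud: coordinates in metres, colours as 8-bit RGB (hypothetical).
cloud = [
    (0.005, 0.005, 0.005, 180, 200, 90),   # bunch-coloured
    (0.015, 0.005, 0.005, 175, 195, 85),   # bunch-coloured
    (0.016, 0.006, 0.004, 185, 205, 95),   # bunch-coloured, same voxel as above
    (0.025, 0.005, 0.005, 60, 110, 40),    # leaf-coloured, filtered out
]

bunch_points = filter_by_colour(cloud, (150, 170, 60), (210, 230, 120))
volume = voxel_volume_ml(bunch_points, voxel_edge_m=0.01)
print(len(bunch_points), f"{volume:.1f} mL")  # 3 2.0 mL
```

Voxel counting on a surface-only point cloud is sensitive to the chosen voxel size, which is one reason a hull-based method such as an alpha shape may be preferred in practice.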

2.3.3. Cross-Validation

3. Results

3.1. Reference Measurements

3.2. Pre-Processing

3.2.1. RGB Segmentation Accuracy

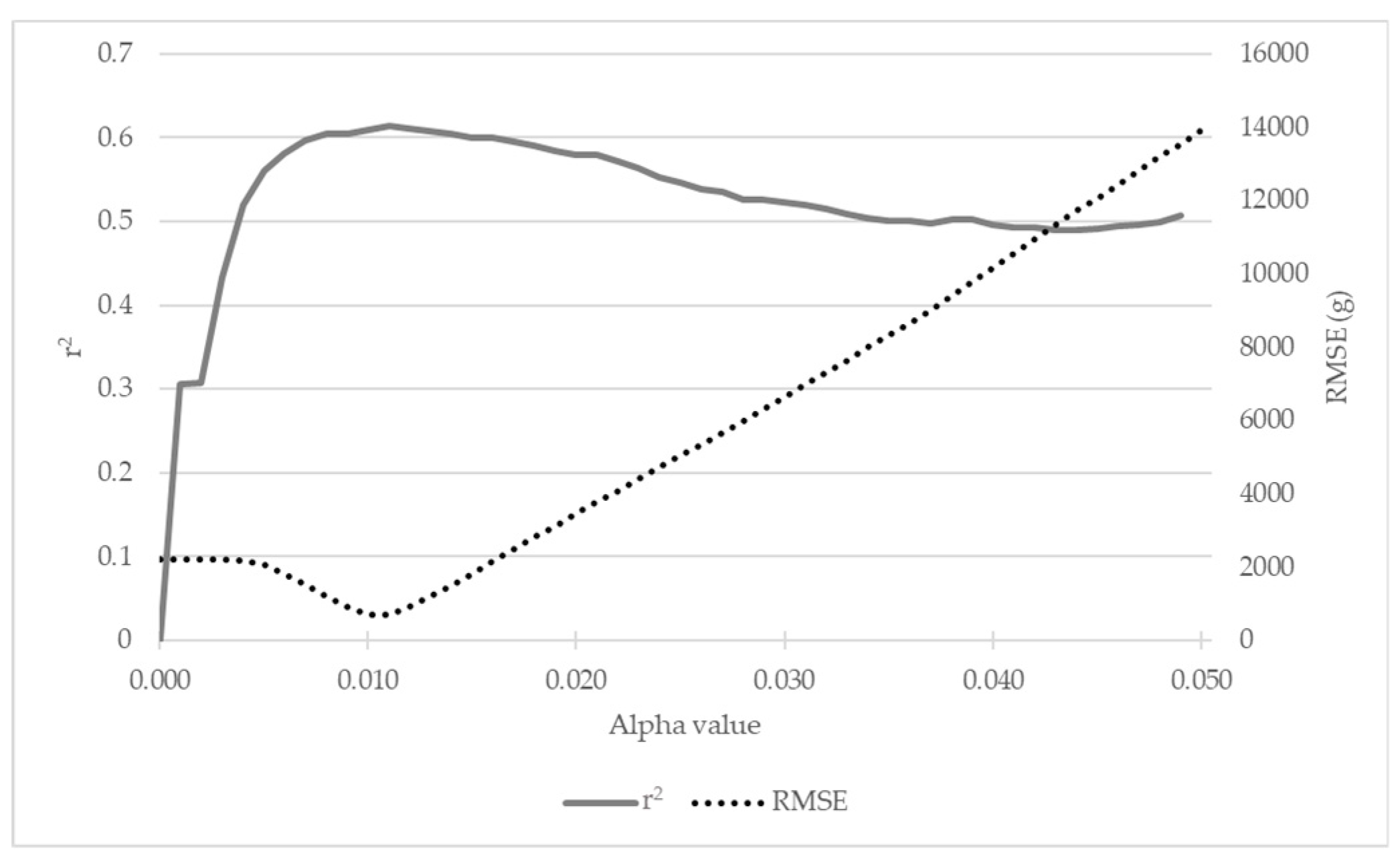

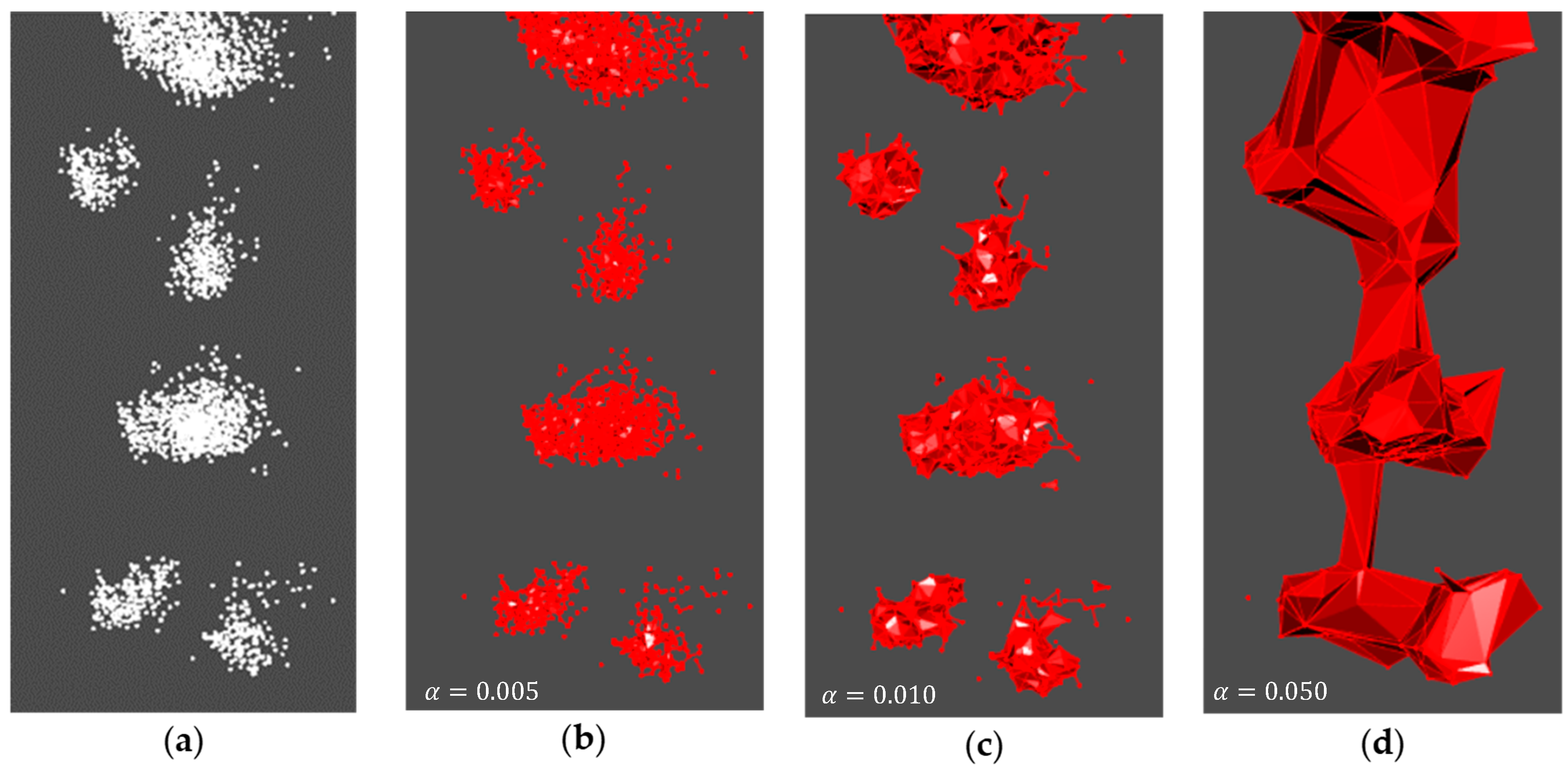

3.2.2. alphashape3d’s Adjusted Alpha Value

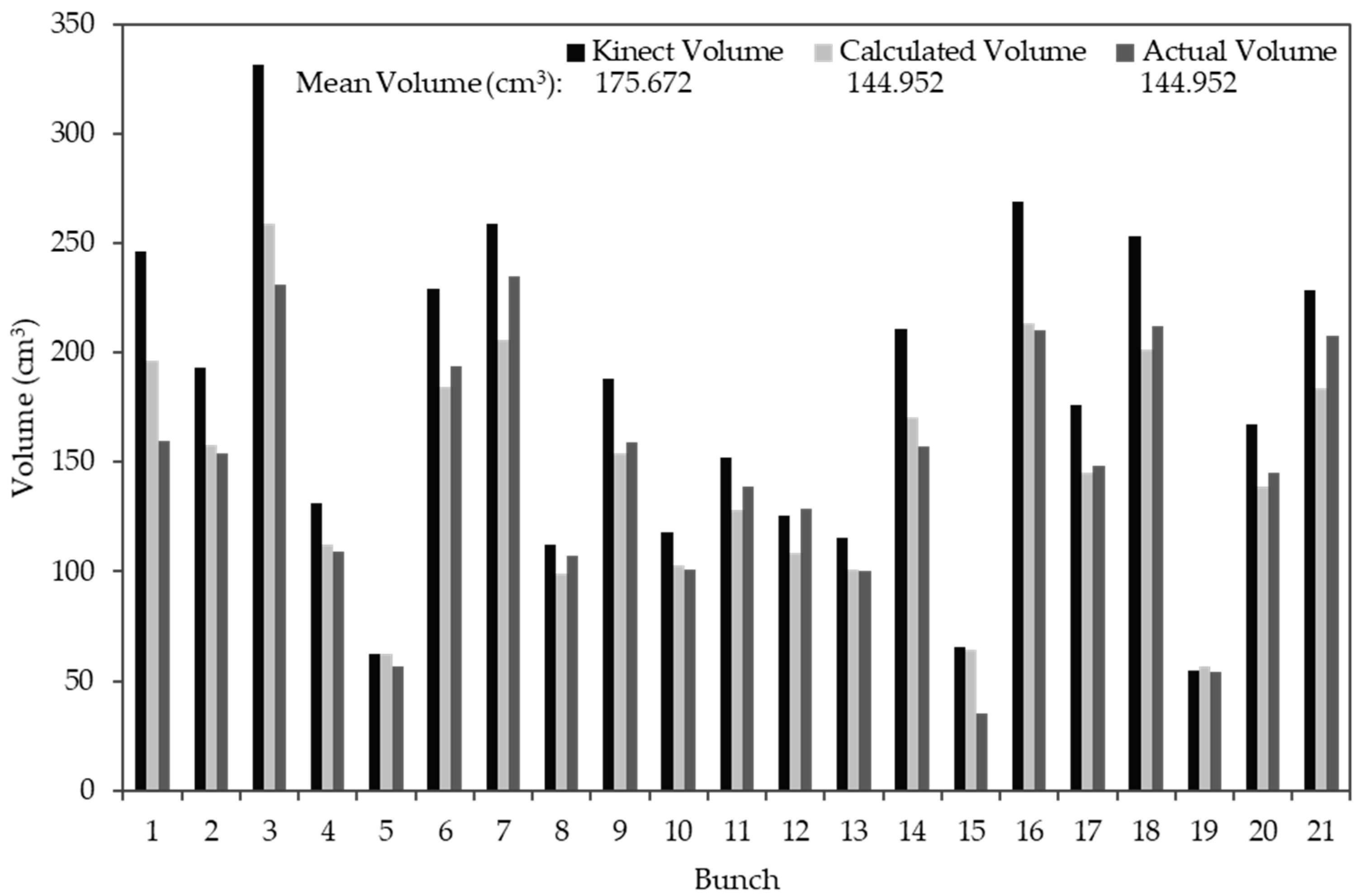

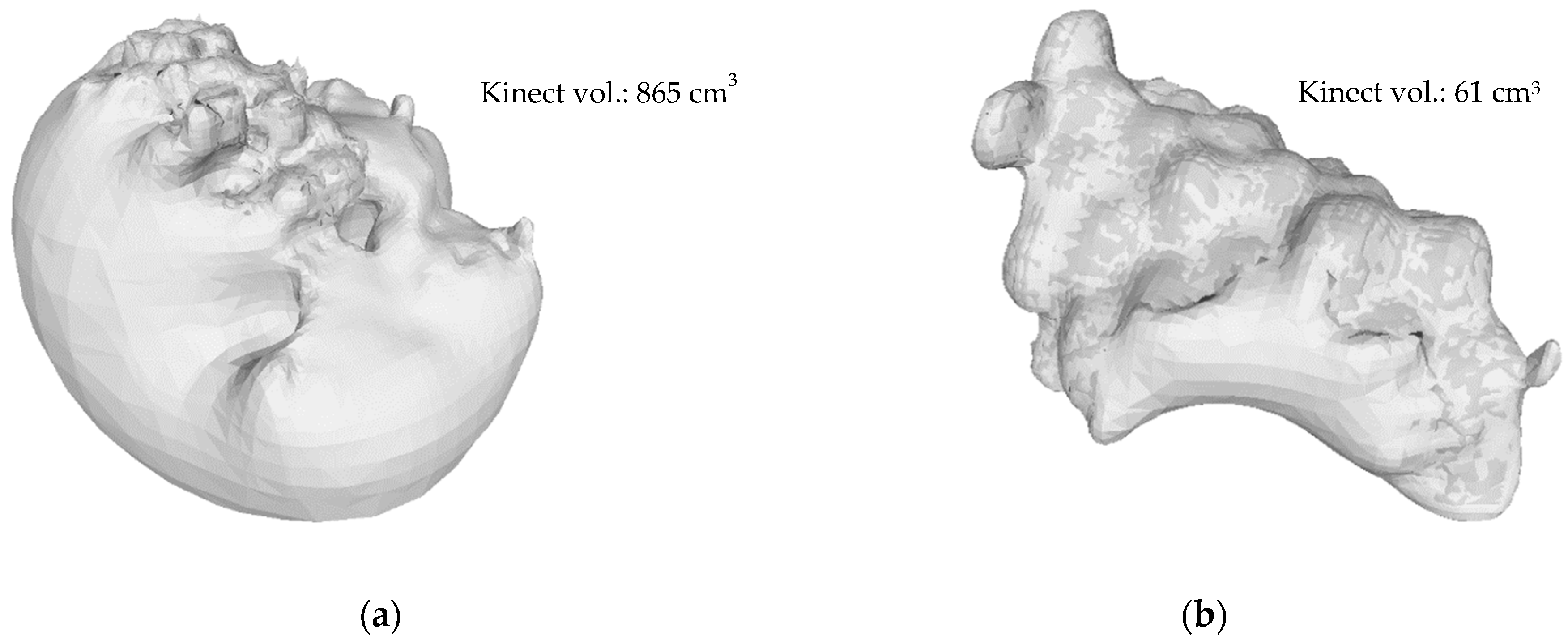

3.2.3. Kinect Volume Correction

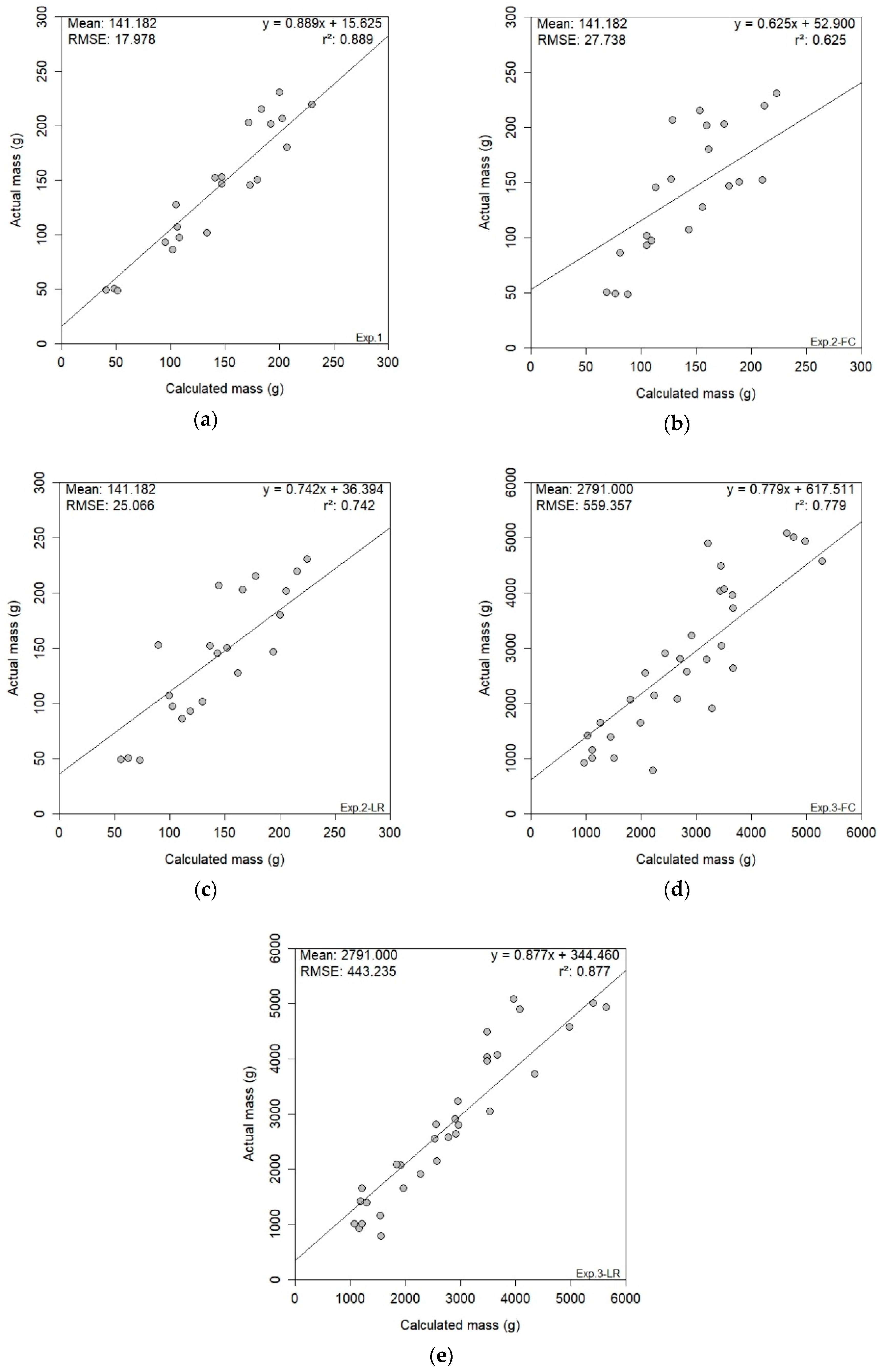

3.3. RGB Results

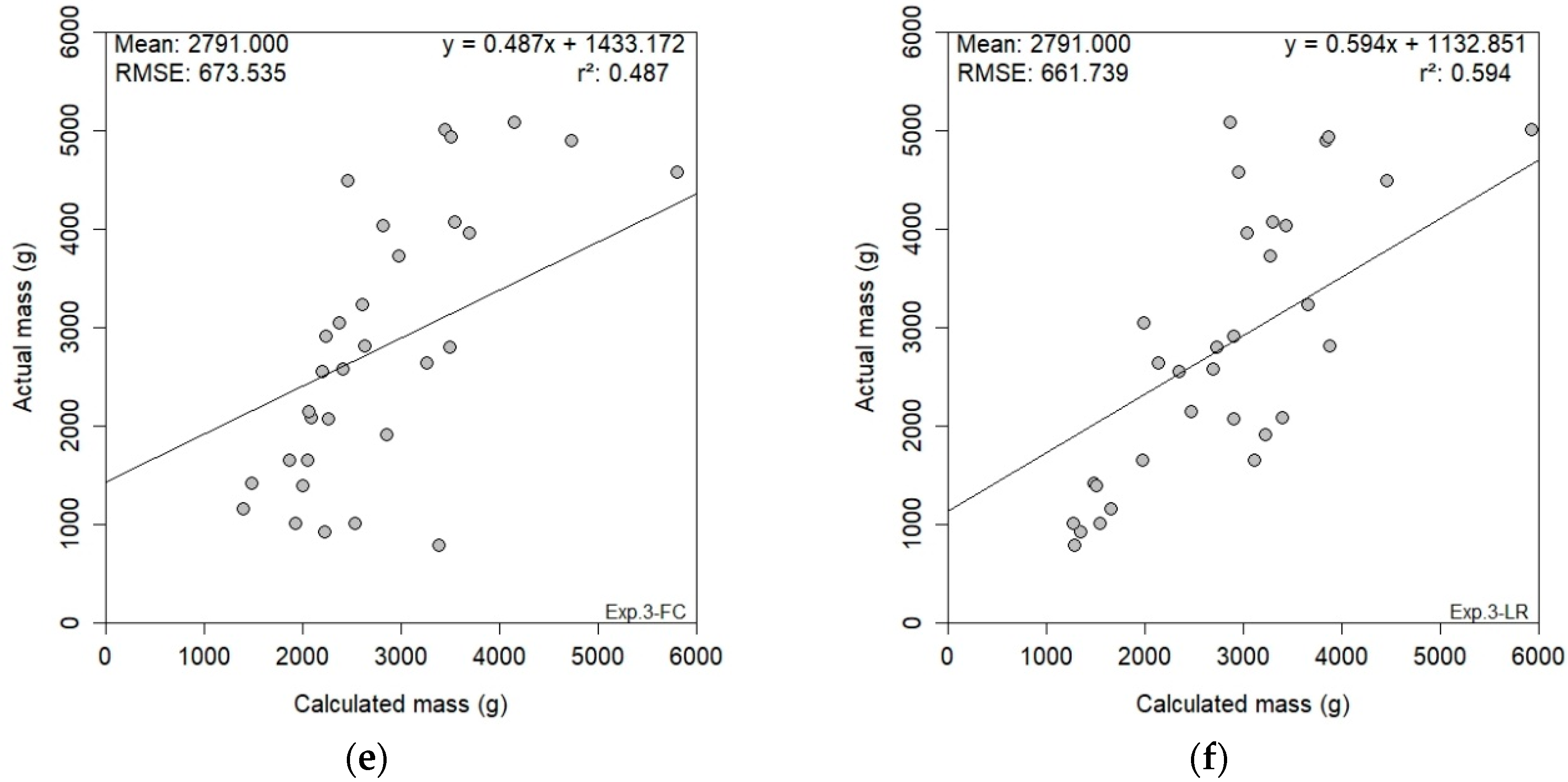

3.4. RGB-D Results

4. Discussion

4.1. Using 2-D RGB Imagery for Yield Estimation

4.2. Using 3-D RGB-D Imagery for Yield Estimation

4.3. The Operational Potential of Developed Methodologies

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Arnó, J.; Martínez-Casasnovas, J.A.; Ribes-Dasi, M.; Rosell, J.R. Review. Precision viticulture. Research topics, challenges and opportunities in site-specific vineyard management. Span. J. Agric. Res. 2009, 7, 779–790.

- Blackmore, S. Developing the Principles of Precision farming. In Proceedings of the International Conference on Precision Agriculture, Minneapolis, MN, USA, 14–17 July 2002; p. 5.

- Matese, A.; Di Gennaro, S.F. Technology in precision viticulture: A state of the art review. Int. J. Wine Res. 2015, 7, 69–81.

- Nuske, S.; Wilshusen, K.; Achar, S.; Yoder, L.; Narasimhan, S.; Singh, S. Automated visual yield estimation in vineyards. J. Field Robot. 2014, 31, 837–860.

- Cunha, M.; Marçal, A.R.S.; Silva, L. Very early prediction of wine yield based on satellite data from vegetation. Int. J. Remote Sens. 2010, 31, 3125–3142.

- Wolpert, J.A.; Vilas, E.P. Estimating Vineyard Yields: Introduction to a Simple, Two-Step Method. Am. J. Enol. Vitic. 1992, 43, 384–388.

- De la Fuente, M.; Linares, R.; Baeza, P.; Miranda, C.; Lissarrague, J. Comparison of Different Methods of Grapevine Yield Prediction in the Time Window. J. Int. Sci. Vigne Vin 2015, 49, 27–35.

- Dunn, G.M.; Martin, S.R. Yield prediction from digital image analysis: A technique with potential for vineyard assessments prior to harvest. Aust. J. Grape Wine Res. 2004, 10, 196–198.

- Liu, S.; Marden, S.; Whitty, M. Towards automated yield estimation in viticulture. In Proceedings of the Australasian Conference on Robotics and Automation, Sydney, Australia, 2–4 December 2013.

- Marinello, F.; Pezzuolo, A.; Cillis, D.; Sartori, L. Kinect 3D reconstruction for quantification of grape bunches volume and mass. In Proceedings of the Engineering for Rural Development, Jelgava, Latvia, 25–27 May 2016; pp. 876–881.

- Nuske, S.; Achar, S.; Bates, T.; Narasimhan, S.; Singh, S. Yield estimation in vineyards by visual grape detection. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 2352–2358.

- Aquino, A.; Millan, B.; Diago, M.P.; Tardaguila, J. Automated early yield prediction in vineyards from on-the-go image acquisition. Comput. Electron. Agric. 2018, 144, 26–36.

- Diago, M.P.; Correa, C.; Millán, B.; Barreiro, P.; Valero, C.; Tardaguila, J. Grapevine yield and leaf area estimation using supervised classification methodology on RGB images taken under field conditions. Sensors 2012, 12, 16988–17006.

- Font, D.; Tresanchez, M.; Martínez, D.; Moreno, J.; Clotet, E.; Palacín, J. Vineyard Yield Estimation Based on the Analysis of High Resolution Images Obtained with Artificial Illumination at Night. Sensors 2015, 15, 8284–8301.

- Liu, S.; Whitty, M. Automatic grape bunch detection in vineyards with an SVM classifier. J. Appl. Log. 2015, 13, 643–653.

- Millan, B.; Velasco-Forero, S.; Aquino, A.; Tardaguila, J. On-the-go grapevine yield estimation using image analysis and boolean model. J. Sens. 2018.

- Bengochea-Guevara, J.M.; Andújar, D.; Sanchez-Sardana, F.L.; Cantuña, K.; Ribeiro, A. A low-cost approach to automatically obtain accurate 3D models of woody crops. Sensors 2018, 18, 30.

- Wang, W.; Li, C. Size estimation of sweet onions using consumer-grade RGB-depth sensor. J. Food Eng. 2014, 142, 153–162.

- Andújar, D.; Ribeiro, A.; Fernández-Quintanilla, C.; Dorado, J. Using depth cameras to extract structural parameters to assess the growth state and yield of cauliflower crops. Comput. Electron. Agric. 2016, 122, 67–73.

- Herrero-Huerta, M.; González-Aguilera, D.; Rodriguez-Gonzalvez, P.; Hernández-López, D. Vineyard yield estimation by automatic 3D bunch modelling in field conditions. Comput. Electron. Agric. 2015, 110, 17–26.

- Ivorra, E.; Sánchez, A.J.; Camarasa, J.G.; Diago, M.P.; Tardaguila, J. Assessment of grape cluster yield components based on 3D descriptors using stereo vision. Food Control 2015, 50, 273–282.

- Rose, J.C.; Kicherer, A.; Wieland, M.; Klingbeil, L.; Töpfer, R.; Kuhlmann, H. Towards automated large-scale 3D phenotyping of vineyards under field conditions. Sensors 2016, 16, 2136.

- Conradie, W.; Carey, V.; Bonnardot, V.; Saayman, D.; van Schoor, L. Effect of Different Environmental Factors on the Performance of Sauvignon blanc Grapevines in the Stellenbosch/Durbanville Districts of South Africa. I. Geology, Soil, Climate, Phenology and Grape Composition. S. Afr. J. Enol. Vitic. 2002, 23, 78–91.

- Ferreira, J.H.S.; Marais, P.G. Effect of Rootstock Cultivar, Pruning Method and Crop Load on Botrytis cinerea Rot of Vitis vinifera cv. Chenin blanc grapes. S. Afr. J. Enol. Vitic. 2017, 8, 41–44.

- Microsoft. Kinect for Windows SDK 1.8. Available online: https://www.microsoft.com/en-us/download/details.aspx?id=40276 (accessed on 16 May 2019).

- Labbe, M. RTAB-Map. Available online: http://introlab.github.io/rtabmap/ (accessed on 1 December 2018).

- The MathWorks Inc. Matlab R2018b v9.5.0.944444. Available online: https://ww2.mathworks.cn/en/ (accessed on 8 January 2019).

- CloudCompare. CloudCompare Version 2.10.alpha [GPL Software]. Available online: http://www.cloudcompare.org/ (accessed on 29 November 2018).

- Cignoni, P.; Callieri, M.; Corsini, M.; Dellepiane, M.; Ganovelli, F.; Ranzuglia, G. MeshLab: An Open-Source Mesh Processing Tool. In Proceedings of the Sixth Eurographics Italian Chapter Conference, the Eurographics Association, Pisa, Italy, 2–4 July 2008; pp. 129–136.

- Kazhdan, M.; Hoppe, H. Screened poisson surface reconstruction. ACM Trans. Graph. 2013, 32, 29.

- R Core Team. R: A Language and Environment for Statistical Computing; R Foundation for Statistical Computing: Vienna, Austria, 2018.

- Lafarge, T.; Pateiro-Lopez, B. alphashape3d: Implementation of the 3D Alpha-Shape for the Reconstruction of 3D Sets from a Point Cloud. R package version 1.3. 2017. Available online: https://rdrr.io/cran/alphashape3d/ (accessed on 20 August 2019).

- Lafarge, T.; Pateiro-López, B.; Possolo, A.; Dunkers, J.P. R Implementation of a Polyhedral Approximation to a 3D Set of Points Using the α-Shape. J. Stat. Softw. 2014, 56, 1–19.

- Rueda-Ayala, V.; Peña, J.; Höglind, M.; Bengochea-Guevara, J.; Andújar, D. Comparing UAV-Based Technologies and RGB-D Reconstruction Methods for Plant Height and Biomass Monitoring on Grass Ley. Sensors 2019, 19, 535.

- Ribeiro, A.; Bengochea-Guevara, J.M.; Conesa-Muñoz, J.; Nuñez, N.; Cantuña, K.; Andújar, D. 3D monitoring of woody crops using an unmanned ground vehicle. In Proceedings of the 11th European Conference on Precision Agriculture, Advances in Animal Biosciences, Edinburgh, UK, 16–20 July 2017; Volume 8, pp. 210–215.

- Kuhn, M. Building predictive models in R using the caret package. J. Stat. Softw. 2008, 28, 1–26.

- Wasenmüller, O.; Stricker, D. Comparison of Kinect V1 and V2 Depth Images in Terms of Accuracy and Precision. In Proceedings of the ACCV Workshops, Taipei, Taiwan, 20–24 November 2016; pp. 34–45.

- Lopes, C.; Torres, A.; Guzman, R.; Graca, J.; Reyes, M.; Vitorino, G.; Braga, R.; Monteiro, A.; Barriguinha, A. Using an unmanned ground vehicle to scout vineyards for non-intrusive estimation of canopy features and grape yield. In Proceedings of the 20th GiESCO International Meeting, Mendoza, Argentina, 5–10 November 2017; pp. 16–21.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hacking, C.; Poona, N.; Manzan, N.; Poblete-Echeverría, C. Investigating 2-D and 3-D Proximal Remote Sensing Techniques for Vineyard Yield Estimation. Sensors 2019, 19, 3652. https://doi.org/10.3390/s19173652
