Coarse-to-Fine Hand–Object Pose Estimation with Interaction-Aware Graph Convolutional Network

Abstract

1. Introduction

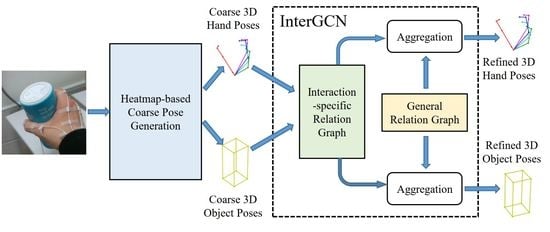

- We propose a novel deep learning framework for hand–object pose estimation that progressively refines its pose estimates using a coarse-to-fine strategy;

- We introduce an Interaction-aware Graph Convolutional Network that explicitly models rich and dynamic hand–object relations to refine the coarse pose estimates;

- Extensive experiments on the FPHA-HO and HO-3D benchmarks demonstrate that our approach outperforms state-of-the-art methods on most metrics.

2. Related Works

2.1. Hand–Object Pose Estimation

2.2. Graph Convolutional Networks

3. Methods

3.1. Heatmap-Based Coarse Pose Generation
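
Heatmap-based pose estimators must convert each joint's heatmap into image coordinates. A minimal sketch, assuming one 2D heatmap per joint and the common soft-argmax decoding (a softmax over all pixels followed by the expected pixel location); the function name `soft_argmax_2d` and the sharpness parameter `beta` are hypothetical, and this work may use a hard argmax or a different decoder.

```python
import numpy as np


def soft_argmax_2d(heatmaps: np.ndarray, beta: float = 1.0) -> np.ndarray:
    """Decode (J, H, W) heatmaps into (J, 2) sub-pixel (x, y) coordinates.

    Hypothetical helper: softmax over all pixels of each heatmap,
    then the expected x and y under that distribution.
    """
    j, h, w = heatmaps.shape
    flat = beta * heatmaps.reshape(j, -1)
    flat = flat - flat.max(axis=1, keepdims=True)  # numerical stability
    probs = np.exp(flat)
    probs /= probs.sum(axis=1, keepdims=True)
    probs = probs.reshape(j, h, w)
    xs, ys = np.arange(w), np.arange(h)
    x = (probs.sum(axis=1) * xs).sum(axis=1)  # marginalize rows, expectation over columns
    y = (probs.sum(axis=2) * ys).sum(axis=1)  # marginalize columns, expectation over rows
    return np.stack([x, y], axis=1)


hm = np.zeros((1, 8, 8))
hm[0, 3, 5] = 10.0  # sharp peak at row y=3, column x=5
print(soft_argmax_2d(hm, beta=5.0))  # approximately [[5. 3.]]
```

Unlike a hard argmax, this decoding is differentiable, which is why it is often preferred when coordinates feed a later refinement stage.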

3.2. Interaction-Aware Graph Convolutional Network

3.2.1. Graph Convolution
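
For reference, the widely used graph convolution of Kipf and Welling propagates node features through a normalized adjacency, H' = σ(D^(-1/2) (A + I) D^(-1/2) H W), where D is the degree matrix of A + I and W is a learned weight matrix. The NumPy sketch below illustrates only that standard layer; the function names are hypothetical, and the propagation rule, normalization, and activation used in this work may differ.

```python
import numpy as np


def normalize_adjacency(adj: np.ndarray) -> np.ndarray:
    """Return D^(-1/2) (A + I) D^(-1/2), the renormalized adjacency of Kipf & Welling."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))
    # element (i, j) becomes a_ij / sqrt(d_i * d_j)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def gcn_layer(x: np.ndarray, adj: np.ndarray, weight: np.ndarray) -> np.ndarray:
    """One graph convolution with ReLU.

    x: (N, C_in) node features, adj: (N, N) adjacency, weight: (C_in, C_out).
    """
    return np.maximum(normalize_adjacency(adj) @ x @ weight, 0.0)


adj = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)  # path graph 0-1-2
x = np.eye(3)  # one-hot node features
w = np.ones((3, 2))
print(gcn_layer(x, adj, w).shape)  # (3, 2)
```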

3.2.2. General Relation Graph
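
The contributions describe hand–object relations as rich and dynamic. One common way to obtain a data-dependent graph, used for example in the two-stream adaptive GCN of Shi et al., is to score every node pair by the product of two embeddings of their features and normalize the scores with a row-wise softmax. The sketch below is a hypothetical illustration of that idea, not the construction of the General Relation Graph in this work; `theta` and `phi` stand in for learned projection matrices and the sizes are illustrative.

```python
import numpy as np


def dynamic_adjacency(x: np.ndarray, theta: np.ndarray, phi: np.ndarray) -> np.ndarray:
    """Data-dependent adjacency: row-wise softmax of (X theta)(X phi)^T.

    x: (N, C) node features; theta, phi: (C, D) hypothetical learned projections.
    Returns an (N, N) non-negative matrix whose rows sum to 1.
    """
    scores = (x @ theta) @ (x @ phi).T
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)


rng = np.random.default_rng(0)
x = rng.normal(size=(5, 8))  # 5 nodes, 8 feature channels (illustrative)
theta, phi = rng.normal(size=(8, 4)), rng.normal(size=(8, 4))
adj = dynamic_adjacency(x, theta, phi)
print(adj.shape)  # (5, 5)
```

Because the edge weights are recomputed from the current node features, the graph changes from one input to the next, which is one way to let relations between hand joints and object keypoints vary with the grasp.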

Algorithm 1. Construct relation subgraphs

3.2.3. Interaction-Specific Relation Graph

3.2.4. Feature Aggregation

3.3. Loss Functions

3.3.1. Heatmap Loss
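
Heatmap losses are commonly a mean squared error between predicted heatmaps and target heatmaps rendered as 2D Gaussians centered on the ground-truth joint locations, as in Convolutional Pose Machines (Wei et al.). A minimal sketch under that assumption; the Gaussian width `sigma` and the function names are hypothetical, and the loss used in this work may be weighted or normalized differently.

```python
import numpy as np


def gaussian_heatmaps(joints_xy: np.ndarray, height: int, width: int, sigma: float = 2.0) -> np.ndarray:
    """Render one Gaussian target heatmap per joint; joints_xy is (J, 2) in pixel (x, y)."""
    ys, xs = np.mgrid[0:height, 0:width]  # ys: row indices, xs: column indices
    dx = xs[None] - joints_xy[:, 0, None, None]
    dy = ys[None] - joints_xy[:, 1, None, None]
    return np.exp(-(dx ** 2 + dy ** 2) / (2.0 * sigma ** 2))  # peak value 1 at the joint


def heatmap_loss(pred: np.ndarray, target: np.ndarray) -> float:
    """Mean squared error over all joints and pixels."""
    return float(np.mean((pred - target) ** 2))


target = gaussian_heatmaps(np.array([[4.0, 2.0]]), height=8, width=8)
peak = np.unravel_index(target[0].argmax(), target[0].shape)
print(tuple(int(i) for i in peak))  # (2, 4): row y=2, column x=4
print(heatmap_loss(target, target))  # 0.0
```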

3.3.2. 3D Pose Loss

4. Experiments

4.1. Datasets

4.2. Evaluation Metrics
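
The result tables report mean 3D errors and the area under the PCK curve. A minimal sketch of how such metrics are commonly computed, assuming predictions and ground truth are (N, J, 3) arrays in millimetres, the root joint is index 0 for the relative error, and a hypothetical 0 to 50 mm threshold range for PCK; the root joint, thresholds, and any alignment used in this work may differ.

```python
import numpy as np


def mean_joint_error(pred: np.ndarray, gt: np.ndarray) -> float:
    """Euclidean distance per joint, averaged over all samples and joints."""
    return float(np.linalg.norm(pred - gt, axis=-1).mean())


def root_relative_error(pred: np.ndarray, gt: np.ndarray, root: int = 0) -> float:
    """Same error after translating both poses so the root joint sits at the origin."""
    return mean_joint_error(pred - pred[:, root:root + 1], gt - gt[:, root:root + 1])


def pck_auc(pred: np.ndarray, gt: np.ndarray, thresholds) -> tuple:
    """PCK at each threshold and the area under the curve, normalized to [0, 1]."""
    thresholds = np.asarray(thresholds, dtype=float)
    dists = np.linalg.norm(pred - gt, axis=-1).ravel()
    pck = np.array([(dists <= t).mean() for t in thresholds])
    # trapezoidal rule divided by the threshold range, so a perfect curve scores 1.0
    area = np.sum((pck[1:] + pck[:-1]) / 2.0 * np.diff(thresholds))
    return pck, float(area / (thresholds[-1] - thresholds[0]))


gt = np.zeros((2, 21, 3))  # 2 samples, 21 hand joints (illustrative)
pred = gt + np.array([10.0, 0.0, 0.0])  # every joint off by 10 mm along x
thresholds = np.linspace(0.0, 50.0, 51)
print(mean_joint_error(pred, gt))  # 10.0
print(root_relative_error(pred, gt))  # 0.0, a global offset cancels out
pck, auc = pck_auc(pred, gt, thresholds)
print(round(auc, 3))  # 0.81
```

The example shows why absolute and root-relative errors are reported separately: a pure translation error inflates the absolute error but leaves the root-relative error untouched.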

4.3. Implementation Details

4.4. Comparisons with State-of-the-Art Methods

4.5. Ablation Study

4.5.1. Effectiveness of Coarse-to-Fine Strategy

4.5.2. Effectiveness of Relation Graphs

4.5.3. Visualization of Relation Graphs

4.6. Qualitative Results

4.7. Runtime

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Tompson, J.; Stein, M.; LeCun, Y.; Perlin, K. Real-time continuous pose recovery of human hands using convolutional networks. ACM Trans. Graph. 2014, 33, 1–10.

- Oberweger, M.; Wohlhart, P.; Lepetit, V. Training a Feedback Loop for Hand Pose Estimation. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015.

- Zimmermann, C.; Brox, T. Learning to Estimate 3D Hand Pose from Single RGB Images. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Iqbal, U.; Molchanov, P.; Breuel, T.; Gall, J.; Kautz, J. Hand Pose Estimation via Latent 2.5D Heatmap Regression. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Wan, C.; Probst, T.; Van Gool, L.; Yao, A. Dense 3D Regression for Hand Pose Estimation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Ge, L.; Ren, Z.; Li, Y.; Xue, Z.; Wang, Y.; Cai, J.; Yuan, J. 3D Hand Shape and Pose Estimation from a Single RGB Image. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Huang, W.; Ren, P.; Wang, J.; Qi, Q.; Sun, H. AWR: Adaptive Weighting Regression for 3D Hand Pose Estimation. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020.

- Kehl, W.; Manhardt, F.; Tombari, F.; Ilic, S.; Navab, N. SSD-6D: Making RGB-Based 3D Detection and 6D Pose Estimation Great Again. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Tekin, B.; Sinha, S.N.; Fua, P. Real-Time Seamless Single Shot 6D Object Pose Prediction. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Peng, S.; Liu, Y.; Huang, Q.; Zhou, X.; Bao, H. PVNet: Pixel-wise Voting Network for 6DoF Pose Estimation. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Kao, Y.; Li, W.; Wang, Q.; Lin, Z.; Kim, W.; Hong, S. Synthetic Depth Transfer for Monocular 3D Object Pose Estimation in the Wild. In Proceedings of the AAAI Conference on Artificial Intelligence (AAAI), New York, NY, USA, 7–12 February 2020.

- Huang, L.; Tan, J.; Meng, J.; Liu, J.; Yuan, J. HOT-Net: Non-Autoregressive Transformer for 3D Hand-Object Pose Estimation. In Proceedings of the 28th ACM International Conference on Multimedia, Seattle, WA, USA, 12–16 October 2020.

- Hamer, H.; Schindler, K.; Koller-Meier, E.; Van Gool, L. Tracking a Hand Manipulating an Object. In Proceedings of the 2009 IEEE International Conference on Computer Vision (ICCV), Kyoto, Japan, 29 September–2 October 2009.

- Choi, C.; Yoon, S.H.; Chen, C.-N.; Ramani, K. Robust Hand Pose Estimation during the Interaction with an Unknown Object. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Mueller, F.; Bernard, F.; Sotnychenko, O.; Mehta, D.; Sridhar, S.; Casas, D.; Theobalt, C. GANerated Hands for Real-Time 3D Hand Tracking from Monocular RGB. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Hasson, Y.; Varol, G.; Tzionas, D.; Kalevatykh, I.; Black, M.J.; Laptev, I.; Schmid, C. Learning Joint Reconstruction of Hands and Manipulated Objects. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Oberweger, M.; Wohlhart, P.; Lepetit, V. Generalized feedback loop for joint hand-object pose estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 42, 1898–1912.

- Tekin, B.; Bogo, F.; Pollefeys, M. H+O: Unified Egocentric Recognition of 3D Hand-Object Poses and Interactions. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Doosti, B.; Naha, S.; Mirbagheri, M.; Crandall, D.J. HOPE-Net: A Graph-based Model for Hand-Object Pose Estimation. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020.

- Cai, Y.; Ge, L.; Liu, J.; Cai, J.; Cham, T.-J.; Yuan, J.; Thalmann, N.M. Exploiting Spatial-temporal Relationships for 3D Pose Estimation via Graph Convolutional Networks. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–3 November 2019.

- Sridhar, S.; Mueller, F.; Zollhöfer, M.; Casas, D.; Oulasvirta, A.; Theobalt, C. Real-Time Joint Tracking of a Hand Manipulating an Object from RGB-D Input. In Proceedings of the 2016 European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016.

- Tsoli, A.; Argyros, A.A. Joint 3D Tracking of a Deformable Object in Interaction with a Hand. In Proceedings of the 2018 European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Baek, S.; Kim, K.I.; Kim, T.-K. Weakly-supervised Domain Adaptation via GAN and Mesh Model for Estimating 3D Hand Poses Interacting Objects. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020.

- Romero, J.; Tzionas, D.; Black, M.J. Embodied hands: Modeling and capturing hands and bodies together. ACM Trans. Graph. 2017, 36, 1–17.

- Kipf, T.N.; Welling, M. Semi-supervised Classification with Graph Convolutional Networks. In Proceedings of the 5th International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017.

- Li, R.; Wang, S.; Zhu, F.; Huang, J. Adaptive Graph Convolutional Neural Networks. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence (AAAI), New Orleans, LA, USA, 2–7 February 2018.

- Niepert, M.; Ahmed, M.; Kutzkov, K. Learning Convolutional Neural Networks for Graphs. In Proceedings of the 33rd International Conference on Machine Learning (ICML), New York, NY, USA, 19–24 June 2016.

- Zhao, L.; Peng, X.; Tian, Y.; Kapadia, M.; Metaxas, D.N. Semantic Graph Convolutional Networks for 3D Human Pose Regression. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Two-Stream Adaptive Graph Convolutional Networks for Skeleton-Based Action Recognition. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

- Wei, S.-E.; Ramakrishna, V.; Kanade, T.; Sheikh, Y. Convolutional Pose Machines. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Zhou, X.; Huang, Q.; Sun, X.; Xue, X.; Wei, Y. Towards 3D Human Pose Estimation in the Wild: A Weakly-supervised Approach. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017.

- Garcia-Hernando, G.; Yuan, S.; Baek, S.; Kim, T.-K. First-Person Hand Action Benchmark with RGB-D Videos and 3D Hand Pose Annotations. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Hampali, S.; Rad, M.; Oberweger, M.; Lepetit, V. HOnnotate: A method for 3D Annotation of Hand and Object Poses. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–18 June 2020.

- Chang, A.X.; Funkhouser, T.; Guibas, L.; Hanrahan, P.; Huang, Q.; Li, Z.; Savarese, S.; Savva, M.; Song, S.; Su, H.; et al. ShapeNet: An Information-Rich 3D Model Repository. arXiv 2015, arXiv:1512.03012.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

| Model | Abs. HP Error (mm) | Rel. HP Error (mm) | Abs. OP Error (mm) |
|---|---|---|---|
| H+O | 15.81 | - | 24.89 |
| HOT-Net | 15.18 | 10.41 | 21.37 |
| Ours Coarse | 16.25 | 10.04 | 27.31 |
| Ours | 14.97 | 8.32 | 23.07 |

| Dataset | Model | AUC on PCK | AUC on PCP |
|---|---|---|---|
| FPHA-HO | HOT-Net | 0.829 | 0.595 |
|  | Ours | 0.839 | 0.654 |
| HO-3D | HOT-Net | 0.819 | 0.567 |
|  | Ours | 0.805 | 0.583 |

| Method | Abs. HP Error (mm) | Rel. HP Error (mm) | Abs. OP Error (mm) |
|---|---|---|---|
| Ours Coarse | 16.25 | 10.04 | 27.31 |
| Ours w/o | 15.59 | 9.14 | 24.83 |
| Ours w/o | 15.26 | 8.79 | 23.89 |
| Ours | 14.97 | 8.32 | 23.07 |
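
The ablation table allows the contribution of the refinement stage to be quantified. The short computation below derives the relative error reduction from "Ours Coarse" to "Ours" as (coarse - full) / coarse, using only the values in the table; the variable and function names are illustrative.

```python
# Errors (mm) copied from the "Ours Coarse" and "Ours" rows of the ablation table.
coarse = {"Abs. HP": 16.25, "Rel. HP": 10.04, "Abs. OP": 27.31}
full = {"Abs. HP": 14.97, "Rel. HP": 8.32, "Abs. OP": 23.07}


def relative_reduction(before: float, after: float) -> float:
    """Fraction of the original error removed by the refinement stage."""
    return (before - after) / before


for metric in coarse:
    print(f"{metric}: {100 * relative_reduction(coarse[metric], full[metric]):.1f}%")
# Abs. HP: 7.9%
# Rel. HP: 17.1%
# Abs. OP: 15.5%
```

In relative terms, refinement helps the root-relative hand error and the object error roughly twice as much as the absolute hand error.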

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, M.; Li, A.; Liu, H.; Wang, M. Coarse-to-Fine Hand–Object Pose Estimation with Interaction-Aware Graph Convolutional Network. Sensors 2021, 21, 8092. https://doi.org/10.3390/s21238092

Zhang M, Li A, Liu H, Wang M. Coarse-to-Fine Hand–Object Pose Estimation with Interaction-Aware Graph Convolutional Network. Sensors. 2021; 21(23):8092. https://doi.org/10.3390/s21238092

Chicago/Turabian Style: Zhang, Maomao, Ao Li, Honglei Liu, and Minghui Wang. 2021. "Coarse-to-Fine Hand–Object Pose Estimation with Interaction-Aware Graph Convolutional Network" Sensors 21, no. 23: 8092. https://doi.org/10.3390/s21238092