Recognizing Emotions through Facial Expressions: A Largescale Experimental Study

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Materials

2.3. Procedure

2.4. Data Analyses

3. Results

3.1. Main Effects on Emotion Recognition

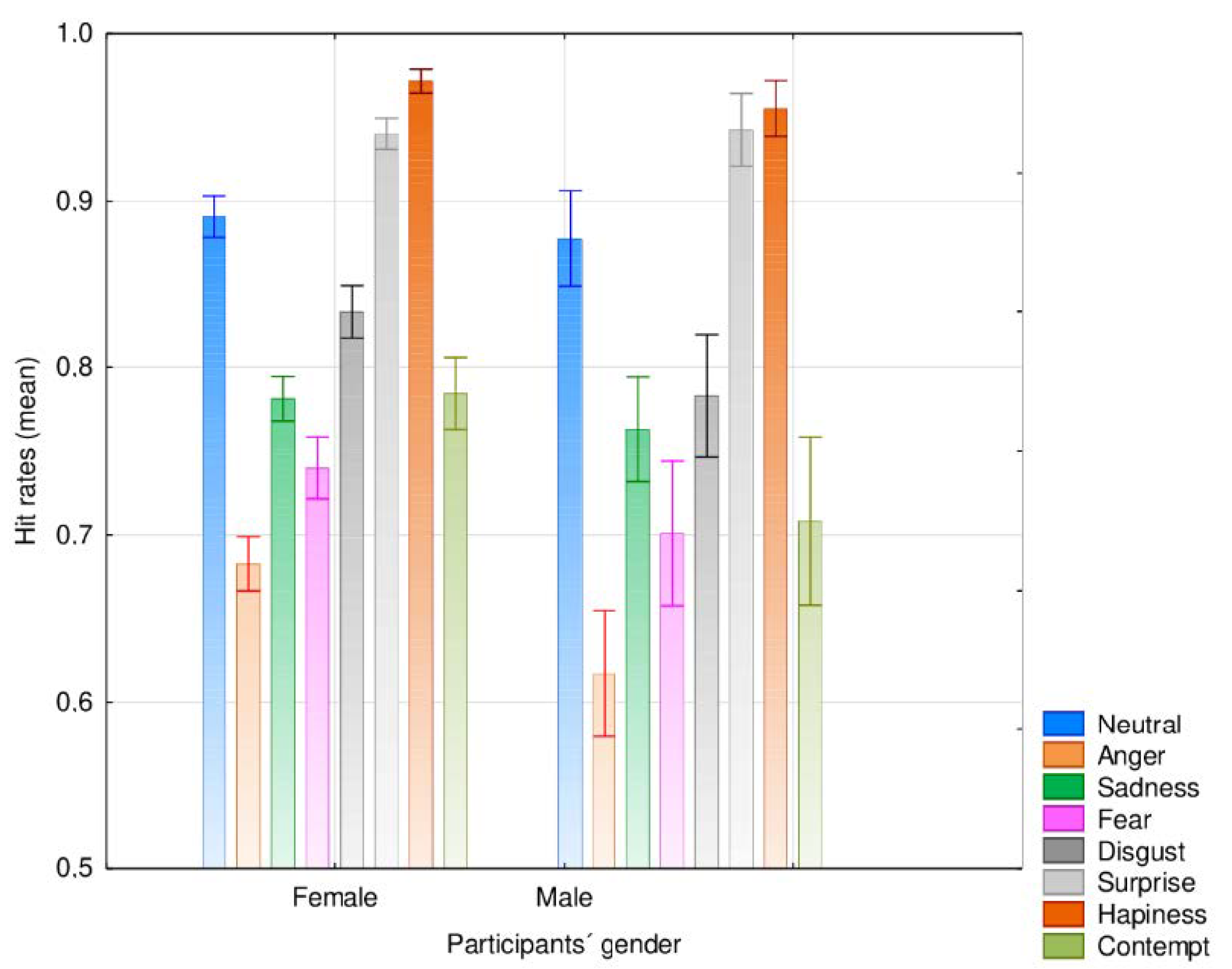

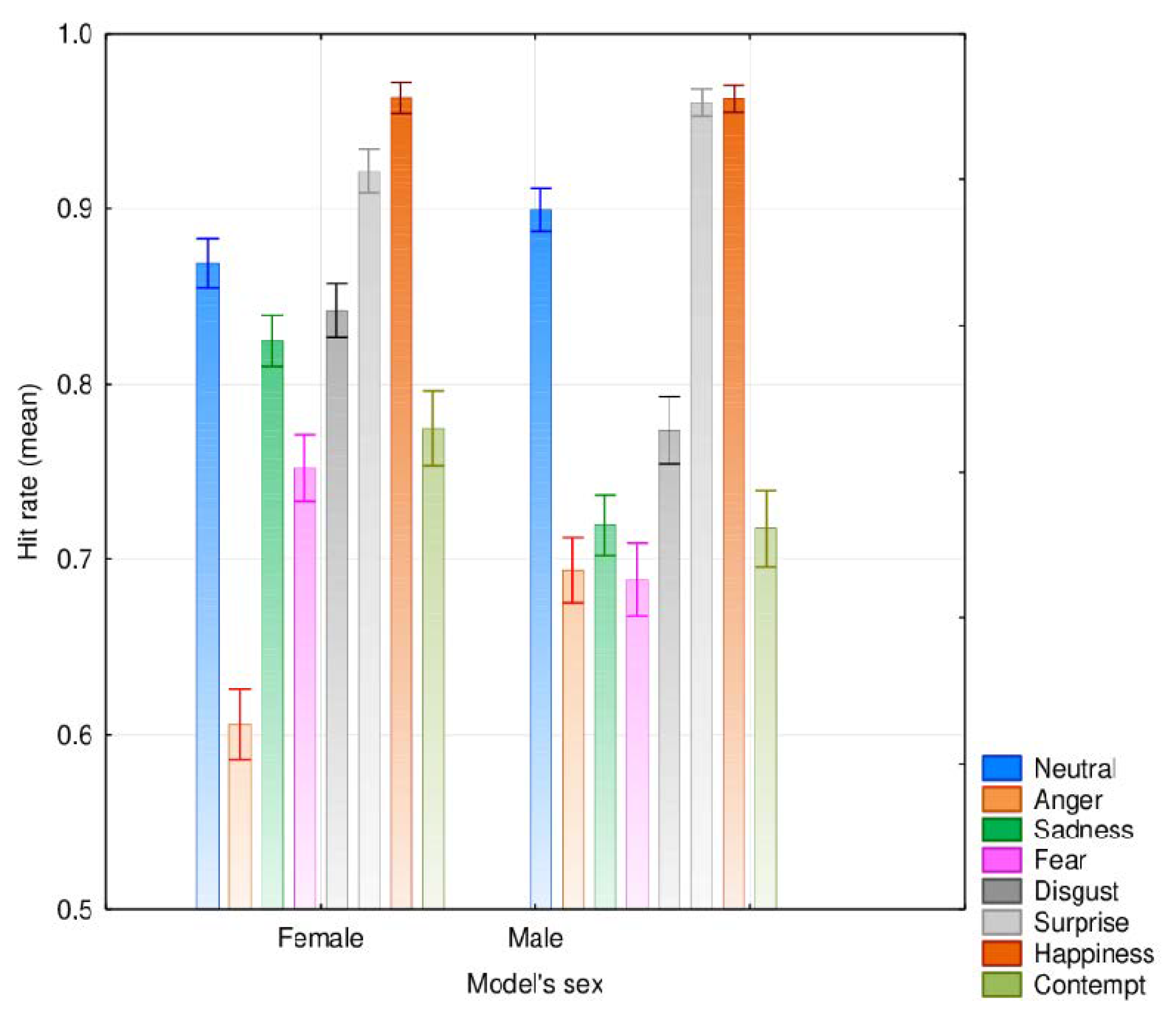

3.2. Interaction Effects on Emotion Recognition

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Erwin, R.; Gur, R.; Gur, R.; Skolnick, B.; Mawhinney-Hee, M.; Smailis, J. Facial emotion discrimination: I. Task construction and behavioral findings in normal subjects. Psychiatry Res. 1992, 42, 231–240.

- Carroll, J.M.; Russell, J.A. Do facial expressions signal specific emotions? Judging the face in context. J. Personal. Soc. Psychol. 1996, 70, 205–218.

- Gosselin, P.; Kirouac, G.; Doré, F.Y. Components and recognition of facial expression in the communication of emotion by actors. J. Personal. Soc. Psychol. 1995, 68, 83–96.

- Kappas, A.; Hess, U.; Barr, C.L.; Kleck, R.E. Angle of regard: The effect of vertical viewing angle on the perception of facial expressions. J. Nonverbal Behav. 1994, 18, 263–280.

- Motley, M.T. Facial affect and verbal context in conversation: Facial expression as interjection. Hum. Commun. Res. 1993, 20, 3–40.

- Russell, J.A. Is there universal recognition of emotion from facial expression? A review of the cross-cultural studies. Psychol. Bull. 1994, 115, 102–141.

- Gupta, R. Metacognitive rehabilitation of autobiographical overgeneral memory. J. Cogn. Rehabil. 2007, 25, 4–8.

- Gupta, R.; Kar, B.R. Interpretative bias: Indicators of cognitive vulnerability to depression. Ger. J. Psychiatry 2008, 11, 98–102.

- Gupta, R.; Kar, B.R. Attention and memory biases as stable abnormalities among currently depressed and currently remitted individuals with unipolar depression. Front. Psychiatry 2012, 3, 99.

- Gupta, R.; Kar, B.R.; Thapa, K. Specific cognitive dysfunction in ADHD: An overview. In Recent Developments in Psychology; Mukherjee, V.P., Ed.; Defense Institute of Psychological Research: Delhi, India, 2006; pp. 153–170.

- Gupta, R.; Kar, B.R.; Srinivasan, N. Development of task switching and post-error slowing in children. Behav. Brain Funct. 2009, 5, 38.

- Gupta, R.; Kar, B.R.; Srinivasan, N. Cognitive-motivational deficits in ADHD: Development of a classification system. Child Neuropsychol. 2011, 17, 67–81.

- Gupta, R.; Kar, B.R. Development of attentional processes in normal and ADHD children. Prog. Brain Res. 2009, 176, 259–276.

- Gupta, R.; Kar, B.R. Specific cognitive deficits in ADHD: A diagnostic concern in differential diagnosis. J. Child Fam. Stud. 2010, 19, 778–786.

- Choudhary, S.; Gupta, R. Culture and borderline personality disorder in India. Front. Psychol. 2020, 11, 714.

- Brewer, R.; Cook, R.; Cardi, V.; Treasure, J.; Bird, G. Emotion recognition deficits in eating disorders are explained by co-occurring alexithymia. R. Soc. Open Sci. 2015, 2, 140382.

- Gupta, R. Attentional visual and emotional mechanisms of face processing in Williams syndrome. Front. Behav. Neurosci. 2011, 5, 18.

- Kohler, C.G.; Walker, J.B.; Martin, E.A.; Healey, K.M.; Moberg, P.J. Facial emotion perception in schizophrenia: A meta-analytic review. Schizophr. Bull. 2010, 36, 1009–1019.

- Weigelt, S.; Koldewyn, K.; Kanwisher, N. Face identity recognition in autism spectrum disorders: A review of behavioral studies. Neurosci. Biobehav. Rev. 2012, 36, 257–277.

- Gendron, M.; Crivelli, C.; Barrett, L.F. Universality reconsidered: Diversity in meaning making of facial expressions. Curr. Dir. Psychol. Sci. 2018, 27, 211–219.

- Boyd, R.; Richerson, P.J.; Henrich, J. The cultural niche: Why social learning is essential for human adaptation. Proc. Natl. Acad. Sci. USA 2011, 108 (Suppl. S2), 10918–10925.

- Jackson, J.C.; Watts, J.; Henry, T.; List, J.M.; Forkel, R.; Mucha, P.J.; Greenhill, S.J.; Gray, R.D.; Lindquist, K.A. Emotion semantics show both cultural variation and universal structure. Science 2019, 366, 1517–1522.

- Kim, H.S.; Sasaki, J.Y. Cultural neuroscience: Biology of the mind in cultural context. Annu. Rev. Psychol. 2014, 65, 487–514.

- Medin, D.; Ojalehto, B.; Marin, A.; Bang, M. Systems of (non-)diversity. Nat. Hum. Behav. 2017, 1, 0088.

- Ekman, P. Universals and cultural differences in facial expressions of emotion. In Nebraska Symposium on Motivation; Cole, J., Ed.; University of Nebraska Press: Lincoln, NE, USA, 1972; pp. 207–282.

- Matsumoto, D.; Ekman, P. Japanese and Caucasian Facial Expressions of Emotion (JACFEE); Intercultural and Emotion Research Laboratory, Department of Psychology, San Francisco State University: San Francisco, CA, USA, 1988.

- Beaupré, M.G.; Cheung, N.; Hess, U. The Montreal Set of Facial Displays of Emotion; Ursula Hess, Department of Psychology, University of Quebec at Montreal: Montreal, QC, Canada, 2000.

- Wingenbach, T.S.H.; Ashwin, C.; Brosnan, M. Validation of the Amsterdam Dynamic Facial Expression Set – Bath Intensity Variations (ADFES-BIV): A Set of Videos Expressing Low, Intermediate, and High Intensity Emotions. PLoS ONE 2016, 11, e0147112.

- Hawk, S.T.; van Kleef, G.A.; Fischer, A.H.; van der Schalk, J. Worth a thousand words: Absolute and relative decoding of nonlinguistic affect vocalizations. Emotion 2009, 9, 293–305.

- Tottenham, N. MacBrain Face Stimulus Set; John D. and Catherine T. MacArthur Foundation Research Network on Early Experience and Brain Development: Chicago, IL, USA, 1998.

- Lundqvist, D.; Flykt, A.; Öhman, A. The Karolinska Directed Emotional Faces – KDEF; [CD-ROM]; Department of Clinical Neuroscience, Psychology Section, Karolinska Institute: Stockholm, Sweden, 1998.

- Langner, O.; Dotsch, R.; Bijlstra, G.; Wigboldus, D.H.J.; Hawk, S.T.; Van Knippenberg, A.D. Presentation and validation of the Radboud Face Database. Cogn. Emot. 2010, 24, 1377–1388.

- Anitha, C.; Venkatesha, M.K.; Adiga, B.S. A survey on facial expression databases. Int. J. Eng. Sci. Technol. 2010, 2, 5158–5174.

- Ekman, P.; Friesen, W.V.; Hager, J.C. Facial Action Coding System: The Manual; Research Nexus: Salt Lake City, UT, USA, 2002.

- Goeleven, E.; de Raedt, R.; Leyman, L.; Verschuere, B. The Karolinska Directed Emotional Faces: A validation study. Cogn. Emot. 2008, 22, 1094–1118.

- Moret-Tatay, C.; Fortea, I.B.; Grau-Sevilla, M.D. Challenges and insights for the visual system: Are face and word recognition two sides of the same coin? J. Neurolinguist. 2020, 56, 100941.

- Brown, A.; Maydeu-Olivares, A. Item response modeling of forced-choice questionnaires. Educ. Psychol. Meas. 2011, 71, 460–502.

- Thomas, K.M.; Drevets, W.C.; Whalen, P.J.; Eccard, C.H.; Dahl, R.E.; Ryan, N.D.; Casey, B.J. Amygdala response to facial expressions in children and adults. Biol. Psychiatry 2001, 49, 309–316.

- Gupta, R. Commentary: Neural control of vascular reactions: Impact of emotion and attention. Front. Psychol. 2016, 7, 1613.

- Gupta, R. Possible cognitive-emotional and neural mechanism of unethical amnesia. Act. Nerv. Super. 2018, 60, 18–20.

- Gupta, R. Positive emotions have a unique capacity to capture attention. Prog. Brain Res. 2019, 247, 23–46.

- Gupta, R.; Deák, G.O. Disarming smiles: Irrelevant happy faces slow post-error responses. Cogn. Process. 2015, 16, 427–434.

- Gupta, R.; Hur, Y.; Lavie, N. Distracted by pleasure: Effects of positive versus negative valence on emotional capture under load. Emotion 2016, 16, 328–337.

- Gupta, R.; Srinivasan, N. Emotion helps memory for faces: Role of whole and parts. Cogn. Emot. 2009, 23, 807–816.

- Gupta, R.; Srinivasan, N. Only irrelevant sad but not happy faces are inhibited under high perceptual load. Cogn. Emot. 2015, 29, 747–754.

- Mather, M.; Carstensen, L.L. Aging and attentional biases for emotional faces. Psychol. Sci. 2003, 14, 409–415.

- Artuso, C.; Palladino, P.; Ricciardelli, P. How do we update faces? Effects of gaze direction and facial expressions on working memory updating. Front. Psychol. 2012, 3, 362.

- Flowe, H.D. Do characteristics of faces that convey trustworthiness and dominance underlie perceptions of criminality? PLoS ONE 2012, 7, e37253.

- Lange, W.-G.; Rinck, M.; Becker, E.S. To be or not to be threatening, but what was the question? Biased face evaluation in social anxiety and depression depends on how you frame the query. Front. Psychol. 2013, 4, 205.

- Rodeheffer, C.D.; Hill, S.E.; Lord, C.G. Does this recession make me look black? The effect of resource scarcity on the categorization of biracial faces. Psychol. Sci. 2012, 23, 1476–1488.

- Sladky, R.; Baldinger, P.; Kranz, G.S.; Tröstl, J.; Höflich, A.; Lanzenberger, R.; Moser, E.; Windischberger, C. High-resolution functional MRI of the human amygdala at 7T. Eur. J. Radiol. 2013, 82, 728–733.

- Mishra, M.V.; Ray, S.B.; Srinivasan, N. Cross-cultural emotion recognition and evaluation of Radboud faces database with an Indian sample. PLoS ONE 2018, 13, e0203959.

- Abbruzzese, L.; Magnani, N.; Robertson, I.H.; Mancuso, M. Age and Gender Differences in Emotion Recognition. Front. Psychol. 2019, 10, 2371.

- Verpaalen, I.A.M.; Bijsterbosch, G.; Mobach, L.; Bijlstra, G.; Rinck, M.; Klein, A.M. Validating the Radboud faces database from a child’s perspective. Cogn. Emot. 2019, 33, 1531–1547.

- Dawel, A.; Wright, L.; Irons, J.; Dumbleton, R.; Palermo, R.; O’Kearney, R.; McKone, E. Perceived emotion genuineness: Normative ratings for popular facial expression stimuli and the development of perceived-as-genuine and perceived-as-fake sets. Behav. Res. Methods 2017, 49, 1539–1562.

- Thompson, A.E.; Voyer, D. Sex differences in the ability to recognise non-verbal displays of emotion: A meta-analysis. Cogn. Emot. 2014, 28, 1164–1195.

- Gupta, R. Distinct neural systems for men and women during emotional processing: A possible role of attention and evaluation. Front. Behav. Neurosci. 2012, 6, 86.

- Hofer, A.; Siedentopf, C.M.; Ischebeck, A.; Rettenbacher, M.A.; Verius, M.; Felber, S.; Fleischhacker, W.W. Gender differences in regional cerebral activity during the perception of emotion: A functional MRI study. Neuroimage 2006, 32, 854–862.

- Wrase, J.; Klein, S.; Gruesser, S.M.; Hermann, D.; Flor, H.; Mann, K.; Braus, D.F.; Heinz, A. Gender differences in the processing of standardized emotional visual stimuli in humans: A functional magnetic resonance imaging study. Neurosci. Lett. 2003, 348, 41–45.

- Li, H.; Yuan, J.; Lin, C. The neural mechanism underlying the female advantage in identifying negative emotions: An event-related potential study. Neuroimage 2008, 40, 1921–1929.

- American Psychological Association. APA Dictionary of Psychology, 2nd ed.; American Psychological Association: Washington, DC, USA, 2015.

- Ekman, P.; Friesen, W.V. Facial Action Coding System: A Technique for the Measurement of Facial Movement; Consulting Psychologists Press: Palo Alto, CA, USA, 1978.

- Guilhaume, A.; Navelet, Y.; Benoit, O. Respiratory pauses in nocturnal sleep of infants. Arch. Fr. Pediatr. 1981, 38, 673–677.

- Russell, J.A. Forced-choice response format in the study of facial expression. Motiv. Emot. 1993, 17, 41–51.

- Foddy, W.H. Constructing Questions for Interviews and Questionnaires: Theory and Practice in Social Research; Cambridge University Press: Cambridge, UK, 1993.

- Dores, A.R. Portuguese Normative Data of the Radboud Faces Database. Available online: https://osf.io/ne4gh/?view_only=71aadf59335b4eda981b13fb7d1d3ef5 (accessed on 29 April 2020).

- Kirouac, G.; Doré, F.Y. Accuracy of the judgment of facial expression of emotions as a function of sex and level of education. J. Nonverbal Behav. 1985, 9, 3–7.

- Montagne, B.; Kessels, R.P.C.; De Haan, E.H.F.; Perrett, D.I. The Emotion Recognition Task: A paradigm to measure the perception of facial emotional expressions at different intensities. Percept. Mot. Ski. 2007, 104, 589–598.

- Ruffman, T.; Henry, J.D.; Livingstone, V.; Phillips, L.H. A meta-analytic review of emotion recognition and aging: Implications for neuropsychological models of aging. Neurosci. Biobehav. Rev. 2008, 32, 863–881.

- Young, A.W. Facial Expression Recognition: Selected Works of Andy Young; Psychology Press: London, UK; New York, NY, USA, 2016.

- Sunday, M.A.; Patel, P.A.; Dodd, M.D.; Gauthier, I. Gender and hometown population density interact to predict face recognition ability. Vis. Res. 2019, 163, 14–23.

- Calvo, M.G.; Lundqvist, D. Facial expressions of emotion (KDEF): Identification under different display-duration conditions. Behav. Res. Methods 2008, 40, 109–115.

- Lohani, M.; Gupta, R.; Srinivasan, N. Cross-cultural evaluation of the International Affective Picture System with an Indian sample. Psychol. Stud. 2013, 58, 233–241.

- Crivelli, C.; Fridlund, A. Facial Displays Are Tools for Social Influence. Trends Cogn. Sci. 2018, 22, 388–399.

- Dawkins, R.; Krebs, J.R. Animal signals: Information or manipulation? In Behavioural Ecology: An Evolutionary Approach; Krebs, J.R., Davies, N.B., Eds.; Blackwell Scientific Publications: Oxford, UK, 1978; pp. 282–309.

- Durán, J.I.; Reisenzein, R.; Fernández-Dols, J.-M. Coherence between emotions and facial expressions: A research synthesis. In Oxford Series in Social Cognition and Social Neuroscience. The Science of Facial Expression; Fernández-Dols, J.-M., Russell, J.A., Eds.; Oxford University Press: Oxford, UK, 2017; pp. 107–129.

- Smith, C.A.; Scott, H.S. A componential approach to the meaning of facial expressions. In Studies in Emotion and Social Interaction, 2nd Series. The Psychology of Facial Expression; Russell, J.A., Fernández-Dols, J.M., Eds.; Cambridge University Press, Editions de la Maison des Sciences de L’homme: Cambridge, UK, 1997; pp. 229–254.

Agreement (%): depicted emotion (rows) by perceived emotion (columns).

| Depicted emotion | Neutral M (SD) | Anger M (SD) | Sadness M (SD) | Fear M (SD) | Disgust M (SD) | Surprise M (SD) | Happiness M (SD) | Contempt M (SD) |
|---|---|---|---|---|---|---|---|---|
| Anger | 4.9 (7.8) | 67.7 (25.3) | 7.9 (12.8) | 2.4 (2.8) | 3.3 (4.4) | 3.9 (5.0) | 0.2 (0.37) | 8.4 (7.4) |
| Sadness | 6.6 (15.0) | 2.4 (4.7) | 76.2 (24.2) | 2.7 (3.1) | 2.1 (4.0) | 1.1 (1.7) | 0.2 (0.3) | 8.1 (11.2) |
| Fear | 0.6 (1.5) | 1.2 (1.2) | 1.0 (1.5) | 73.8 (12.6) | 6.5 (8.6) | 15.1 (11.8) | 0.3 (0.5) | 0.9 (1.3) |
| Disgust | 0.4 (0.4) | 9.4 (11.8) | 0.4 (0.5) | 0.8 (0.6) | 80.9 (12.5) | 2.2 (3.2) | 0.2 (0.3) | 5.3 (2.9) |
| Surprise | 0.4 (0.4) | 0.2 (0.3) | 0.2 (0.3) | 2.5 (2.8) | 0.8 (1.3) | 94.2 (3.9) | 0.4 (0.5) | 1.0 (1.8) |
| Happiness | 0.6 (0.7) | 0.2 (0.3) | 0.3 (0.4) | 0.2 (0.3) | 0.3 (0.4) | 0.8 (0.7) | 96.8 (1.6) | 0.5 (0.6) |
| Contempt | 12.6 (10.6) | 0.8 (1.3) | 3.7 (2.4) | 0.4 (0.5) | 1.2 (0.7) | 0.7 (0.7) | 1.9 (3.9) | 78.1 (11.8) |
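Table 1 can be read as a confusion matrix: the diagonal cell in each row is the hit rate for the depicted emotion, and the off-diagonal cells show which labels it was mistaken for. The Python sketch below copies the mean (M) values from Table 1 (SDs omitted) and, for each depicted emotion, reports the hit rate, the most frequent wrong label, and the row total. It is an illustration of how to read the table, not the authors' analysis code; the names `PERCEIVED`, `TABLE_1_MEANS`, `hit_rate`, `top_confusion`, and `row_total` are hypothetical.

```python
"""Read Table 1 as a confusion matrix (illustrative sketch, not the authors' code).

Rows are depicted emotions, columns are perceived emotions; cells are the mean
agreement percentages (M) copied from Table 1. SDs are omitted.
"""

# Column order of Table 1 (perceived emotion).
PERCEIVED = ["Neutral", "Anger", "Sadness", "Fear", "Disgust",
             "Surprise", "Happiness", "Contempt"]

# Hypothetical name: mean agreement (%) per depicted emotion, in PERCEIVED order.
TABLE_1_MEANS = {
    "Anger":     [4.9, 67.7, 7.9, 2.4, 3.3, 3.9, 0.2, 8.4],
    "Sadness":   [6.6, 2.4, 76.2, 2.7, 2.1, 1.1, 0.2, 8.1],
    "Fear":      [0.6, 1.2, 1.0, 73.8, 6.5, 15.1, 0.3, 0.9],
    "Disgust":   [0.4, 9.4, 0.4, 0.8, 80.9, 2.2, 0.2, 5.3],
    "Surprise":  [0.4, 0.2, 0.2, 2.5, 0.8, 94.2, 0.4, 1.0],
    "Happiness": [0.6, 0.2, 0.3, 0.2, 0.3, 0.8, 96.8, 0.5],
    "Contempt":  [12.6, 0.8, 3.7, 0.4, 1.2, 0.7, 1.9, 78.1],
}


def hit_rate(depicted: str) -> float:
    """Diagonal cell: % of responses that matched the depicted emotion."""
    return TABLE_1_MEANS[depicted][PERCEIVED.index(depicted)]


def top_confusion(depicted: str) -> tuple[str, float]:
    """Largest off-diagonal cell: the most frequent wrong label and its %."""
    row = TABLE_1_MEANS[depicted]
    wrong = [(label, value) for label, value in zip(PERCEIVED, row)
             if label != depicted]
    return max(wrong, key=lambda pair: pair[1])


def row_total(depicted: str) -> float:
    """Sum of one row, rounded to one decimal place."""
    return round(sum(TABLE_1_MEANS[depicted]), 1)


if __name__ == "__main__":
    for emotion in TABLE_1_MEANS:
        label, value = top_confusion(emotion)
        print(f"{emotion:<10} hit {hit_rate(emotion):5.1f}%  "
              f"most confused with {label} ({value}%)  "
              f"row total {row_total(emotion)}")
```

With these values the largest confusions are Fear read as Surprise (15.1), Contempt read as Neutral (12.6), and Disgust read as Anger (9.4). The rows total between 98.7 and 99.7 rather than exactly 100; this excerpt does not say whether the remainder reflects rounding of averaged percentages or responses outside the eight listed labels.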

Agreement (%) by emotion: Dores et al., 2020 [66] versus Langner et al., 2010 [32].

| Emotion | Dores et al., 2020 [66] M | Dores et al., 2020 [66] SD | Langner et al., 2010 [32] M | Langner et al., 2010 [32] SD | t | df |
|---|---|---|---|---|---|---|
| Neutral | 88.3 | 9.7 | 83.0 | 13.0 | 7.65 *** | 345 |
| Anger | 67.7 | 25.0 | 81.0 | 19.0 | 8.32 *** | 509 |
| Sadness | 76.2 | 24.0 | 85.9 | 16.0 | 6.41 *** | 584 |
| Fear | 73.4 | 13.0 | 88.0 | 7.0 | 18.04 *** | 757 |
| Disgust | 80.9 | 12.0 | 79.0 | 10.0 | 2.45 * | 467 |
| Surprise | 94.2 | 4.0 | 90.0 | 9.0 | 11.99 *** | 299 |
| Happiness | 96.8 | 2.0 | 98.0 | 3.0 | 8.15 *** | 330 |
| Contempt | 78.1 | 12.0 | 48.0 | 12.0 | 37.71 *** | 1523 |
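Table 2 reports, for each emotion, an independent-samples t statistic comparing the present norms with those of Langner et al. [32]. The excerpt does not state which t-test variant was used or the sample sizes behind each comparison; the df column varies from 299 to 1523 across rows, which is consistent with, but does not confirm, a Welch (unequal-variance) test with Welch–Satterthwaite degrees of freedom. The Python sketch below assumes that variant, shows how t and df follow from M, SD, and n, and computes the mean differences directly from Table 2. The names `TABLE_2`, `welch_t`, and `mean_difference` are hypothetical, and the sample sizes in the demo loop are placeholders that will not reproduce the published t or df.

```python
"""Welch's t-test from summary statistics (illustrative sketch).

Assumption: Table 2 used an unequal-variance (Welch) test; the excerpt does not
say so, and it does not give the sample sizes.
"""
import math

# Table 2: (M, SD) for Dores et al., 2020 [66], then (M, SD) for Langner et al., 2010 [32].
TABLE_2 = {
    "Neutral":   (88.3, 9.7, 83.0, 13.0),
    "Anger":     (67.7, 25.0, 81.0, 19.0),
    "Sadness":   (76.2, 24.0, 85.9, 16.0),
    "Fear":      (73.4, 13.0, 88.0, 7.0),
    "Disgust":   (80.9, 12.0, 79.0, 10.0),
    "Surprise":  (94.2, 4.0, 90.0, 9.0),
    "Happiness": (96.8, 2.0, 98.0, 3.0),
    "Contempt":  (78.1, 12.0, 48.0, 12.0),
}


def welch_t(m1: float, sd1: float, n1: int,
            m2: float, sd2: float, n2: int) -> tuple[float, float]:
    """Welch's t and Welch-Satterthwaite df for two independent groups."""
    v1 = sd1 ** 2 / n1  # squared standard error of the first mean
    v2 = sd2 ** 2 / n2  # squared standard error of the second mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


def mean_difference(emotion: str) -> float:
    """Present norms minus Langner et al. norms, in percentage points."""
    m1, _, m2, _ = TABLE_2[emotion]
    return round(m1 - m2, 1)


if __name__ == "__main__":
    # HYPOTHETICAL sample sizes: placeholders only, not taken from the article.
    n_present, n_langner = 100, 100
    for emotion, (m1, sd1, m2, sd2) in TABLE_2.items():
        t, df = welch_t(m1, sd1, n_present, m2, sd2, n_langner)
        print(f"{emotion:<10} diff {mean_difference(emotion):+6.1f}  "
              f"t {t:+7.2f}  df {df:7.1f}  (placeholder n)")
```

From the table itself, four emotions (Anger, Sadness, Fear, Happiness) show lower agreement in the present norms than in Langner et al., and Contempt shows the largest gap (+30.1 points). Because Table 2 lists every t as positive regardless of the direction of the difference, the t column appears to report absolute values.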

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Dores, A.R.; Barbosa, F.; Queirós, C.; Carvalho, I.P.; Griffiths, M.D. Recognizing Emotions through Facial Expressions: A Largescale Experimental Study. Int. J. Environ. Res. Public Health 2020, 17, 7420. https://doi.org/10.3390/ijerph17207420

Dores AR, Barbosa F, Queirós C, Carvalho IP, Griffiths MD. Recognizing Emotions through Facial Expressions: A Largescale Experimental Study. International Journal of Environmental Research and Public Health. 2020; 17(20):7420. https://doi.org/10.3390/ijerph17207420

Chicago/Turabian Style: Dores, Artemisa R., Fernando Barbosa, Cristina Queirós, Irene P. Carvalho, and Mark D. Griffiths. 2020. "Recognizing Emotions through Facial Expressions: A Largescale Experimental Study." International Journal of Environmental Research and Public Health 17, no. 20: 7420. https://doi.org/10.3390/ijerph17207420