A major task for producing the Re-Pair grammar is to count the frequencies of the most frequent bigrams. Our workhorse for this task is a frequency table. A frequency table in $T_i$ of length $f$ stores pairs of the form $(bc, x)$, where $bc$ is a bigram and $x$ the frequency of $bc$ in $T_i$. It uses $f\lceil\lg(\sigma_i^2 n_i)\rceil$ bits of space since an entry stores a bigram consisting of two characters from $\Sigma_i$ and its respective frequency, which can be at most $n_i$. Throughout this paper, we use an elementary in-place sorting algorithm like heapsort, which sorts an array of length $n$ in $O(n \lg n)$ time with $O(1)$ additional words of space.
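A minimal sketch of these two ingredients, assuming a frequency table is modelled as a Python list of `(bigram, frequency)` pairs. `entry_bits` is a hypothetical helper computing the per-entry size $\lceil\lg(\sigma^2 n)\rceil$ with exact integer arithmetic, and `heapsort` sorts the table in place with a constant number of extra slots, here by frequency.

```python
def entry_bits(sigma: int, n: int) -> int:
    """Bits for one entry: a bigram over an alphabet of size sigma plus a frequency up to n."""
    return (sigma * sigma * n - 1).bit_length()  # exact ceil(lg(sigma^2 * n)) for sigma^2 * n >= 1


def heapsort(a, key=lambda x: x):
    """Sort list a in place in O(len(a) lg len(a)) time, using O(1) extra slots."""
    def sift_down(root, end):
        # Move a[root] down until the max-heap property holds within a[:end].
        while True:
            child = 2 * root + 1
            if child >= end:
                return
            if child + 1 < end and key(a[child + 1]) > key(a[child]):
                child += 1
            if key(a[root]) >= key(a[child]):
                return
            a[root], a[child] = a[child], a[root]
            root = child

    n = len(a)
    for root in range(n // 2 - 1, -1, -1):  # build a max-heap bottom-up
        sift_down(root, n)
    for end in range(n - 1, 0, -1):         # move the current maximum behind the heap
        a[0], a[end] = a[end], a[0]
        sift_down(0, end)


table = [(("a", "b"), 3), (("b", "a"), 1), (("b", "c"), 2)]
heapsort(table, key=lambda entry: entry[1])
print(table)              # [(('b', 'a'), 1), (('b', 'c'), 2), (('a', 'b'), 3)]
print(entry_bits(4, 16))  # 8
```

Heapsort is chosen here because, unlike Python's built-in `sorted`, it needs no auxiliary array, which mirrors the in-place requirement of the text.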

2.1. Trade-Off Computation

Using the frequency tables, we present a solution with a trade-off parameter:

Lemma 2. Given an integer $d$ with $d \ge 1$, we can compute the frequencies of the $d$ most frequent bigrams in a text of length $n$ whose characters are drawn from an alphabet of size $\sigma$ in $O(\max(n, d) \cdot n \lg d / d)$ time using $2d\lceil\lg(\sigma^2 n)\rceil + O(\lg n)$ bits.

Proof. Our idea is to partition the set of all bigrams appearing in $T$ into $\lceil n/d \rceil$ subsets, compute the frequencies for each subset, and finally merge these frequencies. In detail, we partition the text $T$ into $\lceil n/d \rceil$ substrings $S_1, \ldots, S_{\lceil n/d \rceil}$ such that each substring has length $d$ (the last one has a length of at most $d$). Subsequently, we extend $S_j$ to the left (only if $j > 1$) such that $S_{j-1}$ and $S_j$ overlap by one text position, for $1 < j \le \lceil n/d \rceil$. By doing so, we take into account the bigram on the border of two adjacent substrings $S_{j-1}$ and $S_j$ for each $j > 1$. Next, we create two frequency tables $F$ and $F'$, each of length $d$ for storing the frequencies of $d$ bigrams. Both tables are empty at the beginning. In what follows, we fill $F$ such that after processing $S_j$, $F$ stores the $d$ most frequent bigrams among those bigrams occurring in $S_1, \ldots, S_j$, while $F'$ acts as a temporary space for storing candidate bigrams that can enter $F$.

With $F$ and $F'$, we process each of the $\lceil n/d \rceil$ substrings $S_j$ as follows: Let us fix an integer $j$ with $1 \le j \le \lceil n/d \rceil$. We first put all bigrams of $S_j$ into $F'$ in lexicographic order. We can perform this within the space of $F'$ in $O(d \lg d)$ time since there are at most $d$ different bigrams in $S_j$. We compute the frequencies of all these bigrams in the complete text $T$ in $O(n \lg d)$ time by scanning the text from left to right while locating a bigram in $F'$ in $O(\lg d)$ time with a binary search. Subsequently, we interpret $F$ and $F'$ as one large frequency table and sort it with respect to the frequencies while discarding duplicates, such that $F$ stores the $d$ most frequent bigrams in $S_1, \ldots, S_j$. This sorting step can be done in $O(d \lg d)$ time. Finally, we clear $F'$ and are done with $S_j$. After the final merge step, $F$ stores the $d$ most frequent bigrams of $T$.

Since each of the $\lceil n/d \rceil$ merge steps takes $O((n + d) \lg d)$ time, we need $O(\max(n, d) \cdot n \lg d / d)$ time in total. For $d \ge n$, we can instead build one large frequency table and perform one scan to count the frequencies of all bigrams in $T$. This scan and the final sorting with respect to the counted frequencies can be done in $O(n \lg n)$ time. □
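A minimal Python sketch of this batched counting scheme, under simplifying assumptions: bigram frequencies count non-overlapping occurrences greedily from the left (so a run `aaa` contributes one occurrence of `aa`), blocks are formed from $d$ consecutive bigram start positions (equivalent to extending each substring to the left by one position), and the merge of $F$ and $F'$ uses a temporary dictionary rather than sorting both tables in place. The name `top_d_bigrams` is hypothetical, and ties are broken lexicographically, a choice the lemma leaves open.

```python
from bisect import bisect_left


def top_d_bigrams(text, d):
    """Return up to d (bigram, frequency) pairs of text with the highest frequencies."""
    n = len(text)
    F = []  # running table F of (bigram, frequency), at most d entries
    for start in range(0, n - 1, d):
        # Block S_j: bigrams starting at start..start+d-1 (overlaps the next block by one position).
        block = text[start:min(n, start + d + 1)]
        # F': the distinct bigrams of the block in lexicographic order (at most d of them).
        cand = sorted({(block[p], block[p + 1]) for p in range(len(block) - 1)})
        freq = [0] * len(cand)
        last = [-2] * len(cand)  # start of the last counted occurrence, to skip overlaps
        for p in range(n - 1):   # one scan over the complete text
            bg = (text[p], text[p + 1])
            idx = bisect_left(cand, bg)  # binary search in F'
            if idx < len(cand) and cand[idx] == bg and p >= last[idx] + 2:
                freq[idx] += 1
                last[idx] = p
        # Merge F and F': duplicates carry identical frequencies, then keep the d largest.
        merged = dict(F)
        merged.update(zip(cand, freq))
        F = sorted(merged.items(), key=lambda e: (-e[1], e[0]))[:d]
    return F


print(top_d_bigrams("abcabcab", 2))  # [(('a', 'b'), 3), (('b', 'c'), 2)]
```

Each block costs one full text scan with $O(\lg d)$ per lookup, which is where the $n \lg d$ factor per merge step comes from.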

2.2. Algorithmic Ideas

With Lemma 2, we can compute $T_m$ in $O(m n^2 \lg d / d)$ time with $2d\lceil\lg(\sigma_m^2 n)\rceil + O(\lg n)$ additional bits of working space on top of the text for a parameter $d$ with $1 \le d \le n$. (The variable $\tau$ used in the abstract and in the introduction is interchangeable with $\sigma_m$, i.e., $\tau = \sigma_m$.) In the following, we show how this leads us to our first algorithm computing Re-Pair:

Theorem 1. We can compute Re-Pair on a string of length $n$ in $O(n^2)$ time with $\max(n\lceil\lg\tau\rceil, (n/c)\lg n) + O(\lg n)$ bits of working space including the text space as a rewritable part in the working space, where $c \ge 1$ is a fixed constant and $\tau = \sigma_m$ is the sum of the alphabet size $\sigma$ and the number of non-terminal symbols.

In our model, we assume that we can enlarge the text $T_i$ from $n_i\lceil\lg\sigma_i\rceil$ bits to $n_i\lceil\lg\sigma_{i+1}\rceil$ bits without additional extra memory. Our main idea is to store a growing frequency table using the space freed up by replacing bigrams with non-terminals. In detail, we maintain a frequency table $F$ in $T_i$ of length $f_k$ for a growing variable $f_k$, which is set to $f_0 := O(1)$ in the beginning. The table $F$ takes $f_k\lceil\lg(\sigma_i^2 n_i)\rceil$ bits, which is $O(\lg(\sigma^2 n))$ bits for $f_0 = O(1)$. When we want to query it for a most frequent bigram, we linearly scan $F$ in $O(f_k) = O(n)$ time, which is not a problem since (a) the number of queries is $m = O(n)$ and (b) we aim for $O(n^2)$ as the overall running time. A consequence is that there is no need to sort the bigrams in $F$ according to their frequencies, which simplifies the following discussion.

Frequency table F: With Lemma 2, we can compute $F$ in $O(n^2 \lg f_k / f_k)$ time. Instead of recomputing $F$ on every turn $i$, we want to recompute it only when it no longer stores a most frequent bigram. However, it is not obvious when this happens, as replacing a most frequent bigram during a turn (a) removes this entry from $F$ and (b) can reduce the frequencies of other bigrams in $F$, possibly making them less frequent than other bigrams not tracked by $F$. Hence, the variable $i$ for the $i$-th turn (creating the $i$-th non-terminal) and the variable $k$ for recomputing the frequency table $F$ the $(k+1)$-st time are only loosely connected. We group together all turns with the same $k$ and call this group the $k$-th round of the algorithm. At the beginning of each round, we enlarge $f_k$ and create a new $F$ with a capacity for $f_k$ bigrams. Since a recomputation of $F$ is costly, we want to end a round only if $F$ is no longer useful, i.e., when we can no longer guarantee that $F$ stores a most frequent bigram. To achieve our claimed time bounds, we want to assign all $m$ turns to $O(\lg n)$ different rounds, which can only be done if $f_k$ grows sufficiently fast.

Algorithm outline: At the beginning of the $k$-th round and the $i$-th turn, we compute the frequency table $F$ storing $f_k$ bigrams and additionally keep the lowest frequency of $F$ as a threshold $t_k$, which is treated as a constant during this round. During the computation of the $i$-th turn, we replace the most frequent bigram (say, $bc \in \Sigma_i^2$) in the text $T_i$ with a non-terminal $X_{i+1}$ to produce $T_{i+1}$. Thereafter, we remove $bc$ from $F$, update those frequencies in $F$ that were decreased by the replacement of $bc$ with $X_{i+1}$, and add each bigram containing the new character $X_{i+1}$ into $F$ if its frequency is at least $t_k$. Whenever a frequency in $F$ drops below $t_k$, we discard it. If $F$ becomes empty, we move to the $(k+1)$-st round and create a new $F$ for storing $f_{k+1}$ frequencies. Otherwise ($F$ still stores an entry), we can be sure that $F$ stores a most frequent bigram. In both cases, we recurse with the $(i+1)$-st turn by selecting the bigram with the highest frequency stored in $F$. We show in Algorithm 1 the pseudocode of this outlined algorithm. In the following, we describe how we update $F$ and how large $f_k$ can at least become.

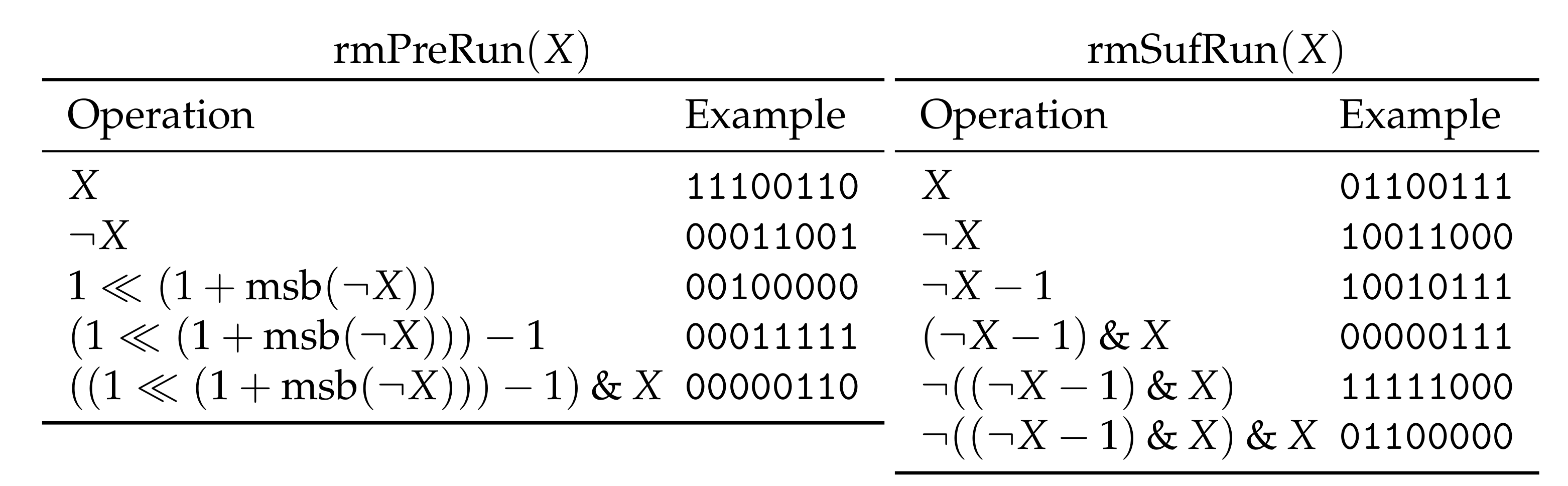

| Algorithm 1: Algorithmic outline of our proposed algorithm working on a text T with a growing frequency table F. The constants and are explained in Section 2.3. The same section shows that the outer while loop is executed times. |

![Algorithms 14 00005 i001]() |
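The round/turn structure of the outline can be illustrated by a simplified, hypothetical Python sketch. It is not the space-efficient algorithm: it works on Python lists instead of a bit-packed text, recounts all frequencies with a full scan after each replacement instead of the incremental updates of Section 2.3, encodes non-terminals as integers starting right after the largest character code, and does not cap the size of $F$ within a round. The parameters `f0` and `growth` are illustrative choices, not the constants of Section 2.3.

```python
def pair_counts(T):
    """Frequencies of all bigrams in T, counting non-overlapping occurrences greedily from the left."""
    counts, last = {}, {}
    for p in range(len(T) - 1):
        bg = (T[p], T[p + 1])
        if p >= last.get(bg, -2) + 2:  # skip occurrences overlapping the previous one ("aaa")
            counts[bg] = counts.get(bg, 0) + 1
            last[bg] = p
    return counts


def replace_pair(T, bg, X):
    """Replace all non-overlapping occurrences of bigram bg in T (left to right) by X."""
    out, p = [], 0
    while p < len(T):
        if p + 1 < len(T) and (T[p], T[p + 1]) == bg:
            out.append(X)
            p += 2
        else:
            out.append(T[p])
            p += 1
    return out


def repair_rounds(text, f0=2, growth=2):
    """Simplified sketch of the rounds (k) and turns (i) of Algorithm 1."""
    T = [ord(ch) for ch in text]
    base = max(T, default=0) + 1  # id of the first non-terminal (hypothetical encoding)
    rules = []                    # rules[i] = right-hand side of non-terminal base + i
    f = f0
    while True:                   # one iteration per round k
        counts = pair_counts(T)
        top = sorted((e for e in counts.items() if e[1] >= 2), key=lambda e: (-e[1], e[0]))[:f]
        if not top:               # no bigram occurs twice: done
            return T, rules, base
        F = dict(top)
        t = min(F.values())       # threshold t_k, fixed during this round
        while F:                  # one iteration per turn i
            bc = max(F, key=F.get)  # linear scan of F for a most frequent bigram
            X = base + len(rules)
            rules.append(bc)
            T = replace_pair(T, bc, X)
            counts = pair_counts(T)  # simplification: full recount
            del F[bc]
            for bg in list(F):       # decreased frequencies; drop entries below t
                F[bg] = counts.get(bg, 0)
                if F[bg] < t:
                    del F[bg]
            for bg, freq in counts.items():  # new bigrams with X enter F if frequent enough
                if X in bg and freq >= t:
                    F[bg] = freq
        f *= growth               # the next round gets a larger table
```

Because frequencies of bigrams without $X_{i+1}$ can only decrease, every bigram outside $F$ stays at or below $t_k$ in this sketch, so a non-empty $F$ always contains a most frequent bigram. Calling `repair_rounds("abracadabra abracadabra")` returns the final text, the rules, and the id of the first non-terminal.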

2.3. Algorithmic Details

Suppose that we are in the $k$-th round and in the $i$-th turn. Let $t_k$ be the lowest frequency in $F$ computed at the beginning of the $k$-th round. We keep $t_k$ as a constant threshold for the invariant that all frequencies in $F$ are at least $t_k$ during the $k$-th round. With this threshold, we can ensure in the following that $F$ is either empty or stores a most frequent bigram.

Now suppose that the most frequent bigram of $T_i$ is $bc$, which is stored in $F$. To produce $T_{i+1}$ (and hence advance to the $(i+1)$-st turn), we enlarge the space of $T_i$ from $n_i\lceil\lg\sigma_i\rceil$ to $n_i\lceil\lg\sigma_{i+1}\rceil$ bits and replace all occurrences of $bc$ in $T_i$ with a new non-terminal $X_{i+1}$. Subsequently, we would like to take the next bigram of $F$. For that, however, we need to update the stored frequencies in $F$. To see this necessity, suppose that there is an occurrence of $abcd$ with two characters $a, d \in \Sigma_i$ in $T_i$. By replacing $bc$ with $X_{i+1}$,

1. the frequencies of $ab$ and $cd$ decrease by one (for the border case $a = b = c$ (resp. $b = c = d$), there is no need to decrement the frequency of $ab$ (resp. $cd$)), and
2. the frequencies of $aX_{i+1}$ and $X_{i+1}d$ increase by one.

Updating F. We can take care of the former changes (1) by decreasing the frequency of the respective bigram in $F$ (in case it is present). If the frequency of this bigram drops below the threshold $t_k$, we remove it from $F$, as there may be bigrams with a higher frequency that are not present in $F$. To cope with the latter changes (2), we track the characters adjacent to $X_{i+1}$ after the replacement, count them, and add their respective bigrams to $F$ if their frequencies are sufficiently high. In detail, suppose that we have substituted $bc$ with $X_{i+1}$ exactly $h$ times. Consequently, with the new text $T_{i+1}$ we have additionally $h\lceil\lg\sigma_{i+1}\rceil$ bits of free space (the free space is consecutive after shifting all characters to the left), which we call $D$ in the following. Subsequently, we scan the text and put the characters of $\Sigma_{i+1}$ appearing to the left of each of the $h$ occurrences of $X_{i+1}$ into $D$. After sorting the characters in $D$ lexicographically, we can count the frequency of $aX_{i+1}$ for each character $a$ preceding an occurrence of $X_{i+1}$ in the text by scanning $D$ linearly. If the obtained frequency of such a bigram is at least the threshold $t_k$, we insert $aX_{i+1}$ into $F$ and subsequently discard a bigram with the currently lowest frequency in $F$ if the size of $F$ has become $f_k + 1$. In case we visit a run of $X_{i+1}$'s during the creation of $D$, we must take care not to count overlapping occurrences of $X_{i+1}X_{i+1}$. Finally, we can analogously count the occurrences of $X_{i+1}d$ for all characters $d$ succeeding an occurrence of $X_{i+1}$.
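The counting of the new bigrams can be sketched as follows, under stated assumptions: the text is a Python list, `X` is the new non-terminal, and the lists `left` and `right` play the role of the free space $D$ (each holds at most $h$ characters). The helper name `new_bigram_counts` is hypothetical; for brevity, it collects left and right neighbours in a single scan instead of two passes, sorts copies instead of sorting in place, and counts $X_{i+1}X_{i+1}$ only once, as a left neighbour.

```python
def new_bigram_counts(T, X):
    """Frequencies of all bigrams containing the new symbol X in T.

    Neighbours of X are collected into buffers (playing the role of D),
    sorted so that equal characters become adjacent, and counted by one
    linear scan. In runs of X, overlapping XX occurrences are skipped.
    """
    def run_lengths(D):
        # D is sorted: count each maximal block of equal characters.
        out, i = {}, 0
        while i < len(D):
            j = i
            while j < len(D) and D[j] == D[i]:
                j += 1
            out[D[i]] = j - i
            i = j
        return out

    left, right = [], []
    last_xx = -2  # start of the last counted XX occurrence
    for p in range(len(T) - 1):
        a, b = T[p], T[p + 1]
        if a == X and b == X:
            if p >= last_xx + 2:  # non-overlapping occurrence inside a run of X
                left.append(X)    # count XX once, as "left neighbour X"
                last_xx = p
        elif b == X:
            left.append(a)        # character preceding an occurrence of X
        elif a == X:
            right.append(b)       # character succeeding an occurrence of X
    counts = {(a, X): k for a, k in run_lengths(sorted(left)).items()}
    counts.update({(X, d): k for d, k in run_lengths(sorted(right)).items()})
    return counts
```

For `list("aXbXXXaX")` this yields two occurrences of `aX` and a single `XX` for the run `XXX`; each resulting bigram with frequency at least $t_k$ would then be inserted into $F$.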

Capacity of F: After the above procedure, we have updated the frequencies of $F$. When $F$ becomes empty, all bigrams stored in $F$ have been replaced or have a frequency that has dropped below $t_k$. Subsequently, we end the $k$-th round and continue with the $(k+1)$-st round by (a) creating a new frequency table $F$ with capacity $f_{k+1}$ and (b) setting the new threshold $t_{k+1}$ to the minimal frequency in $F$. In what follows, we (a) analyze in detail when $F$ becomes empty (as this determines the sizes $f_k$ and $f_{k+1}$) and (b) show that, for the sake of our aimed total running time, we can compensate for the number of discarded bigrams with an enlargement of $F$'s capacity from $f_k$ bigrams to $f_{k+1}$ bigrams.

Next, we analyze how many characters we have to free up (i.e., how many bigram occurrences we have to replace) to gain enough space for storing an additional frequency. Let $\delta_i := \lceil\lg(\sigma_{i+1}^2 n_i)\rceil$ be the number of bits needed to store one entry in $F$, and let $\beta_i := \min(\delta_i / \lceil\lg\sigma_{i+1}\rceil, c\delta_i / \lg n)$ be the minimum number of characters that need to be freed to store one frequency in this space. To understand the value of $\beta_i$, we look at the arguments of the minimum function in the definition of $\beta_i$ and simultaneously at the maximum function in our aimed working space of $\max(n\lceil\lg\tau\rceil, (n/c)\lg n) + O(\lg n)$ bits (cf. Theorem 1):

1. The first item in this maximum function allows us to spend $\lceil\lg\sigma_{i+1}\rceil$ bits for each freed character, such that we obtain space for one additional entry in $F$ after freeing $\delta_i / \lceil\lg\sigma_{i+1}\rceil$ characters.
2. The second item allows us to use $\lg n$ additional bits after freeing up $c$ characters. This additional treatment helps us to let $f_k$ grow sufficiently fast in the first steps to preserve our $O(n^2)$ time bound: for sufficiently small alphabets and large text sizes, $\delta_i / \lceil\lg\sigma_{i+1}\rceil = \Theta(\lg n)$, which means that we might run the first $O(\lg n)$ turns with $f_k = O(1)$ and, therefore, already spend $O(n^2 \lg n)$ time. Hence, after freeing up $c\delta_i / \lg n$ characters, we have space to store one additional entry in $F$.

With $\beta_i = O(1)$, we have the sufficient condition that replacing a constant number of characters gives us enough space for storing an additional frequency.
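To make the two terms concrete, the following sketch evaluates the number of characters to free per additional entry for a few parameter choices. It assumes, as a reading of the two items above, that an entry takes $\lceil\lg(\sigma^2 n)\rceil$ bits and that each freed character yields either $\lceil\lg\sigma\rceil$ bits (first term) or $(\lg n)/c$ bits (second term), whichever is more favourable. The name `chars_per_entry` is hypothetical, and $n \ge 2$ is required.

```python
import math


def chars_per_entry(sigma: int, n: int, c: int) -> int:
    """Hypothetical helper: characters to free before one more entry of F fits.

    Assumes an entry needs ceil(lg(sigma^2 * n)) bits and that every freed
    character provides either ceil(lg sigma) bits (first term of the maximum)
    or (lg n) / c bits (second term); the larger budget is used.
    """
    delta = (sigma * sigma * n - 1).bit_length()     # ceil(lg(sigma^2 n)), exact for ints >= 1
    bits_text = max(1, (sigma - 1).bit_length())     # ceil(lg sigma), at least one bit
    via_text = -(-delta // bits_text)                # ceil(delta / ceil(lg sigma))
    via_budget = math.ceil(c * delta / math.log2(n))  # ceil(c * delta / lg n)
    return min(via_text, via_budget)


# A 4-letter alphabet: the first term alone would require 12 characters per entry.
print(chars_per_entry(4, 2**20, 1))      # 2
print(chars_per_entry(4, 2**20, 4))      # 5
print(chars_per_entry(2**16, 2**20, 1))  # 3
```

For the small alphabet, the second term reduces the count from 12 to a small constant, which is the effect the second item describes.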

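If we assume that replacing the occurrences of a bigram stored in $F$ does not decrease the other frequencies stored in $F$, the analysis is simple: Since each bigram in $F$ has a frequency of at least two, emptying $F$ frees at least $2f_k$ characters, and thus $f_{k+1} \ge f_k + 2f_k/\beta$, where $\beta$ denotes the (maximum) number of characters that need to be freed to store one additional entry of $F$. Since $\beta = O(1)$, this lets $f_k$ grow exponentially, meaning that we need $O(\lg n)$ rounds. In what follows, we show that this is also true in the general case.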
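Unrolling this simplified recurrence shows where the $O(\lg n)$ bound on the number of rounds comes from. The derivation below assumes, as in the paragraph above, that no stored frequency is decreased, that $\beta \ge 1$ is a constant, and that $f_k$ never exceeds $n$:

```latex
\begin{align*}
f_{k+1} &\ge f_k + \frac{2 f_k}{\beta} = \left(1 + \frac{2}{\beta}\right) f_k
  \quad\Longrightarrow\quad f_k \ge \left(1 + \frac{2}{\beta}\right)^{k} f_0, \\
f_k \le n &\quad\Longrightarrow\quad
  k \le \log_{1 + 2/\beta} \frac{n}{f_0} = \frac{\lg(n / f_0)}{\lg(1 + 2/\beta)} = O(\lg n)
  \quad\text{since } \beta = O(1).
\end{align*}
```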

Lemma 3. Given that the frequencies of all bigrams in $F$ drop below the threshold $t_k$ after replacing the most frequent bigram $bc$, the frequency of $bc$ has to be at least $\max(2, (|F| - 1)/2)$, where $|F|$ is the number of frequencies stored in $F$.

Proof. If the frequency of $bc$ in $T_i$ is $x$, then the replacement can reduce at most $2x$ frequencies of other bigrams (both the left character and the right character of each occurrence of $bc$ can contribute to an occurrence of another bigram). Since a bigram must occur at least twice in $T_i$ to be present in $F$, the frequency of $bc$ has to be at least $\max(2, (|F| - 1)/2)$ for discarding all bigrams of $F$. □
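Written out as a counting argument (a sketch spelling out the proof above, with $x$ the frequency of $bc$, and using that each of the other $|F| - 1$ entries, having frequency at least $t_k$, needs at least one decrement to fall below $t_k$, while $x \ge t_k \ge 2$):

```latex
\underbrace{|F| - 1}_{\text{entries that must fall below } t_k}
\;\le\; \#\text{decrements} \;\le\; 2x
\quad\Longrightarrow\quad
x \;\ge\; \max\!\left(2,\; \frac{|F| - 1}{2}\right).
```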

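Suppose that we have enough space available for storing the frequencies of $\alpha f_k$ bigrams, where $\alpha$ is a constant such that $F$ and the working space of Lemma 2 with $d = f_k$ can be stored within this space. Combining the number of characters that have to be freed per additional entry with Lemma 3 for $|F| = f_k$ gives a lower bound on $f_{k+1}$ in terms of $f_k$, where we use the equivalence $(f_k - 1)/2 \ge 2 \iff f_k \ge 5$ to estimate the two arguments of the maximum function.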

Since $f_k$ grows by at least a constant factor greater than one with each recomputation of $F$, $f_k$ grows exponentially in $k$, and therefore $f_k = \Theta(n)$ after $O(\lg n)$ rounds. Consequently, after reaching $f_k = \Theta(n)$, we can iterate the above procedure a constant number of times to compute the non-terminals of the remaining bigrams occurring at least twice.

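Time analysis: In total, we have $O(\lg n)$ rounds. At the start of the $k$-th round, we compute $F$ with the algorithm of Lemma 2 with $d = f_k \le n$ on a text of length at most $n$ in $O(n^2 \lg f_k / f_k)$ time. Summing this up over all rounds, we get $\sum_{k=0}^{O(\lg n)} O(n^2 \lg f_k / f_k) = O(n^2)$ time, since $f_k$ grows exponentially in $k$.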
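A short derivation of why the recomputation costs sum to $O(n^2)$, under the assumptions that $f_k \ge f_0\gamma^k$ for some constant $\gamma > 1$ (the exponential growth of $f_k$) and $f_0 \ge 3$, so that $x \mapsto \lg x / x$ is non-increasing on the relevant range:

```latex
\sum_{k \ge 0} \frac{n^2 \lg f_k}{f_k}
\;\le\; n^2 \sum_{k \ge 0} \frac{\lg f_0 + k \lg \gamma}{f_0\, \gamma^{k}}
\;=\; \frac{n^2}{f_0}\left( \frac{\gamma \lg f_0}{\gamma - 1} + \frac{\gamma \lg \gamma}{(\gamma - 1)^2} \right)
\;=\; O(n^2).
```

The equality uses $\sum_{k \ge 0} \gamma^{-k} = \gamma/(\gamma - 1)$ and $\sum_{k \ge 0} k\gamma^{-k} = \gamma/(\gamma - 1)^2$.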

In the $i$-th turn, we update $F$ by decreasing the frequencies of the bigrams affected by the substitution of the most frequent bigram $bc$ with $X_{i+1}$. For decreasing such a frequency, we look up its respective bigram with a linear scan in $F$, which takes $O(f_k) = O(n)$ time. However, since each such decrease is accompanied by the replacement of an occurrence of $bc$, we obtain $O(n^2)$ total time by charging each text position with $O(n)$ time for a linear search in $F$. With the same argument, we can bound the total time for sorting the characters in $D$ by $O(n^2)$: Since we spend $O(h \lg h)$ time on sorting $h$ characters preceding or succeeding a replaced character and $O(f_k) = O(n)$ time on swapping a sufficiently frequent new bigram composed of $X_{i+1}$ and a character of $\Sigma_{i+1}$ with a bigram with the lowest frequency in $F$, we again charge each text position with $O(n)$ time. Putting all time bounds together gives the claim of Theorem 1.

2.4. Storing the Output In-Place

Finally, we show that we can store the computed grammar in text space. More precisely, we want to store the grammar in an auxiliary array $A$ packed at the end of the working space such that the entry $A[i]$ stores the right-hand side of the non-terminal $X_i$, which is a bigram. Thus, the non-terminals are represented implicitly as indices of the array $A$. We therefore need to subtract $2\lceil\lg\sigma_i\rceil$ bits of space from our working space after the $i$-th turn. By adjusting the number of characters that need to be freed per entry of $F$ in the above equations, we can deal with this additional space requirement as long as the frequencies of the replaced bigrams are at least three (we charge two occurrences for growing the space of $A$).
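The implicit representation can be illustrated by a small decoding sketch. It assumes (hypothetically, with 0-based indices) that terminals are the integers $0, \ldots, \sigma - 1$ and that the non-terminal stored at `A[i]` is encoded as `sigma + i`; the name `expand` is likewise hypothetical.

```python
def expand(symbol, A, sigma):
    """Expand one grammar symbol into terminals, using A as the rule array.

    Terminals are 0..sigma-1; the non-terminal with id sigma + i has the
    right-hand side A[i] = (left, right), so no explicit names are stored.
    """
    out, stack = [], [symbol]
    while stack:
        s = stack.pop()
        if s < sigma:
            out.append(s)
        else:
            left, right = A[s - sigma]
            stack.append(right)  # push right first so that left is expanded first
            stack.append(left)
    return out


A = [(0, 1), (3, 2)]      # X_0 -> ab, X_1 -> X_0 c  (with a = 0, b = 1, c = 2)
print(expand(4, A, 3))    # [0, 1, 2]
```

An explicit stack is used instead of recursion so that deep grammars do not hit Python's recursion limit.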

When only bigrams with frequencies of at most two remain, we switch to a simpler algorithm, discarding the idea of maintaining the frequency table $F$: Suppose that we work with the text $T_i$. Let $g$ be a text position, which is one in the beginning but will be incremented in the following turns while maintaining the invariant that $T_i[1..g]$ does not contain a bigram of frequency two. We scan $T_i[g..n_i]$ linearly from left to right and check, for each text position $j$, whether the bigram $T_i[j]T_i[j+1]$ has another occurrence $T_i[j']T_i[j'+1] = T_i[j]T_i[j+1]$ with $j' > j + 1$, and if so,

- (a) append $T_i[j]T_i[j+1]$ to $A$,
- (b) replace $T_i[j]T_i[j+1]$ and $T_i[j']T_i[j'+1]$ with a new non-terminal $X_{i+1}$ to transform $T_i$ to $T_{i+1}$, and
- (c) recurse on $T_{i+1}$ with $g := j$ until no bigram with frequency two is left.

The position $g$, which we never decrement, helps us to skip over all text positions starting with bigrams with a frequency of one. Thus, the algorithm spends $O(n)$ time for each such text position and $O(n)$ time for each bigram with frequency two. Since there are at most $n$ such bigrams, the overall running time of this algorithm is $O(n^2)$.
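A minimal sketch of this final phase, assuming every bigram of the input occurs at most twice and using the same hypothetical encoding as for $A$ above (non-terminal id `sigma + len(A)` at the time its rule is appended). The name `replace_frequency_two` is hypothetical, and positions are 0-based.

```python
def replace_frequency_two(T, A, sigma):
    """Final phase sketch: every bigram of T is assumed to occur at most twice.

    Non-terminals are encoded (hypothetically) as sigma + index into A, so each
    new rule is appended to A and gets the id sigma + len(A) before the append.
    """
    T = list(T)
    g = 0  # 0-based; bigrams starting before g have frequency one
    while g < len(T) - 1:
        bg = (T[g], T[g + 1])
        # Linear search for a second, non-overlapping occurrence to the right of g.
        j2 = next((q for q in range(g + 2, len(T) - 1) if (T[q], T[q + 1]) == bg), None)
        if j2 is None:
            g += 1                 # frequency one: skip this position for good
            continue
        X = sigma + len(A)
        A.append(bg)               # (a) store the right-hand side in A
        T[j2:j2 + 2] = [X]         # (b) replace the later occurrence first,
        T[g:g + 2] = [X]           #     so that index g stays valid
        # (c) continue at the same g; g is never decremented
    return T


A = []
print(replace_frequency_two([0, 1, 2, 0, 1, 3], A, 4), A)  # [4, 2, 4, 3] [(0, 1)]
```

Each search and each list splice costs $O(n)$, matching the per-position and per-bigram budget stated above.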

Remark 1 (Pointer machine model). Refraining from the usage of complicated algorithms, our algorithm consists only of elementary sorting and scanning steps. This allows us to run our algorithm on a pointer machine, obtaining the same time bound of $O(n^2)$. For the space bounds, we assume that the text is given in $n$ words, where a word is large enough to store an element of $\Sigma_m$ or a text position.