5.4.1. Overall Result Analysis

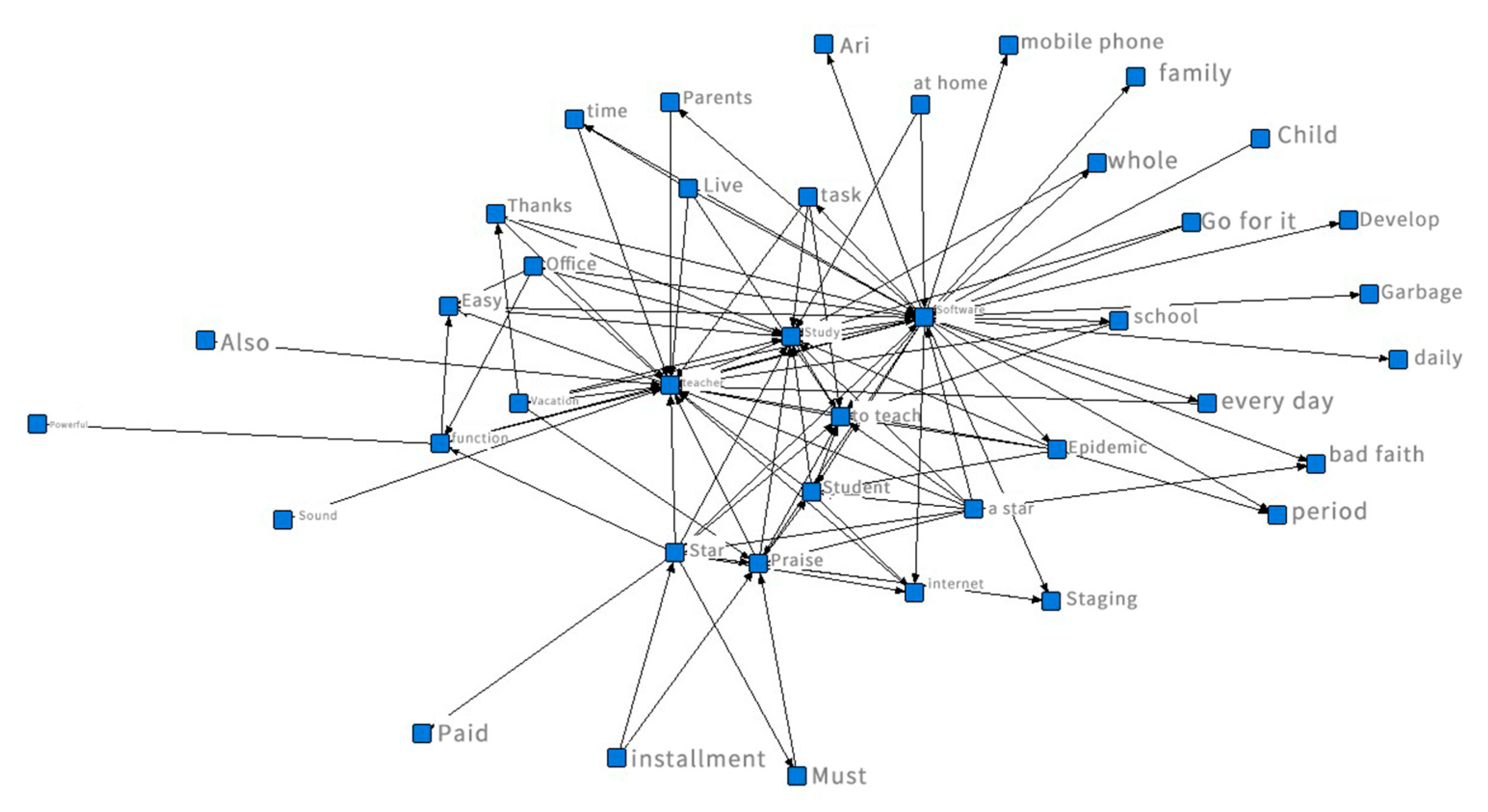

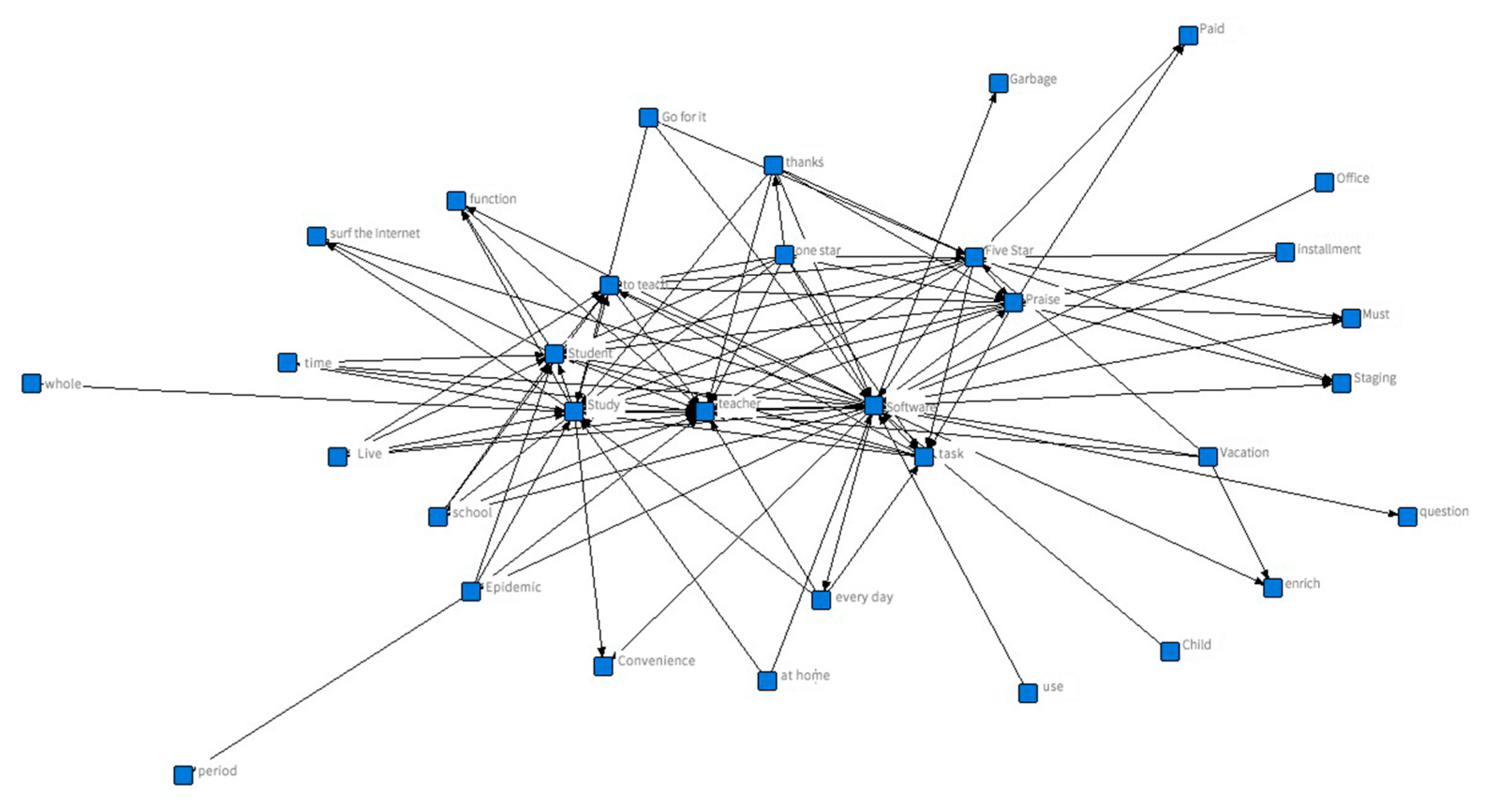

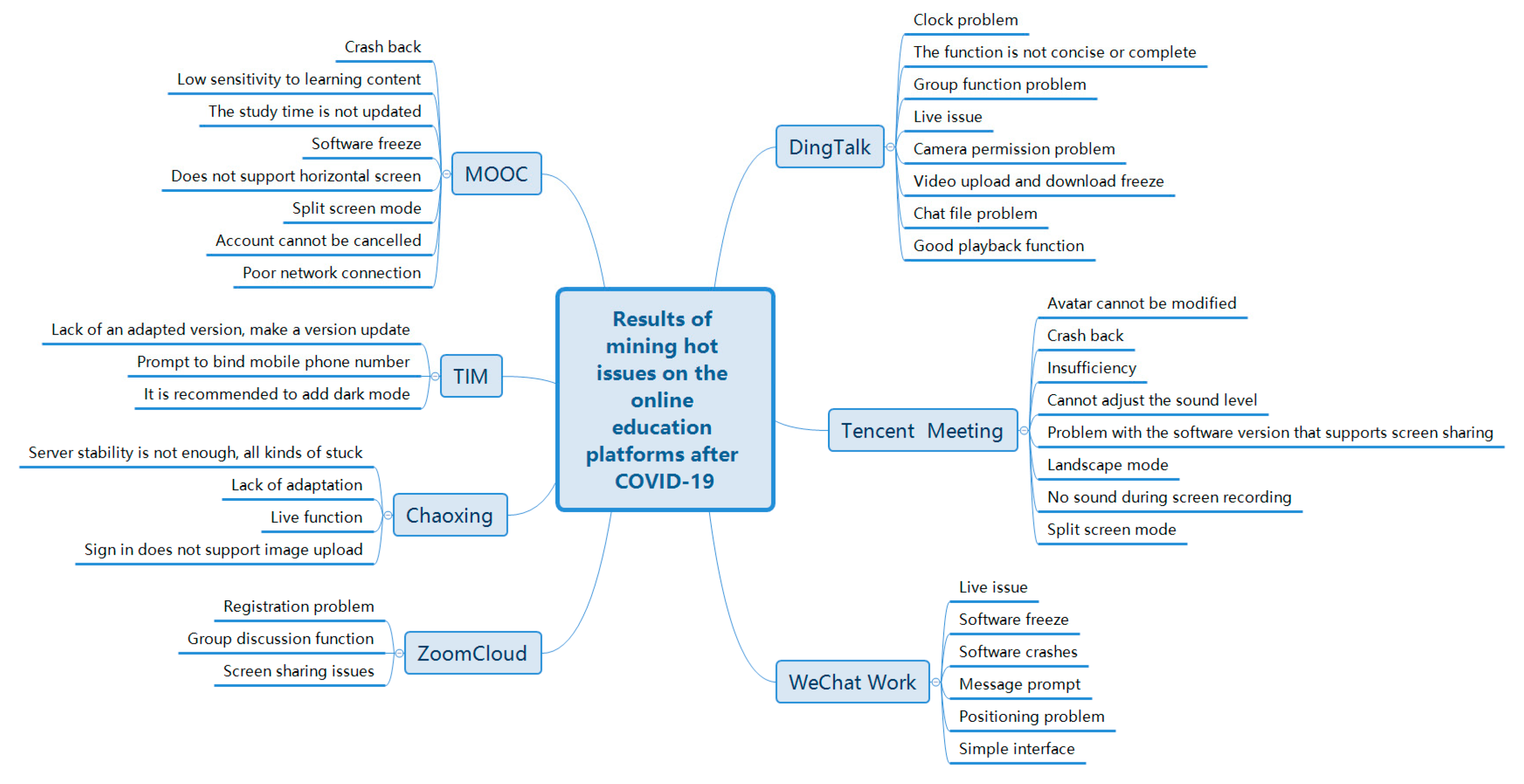

Because market demand was low, online education platforms had relatively few users before the outbreak of COVID-19, and DingTalk and Tencent Meeting had not yet developed online education functions. Users were therefore more concerned with smooth operation and technical problems than with the platforms' learning functions. After the outbreak, as the market demand for online education and the number of platform users rose rapidly, mobile office platforms such as DingTalk and Tencent Meeting successively introduced online teaching functions. Backed by strong parent companies, DingTalk and Tencent Meeting have few technical problems, so users have shifted their attention to the functionalities for online study.

Within the teaching support system, the first-level index that represents the learning functions of a platform, users pay more attention to communication and interaction and to course management than to teaching functionalities and school role management. Teaching functionalities refer to live broadcast, video broadcast, and similar features. All the platforms selected here served as online education tools during the pandemic and all provide these basic functionalities, so this index differs little across platforms and its weight is naturally low. Student status (school role) management refers to modules such as online registration and learning-time recording. If the duration of video playback cannot be calculated accurately, students may receive low scores, among other problems that damage the user experience. Among the seven platforms, only MOOC and Chaoxing Learning deliver courses mainly through recorded videos, so learning time must be measured from video playback time. The other five platforms mostly teach through live streaming, so the demand for learning-time recording, and hence the weight of this index, is relatively low.
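Why a small difference across platforms yields a low weight can be made explicit with the coefficient of variation method. The formulation below is the standard one and is assumed here rather than restated from this section: $x_{ij}$ is the score of platform $i$ ($i = 1, \dots, m$, with $m = 7$ in this study) on indicator $j$ ($j = 1, \dots, n$), and the population standard deviation is used.

```latex
\bar{x}_j = \frac{1}{m}\sum_{i=1}^{m} x_{ij}, \qquad
\sigma_j = \sqrt{\frac{1}{m}\sum_{i=1}^{m}\left(x_{ij} - \bar{x}_j\right)^2}, \qquad
V_j = \frac{\sigma_j}{\bar{x}_j}, \qquad
w_j = \frac{V_j}{\sum_{k=1}^{n} V_k}
```

When all platforms score almost equally on an indicator, as with live and video broadcasting under teaching functionalities, $\sigma_j$ is close to zero, so $V_j$ and the weight $w_j$ are small as well.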

Interaction is an effective way to improve teaching quality. Group discussion, attendance checks by questioning, and raising hands to speak promote students' enthusiasm for learning, help instructors gauge how well students have understood the material, and resolve students' questions. Because of the physical distance inherent in online teaching, interaction between teachers and students is difficult to carry out. Therefore, to ensure the quality of online learning, the design of communication and interaction functions is extremely important for the user experience of online education platforms during the pandemic. A platform that provides rich interactive functionalities will be favored by more teachers and students.

Course management ensures that the teaching plan proceeds in an orderly manner. Permission settings in course management distinguish the identities of online users, in particular separating teachers from students. The check-in reminder in course management recreates the offline experience of the class bell and improves the learning atmosphere. Course management is therefore relatively important.

To sum up, after the outbreak of the pandemic, there was huge market demand for online education platforms, and course management and communication and interaction are the key factors affecting user experience.

5.4.2. Results Analysis of Each Platform

Based on the user-experience evaluation index system, the 5 first-level indexes (platform system characteristics, customer service support, video quality, technical requirements, and the platform teaching support system) and the 15 second-level indexes (such as system stability and compatibility) are weighted from user comments using the coefficient of variation method. The resulting user-experience scores and rankings are shown in Table 27 and Table 28. In these tables, green font marks the highest score and red font the lowest score in each row.
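The weighting and scoring steps can be sketched in a few lines of Python. This sketch assumes the standard coefficient-of-variation method (population standard deviation divided by the mean of each indicator, normalized so the weights sum to one) followed by a weighted-sum score; the function names and the three-platform, two-indicator score matrix are hypothetical and are not taken from Table 27 or Table 28.

```python
from statistics import mean, pstdev


def cv_weights(matrix):
    """Weight each indicator (column) by its coefficient of variation.

    matrix[i][j] is the score of platform i on indicator j. Scores are
    assumed to be positive and already on a common scale (an assumption,
    not the paper's stated preprocessing).
    """
    columns = list(zip(*matrix))
    cvs = [pstdev(col) / mean(col) for col in columns]
    total = sum(cvs)
    return [cv / total for cv in cvs]  # normalized so the weights sum to 1


def rank_platforms(names, matrix):
    """Return the weights, weighted-sum scores, and names ranked best-first."""
    weights = cv_weights(matrix)
    scores = {
        name: sum(w * x for w, x in zip(weights, row))
        for name, row in zip(names, matrix)
    }
    ranking = sorted(scores, key=scores.get, reverse=True)
    return weights, scores, ranking


# Hypothetical data: column 0 ~ "live broadcast" (nearly identical across
# platforms), column 1 ~ "interaction" (varies widely).
names = ["Platform A", "Platform B", "Platform C"]
matrix = [
    [4.8, 4.5],
    [4.9, 3.0],
    [4.7, 3.9],
]
weights, scores, ranking = rank_platforms(names, matrix)
print([round(w, 3) for w in weights])
print(ranking)
```

Because the hypothetical "live broadcast" scores barely differ, that indicator receives a weight below 0.1, and the ranking is driven almost entirely by the "interaction" column, which mirrors the low weight of teaching functionalities discussed in Section 5.4.1.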

As can be seen from Table 27, before COVID-19 the user-experience ranking of the platforms, from high to low, was Zoom Cloud, Tencent Meeting, DingTalk, MOOC, TIM, WeChat Work, and Chaoxing Learning. Table 28 shows that after the outbreak of COVID-19 the ranking, in descending order, was DingTalk, Zoom Cloud, Tencent Meeting, WeChat Work, MOOC, TIM, and Chaoxing Learning.
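The change in each platform's position between the two rankings can be checked with a short Python sketch. The two lists are transcribed from the orderings reported for Table 27 and Table 28; the helper name `rank_shift` is hypothetical.

```python
# Rankings transcribed from Tables 27 and 28 (rank 1 = best user experience).
before = ["Zoom Cloud", "Tencent Meeting", "DingTalk", "MOOC",
          "TIM", "WeChat Work", "Chaoxing Learning"]
after = ["DingTalk", "Zoom Cloud", "Tencent Meeting", "WeChat Work",
         "MOOC", "TIM", "Chaoxing Learning"]


def rank_shift(before, after):
    """Places gained (+) or lost (-) by each platform between two rankings."""
    pos_after = {name: i + 1 for i, name in enumerate(after)}
    return {name: (i + 1) - pos_after[name] for i, name in enumerate(before)}


shifts = rank_shift(before, after)
for name, shift in shifts.items():
    print(f"{name:18s} {shift:+d}")
```

DingTalk and WeChat Work each gain two places, Chaoxing Learning stays last, and the remaining four platforms each move by exactly one place.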

This change is closely related to the initiatives taken by education platforms during the pandemic. As online office software, DingTalk quickly repositioned itself and provided a complete set of online education solutions for all kinds of schools. It also developed functions such as online classrooms and health tasks in response to the policy of "school suspension without class suspension". Although its teaching functions initially met resistance from students who were weary of studying, its performance during the pandemic was more prominent than that of the other platforms. The platform offered high stability and compatibility, a relatively simple interface, and complete teaching functions, and it was favored by teachers and students. Similarly, WeChat Work was originally online office software, but it also supported online teaching during the pandemic: its group live broadcast enables online teaching, while the epidemic-prevention collection form in its micro-documents helps schools manage students' health information. The improvement of its teaching functionalities and the reliability of the platform have also won many positive comments. In contrast, Chaoxing Learning, as educational software, could have played a greater role during the pandemic, but its user experience score remained at the bottom. This is largely attributable to the platform's imperfect learning functions and low access speed. The rankings of the other four platforms changed only slightly, each moving by one place.

The performance of each platform on each indicator will be analyzed in detail below.

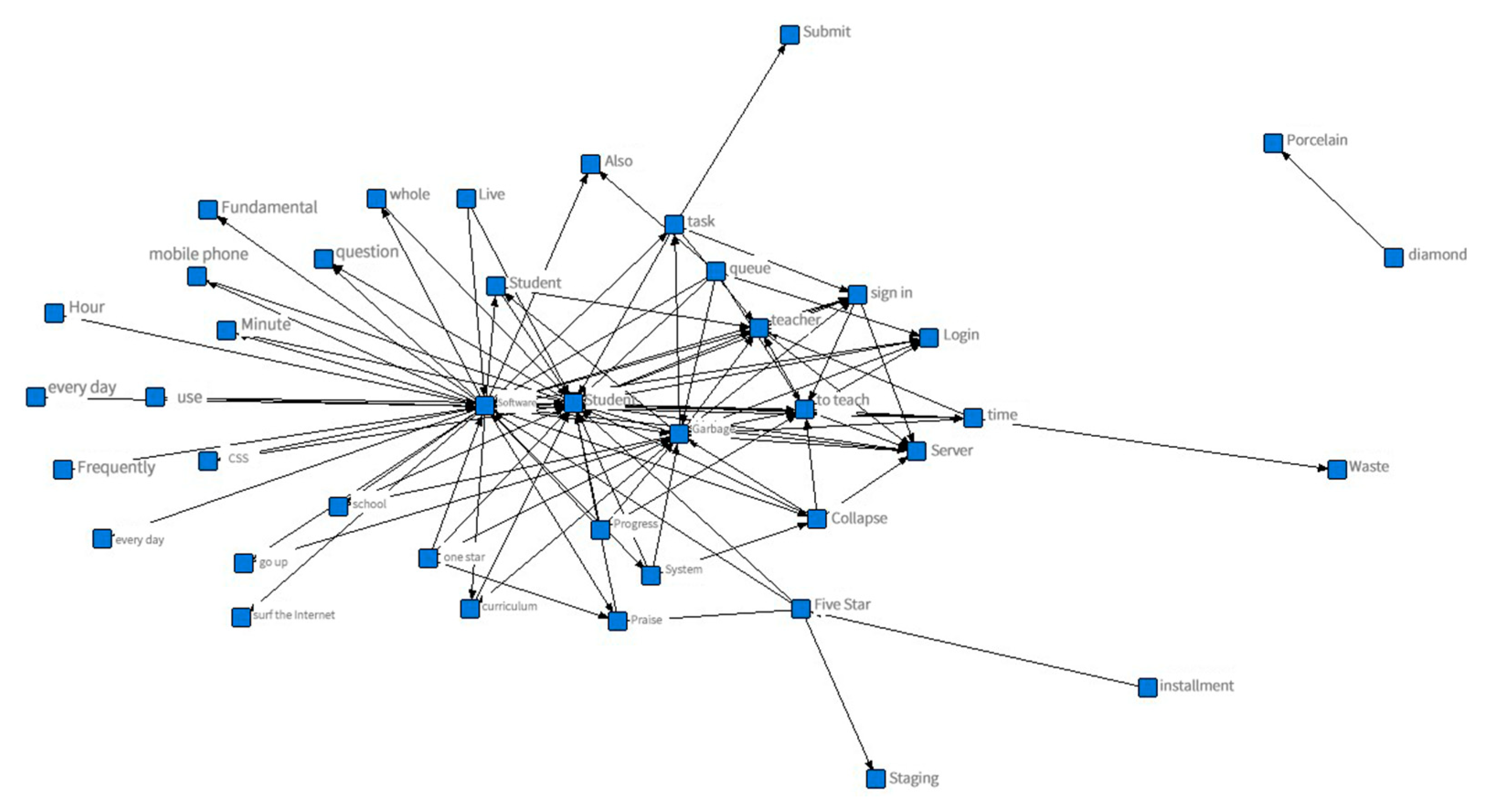

(1) Zoom Cloud

Zoom Cloud performed well overall, ranking 1st before the outbreak of COVID-19 and 2nd after it. Before the pandemic, access speed was Zoom Cloud's only disadvantage compared with the other platforms, and users had a poor experience on this indicator. After the outbreak, Zoom Cloud greatly improved its access speed, and users' attention shifted to its teaching functionalities. Zoom Cloud still needs to improve its communication and interaction, teaching functionalities, and student status management. Thanks to its relatively stable system, simple interface, and easy operation, Zoom Cloud was highly praised during the pandemic. However, it did not develop teaching functionalities and remained mobile office software for video conferencing and content sharing. It is better suited to team meetings, where everyone can express and share their views. In teaching, by contrast, interaction is led by the teacher, with students participating in a supplementary role, so communication and interaction on Zoom Cloud do not fully meet teaching needs. In addition, Zoom Cloud does not distinguish between teacher and student users, so it is deficient in teaching functionalities and school role management.

(2) Tencent Meeting

Tencent Meeting performed well overall, ranking 2nd before the outbreak of COVID-19 and 3rd after it. Before the pandemic, its access speed, reliability, and timeliness of video information transmission were poor, indicating problems such as lag and choppy video. After the outbreak, Tencent Meeting improved significantly on these three indicators, but its audio quality and teaching functionalities remain deficient. Regarding audio quality, many users reported problems such as "there is no sound in the screen recording" and "the volume cannot be adjusted". Regarding teaching functionalities, Tencent Meeting is similar to Zoom Cloud: as video conferencing software, both can only meet the need for video teaching. They cannot count the number of students who join a meeting or record when each student joins, and they do not distinguish between students and teachers, which results in low scores for interaction, teaching functionalities, and student status management.

(3) DingTalk

DingTalk performed well overall, ranking 3rd before the outbreak of COVID-19 and 1st after it. Before the outbreak, its three lowest-scoring indexes were communication and interaction, reliability, and interface design. After the outbreak, its reliability and interface design scores increased, but its communication and interaction score remained low. Although DingTalk was originally mobile office software, it was successfully transformed and introduced teaching-related functionalities during the pandemic. With the support of Alibaba Group, 140,000 servers were deployed to keep the system running normally during the pandemic. For communication and interaction, students can express their views through the message window while the teacher gives a live lecture. However, messages in this window appear with some delay, so the interaction is not smooth enough. Therefore, although DingTalk performs well compared with the other platforms, it is still deficient in communication and interaction.

(4) MOOC

MOOC's overall performance was mediocre, ranking 4th before the outbreak of COVID-19 and 5th after it. Before the pandemic, MOOC ranked last in speed, interaction, and reliability. After the outbreak, its communication and interaction improved, but its system stability, timeliness of video information transmission, reliability, and school role management were criticized. Although it is positioned as an online education platform, MOOC does not aim to provide online learning services to students within a school; instead, it provides an equal learning platform for everyone, including students and office workers. As a result, learners on the platform study through recorded video courses from elite universities rather than through live streaming, and this recorded format allows little interaction. After the outbreak of COVID-19, a large number of students used MOOC to watch course videos, and viewing time became an important measure by which schools assessed students' learning. However, learning records frequently failed to synchronize and were insufficiently sensitive, which seriously undermined student status management and reduced user satisfaction. The platform's technical problems persisted both before and after the outbreak of the pandemic.

(5) TIM

TIM performed poorly overall, ranking 5th before and 6th after the outbreak of COVID-19. Before the pandemic, it ranked last in system stability, platform support for learners, interface design, and security, but it performed well in access speed. After the outbreak, the platform had serious problems in five aspects: system compatibility, platform support for learners, interface design, communication and interaction, and course management. TIM is known as a concise version of Tencent QQ that can sync QQ friends and has a low entry threshold. However, it is positioned as teamwork office software and therefore has the same problems as Zoom Cloud and Tencent Meeting. TIM also performs poorly in technology and system compatibility, lacking a suitable tablet version and being slow to update. As one of Tencent's office applications, with no advertising, a simple interface, and practical functions, TIM gave Tencent an opportunity to capture the mobile office market. However, without a clear profit model, and with the emergence of dedicated mobile office software such as DingTalk, TIM is declining.

(6) WeChat Work

The overall performance of WeChat Work is mediocre, ranking 6th before and 4th after the outbreak of COVID-19. Before the pandemic, it ranked last in reliability and navigation links and performed poorly in timeliness of video information transmission. WeChat Work is the only platform with problems in navigation links, with critics pointing out that it contains too many advertising links. After the outbreak, WeChat Work improved its scores on various indicators, and its main problem shifted to security, as its protection of user privacy is insufficient. Just as TIM is an office version of QQ, WeChat Work is essentially an office version of WeChat. Besides live meetings, it also handles daily communication, clocking in, approvals, and other functions, so its teaching functionalities are not complete enough for teaching software. In addition, most users regard WeChat as a private space, yet in WeChat Work the chat records of staff or students can be viewed by superiors, and a meeting moderator can turn on other participants' microphones at will. Users regard these functions as an intrusion on privacy and have therefore raised many doubts about the security of the platform.

(7) Chaoxing Learning

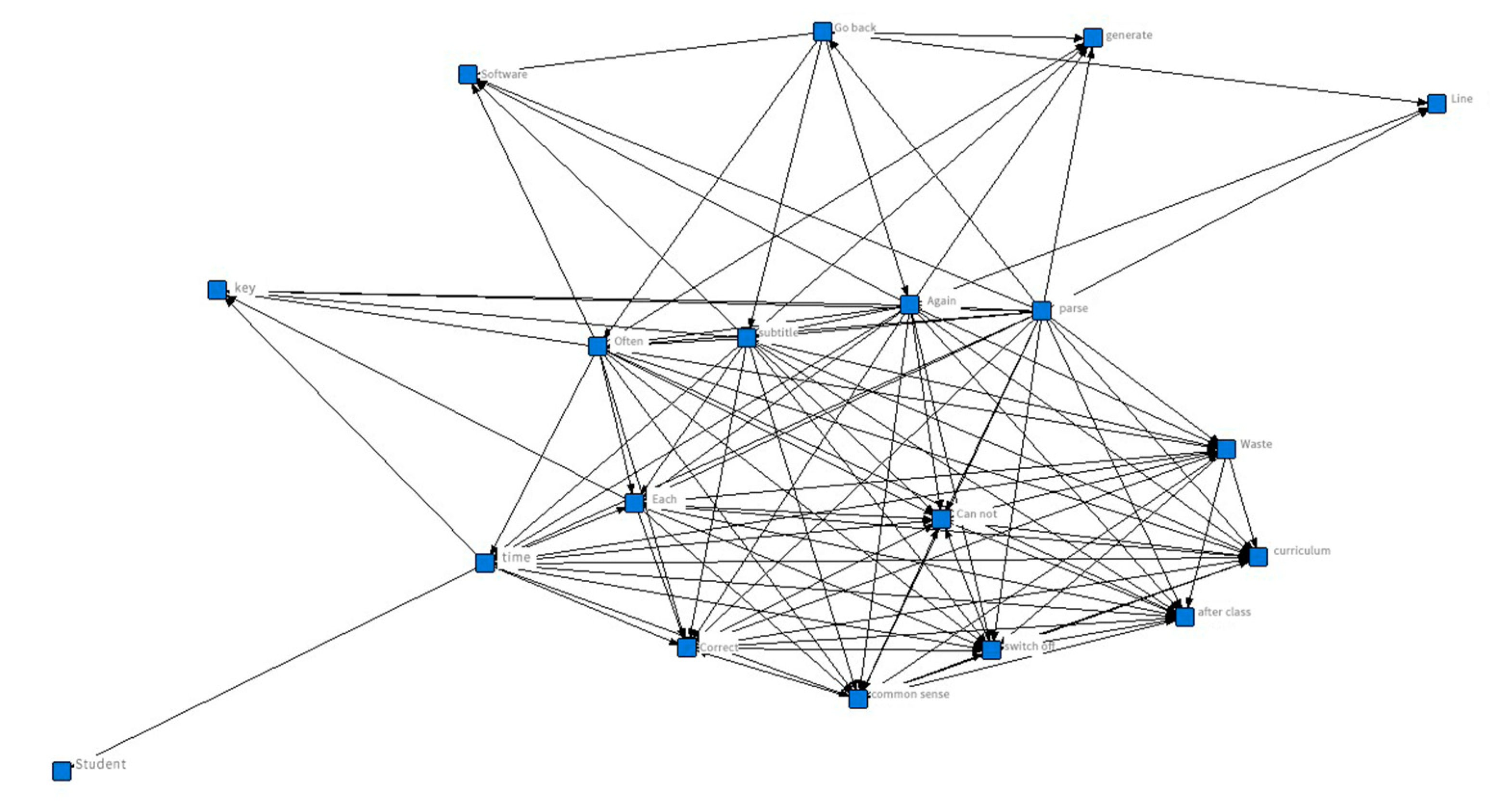

Chaoxing Learning had the lowest overall performance, ranking last both before and after the outbreak of COVID-19. Before the pandemic, Chaoxing Learning performed poorly in three first-level indicators (platform system characteristics, video quality, and teaching support system), notably in system compatibility and video picture quality. After the outbreak, its teaching functionalities improved, but it still ranked last in five second-level indicators, including video image quality, timeliness of video information transmission, and course management, so many limitations remain. Chaoxing Learning is a mobile learning platform that provides electronic literature reading, course learning, and group discussion functionalities. However, technical problems occur frequently, and the platform received many complaints about system crashes during the pandemic. When the system crashed, the official response from Chaoxing Learning was that "efforts are being made to repair it, and we call on students to study at off-peak times, which will significantly improve stability", which reveals the weakness of the technical support behind the platform. Owing to these technical limitations, it also performs poorly in teaching functionalities such as school role management. Many users complained that they were "unable to sign in" and were marked absent by the system, with comments such as "failing to sign in even at off-peak times is still counted as absence, and homework notifications are not received".