Onboard Spectral and Spatial Cloud Detection for Hyperspectral Remote Sensing Images

Abstract

1. Introduction

2. Related Work

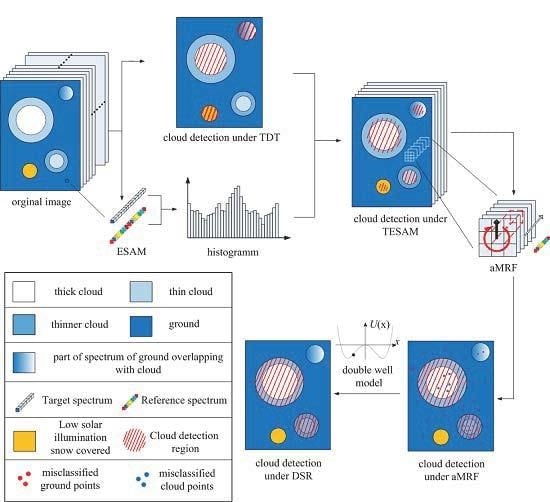

3. Proposed Method

3.1. T-ESAM

3.2. aMRF Model

3.3. Dynamic Stochastic Resonance (DSR) Model

4. Feasibility Study

4.1. Dataset

4.2. Accuracy Assessment

4.3. Detection Results

4.4. Cloud Detection Performance of Each Stage

5. Discussions

5.1. The Effectiveness of Combining the Threshold Decision Tree and Spectral Angle Map

5.2. The Usefulness of Spatial Information for Cloud Detection

5.3. Error Sources of the Proposed Method

5.4. Effect of Compression Based on Cloud Detection

5.5. Applicability of the Developed Methods in the Future

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACCA | Automatic Cloud Cover Algorithm |
| aMRF | adaptive Markov Random Field |
| CC | Cloud Cover |
| DCC-ASE | Detection of Cryospheric Change Autonomous Sciencecraft Experiment |
| DSR | Dynamic Stochastic Resonance |
| DTM | Decision Theoretic Method |
| EO-1 | Earth Observing-1 |
| ESAM | Exponential Spectral Angle Map |
| FL | Fast Lossless |
| FN | False Negative |
| FP | False Positive |
| FPR | False Positive Rate |
| HCC | Hyperion Cloud Cover |
| HSI | Hyperspectral Image |
| LUT | Look Up Table |
| MRF | Markov Random Field |
| MODIS | Moderate-resolution Imaging Spectroradiometer |
| NAPC | Noise-adjusted Principal Components |
| NDSI | Normalized Difference Snow Index |
| NIR | Near Infrared |
| ROC | Receiver Operating Characteristic Curve |
| ROI | Region of Interest |
| R-VCANet | Rolling Guidance filter and Vertex Component Network |
| SAM | Spectral Angle Map |
| SVM | Support Vector Machine |
| SVM-aMRF | Support Vector Machine adaptive Markov Random Field |
| TDT | Threshold Decision Tree |
| TESAM | Threshold assisted Exponential Spectral Angle Map |
| TIR | Thermal Infrared |
| TN | True Negative |
| TOA | Top of Atmosphere |
| TP | True Positive |
| USGS | United States Geological Survey |
| VNIR | Visible and Near Infrared |
| VSWIR | Visible and Short Wave Infrared |
| WMO | World Meteorological Organization |

Appendix A Some Parameters for Meteorological Satellite and Earth Observation Satellite

| Type | Satellite | Sensor | Image Resolution | Data Size | Download Speed |
|---|---|---|---|---|---|
| Meteorological satellite | FY-3A | MERSI | 1100 m | 4 GB | 93 Mb/s |
| | Noaa18 | AVHRR | 1100 m | / | 138 Mb/s |
| | GMS-5 | VISSR | 1250 m | / | 14 Mb/s |
| | Meteosat | VISSR | 1000 m | / | 3.2 Mb/s |
| | Meteor-m2 | KMSS | 1000 m | / | 665 kb/s |
| Earth observation satellite | EO-1 | Hyperion | 30 m | / | 120 Mb/s |
| | NEMO(HRST) | AVIRIS | 20 m | 227 GB | 150 Mb/s |
| | QuickBird | QuickBird | 0.6 m | 128 GB | 320 Mb/s |
| | LANDSAT8 | OLI/TIRS | 15 m | 400 GB | 330 Mb/s |
| | EROS B1 | Panchromatic | 0.82 m | / | 280 Mb/s |
| | Resurs dk1 | ESI | 1 m | 768 GB | 330 Mb/s |

Appendix B Pseudocode for the TESAM Model

| Algorithm A1 TDT assisted ESAM |
|---|
| Input: the remote sensing image data I with K pixels, where each pixel is an N-dimensional spectral vector X; the reference spectrum Y |
| Output: the class label map M |
| Step 1: |
| for k = 1 to K do |
| compute the exponential spectral angle of pixel k according to Equations (1)–(3) |
| end |
| for k = 1 to K do |
| count the cloud pixels according to the TDT |
| end |
| Step 2: |
| compute the histogram of the values obtained in Step 1 |
| Step 3: |
| for k = 1 to n do |
| compute the threshold for ESAM according to Equations (4)–(5) |
| end |
| Step 4: |
| for k = 1 to K do |
| determine the binary class label of pixel k according to Equation (6) |
| end |
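The spectral-angle step that Algorithm A1 builds on can be sketched as follows. This is a minimal illustration of the plain spectral angle map (SAM) only, not the authors' ESAM: the exponential transform and the TDT-derived threshold of Equations (1)–(6) are not reproduced here. The function names, the fixed `threshold` argument, and the convention that a small angle to a cloud reference spectrum means "cloud" are assumptions made for the example.

```python
import math


def spectral_angle(x, y):
    """Return the angle (radians) between spectral vectors x and y."""
    if len(x) != len(y):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    if norm_x == 0.0 or norm_y == 0.0:
        raise ValueError("spectral angle is undefined for a zero vector")
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cosine = max(-1.0, min(1.0, dot / (norm_x * norm_y)))
    return math.acos(cosine)


def sam_label_map(pixels, reference, threshold):
    """Label each pixel 1 (cloud) or 0 (ground).

    Assumption for this sketch: `reference` is a cloud spectrum and
    `threshold` is a fixed angle in radians chosen by the caller,
    not the adaptive threshold described in the paper.
    """
    return [1 if spectral_angle(p, reference) < threshold else 0
            for p in pixels]


if __name__ == "__main__":
    reference = [2.0, 4.0, 6.0]
    pixels = [[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]]
    print(sam_label_map(pixels, reference, threshold=0.1))
```

Because the angle depends only on the direction of the spectral vector, a pixel that is a scaled copy of the reference gets an angle near zero regardless of its overall brightness.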

Appendix C Pseudocode for the aMRF Model

| Algorithm A2 TESAM-aMRF |
|---|
| Input: the remote sensing image data I with K pixels, where each pixel is an n-dimensional spectral vector X; the reference spectrum Y; the class label map M |
| Output: the class label map M |

Appendix D Pseudocode for the DSR Model

| Algorithm A3 aMRF-DSR |
|---|
| Input: the class label map M |
| Output: the class label map M |
| Step 1: |
| for k = 1 to K do |
| count the pixels in the 8-neighborhood of pixel k that belong to cloud (M(k) = cloud) and to ground (M(k) = ground), respectively; |
| compare the two counts and keep the larger one; |
| refresh x according to Equations (12)–(13); |
| end |
| Step 2: refresh M |
| Step 3: iterate Steps 1–2 |
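The neighborhood-counting part of Step 1 can be sketched as below. This covers only the count of cloud and ground labels in the 8-neighborhood; the DSR update of Equations (12)–(13) is not reproduced. The function name, the 1 = cloud / 0 = ground encoding, and the choice to skip neighbors that fall outside the image are assumptions made for the illustration.

```python
def neighbour_counts(labels, row, col):
    """Count cloud and ground labels in the 8-neighborhood of (row, col).

    `labels` is a 2-D list with 1 for cloud and 0 for ground (assumed
    encoding). Neighbors outside the image are skipped, so border pixels
    have fewer than eight neighbors.
    Returns a (cloud_count, ground_count) tuple.
    """
    rows, cols = len(labels), len(labels[0])
    cloud = ground = 0
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if dr == 0 and dc == 0:
                continue  # the center pixel is not its own neighbor
            r, c = row + dr, col + dc
            if 0 <= r < rows and 0 <= c < cols:
                if labels[r][c] == 1:
                    cloud += 1
                else:
                    ground += 1
    return cloud, ground


if __name__ == "__main__":
    label_map = [
        [1, 1, 0],
        [1, 0, 0],
        [0, 0, 0],
    ]
    cloud, ground = neighbour_counts(label_map, 1, 1)
    print(cloud, ground, max(cloud, ground))
```

The larger of the two counts is what the pseudocode passes on to the update step.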

References

1. Li, W.; Wu, G.; Zhang, F.; Du, Q. Hyperspectral Image Classification Using Deep Pixel-Pair Features. IEEE Trans. Geosci. Remote Sens. 2017, 55, 645–657.
2. Kinter, J.L.; Shukla, J. The Global Hydrologic and Energy Cycles: Suggestions for Studies in the Pre-Global Energy and Water Cycle Experiment (GEWEX) Period. Bull. Am. Meteorol. Soc. 2013, 71, 181–271.
3. Shen, H.; Pan, W.D.; Wu, D. Predictive lossless compression of regions of interest in hyperspectral images with no-data regions. IEEE Trans. Geosci. Remote Sens. 2016, 55, 173–182.
4. Shen, H.; Pan, W.D. Predictive Lossless Compression of Regions of Interest in Hyperspectral Image Via Correntropy Criterion Based Least Mean Square Learning. In Proceedings of the IEEE International Conference on Image Processing, Phoenix, AZ, USA, 25–28 September 2016.
5. Mandrake, L.; Frankenberg, C.; O’Dell, C.W.; Osterman, G.; Wennberg, P.; Wunch, D. Semi-autonomous sounding selection for OCO-2. Atmosp. Meas. Tech. Discuss. 2013, 6, 5881–5922.
6. Chien, L.S.; Mclaren, D.; Tran, D.; Davies, A.G.; Doubleday, J.; Mandl, D. Onboard product generation on earth observing one: A pathfinder for the proposed HyspIRI mission intelligent payload module. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 257–264.
7. Xu, X.; Yuan, C.; Liang, X.; Shen, X. Rendering and Modeling of Stratus Cloud Using Weather Forecast Data. In Proceedings of the IEEE International Conference on Virtual Reality and Visualization, Fujian, China, 17–18 October 2015.
8. King, M.D.; Platnick, S.; Menzel, W.P.; Ackerman, S.A.; Hubanks, P.A. Spatial and temporal distribution of clouds observed by MODIS onboard the Terra and Aqua satellites. IEEE Trans. Geosci. Remote Sens. 2013, 51, 3826–3852.
9. Cadau, E.; Laneve, G. Improved MSG-SEVIRI images cloud masking and evaluation of its impact on the fire detection methods. In Proceedings of the IEEE International Conference on Geoscience and Remote Sensing Symposium, Boston, MA, USA, 6–11 July 2008.
10. Shen, H.; Pan, W.D.; Wang, Y. A Novel Method for Lossless Compression of Arbitrarily Shaped Regions of Interest in Hyperspectral Imagery. In Proceedings of the IEEE Southeast Conference, Fort Lauderdale, FL, USA, 9–12 April 2015.
11. Mercury, M.; Green, R.; Hook, S.; Oaida, B.; Wu, W.; Gunderson, A.; Chodas, M. Global cloud cover for assessment of optical satellite observation opportunities: A HyspIRI case study. Remote Sens. Environ. 2012, 126, 62–71.
12. Conoscenti, M.; Coppola, R.; Magli, E. Constant SNR, Rate Control, and Entropy Coding for Predictive Lossy Hyperspectral Image Compression. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7431–7441.
13. Mat Noor, N.R.; Vladimirova, T. Investigation into lossless hyperspectral image compression for satellite remote sensing. Int. J. Remote Sens. 2013, 34, 5072–5104.
14. Nian, Y.; Xu, K.; Wan, J.; Wang, L.; He, M. Block-based KLT compression for multispectral images. Int. J. Wavel. Multiresolut. Inf. Process. 2016, 14.
15. Wang, L.; Wu, J.; Jiao, L.; Shi, G. Lossy-to-Lossless Hyperspectral Image Compression Based on Multiplierless Reversible Integer TDLT/KLT. IEEE Geosci. Remote Sens. Lett. 2009, 6, 587–591.
16. Gonzalez-Conejero, J.; Bartrina-Rapesta, J.; Serra-Sagrista, J. JPEG 2000 encoding of remote sensing multispectral images with no-data regions. IEEE Geosci. Remote Sens. Lett. 2010, 7, 251–255.
17. Li, H.; Zheng, H.; Han, C. Adaptive run-length encoding circuit based on cascaded structure for target region data extraction of remote sensing image. In Proceedings of the International Conference on Integrated Circuits and Microsystems, Chengdu, China, 25–28 November 2016.
18. El-Araby, E.; Taher, M.; El-Ghazawi, T.; Le Moigne, J. Prototyping automatic cloud cover assessment (ACCA) algorithm for remote sensing on-board processing on a reconfigurable computer. In Proceedings of the IEEE International Conference on Field-Programmable Technology, Singapore, 11–14 December 2005.
19. Gao, X.J.; Wan, Y.C.; Zheng, X.Y. Real-Time automatic cloud detection during the process of taking aerial photographs. Spectrosc. Spectr. Anal. 2014, 34, 1909–1913.
20. Ackerman, S.A.; Strabala, K.I.; Menzel, W.P.; Frey, R.A.; Moeller, C.C.; Gumley, L.E. Discriminating clear sky from clouds with MODIS. J. Geophys. Res. Atmosp. 1998, 103, 32141–32157.
21. Ackerman, S.A.; Holz, R.E.; Frey, R.; Eloranta, E.W.; Maddux, B.C.; McGill, M. Cloud detection with MODIS. Part II: Validation. J. Atmosp. Ocean. Technol. 2008, 25, 1073–1086.
22. Frey, R.A.; Ackerman, S.A.; Liu, Y.; Strabala, K.I.; Zhang, H.; Key, J.R.; Wang, X. Cloud detection with MODIS. Part I: Improvements in the MODIS cloud mask for collection 5. J. Atmosp. Ocean. Technol. 2008, 25, 1057–1072.
23. Wei, J.; Sun, L.; Jia, C.; Yang, Y.; Zhou, X.; Gan, P.; Jia, S.; Liu, F.; Li, R. Dynamic threshold cloud detection algorithms for MODIS and Landsat 8 data. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016.
24. Griffin, M.; Burke, H.H.; Mandl, D.; Miller, J. Cloud cover detection algorithm for EO-1 Hyperion imagery. In Proceedings of the 17th SPIE AeroSense Conference on Algorithms Technology Multispectral, Hyperspectral Ultraspectral Imagery IX, Orlando, FL, USA, 21–25 July 2003.
25. Doggett, T.; Greeley, R.; Chien, S.; Castano, R.; Cichy, B.; Davies, A.G.; Rabideau, G.; Sherwood, R.; Tran, D.; Baker, V.; et al. Autonomous on-board detection of cryospheric change with Hyperion on-board Earth Observing-1. Remote Sens. Environ. 2006, 101, 447–462.
26. Ip, F.; Dohm, J.M.; Baker, V.R.; Doggett, T.; Davies, A.G.; Castano, R.; Chien, S.; Cichy, B.; Greeley, R.; Sherwood, R.; et al. Flood detection and monitoring with the autonomous sciencecraft experiment onboard EO-1. Remote Sens. Environ. 2006, 101, 463–481.
27. Irish, R.R. Landsat 7 automatic cloud cover assessment. Algorithms for Multispectral, Hyperspectral, and Ultraspectral Imagery. In Proceedings of the International Society for Optical Engineering, Orlando, FL, USA, 24 April 2000.
28. Wang, M.; Shi, W. Cloud masking for ocean color data processing in the coastal regions. IEEE Trans. Geosci. Remote Sens. 2006, 44, 3105–3196.
29. Deng, J.; Wang, H.; Ma, J. An Automatic cloud detection algorithm for Landsat Remote Sensing Image. In Proceedings of the 4th International Workshop on Earth Observation and Remote Sensing Applications, Guangdong, China, 11–14 December 2016.
30. Gómez-Chova, L.; Camps-Valls, G.; Calpe-Maravilla, J.; Guanter, L.; Moreno, J. Cloud-screening algorithm for ENVISAT/MERIS multispectral images. IEEE Trans. Geosci. Remote Sens. 2007, 45, 4105–4118.
31. Taylor, T.E.; O’Dell, C.W.; O’Brien, D.M.; Kikuchi, N.; Yokota, T.; Nakajima, T.Y.; Ishida, H.; Crisp, D.; Nakajima, T. Comparison of cloud-screening methods applied to GOSAT near-infrared spectra. IEEE Trans. Geosci. Remote Sens. 2012, 50, 295–309.
32. Minnis, P.; Trepte, Q.Z.; Sun-Mack, S.; Chen, Y.; Doelling, D.R.; Young, D.F.; Spangenberg, D.A.; Miller, W.F.; Wielicki, B.A.; Brown, R.R.; et al. Cloud detection in nonpolar regions for CERES using TRMM VIRS and terra and aqua MODIS data. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3857–3884.
33. Lee, J.; Weger, R.C.; Sengupta, S.K.; Welch, R.M. A neural network approach to cloud classification. IEEE Trans. Geosci. Remote Sens. 1990, 28, 846–855.
34. Martins, J.V.; Tanré, D.; Remer, L.; Kaufman, Y.; Mattoo, S.; Levy, R. MODIS cloud screening for remote sensing of aerosols over oceans using spatial variability. Geophys. Res. Lett. 2002, 29, MOD4-1–MOD4-4.
35. Bian, J.; Li, A.; Liu, Q.; Huang, C. Cloud and Snow Discrimination for CCD Images of HJ-1A/B Constellation Based on Spectral Signature and Spatio-Temporal Context. Remote Sens. 2016, 8, 31.
36. Murtagh, F.; Barreto, D.; Marcello, J. Decision boundaries using Bayes factors. IEEE Trans. Geosci. Remote Sens. 2003, 41, 2952–2958.
37. Yu, H.; Gao, L.; Li, J.; Li, S.S.; Zhang, B.; Benediktsson, J.A. Spectral-Spatial Hyperspectral Image Classification Using Subspace-Based Support Vector Machines and Adaptive Markov Random Fields. Remote Sens. 2016, 8, 355.
38. Merchant, C.J.; Harris, A.R.; Maturi, E.; MacCallum, S. Probabilistic physically based cloud screening of satellite infrared imagery for operational sea surface temperature retrieval. Q. J. R. Meteorol. Soc. 2005, 131, 2735–2755.
39. Thompson, D.R.; Green, R.O.; Keymeulen, D.; Lundeen, S.K.; Mouradi, Y.; Nunes, D.C.; Castaño, R.; Chien, S.A. Rapid Spectral Cloud Screening Onboard Aircraft and Spacecraft. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6779–6792.
40. Pan, B.; Shi, Z.; Xu, X. R-VCANet: A New Deep-Learning-Based Hyperspectral Image Classification Method. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 10, 1975–1986.
41. Scaramuzza, P.L.; Bouchard, M.A.; Dwyer, J.L. Development of the Landsat Data Continuity Mission Cloud-Cover Assessment Algorithms. IEEE Trans. Geosci. Remote Sens. 2012, 50, 1140–1157.
42. Liu, J. Improvement of dynamic threshold value extraction technic in FY-2 cloud detection. J. Infrared Millim. Waves 2010, 29, 288–292.
43. Chang, C.-I.; Du, Q. Interference and noise-adjusted principal components analysis. IEEE Trans. Geosci. Remote Sens. 1999, 37, 2387–2396.
44. Tarabalka, Y.; Benediktsson, J.A.; Chanussot, J. Spectral-spatial classification of hyperspectral imagery based on partitional clustering techniques. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2973–2987.
45. Hergert, W.; Wriedt, T. Mie theory: A review. In The Mie Theory; Springer: Berlin, Germany, 2012; Volume 169, pp. 53–71.
46. Yang, P.; Bi, L.; Baum, B.A.; Liou, K.N.; Kattawar, G.W.; Mishchenko, M.I.; Cole, B. Spectrally consistent scattering, absorption, and polarization properties of atmospheric ice crystals at wavelengths from 0.2 to 100 μm. J. Atmos. Sci. 2013, 70, 330–347.
47. Dongmei, H.H.Z.J.W.; Yongyi, L.N.Q. Implementation of Arccosine Function Based on FPGA. Electron. Technol. 2013, 6, 5–8.
48. Tang, W.; Liu, G. FPGA Fixed-Point Technology of Exponential Function Achieved by CORDIC Algorithm. J. South China Univ. Technol. 2016, 44, 9–14.
49. Malík, P. High throughput floating point exponential function implemented in FPGA. In Proceedings of the IEEE Computer Society Annual Symposium on VLSI, Montpellier, France, 8–10 July 2015.

| Method | Spectra Utilized | Disadvantage |
|---|---|---|
| ACCA [41] | 0.45–0.52 μm, 0.52–0.6 μm, 0.62–0.69 μm, 0.76–0.96 μm, 1.04–1.25 μm, 1.55–1.75 μm | These methods use the index $\mathrm{NDSI} = (\rho_{\mathrm{green}} - \rho_{\mathrm{SWIR}})/(\rho_{\mathrm{green}} + \rho_{\mathrm{SWIR}})$, which includes spectral bands near 1.65 μm, to discriminate snow from clouds. However, snow-covered surfaces and clouds sometimes cannot be separated clearly with the NDSI because the reflectance features of cloud and snow particles can be similar in particular spectral bands. |
| HCC [24] | 0.55 μm, 0.66 μm, 0.86 μm, 1.25 μm, 1.38 μm, 1.65 μm | |
| DCC-ASE [25] | 0.43 μm, 0.56 μm, 0.66 μm, 0.86 μm, 1.25 μm, 1.38 μm, 1.65 μm | |
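For reference, the NDSI named in the table is the standard normalized difference of a visible (green) band and a shortwave-infrared band. The sketch below assumes reflectances near 0.56 μm and 1.65 μm as the two inputs; the function name and the sample values are hypothetical and are not taken from the paper.

```python
def ndsi(green, swir):
    """Normalized Difference Snow Index from two band reflectances.

    `green` is the reflectance near 0.56 um and `swir` the reflectance
    near 1.65 um (band choice assumed for this sketch).
    """
    total = green + swir
    if total == 0:
        raise ValueError("NDSI is undefined when both reflectances are zero")
    return (green - swir) / total


if __name__ == "__main__":
    # Hypothetical reflectances: bright in green, dark in SWIR.
    print(ndsi(0.8, 0.1))
    # Hypothetical reflectances: equally bright in both bands.
    print(ndsi(0.5, 0.5))
```

The index lies in [-1, 1] for non-negative reflectances and is high when a surface is bright in the visible but dark near 1.65 μm, which is why it struggles whenever a cloud and a snow surface show similar reflectance in those two bands.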

| Thermodynamic Phase | Cloud Type | Region | Altitude | Characteristic |
|---|---|---|---|---|
| Water cloud (low) | Cumulus (Cu); Stratus (St) | Frigid zone | ground–2 km | Composed of water droplets. |
| | Stratocumulus (Sc) | Temperate zone | ground–2 km | |
| | Cumulonimbus (Cb) | Tropical region | ground–2 km | |
| Mixed phase cloud (middle) | Altocumulus (Ac) | Frigid zone | 2–4 km | Composed primarily of water droplets; however, they can also be composed of ice crystals if T is low enough. |
| | Altostratus (As) | Temperate zone | 2–7 km | |
| | Nimbostratus (Ns) | Tropical region | 2–8 km | |
| Ice cloud (high) | Cirrus (Ci) | Frigid zone | 3–8 km | Typically thin and white in appearance, but can appear in various colours when the sun is low on the horizon. |
| | Cirrocumulus (Cc) | Temperate zone | 5–13 km | |
| | Cirrostratus (Cs) | Tropical region | 6–18 km | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, H.; Zheng, H.; Han, C.; Wang, H.; Miao, M. Onboard Spectral and Spatial Cloud Detection for Hyperspectral Remote Sensing Images. Remote Sens. 2018, 10, 152. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10010152
