1. Introduction

Recent years have witnessed significant developments in computer vision. An enormous amount of research effort has gone into vision-based tasks, such as object tracking [1,2,3,4,5,6] and saliency detection [7,8,9,10]. As a core field of computer vision, visual tracking [4,5,6,11] plays an active role in a wide range of applications, including driverless vehicles, robotics, traffic analysis, medical imaging, motion analysis, and many others.

An efficient feature representation is critical to the performance of object tracking. Gradient and color features are the most popular single feature types. In particular, color features, such as color names (CN), help capture rich color characteristics, and histogram of oriented gradient (HOG) [12] features are adept at capturing abundant gradient information. Based on these feature descriptions, a variety of techniques for target tracking have been proposed. For instance, FragTrack [13] builds object appearance models by exploiting multiple parts of the target. Babenko et al. [14] presented a multiple instance learning (MIL) algorithm that develops a discriminative model by grouping ambiguous positive and negative samples into bags. Grabner et al. [15] utilized a novel on-line Adaboost feature selection method (OAB), which benefits considerably from on-line training. In [2], a structural local sparse representation is applied to the tracking task, where both partial and spatial information are exploited. Zhang et al. [16] modeled the relationship between an object and its spatiotemporal context within a Bayesian framework. The extended Lucas Kanade (ELK) method [17] considers two log-likelihood terms that are related to information regarding object pixels or background affiliation, in addition to the standard LK template matching term. Most of the aforementioned techniques depend on intensity or texture information when characterizing a given image. However, for real-time tasks on a standard PC, it is difficult for them to process a large number of frames per second without resorting to parallel computation [17]. From this viewpoint, correlation filters [18,19,20,21,22] show their strengths in both speed and accuracy, because the tracking problem is converted from the spatial domain to the frequency domain with the fast Fourier transform (FFT). In so doing, convolution can be replaced with element-wise multiplication to achieve fast learning and target detection.
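The speed advantage of correlation filters rests on the convolution (correlation) theorem. As an illustrative statement only, with $x$ (image patch), $h$ (real-valued filter), and $g$ (response map) being our own notation rather than symbols taken from the cited trackers, circular correlation in the image domain becomes an element-wise product in the frequency domain:

```latex
g = x \star h
\quad\Longleftrightarrow\quad
\hat{g} = \hat{x} \odot \hat{h}^{*},
\qquad
g = \mathcal{F}^{-1}\!\left( \hat{x} \odot \hat{h}^{*} \right)
```

Here $\hat{\cdot}$ denotes the discrete Fourier transform, $\odot$ the element-wise product, and ${}^{*}$ the complex conjugate (which argument is conjugated depends on the correlation convention adopted). For a patch with $n$ pixels, evaluating the response at every cyclic shift costs $O(n^2)$ operations directly but only $O(n \log n)$ with the FFT.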

Although a high tracking speed may be obtained, long-term tracking can often result in model drift. To ensure the stability of model updating in object tracking, Kalal et al. [1] decomposed the overall tracking task into the subtasks of tracking, learning, and detection (TLD), where tracking and detection reinforce each other. However, if the location of an object is predicted only with respect to the previous frame, the appearance model may suffer from noisy samples. In particular, when the object becomes occluded, the tracker will fail immediately. Taking this into account, Hare et al. [2] adjusted the appearance model in a more reliable way, learning a joint structured output (Struck) to predict the object location. Apart from using a correlation filter, Zhu et al. [21] introduced an additional filter for detection, which greatly alleviated the problems of location error and model drift caused by serious occlusion. Benefiting from temporal context and an online redetector, the method in [22] is robust to appearance variation.

Note that following the increasing availability of low-cost, commercially available unmanned aerial vehicles (UAVs), more and more research efforts have been focusing on object detection and tracking by UAV videos. For example, Logoglu et al. [

23] designed a feature-based moving object detection method for aerial videos. Fu et al. [

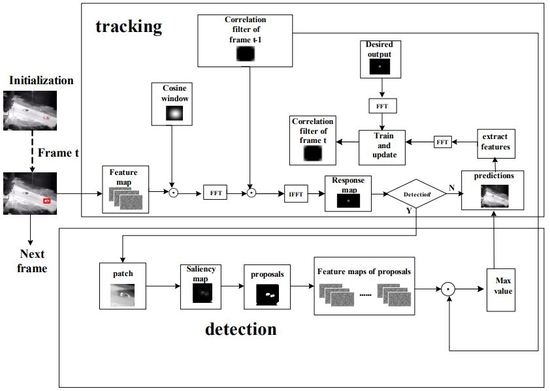

24] proposed a technique named ORVT, for onboard robust visual tracking of targets in aerial images using a reliable global-local object model. However, all methods mentioned above cannot cope well with challenges appearing in such videos, which typically involve illumination variation, background clutter, and occlusion. To address these issues we propose a robust tracking approach for UAV videos, which offers three main contributions: (1) Composed of the HOG and dimension-reduced CN features, fused features are introduced to correlation filter in order to improve the robustness of appearance model in describing the target. (2) To deal with background clutter and meanwhile, and to reduce the risk of model drifts caused by occlusion, saliency proposals are introduced as posterior information to relocate the object. (3) A new adaptive template update method is proposed to further alleviate the problem of model drift that is caused by occlusion or distraction. The effectiveness of this approach is demonstrated through systematic comparisons against other techniques.

The rest of this paper is organized as follows. Section 2 discusses relevant previous work on correlation filters and saliency detection. Under the general framework of the correlation filter, Section 3 describes our approach. Section 4 presents an evaluation of the proposed approach and a comparative study with state-of-the-art techniques. Section 5 discusses the tracking speed of different methods and assesses the effects of each contribution made by the proposed work. Finally, Section 6 concludes this study and points out directions for further research.

4. Experimental Results

We provide representative experimental results in this section. The proposed tracker is implemented in Matlab 2014 on a PC with a 3.4 GHz processor and 16 GB RAM, without any sophisticated program optimization. In order to present an objective evaluation of the performance of the proposed approach, we conduct experiments on two datasets, namely, the VIVID dataset [39] and the UAV123 dataset [40], for both qualitative and quantitative evaluations. In these experiments, the parameters are fixed for all of the sequences, with two of them set to 0.2 and 0.25, respectively. In addition, $L$ is set to 7, and the candidate region for the correlation filter is set to three times the size of the object under tracking.

We compare the proposed tracker with a range of state-of-the-art trackers, including TLD [1], DSST [19], BACF [20], ORVT [24], Staple [30], SRDCF [31], ECO_HC [32], KCFDP [41], BIT [42], and fDSST [43]. Among these trackers, TLD introduces a detection method into the tracking problem and performs well when occlusion exists, while DSST, KCFDP, SRDCF, Staple, BACF, ECO_HC, and fDSST use correlation filters to improve the tracking speed. ORVT is an onboard robust aerial tracking algorithm that relies on a reliable global-local object model. Additionally, BIT is a biologically inspired tracker that extracts low-level biologically inspired features while imitating an advanced learning mechanism to combine generative and discriminative models for target location. For a fair comparison, we use the publicly available code of the compared trackers.

We follow the standard evaluation metrics for object tracking algorithms in two aspects: the precision rate and the success rate. The precision rate shows the percentage of successfully tracked frames on which the center location error (CLE) of a tracker is within a given threshold (e.g., 20 pixels), with the CLE defined as the average Euclidean distance between the center locations of the targets and the manually labeled ground truths. A tracking result in a frame is considered successful if $\frac{|r_t \cap r_g|}{|r_t \cup r_g|} > \theta$ for a threshold $\theta \in [0, 1]$, where $r_t$ and $r_g$ denote the bounding-box regions of the tracked result and the ground truth, respectively, $\cap$ and $\cup$ represent the intersection and union of two regions, respectively, and $|\cdot|$ denotes the number of pixels in the region. Thus, the success rate is defined as the percentage of frames where the overlap rates are greater than a threshold $\theta$. Normally, the threshold is set to 0.5.
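The two metrics described above can be sketched in a few lines of Python. This is a minimal illustration rather than the evaluation code used in the experiments: the box format `(x, y, w, h)` with a top-left origin and all function names are assumptions made for the example, and continuous box areas stand in for pixel counts.

```python
import math


def center_error(box_a, box_b):
    """Euclidean distance between the centers of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    return math.hypot((ax + aw / 2) - (bx + bw / 2),
                      (ay + ah / 2) - (by + bh / 2))


def overlap_ratio(box_a, box_b):
    """Intersection over union of two (x, y, w, h) boxes."""
    ax, ay, aw, ah = box_a
    bx, by, bw, bh = box_b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def precision_rate(tracked, truth, threshold=20.0):
    """Fraction of frames whose center error is within `threshold` pixels."""
    hits = sum(center_error(t, g) <= threshold for t, g in zip(tracked, truth))
    return hits / len(truth)


def success_rate(tracked, truth, threshold=0.5):
    """Fraction of frames whose overlap ratio exceeds `threshold`."""
    hits = sum(overlap_ratio(t, g) > threshold for t, g in zip(tracked, truth))
    return hits / len(truth)
```

Sweeping `threshold` over a range of values and plotting the resulting rates gives the precision and success plots commonly reported for tracking benchmarks.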

We present the results under one-pass evaluation (OPE) using the average precision and success rate over all sequences. OPE is the most common evaluation method: it runs each tracker once on each sequence, initializes the tracker with the ground-truth state of the object in the first frame, and reports the average precision or success rate across all the results obtained.
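The OPE protocol can be outlined as a short loop. The sketch below is illustrative only: the tracker interface (`init` and `update`), the `StaticTracker` placeholder, and the choice to average per-sequence scores are assumptions made for the example, not details taken from the compared trackers or from the benchmark toolkits.

```python
class StaticTracker:
    """Hypothetical placeholder tracker that never moves its initial box."""

    def init(self, frame, box):
        self.box = box

    def update(self, frame):
        return self.box


def one_pass_evaluation(make_tracker, sequences, metric):
    """Run a fresh tracker once per sequence and average the metric.

    `sequences` is a list of (frames, ground_truth_boxes) pairs, and
    `metric(tracked_boxes, ground_truth_boxes)` returns one score per sequence.
    """
    scores = []
    for frames, truth in sequences:
        tracker = make_tracker()
        # OPE initializes the tracker with the first-frame ground truth.
        tracker.init(frames[0], truth[0])
        tracked = [truth[0]] + [tracker.update(frame) for frame in frames[1:]]
        scores.append(metric(tracked, truth))
    return sum(scores) / len(scores)
```

Any per-sequence score, such as a precision or success rate at a fixed threshold, can be passed as `metric`.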

4.1. Experiments on VIVID Dataset

There are eleven video sequences in the VIVID dataset. Apart from motion blur and fast motion, these video sequences also suffer from further difficulties such as occlusion, scale variation, background clutter, and low resolution. In the VIVID dataset, the ground truth is given only every ten frames. To evaluate the trackers more accurately, we annotate the ground truth for all eleven videos in full, using the official data as a reference, for quantitative evaluation.

The experimental results on these nine videos are summarized in Table 1 and Table 2, which show the overall rates of the success plots and those of the precision plots, respectively. As can be seen from these tables, our tracker performs reliably and achieves the best results overall. In particular, on the first three video sequences, where severe occlusion occurs, our method performs very well thanks to saliency-based redetection and adaptive template updating, while the other trackers lose the target under these circumstances. However, the remaining video sequences are frequently affected by scale change, rotation, and similar objects, which also degrade the performance of our algorithm.

Figure 4 shows the qualitative evaluation on the VIVID dataset. Figure 4a illustrates the performance of our approach and the compared algorithms on the sequence pktest01. Only our method continues to track robustly after more than 100 frames of occlusion. By redetecting the object with saliency information, the proposed tracker is more robust than the other trackers. In the sequence pktest03, in addition to motion blur and fast motion, the other main challenges for tracking are illumination variation, serious occlusion, and background clutter. From the last picture of Figure 4b, it can be seen that the full occlusion of the car is handled well by our tracker, while the other methods drift away from the target. This implies that saliency detection makes an important contribution to this performance. In addition, almost every frame is subject to a varying degree of background clutter. Note that the target is so small that it almost blends into the background, with certain texture and other details lost. The results show that only our algorithm deals successfully with the background clutter, as the other methods fail to track the target completely. The fused features help improve the robustness of the proposed appearance model, and the adaptive model update strategy helps reduce model drift. Both measures contribute to the strong performance of our method.

As shown in Figure 4c–e, where there is no significant occlusion, our method follows the target as reliably as the other trackers. It works even when similar cars appear in the sequence egtest02. However, when scale variation and rotation occur, the estimated scales of the bounding boxes are not sufficiently accurate, which decreases the accuracy of our tracker. For the sequences egtest01 and redteam, the background is similar to the edge of the target. If the response of the correlation filter stays below the threshold for a long time, our tracker automatically tries to relocate the target by exploiting visual saliency. This strategy may gradually introduce noise from the background around the target into the template, leading to slight model drift.
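The redetection condition mentioned here (a filter response that stays below a threshold for some time) can be illustrated with a small counter. This is a hedged sketch only: the class name, the `patience` parameter, and the numeric values are hypothetical and are not the settings or the exact rule used by the proposed tracker.

```python
class RedetectionTrigger:
    """Signals redetection after `patience` consecutive low-response frames."""

    def __init__(self, threshold, patience):
        self.threshold = threshold  # hypothetical response threshold
        self.patience = patience    # hypothetical number of consecutive frames
        self.low_count = 0

    def update(self, peak_response):
        """Return True when saliency-based redetection should be attempted."""
        if peak_response < self.threshold:
            self.low_count += 1
        else:
            self.low_count = 0
        if self.low_count >= self.patience:
            self.low_count = 0  # start counting again after a redetection
            return True
        return False
```

Requiring several consecutive low responses, rather than a single one, keeps brief dips in the response from triggering an unnecessary redetection.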

4.2. Experiments on UAV123 Dataset

To further evaluate the performance of the proposed approach, we conduct experiments on twenty challenging video sequences selected from the UAV123 dataset for both quantitative and qualitative analysis. The UAV123 dataset supports the evaluation of different trackers on a number of fully annotated HD videos captured from a professional-grade UAV. It complements existing benchmarks by establishing the aerial component of tracking while providing a more comprehensive sampling of tracking nuisances that are ubiquitous in low-altitude UAV videos. Apart from aspect ratio change (ARC) and fast motion (FM), these video sequences are also affected by several adverse conditions such as background clutter (BC), camera motion (CM), full occlusion (FOC), illumination variation (IV), low resolution (LR), out of view (OV), partial occlusion (POC), similar object (SOB), scale variation (SV), and viewpoint change (VC). Thus, the experiments carried out herein include all typical challenges involved in real-world aerial tracking problems.

Ranging from 535 to 1783 frames, the twenty selected sequences involve all the challenging factors in the UAV123 dataset at different resolutions. These sequences cover various scenes, such as roads, buildings, fields, and beaches. The targets include aerial vehicles, people, trucks, boats, and cars. Detailed information on these sequences is listed in Table 3.

Table 4 and Table 5 exhibit the overall rates of the success plots and those of the precision plots on the twenty sequences, respectively. It can be seen that our tracker achieves the best performance on average, demonstrating its robustness in dealing with object tracking tasks involving different challenging factors and various background types.

We also perform an attribute-based comparison with other methods on this subset of the UAV123 dataset. Figure 5 and Figure 6 show the success plots and precision plots for the twelve attributes, respectively. As can be seen from these results, our tracker performs reliably and achieves the best, or at least a close to best, result in most cases. Specifically, for the challenging factors that are amplified in aerial tracking, including CM, BC, SV, ARC, FM, IV, FOC, and VC, our tracker achieves promising results, benefitting from the robustness of the fused features as well as from the appearance template and model updating strategy. For videos with fast-moving objects, camera motion, and background clutter, the fused features are better able to capture information from the objects and therefore lead to better results than the classic single-feature trackers. In addition, when the aspect ratio of an object changes significantly, our adaptive appearance template updating strategy can adjust the template to the appearance of the object. Moreover, thanks to the high-confidence model updating method, background noise is suppressed as much as possible when serious occlusion exists in aerial videos. Nevertheless, our tracker may not perform equally well when dealing with low-resolution images and targets that are out of view. This is likely because such factors usually create very serious problems for saliency detection, resulting in model drift.

Figure 7 illustrates qualitative evaluations of different trackers on example sequences selected from the UAV123 dataset. In the sequence person16, the background has a color similar to that of the person, which hampers all of the trackers to varying degrees. Owing to the use of saliency information, our tracker is able to relocate the object after it has been occluded by the tree and outperforms the state-of-the-art tracking methods. As shown in Figure 7b, the sequence uav1_3 contains almost all the possible challenges in aerial tracking, especially low resolution and serious background clutter. Benefiting from the target redetection strategy, our tracker follows the target successfully throughout the sequence, while the others locate the target correctly only occasionally. The robustness of the fused features also contributes to the good performance of our tracker. However, for certain sequences with serious scale variation and similar objects, for example the sequence car10, our tracker slightly underperforms in comparison to several state-of-the-art algorithms (e.g., ECO_HC, BACF, and Staple). Under such circumstances, our tracker may incur small model drift, but it does not lose the target.

6. Conclusions

In this paper, we have proposed a robust tracking method for UAV videos based on a fused-feature correlation filter and saliency detection. The correlation filter that combines the HOG and dimension-reduced CN features contributes significantly to tracking performance when dealing with challenging factors such as occlusion, noise, and illumination. To handle serious occlusion, this work has introduced saliency information into the tracker for redetection, thereby reducing background interference. Moreover, an adaptive model update strategy that is both robust and computationally efficient is adopted to alleviate possible model drift. Experimental investigations have demonstrated, both quantitatively and qualitatively, that our approach achieves favorable average performance on two popular aerial tracking datasets in comparison with the state-of-the-art methods. Given its reliability and robustness, the proposed tracker can be employed in a wide variety of UAV video applications (beyond those related to surveillance), such as wildlife monitoring, activity control, navigation/localization, and obstacle/object avoidance, especially when real-time processing is mandatory, as in the case of rescue or defense purposes.

Since the proposed approach is generic for aerial videos, we plan to develop more robust fused features and to speed up the redetection method in future work, so that it operates in real time. Also, in this work, it has been assumed that each feature channel is independent of the rest, and hence no interaction between such features has been considered. As such, a channel-wise filter was adopted. However, it would be interesting to explore the interconnections among the information conveyed by different channels and to introduce a general linear filter to deal with such cases.