Towards a 20 m Global Building Map from Sentinel-1 SAR Data

Abstract

1. Introduction

2. Methodology

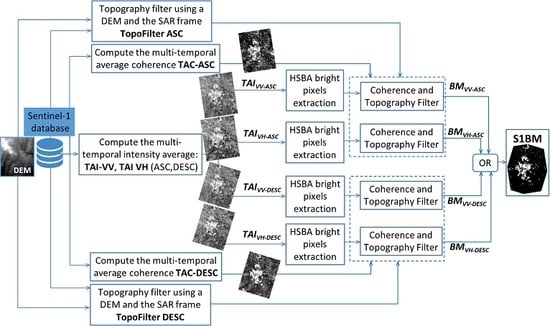

2.1. SAR Feature Extraction and Algorithm Architecture for Identifying Buildings

- (a) Temporal average intensity (TAI) VV-ASC & -DESC: for these features, we average a multi-temporal set of co-polarization SAR intensity images. Ascending and descending orbits are processed separately, and the two resulting features are used to identify buildings, i.e., areas of high backscattering. Both features are derived from the co-polarization channel because this configuration favors the detection of the double-bounce effect typically observed in urban areas.

- (b) TAI VH-ASC & -DESC: these features are obtained as in (a), but from the cross-polarization channel, which is better suited to detecting dihedral-shaped buildings that are not aligned with the orbit direction.

- (c) Temporal average coherence (TAC) VV-ASC & -DESC: the multi-temporal coherence is obtained by averaging the coherences of the successive interferometric image pairs in the multi-temporal set. It is computed for both ascending and descending orbits, using only the co-polarization channel.
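The three feature types can be illustrated with a short NumPy sketch. It is not the authors' processing chain: it assumes a co-registered, calibrated stack of complex single-look images with shape (time, rows, cols), estimates coherence with the usual boxcar sample estimator over a hypothetical 5 × 5 window, and uses helper names of our own choosing (`box_mean`, `coherence`, `temporal_average_intensity`, `temporal_average_coherence`). As in (c), TAC averages the coherence of successive pairs (t, t+1).

```python
import numpy as np


def box_mean(a: np.ndarray, win: int) -> np.ndarray:
    """Mean over a win x win moving window (edge-padded); accepts real or complex 2-D arrays."""
    if win % 2 == 0:
        raise ValueError("window size must be odd")
    pad = win // 2
    padded = np.pad(a, pad, mode="edge")
    # Summed-area table with a leading row and column of zeros.
    sat = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1), dtype=padded.dtype)
    sat[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    rows, cols = a.shape
    total = (sat[win:win + rows, win:win + cols] - sat[:rows, win:win + cols]
             - sat[win:win + rows, :cols] + sat[:rows, :cols])
    return total / (win * win)


def coherence(s1: np.ndarray, s2: np.ndarray, win: int = 5) -> np.ndarray:
    """Sample coherence magnitude |<s1 s2*>| / sqrt(<|s1|^2> <|s2|^2>)."""
    num = box_mean(s1 * np.conj(s2), win)
    den = np.sqrt(box_mean(np.abs(s1) ** 2, win) * box_mean(np.abs(s2) ** 2, win))
    return np.abs(num) / np.maximum(den, np.finfo(float).tiny)


def temporal_average_intensity(intensity_stack: np.ndarray) -> np.ndarray:
    """TAI: pixel-wise mean of a (time, rows, cols) stack of intensity images."""
    return intensity_stack.mean(axis=0)


def temporal_average_coherence(slc_stack: np.ndarray, win: int = 5) -> np.ndarray:
    """TAC: pixel-wise mean coherence of the successive pairs (t, t+1) of an SLC stack."""
    pairs = [coherence(slc_stack[t], slc_stack[t + 1], win) for t in range(len(slc_stack) - 1)]
    return np.mean(pairs, axis=0)


# Synthetic example: decorrelating noise everywhere except a phase-stable "building" block.
rng = np.random.default_rng(0)
slc = rng.normal(size=(4, 32, 32)) + 1j * rng.normal(size=(4, 32, 32))
slc[:, 8:16, 8:16] = 10.0  # identical complex value at every date -> coherence 1
tai = temporal_average_intensity(np.abs(slc) ** 2)
tac = temporal_average_coherence(slc)
print(tai[11, 11], tac[11, 11], tac[24:, 24:].mean())
```

The sample coherence is biased upward for small windows, so the noise-only area stays clearly above zero while the stable block reaches one; larger windows reduce this bias at the cost of spatial detail.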

- (i) Identify double-bounce objects, i.e., bright pixels, in all four temporally averaged intensity maps (VV-ASC, VV-DESC, VH-ASC, and VH-DESC) using the hierarchical split-based approach (HSBA) described in the following subsection.

- (ii) From the binary maps generated in step (i), remove all pixels with low coherence according to the two averaged coherence maps obtained from the ascending and descending time series (TAC VV-ASC and TAC VV-DESC).

- (iii) Remove all pixels in mountainous areas potentially affected by foreshortening, using the ascending or descending geometry for the corresponding maps.

- (iv) Merge the four resulting building maps (VV-ASC, VV-DESC, VH-ASC, and VH-DESC) to obtain the final S-1 Buildings Map (S1BM).
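Once thresholds are available, steps (i) to (iv) reduce to a few Boolean mask operations. The sketch below is illustrative only and rests on several assumptions: the HSBA intensity thresholds are passed in as plain numbers, each intensity map is checked against the TAC of its own orbit, foreshortening is flagged where the local incidence angle (LIA) falls below a cut-off, and "merge" is taken to mean a pixel-wise logical OR. The function names (`building_map`, `merge_maps`) and all numeric values are hypothetical.

```python
import numpy as np


def building_map(tai, threshold, tac, coh_min, lia_deg, lia_min_deg):
    """Steps (i)-(iii) for one intensity map (one polarization, one orbit)."""
    bright = tai > threshold                    # (i) bright, double-bounce candidates
    coherent = tac >= coh_min                   # (ii) keep temporally stable pixels only
    not_foreshortened = lia_deg >= lia_min_deg  # (iii) drop slopes steeply facing the sensor
    return bright & coherent & not_foreshortened


def merge_maps(maps):
    """Step (iv): pixel-wise logical OR of the per-channel, per-orbit maps."""
    return np.logical_or.reduce(maps)


# Toy 2 x 2 scene; every number below is illustrative only.
tac = {"ASC": np.array([[0.8, 0.8], [0.2, 0.8]]), "DESC": np.full((2, 2), 0.8)}
lia = {"ASC": np.array([[35.0, 35.0], [35.0, 5.0]]), "DESC": np.full((2, 2), 35.0)}
tai = {
    ("VV", "ASC"): np.array([[0.9, 0.1], [0.9, 0.9]]),
    ("VV", "DESC"): np.full((2, 2), 0.1),
    ("VH", "ASC"): np.full((2, 2), 0.1),
    ("VH", "DESC"): np.array([[0.1, 0.9], [0.1, 0.1]]),
}
maps = [building_map(img, 0.5, tac[orbit], 0.5, lia[orbit], 15.0)
        for (_, orbit), img in tai.items()]
s1bm = merge_maps(maps)
print(s1bm)
```

In the toy scene, two of the three bright VV-ASC pixels are discarded, one for low coherence and one for a small local incidence angle, and the surviving pixel is combined with the VH-DESC detection in the merged map.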

2.2. Identification of Bright Pixels in the Co- and Cross-Polarization SAR Channels

- (a) The histogram of pixel values in the considered tile must be bimodal (see Equation (1)).

- (b) The pixels belonging to the class of interest (BC) must make up at least 20% of the considered tile.

- (c) The mode of the probability density function (PDF) of BC must be higher than a predefined value.
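The three criteria can be checked per tile once a two-component model has been fitted to the tile's histogram. This sketch assumes that the histogram (e.g. of dB-scaled intensity) is modeled as a mixture of two Gaussians, one for the background and one for BC, and uses Ashman's D coefficient, D = √2 |μ₁ − μ₂| / √(σ₁² + σ₂²), with D > 2 as the bimodality test; whether this matches the exact form of Equation (1) is an assumption. The fitting step is omitted, and `GaussianFit`, `tile_is_valid`, and `min_mode` are hypothetical names. Because the mode of a Gaussian equals its mean, criterion (c) reduces to comparing the BC mean with the predefined value.

```python
import math
from dataclasses import dataclass


@dataclass
class GaussianFit:
    """One fitted mixture component (hypothetical container, e.g. in dB)."""
    mean: float
    std: float
    weight: float  # fraction of the tile's pixels assigned to this component


def ashman_d(a: GaussianFit, b: GaussianFit) -> float:
    """Ashman's D: separation of two Gaussian components relative to their spreads."""
    return math.sqrt(2.0) * abs(a.mean - b.mean) / math.sqrt(a.std ** 2 + b.std ** 2)


def tile_is_valid(background: GaussianFit, bc: GaussianFit, min_mode: float,
                  d_min: float = 2.0, min_fraction: float = 0.20) -> bool:
    """Check criteria (a)-(c) for one tile."""
    bimodal = ashman_d(background, bc) > d_min   # (a) clearly separated modes
    large_enough = bc.weight >= min_fraction     # (b) BC covers at least 20% of the tile
    bright_enough = bc.mean > min_mode           # (c) the mode of a Gaussian is its mean
    return bimodal and large_enough and bright_enough


background = GaussianFit(mean=-12.0, std=2.0, weight=0.7)
bc = GaussianFit(mean=0.0, std=2.0, weight=0.3)
print(ashman_d(background, bc))                                      # D for this tile
print(tile_is_valid(background, bc, min_mode=-5.0))                  # all three criteria hold
print(tile_is_valid(background, GaussianFit(0.0, 2.0, 0.1), -5.0))   # fails criterion (b)
```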

3. Test Cases and Dataset

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Test case | OA | K-Coefficient | S1BM | GUF Building | GUF Non-Building | Total |
|---|---|---|---|---|---|---|
| Egypt | 94.49% | 0.40 | Building | 1,098,252 | 922,663 | 2,020,915 |
| | | | Non-Building | 1,958,899 | 48,389,217 | 50,348,116 |
| | | | Total | 3,057,151 | 49,311,880 | 52,369,031 |
| Israel | 91.55% | 0.41 | Building | 3,243,643 | 4,441,721 | 7,685,364 |
| | | | Non-Building | 3,171,558 | 79,280,291 | 82,451,849 |
| | | | Total | 6,415,201 | 83,722,012 | 90,137,213 |
| Portugal | 97.93% | 0.47 | Building | 1,615,984 | 1,544,888 | 3,160,872 |
| | | | Non-Building | 1,937,363 | 163,726,890 | 165,664,253 |
| | | | Total | 3,553,347 | 165,271,778 | 168,825,125 |
| Tunisia | 95.60% | 0.29 | Building | 1,409,270 | 5,525,183 | 6,934,453 |
| | | | Non-Building | 672,887 | 133,535,295 | 134,208,182 |
| | | | Total | 2,082,157 | 139,060,478 | 141,142,635 |
| Turkey | 96.94% | 0.45 | Building | 1,108,717 | 2,035,978 | 3,144,695 |
| | | | Non-Building | 484,987 | 78,972,860 | 79,457,847 |
| | | | Total | 1,593,704 | 81,008,838 | 82,602,542 |
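The OA and K-Coefficient values can be re-derived from the confusion matrices above. The following Python sketch applies the standard definitions of overall accuracy and Cohen's kappa; it assumes the matrices are arranged with S1BM classes as rows and GUF classes as columns, although both statistics are unchanged if the matrix is transposed, so the orientation does not affect the result. The function name is our own.

```python
def overall_accuracy_and_kappa(matrix):
    """OA and Cohen's kappa for a square confusion matrix (rows: S1BM, columns: GUF)."""
    k = len(matrix)
    n = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(k)) / n            # agreement on the diagonal
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2  # chance agreement
    return observed, (observed - expected) / (1.0 - expected)


# [[S1BM B & GUF B, S1BM B & GUF NB], [S1BM NB & GUF B, S1BM NB & GUF NB]], reported OA, kappa
cases = {
    "Egypt": ([[1_098_252, 922_663], [1_958_899, 48_389_217]], 0.9449, 0.40),
    "Israel": ([[3_243_643, 4_441_721], [3_171_558, 79_280_291]], 0.9155, 0.41),
    "Portugal": ([[1_615_984, 1_544_888], [1_937_363, 163_726_890]], 0.9793, 0.47),
    "Tunisia": ([[1_409_270, 5_525_183], [672_887, 133_535_295]], 0.9560, 0.29),
    "Turkey": ([[1_108_717, 2_035_978], [484_987, 78_972_860]], 0.9694, 0.45),
}
results = {}
for name, (matrix, oa_rep, k_rep) in cases.items():
    oa, kappa = overall_accuracy_and_kappa(matrix)
    results[name] = (oa, kappa)
    print(f"{name}: OA {oa:.2%} (reported {oa_rep:.2%}), kappa {kappa:.3f} (reported {k_rep:.2f})")
```

The recomputed values agree with the reported ones to within 0.1 percentage points for OA and 0.01 for the K-Coefficient.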

S1BM & GUF Cross-Comparison: Overall Accuracy, K-Coefficient

| Test case | VV-ASC&DESC, VH-ASC&DESC, CC, LIA | VV-ASC&DESC, VH-ASC&DESC | VV-ASC, CC, LIA | VV-DESC, CC, LIA | VH-ASC, CC, LIA | VH-DESC, CC, LIA |
|---|---|---|---|---|---|---|
| Egypt | 94%, 0.40 | 93%, 0.36 | 94%, 0.24 | 94%, 0.26 | 94%, 0.25 | - |
| Israel | 91%, 0.41 | 65%, 0.16 | 92%, 0.32 | 92%, 0.29 | 91%, 0.31 | 91%, 0.29 |
| Portugal | 98%, 0.47 | 83%, 0.12 | 98%, 0.29 | 98%, 0.42 | 98%, 0.24 | 98%, 0.33 |
| Tunisia | 96%, 0.30 | 90%, 0.16 | 98%, 0.35 | 97%, 0.14 | 98%, 0.40 | 98%, 0.28 |
| Turkey | 97%, 0.45 | 90%, 0.20 | 98%, 0.40 | 97%, 0.38 | 98%, 0.48 | 98%, 0.49 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Chini, M.; Pelich, R.; Hostache, R.; Matgen, P.; Lopez-Martinez, C. Towards a 20 m Global Building Map from Sentinel-1 SAR Data. Remote Sens. 2018, 10, 1833. https://doi.org/10.3390/rs10111833
