Super-Resolution Reconstruction and Its Application Based on Multilevel Main Structure and Detail Boosting

Abstract

1. Introduction

2. Related Work

3. Method

3.1. RTV Algorithm

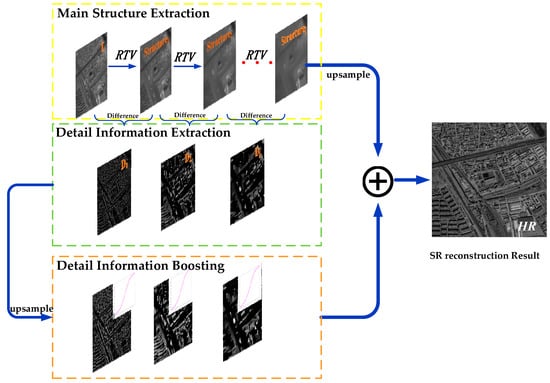
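Relative total variation (RTV) [27] separates main structure from texture by comparing two windowed quantities in each direction:

- the windowed total variation D, a weighted sum of gradient magnitudes;
- the windowed inherent variation L, the magnitude of the weighted sum of signed gradients.

Inside oscillating texture the signed gradients cancel, so L stays small while D stays large. Along a main edge the gradients share one sign, so D and L nearly coincide.

The sketch below, assuming NumPy, evaluates only this per-pixel measure D_x/(L_x+ε) + D_y/(L_y+ε). It does not solve the full RTV smoothing optimization. The window scale `sigma`, the constant `eps` and all function names are illustrative choices, not values from this paper. The Gaussian weights are normalized here, which only rescales the effective size of `eps`.

```python
import numpy as np


def gaussian_kernel1d(sigma: float) -> np.ndarray:
    """Normalized 1-D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()


def gaussian_filter2d(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian filter; borders are edge-replicated so the output keeps the input size."""
    k = gaussian_kernel1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Filter along rows, then along columns; 'valid' trims the padding back off.
    tmp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, tmp)


def rtv_map(S: np.ndarray, sigma: float = 3.0, eps: float = 1e-3) -> np.ndarray:
    """Per-pixel relative total variation D_x/(L_x+eps) + D_y/(L_y+eps) (hypothetical helper)."""
    S = np.asarray(S, dtype=float)
    # Forward differences; the last column/row has no forward neighbour and is set to 0.
    dx = np.zeros_like(S)
    dx[:, :-1] = S[:, 1:] - S[:, :-1]
    dy = np.zeros_like(S)
    dy[:-1, :] = S[1:, :] - S[:-1, :]
    # Windowed total variation D: Gaussian-weighted average of |gradient|.
    Dx, Dy = gaussian_filter2d(np.abs(dx), sigma), gaussian_filter2d(np.abs(dy), sigma)
    # Windowed inherent variation L: magnitude of the Gaussian-weighted average of the signed gradient.
    Lx, Ly = np.abs(gaussian_filter2d(dx, sigma)), np.abs(gaussian_filter2d(dy, sigma))
    return Dx / (Lx + eps) + Dy / (Ly + eps)
```

On a one-pixel stripe texture the measure is large, because D ≈ 1 and L ≈ 0. On a single step edge it stays below 1, because D equals L there.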

3.2. Multilevel Main Structure and Detail-Information Extraction

3.3. Detail Boosting and Fusion

| Step | Algorithm 1. Multilevel Main Structure and Detail-Boosting SRR |
|---|---|
| 1 | Input: image I, up-scaling factor , |
| 2 | Initialization |
| 3 | For band = 1:n do |
| 4 | Decompose and obtain multilevel main structure, , |
| 5 | Calculate multilevel detail information, |
| 6 | Up-sample multilevel main structure and texture detail, and get and Dj |
| 7 | Detail boosting with Equation (10) |
| 8 | Fusion with Equation (11) |
| 9 | End for |
| 10 | Output: HR image |
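The loop in Algorithm 1 can be sketched as follows. This is a hypothetical illustration, not the authors' implementation, and it makes three substitutions:

- Equations (10) and (11) are not reproduced in this section, so boosting is a placeholder constant gain per level and fusion is a plain sum of the up-sampled main structure and the boosted detail layers.
- A Gaussian filter of increasing scale stands in for the RTV-based main-structure extraction.
- Bilinear interpolation stands in for the up-sampling method the paper actually uses.

The scales, the gains and every function name are assumptions.

```python
import numpy as np


def gaussian_filter2d(img, sigma):
    """Separable, edge-replicated Gaussian filter (stand-in for RTV smoothing)."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="edge")
    tmp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, tmp)


def decompose(img, sigmas):
    """Multilevel split: S_0 = img, S_j = smooth(S_{j-1}), D_j = S_{j-1} - S_j.

    Returns the coarsest main structure S_n and the detail layers D_1..D_n,
    so that img == S_n + sum(D_j) up to rounding.
    """
    structure, details = img, []
    for s in sigmas:
        smoother = gaussian_filter2d(structure, s)
        details.append(structure - smoother)
        structure = smoother
    return structure, details


def upsample_bilinear(img, factor):
    """Bilinear up-sampling by a possibly non-integer factor (pixel-centre aligned)."""
    h, w = img.shape
    H, W = int(round(h * factor)), int(round(w * factor))
    ys = (np.arange(H) + 0.5) / factor - 0.5
    xs = (np.arange(W) + 0.5) / factor - 0.5
    # np.interp clamps outside [0, n-1], which replicates the border pixels.
    rows = np.array([np.interp(xs, np.arange(w), r) for r in img])       # (h, W)
    return np.array([np.interp(ys, np.arange(h), c) for c in rows.T]).T  # (H, W)


def multilevel_detail_boost_sr(image, factor, sigmas=(1.0, 2.0, 4.0), gains=(1.5, 1.3, 1.1)):
    """Hypothetical sketch of Algorithm 1 for an H x W or H x W x bands array."""
    if len(gains) != len(sigmas):
        raise ValueError("need one boosting gain per decomposition level")
    image = np.asarray(image, dtype=float)
    bands = image[..., None] if image.ndim == 2 else image
    out = []
    for b in range(bands.shape[-1]):                      # "For band = 1:n do"
        base, details = decompose(bands[..., b], sigmas)  # steps 4-5
        hr = upsample_bilinear(base, factor)              # step 6, main structure
        for d, g in zip(details, gains):
            # Steps 6-8: up-sample each detail layer, boost it with a constant gain
            # (placeholder for Equation (10)) and add it in (placeholder for Equation (11)).
            hr += g * upsample_bilinear(d, factor)
        out.append(hr)
    result = np.stack(out, axis=-1)
    return result[..., 0] if image.ndim == 2 else result
```

A call such as `multilevel_detail_boost_sr(lr_image, 2.5)` handles non-integer factors. With every gain set to 1 the output reduces to plain bilinear up-sampling of the input, for two reasons: the decomposition telescopes (main structure plus all detail layers equals the input), and interpolation is linear. Only gains above 1 actually amplify detail.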

3.4. Objective Evaluation

4. Experimental Analysis and Discussion

4.1. Simulation Image SR Experiment

4.2. Real Remote-Sensing Image SR Experiment

4.3. Discussion

- (1) To fully extract the information in a single remote-sensing image, a multilevel decomposition model is proposed to extract the multilevel main structure and texture detail.

- (2) A novel detail-boosting function is proposed to enhance the multilevel detail information.

- (3) A flexible super-resolution reconstruction (SRR) method is realized using a single low-resolution (LR) image without any auxiliary information.

5. Applications

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Bätz, M.; Koloda, J.; Eichenseer, A.; Kaup, A. Multi-image super-resolution using a locally adaptive denoising-based refinement. In Proceedings of the 2016 IEEE 18th International Workshop on Multimedia Signal Processing, Montreal, QC, Canada, 21–23 September 2016; pp. 1–6.
2. Nayak, R.; Harshavardhan, S.; Patra, D. Morphology based iterative back-projection for super-resolution reconstruction of image. In Proceedings of the 2014 2nd International Conference on Emerging Technology Trends in Electronics, Communication and Networking (ET2ECN), Surat, India, 26–27 December 2014; IEEE: Piscataway, NJ, USA, 2015; pp. 1–6.
3. Zhu, H.; Tang, X.; Xie, J. Spatio-Temporal Super-Resolution Reconstruction of Remote-Sensing Images Based on Adaptive Multi-Scale Detail Enhancement. Sensors 2018, 18, 498.
4. Gou, S.; Liu, S.; Yang, S.; Jiao, L. Remote Sensing Image Super-Resolution Reconstruction Based on Nonlocal Pairwise Dictionaries and Double Regularization. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 7, 4784–4792.
5. Li, L.; Wang, W.; Luo, H.; Ying, S. Super-Resolution Reconstruction of High-Resolution Satellite ZY-3 TLC Images. Sensors 2017, 17, 1062.
6. Kwan, C.; Choi, J.H.; Chan, S.; Zhou, J.; Budavari, B. Resolution enhancement for hyperspectral images: A super-resolution and fusion approach. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, New Orleans, LA, USA, 5–9 March 2017; pp. 6180–6184.
7. Lim, B.; Son, S.; Kim, H.; Nah, S.; Lee, K.M. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 1132–1140.
8. Yang, D.; Li, Z.; Xia, Y.; Chen, Z. Remote sensing image super-resolution: Challenges and approaches. In Proceedings of the IEEE International Conference on Digital Signal Processing, Singapore, 21–24 July 2015; pp. 196–200.
9. Dong, W.; Zhang, L.; Lukac, R.; Shi, G. Sparse Representation Based Image Interpolation with Nonlocal Autoregressive Modeling. IEEE Trans. Image Process. 2013, 22, 1382–1394.
10. Zhang, L.; Wu, X. An edge-guided image interpolation algorithm via directional filtering and data fusion. IEEE Trans. Image Process. 2006, 15, 2226–2238.
11. Zhang, H.; Yang, Z.; Zhang, L.; Shen, H. Super-Resolution Reconstruction for Multi-Angle Remote Sensing Images Considering Resolution Differences. Remote Sens. 2014, 6, 637–657.
12. Chambolle, A. An Algorithm for Total Variation Minimization and Applications. J. Math. Imaging Vis. 2004, 20, 89–97.
13. Fan, C.; Wu, C.; Li, G.; Ma, J. Projections onto Convex Sets Super-Resolution Reconstruction Based on Point Spread Function Estimation of Low-Resolution Remote Sensing Images. Sensors 2017, 17, 362.
14. Irani, M.; Peleg, S. Improving resolution by image registration. CVGIP-Gr. Models Image Process. 1991, 53, 231–239.
15. Liu, C.; Sun, D. On Bayesian Adaptive Video Super Resolution. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 36, 346–360.
16. Freeman, B.; Liu, C. Markov Random Fields for Super-resolution and Texture Synthesis. Adv. Markov Random Fields Vis. Image Process. 2011, 82, 5–7.
17. Shen, H.; Zhang, L. A MAP-Based Algorithm for Destriping and Inpainting of Remotely Sensed Images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1492–1502.
18. Mei, S.; Yuan, X.; Ji, J.; Zhang, Y.; Wan, S.; Du, Q. Hyperspectral Image Spatial Super-Resolution via 3D Full Convolutional Neural Network. Remote Sens. 2017, 9, 1139.
19. Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. arXiv, 2016; arXiv:1609.04802.
20. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.
21. Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.
22. Tai, Y.; Liu, S.; Brown, M.S.; Lin, S. Super resolution using edge prior and single image detail synthesis. In Proceedings of the 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 13–18 June 2010; pp. 2400–2407.
23. Jiang, H.; Yang, J. In-place similarity and its applications in image and video detail enhancement. Electron. Lett. 2016, 52, 1022–1024.
24. Vishnukumar, S.; Wilscy, M. Super-resolution for remote sensing images using content adaptive detail enhanced self examples. In Proceedings of the International Conference on Circuit, Power and Computing Technologies, Nagercoil, India, 18–19 March 2016; pp. 1–5.
25. Sun, J.; Xu, Z.; Shum, H.Y. Gradient profile prior and its applications in image super-resolution and enhancement. IEEE Trans. Image Process. 2010, 20, 1529–1542.
26. Yu, L.; Xu, H.; Xu, Y.; Yang, X. Robust single image super-resolution based on gradient enhancement. In Proceedings of the Signal & Information Processing Association Summit and Conference, Hollywood, CA, USA, 3–6 December 2012; pp. 1–6.
27. Xu, L.; Yan, Q.; Xia, Y.; Jia, J. Structure extraction from texture via relative total variation. ACM Trans. Gr. 2012, 31, 139.
28. Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A Statistical Evaluation of Recent Full Reference Image Quality Assessment Algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.
29. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
30. Tsai, D.Y.; Lee, Y.; Matsuyama, E. Information entropy measure for evaluation of image quality. J. Digit. Imaging 2008, 21, 338–347.
31. Agaian, S.; Panetta, K.; Grigoryan, A. Transform-based image enhancement algorithms with performance measure. IEEE Trans. Image Process. 2001, 10, 367–382.
32. Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.
33. Gioi, R.G.V.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A line segment detector. Image Process. Line 2012, 2, 35–55.

| No. | Figure | Satellite | View/Spectral Mode | Image Size (pixels) | Number of Bands | GSD (m) | Acquisition Date |
|---|---|---|---|---|---|---|---|
| 1 | 3a | ZY3 | panchromatic | 500 × 500 | 1 | 2.1 | 6 June 2016 |
| 2 | 3b | ZY3 | panchromatic | 870 × 870 | 1 | 2.1 | 12 February 2015 |
| 3 | 3c | ZY3 | multi-spectral | 500 × 500 | 3 | 5 | 10 January 2017 |
| 4 | 3d | GF-2 | multi-spectral | 500 × 500 | 3 | 3.2 | 11 November 2017 |

| Image Data | Metric | Bicubic | IBP [14] | SRCNN [20] | VDSR [21] | HE | MMSDB-SR |
|---|---|---|---|---|---|---|---|
| Exp_1 | PSNR (dB) | 25.53 | 26.17 | 26.36 | 26.47 | 13.57 | 26.59 |
| | SSIM | 0.82 | 0.83 | 0.85 | 0.86 | 0.63 | 0.88 |
| Exp_2 | PSNR (dB) | 20.65 | 20.71 | 20.76 | 20.94 | 11.69 | 21.02 |
| | SSIM | 0.82 | 0.83 | 0.85 | 0.87 | 0.62 | 0.89 |
| Exp_3 | PSNR (dB) | 32.09 | 32.13 | 33.05 | 33.08 | 13.39 | 33.11 |
| | SSIM | 0.81 | 0.85 | 0.94 | 0.95 | 0.69 | 0.89 |
| Exp_4 | PSNR (dB) | 29.94 | 30.01 | 30.15 | 30.16 | 13.47 | 30.17 |
| | SSIM | 0.85 | 0.89 | 0.90 | 0.91 | 0.64 | 0.92 |
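The PSNR values in the table above compare each reconstruction against a reference HR image. Below is a minimal NumPy sketch of the standard definition, PSNR = 10·log10(MAX²/MSE), assuming 8-bit data (MAX = 255).

The block-averaging `simulate_lr` helper is a hypothetical stand-in for the simulation's degradation step, which this section does not specify. It handles one band and an integer factor.

```python
import numpy as np


def psnr(reference, reconstructed, max_value=255.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    ref = np.asarray(reference, dtype=float)
    rec = np.asarray(reconstructed, dtype=float)
    if ref.shape != rec.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((ref - rec) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value**2 / mse))


def simulate_lr(hr, factor):
    """Assumed degradation: average non-overlapping factor x factor blocks (single band, integer factor)."""
    hr = np.asarray(hr, dtype=float)
    h, w = (hr.shape[0] // factor) * factor, (hr.shape[1] // factor) * factor
    return hr[:h, :w].reshape(h // factor, factor, w // factor, factor).mean(axis=(1, 3))
```

In a simulation run one would degrade the HR image with `simulate_lr`, reconstruct it at the same factor, then score the result with `psnr` against the original.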

| Image Data | Metric | Bicubic | IBP [14] | SRCNN [20] | VDSR [21] | HE | MMSDB-SR |
|---|---|---|---|---|---|---|---|
| Exp_1 | PSNR (dB) | 24.16 | 24.81 | 25.08 | 25.17 | 13.49 | 25.21 |
| | SSIM | 0.76 | 0.78 | 0.81 | 0.83 | 0.55 | 0.85 |
| Exp_2 | PSNR (dB) | 20.52 | 20.58 | 20.63 | 20.46 | 11.67 | 20.53 |
| | SSIM | 0.81 | 0.82 | 0.84 | 0.86 | 0.59 | 0.89 |
| Exp_3 | PSNR (dB) | 28.38 | 28.49 | 29.17 | 29.19 | 13.37 | 29.23 |
| | SSIM | 0.76 | 0.79 | 0.83 | 0.85 | 0.66 | 0.87 |
| Exp_4 | PSNR (dB) | 27.92 | 28.36 | 28.84 | 28.87 | 13.46 | 28.92 |
| | SSIM | 0.72 | 0.74 | 0.77 | 0.81 | 0.59 | 0.82 |
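The SSIM values in these tables follow the structural-similarity index of Wang et al. [29]. Below is a minimal NumPy sketch using the commonly cited defaults: an 11 × 11 Gaussian window with σ = 1.5, K1 = 0.01, K2 = 0.03, and a data range of 255. Local statistics are averaged over the "valid" region only.

The window, constants and border handling used for the tables above are not stated in this section, so treat them as assumptions. The function names are hypothetical.

```python
import numpy as np


def _filter_valid(img, k):
    """Separable 2-D filtering with a 1-D kernel, keeping only fully overlapped ('valid') pixels."""
    tmp = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, img)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, tmp)


def ssim(reference, test, data_range=255.0, size=11, sigma=1.5, k1=0.01, k2=0.03):
    """Mean SSIM with a Gaussian window, following the Wang et al. formulation."""
    x = np.asarray(reference, dtype=float)
    y = np.asarray(test, dtype=float)
    if x.shape != y.shape or min(x.shape) < size:
        raise ValueError("images must match and be at least one window in size")
    t = np.arange(size) - (size - 1) / 2.0
    k = np.exp(-t**2 / (2 * sigma**2))
    k /= k.sum()
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mu_x, mu_y = _filter_valid(x, k), _filter_valid(y, k)
    # Local (co)variances from E[xy] - E[x]E[y] under the Gaussian window.
    var_x = _filter_valid(x * x, k) - mu_x**2
    var_y = _filter_valid(y * y, k) - mu_y**2
    cov_xy = _filter_valid(x * y, k) - mu_x * mu_y
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x**2 + mu_y**2 + c1) * (var_x + var_y + c2)
    return float(np.mean(num / den))
```

Identical inputs give exactly 1, and the index is symmetric in its two arguments. Libraries such as scikit-image provide a tested implementation with configurable windows.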

| Image Data | Index | Method | ×2 | ×2.5 | ×3 | ×3.5 | ×4 |
|---|---|---|---|---|---|---|---|
| Exp_1 | PSNR (dB) | Bicubic | 25.53 | 25.27 | 22.97 | 21.19 | 21.04 |
| | | IBP | 26.17 | 25.28 | 23.93 | 23.37 | 23.08 |
| | | HE | 13.57 | 13.12 | 12.36 | 12.15 | 12.12 |
| | | MMSDB-SR | 26.59 | 25.35 | 24.36 | 24.05 | 23.93 |
| | SSIM | Bicubic | 0.82 | 0.82 | 0.74 | 0.61 | 0.57 |
| | | IBP | 0.83 | 0.73 | 0.71 | 0.64 | 0.54 |
| | | HE | 0.63 | 0.62 | 0.54 | 0.46 | 0.40 |
| | | MMSDB-SR | 0.88 | 0.88 | 0.84 | 0.83 | 0.81 |
| Exp_4 | PSNR (dB) | Bicubic | 29.94 | 24.60 | 23.84 | 22.42 | 22.22 |
| | | IBP | 30.01 | 25.23 | 24.31 | 23.54 | 23.48 |
| | | HE | 13.47 | 13.37 | 13.26 | 13.06 | 13.04 |
| | | MMSDB-SR | 30.17 | 26.39 | 25.05 | 24.98 | 24.90 |
| | SSIM | Bicubic | 0.85 | 0.71 | 0.61 | 0.47 | 0.45 |
| | | IBP | 0.89 | 0.76 | 0.61 | 0.54 | 0.48 |
| | | HE | 0.64 | 0.52 | 0.44 | 0.35 | 0.32 |
| | | MMSDB-SR | 0.92 | 0.85 | 0.78 | 0.76 | 0.75 |

| No. | Figure | Satellite | View/Spectral Mode | Image Size (pixels) | GSD (m) | Acquisition Date |
|---|---|---|---|---|---|---|
| 1 | 4a | ZY3 | panchromatic | 2000 × 2000 | 2.1 | 10 July 2013 |
| 2 | 4b | GF-2 | panchromatic | 1024 × 1024 | 1 | 1 September 2016 |
| 3 | 4c | WorldView-2 | panchromatic | 500 × 500 | 0.46 | 16 October 2017 |
| 4 | 4d | ZY3 | multi-spectral | 1024 × 1024 | 5 | 9 March 2013 |
| 5 | 4e | GF-2 | multi-spectral | 1024 × 1024 | 4 | 19 May 2016 |
| 6 | 4f | WorldView-2 | multi-spectral | 500 × 500 | 1.8 | 1 June 2016 |

| Image Data | Index | Bicubic | IBP [14] | SRCNN [20] | VDSR [21] | HE | MMSDB-SR |
|---|---|---|---|---|---|---|---|
| Exp_1 | Entropy | 6.18 | 6.26 | 6.28 | 6.29 | 6.11 | 6.66 |
| | EME | 5.93 | 6.05 | 6.17 | 6.54 | 6.80 | 9.69 |
| Exp_2 | Entropy | 7.55 | 7.60 | 7.67 | 7.68 | 7.26 | 7.94 |
| | EME | 10.23 | 10.51 | 11.70 | 11.71 | 9.18 | 12.45 |
| Exp_3 | Entropy | 6.98 | 7.01 | 7.03 | 7.02 | 6.74 | 7.21 |
| | EME | 5.90 | 11.75 | 11.85 | 12.26 | 9.03 | 13.02 |
| Exp_4 | Entropy | 7.52 | 7.53 | 7.54 | 7.54 | 6.99 | 7.60 |
| | EME | 14.08 | 15.32 | 15.70 | 15.81 | 14.06 | 15.99 |
| Exp_5 | Entropy | 7.64 | 7.65 | 7.66 | 7.67 | 6.98 | 7.76 |
| | EME | 11.39 | 12.18 | 13.73 | 14.73 | 9.68 | 15.07 |
| Exp_6 | Entropy | 7.39 | 7.42 | 7.47 | 7.52 | 5.99 | 7.99 |
| | EME | 20.02 | 20.10 | 21.65 | 21.85 | 20.75 | 23.62 |
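Entropy [30] and EME [31] are no-reference measures, so they can score real images that have no HR ground truth. Below is a minimal NumPy sketch of both for a single 8-bit band. Several details are not stated in this section and are assumptions here:

- how multi-spectral images were reduced to one value;
- the EME tile size;
- the offset that avoids division by zero in dark tiles;
- the logarithm base. Base 10 is one common reading of Agaian et al.'s "20 log" term, and implementations differ.

Function names are hypothetical.

```python
import numpy as np


def entropy(img):
    """Shannon entropy (bits) of the 8-bit grey-level histogram: H = -sum p_i log2 p_i."""
    levels = np.clip(np.rint(np.asarray(img, dtype=float)), 0, 255).astype(np.int64)
    hist = np.bincount(levels.ravel(), minlength=256)
    p = hist[hist > 0] / hist.sum()  # empty bins are dropped, since 0 * log 0 is taken as 0
    return float(-(p * np.log2(p)).sum())


def eme(img, block=8, offset=1.0):
    """Block-based measure of enhancement: mean over tiles of 20 * log10(Imax / Imin).

    `block` (tile size) and `offset` (added to Imax and Imin so dark tiles do not
    divide by zero) are assumed values; partial tiles at the right/bottom edges are dropped.
    """
    x = np.asarray(img, dtype=float)
    h, w = (x.shape[0] // block) * block, (x.shape[1] // block) * block
    if h == 0 or w == 0:
        raise ValueError("image is smaller than one block")
    tiles = x[:h, :w].reshape(h // block, block, w // block, block)
    i_max, i_min = tiles.max(axis=(1, 3)), tiles.min(axis=(1, 3))
    return float(np.mean(20.0 * np.log10((i_max + offset) / (i_min + offset))))
```

Higher values of both measures indicate more grey-level variety and stronger local contrast. Neither measure checks fidelity to a reference, which is why they complement PSNR and SSIM rather than replace them.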

| Image Data | Bicubic | IBP [14] | SRCNN [20] | VDSR [21] | HE | MMSDB-SR |
|---|---|---|---|---|---|---|
| Exp_1 | 0.08 | 2.90 | 306.92 | 97.75 | 2.02 | 101.40 |
| Exp_2 | 0.26 | 2.14 | 175.06 | 43.14 | 1.37 | 65.03 |
| Exp_3 | 0.23 | 1.41 | 41.12 | 22.22 | 1.17 | 25.87 |
| Exp_4 | 0.14 | 3.17 | 170.77 | 104.88 | 2.33 | 131.13 |
| Exp_5 | 0.14 | 3.17 | 171.75 | 103.06 | 2.36 | 130.16 |
| Exp_6 | 0.08 | 1.74 | 40.87 | 10.49 | 1.85 | 33.25 |

| Image Data | Index | Method | ×2 | ×2.5 | ×3 | ×3.5 | ×4 |
|---|---|---|---|---|---|---|---|
| Exp_1 | Entropy | Bicubic | 6.98 | 5.48 | 5.48 | 5.48 | 5.48 |
| | | IBP | 7.01 | 7.02 | 7.02 | 7.02 | 7.02 |
| | | HE | 6.74 | 5.42 | 5.42 | 5.42 | 5.42 |
| | | MMSDB-SR | 7.21 | 7.20 | 7.20 | 7.20 | 7.20 |
| | EME | Bicubic | 5.90 | 4.54 | 4.34 | 3.89 | 3.54 |
| | | IBP | 11.75 | 6.24 | 6.06 | 5.35 | 4.83 |
| | | HE | 9.03 | 4.49 | 4.42 | 3.36 | 3.31 |
| | | MMSDB-SR | 13.02 | 10.57 | 9.51 | 8.98 | 8.18 |
| Exp_4 | Entropy | Bicubic | 7.64 | 6.64 | 6.64 | 6.64 | 6.64 |
| | | IBP | 7.65 | 6.66 | 6.65 | 6.65 | 6.65 |
| | | HE | 6.98 | 5.99 | 5.99 | 5.99 | 5.99 |
| | | MMSDB-SR | 7.76 | 7.57 | 7.55 | 7.55 | 7.55 |
| | EME | Bicubic | 11.39 | 11.08 | 6.36 | 6.07 | 5.09 |
| | | IBP | 12.18 | 11.75 | 10.94 | 9.22 | 8.37 |
| | | HE | 9.68 | 8.71 | 7.97 | 7.81 | 7.77 |
| | | MMSDB-SR | 15.07 | 14.89 | 12.61 | 11.42 | 10.41 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, H.; Gao, X.; Tang, X.; Xie, J.; Song, W.; Mo, F.; Jia, D. Super-Resolution Reconstruction and Its Application Based on Multilevel Main Structure and Detail Boosting. Remote Sens. 2018, 10, 2065. https://doi.org/10.3390/rs10122065
