On the Use of Unmanned Aerial Systems for Environmental Monitoring

Abstract

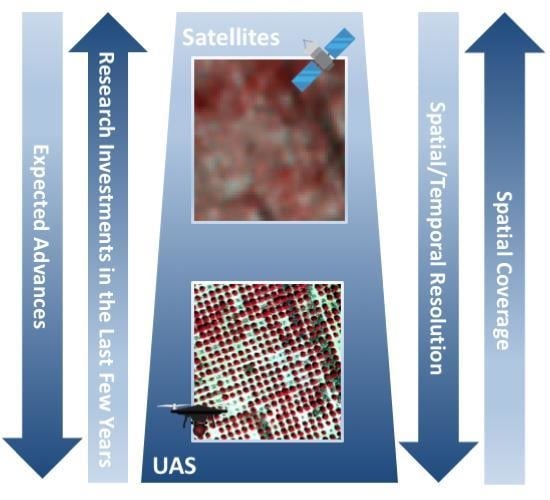

1. Introduction

2. Data Collection, Processing, and Limitations

2.1. Preflight Planning

2.2. Sensors

2.3. Software

3. Monitoring Agricultural and Natural Ecosystems

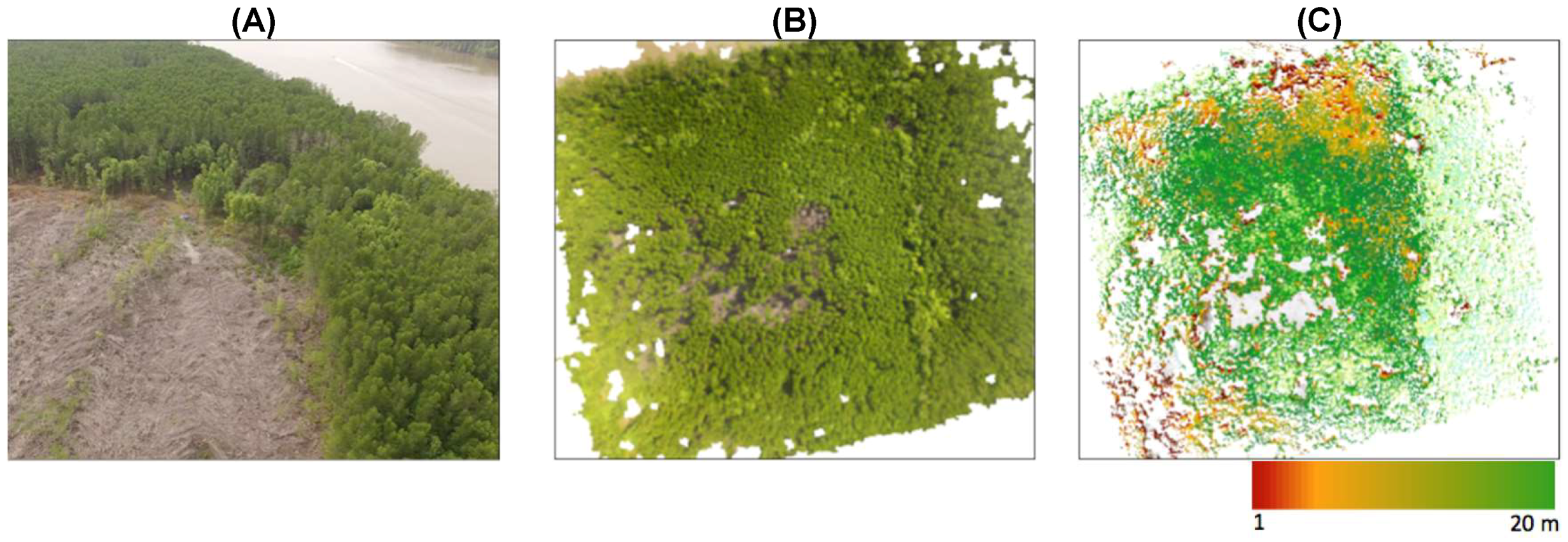

3.1. Vegetation Monitoring and Precision Agriculture

3.2. Monitoring of Natural Ecosystems

4. River Systems and Floods

Flow Monitoring

5. Final Remarks and Challenges

- (i) While a direct comparison between different methodologies (UAS, manned airborne, and satellite) is challenging, it was found that UAS represent a cost-effective monitoring technique over small regions (<20 ha). For larger extents, manned airborne or satellite platforms may become more effective options, but only when the temporal advantage of the UAS is not considered.

- (ii) The limited extent of the studied areas reduces the relative budget available, increasing the fragmentation of the adopted procedures and methodologies.

- (iii) Government regulations restricting the Ground Sample Distance (GSD) and the UAS flight mode limit the economic advantages of UAS and some potential applications, particularly in urban environments.

- (iv) The wide range of experiences described highlights the huge variability in the strategies, methodologies, and sensors adopted for each specific environmental variable monitored. This identifies the need to find unifying principles in UAS-based studies.

- (v) The vulnerability of UAS to weather conditions (e.g., wind, rain) can alter the quality of the surveys.

- (vi) There are also technical limits, such as weather constraints (strong wind and/or rain), high elevations, or high-temperature environments that can be challenging for most of the devices/sensors and the respective UAS operators (see, e.g., [155]).

- (vii) The geometric and radiometric limitations of current lightweight sensors make the use of this technology challenging.

- (viii) The high spatial resolution of UAS data places high demands on data storage and processing capacity.

- (ix) There is a clear need for procedures to characterize and correct the sensor errors that can propagate into the subsequent mosaicking and related data processing.

- (x) Finally, a disadvantage of UAS is the complexity of their use, which is comparable to that of satellites. Satellite applications, however, are generally supported by a processing chain that assures the final quality of the data. In the case of UAS, all of this is left to the final user or researcher, who must carry out additional steps before the retrieved data can be used.

- One aspect that directly limits the area that can be sensed is the short flight time of UAS. This problem is currently managed through mission planning that coordinates multiple flights. Technology is also offering new solutions that will extend flight endurance up to several hours, making the use of UAS more competitive. For instance, new developments in batteries suggest that the relatively short flying time imposed by current capacity will be significantly improved in the future [156]. In this context, another innovation introduced in the most recent vehicles is an integrated energy supply system connected with onboard solar panels, which allows flight endurance to be extended from 40–50 min up to 5 h, depending on the platform.

- The relative ground sampling distance affects the quality of the surveys, but is often not compensated for. This limitation can now be solved by implementing 3D flight paths that follow the surface in order to maintain a uniform GSD. Currently, only a few software suites (e.g., UgCS, eMotion 3) use digital terrain models to adjust the height path of the mission in order to maintain consistent GSD.

- The influence of GSD may be reduced by increasing flight height, making UAS even more cost-competitive (by increasing sensed areas), but current legislation in many jurisdictions limits flight height to between 120 and 150 m and requires operation within visual line of sight (VLOS). In this context, the development of microdrones will significantly reduce the risk associated with their use and relax some of the constraints due to safety requirements.

- Recent and rapid developments in sensor miniaturization, standardization, and cost reduction have opened new possibilities for UAS applications. However, limits remain, especially for the commercial ready-made platforms that are the most widely used in the scientific community.

- Sensor calibration remains an issue, especially for hyperspectral sensors. For example, vegetation can be measured in its state and distribution using RGB, multispectral, hyperspectral, and thermal cameras, as well as with LiDAR.

- Image registration, correction, and calibration remain major challenges. The vulnerability of UAS to weather conditions (wind, rain) and the geometric and radiometric limitations of current lightweight sensors have stimulated the development of new algorithms for image mosaicking and correction. In this context, the development of open source and commercial SfM software allows image mosaicking to be addressed, but radiometric correction and calibration remain an open question that may find a potential solution through experience with EO. Moreover, the development of new mapping-quality cameras has already significantly improved spatial registration and will likely also help to improve the overall quality of UAS imagery.
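
The link between flight height, GSD, and terrain-following flight paths described in the points above can be illustrated with a short sketch. It assumes a nadir-pointing frame camera and the usual pinhole approximation, GSD = pixel pitch × height above ground / focal length. The `Camera` class, the function names, the 16 mm focal length, and the terrain profile are hypothetical and chosen only for illustration; they are not taken from any of the mission-planning packages named above. The 3.9 μm pixel pitch is the value listed for several cameras in Appendix A.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class Camera:
    """Minimal camera description (hypothetical helper, not a vendor API)."""
    pixel_pitch_um: float   # pixel size on the sensor, micrometres
    focal_length_mm: float  # lens focal length, millimetres (assumed value)


def gsd_cm(camera: Camera, height_agl_m: float) -> float:
    """Ground sampling distance in cm/pixel for a nadir view over flat ground."""
    pixel_pitch_m = camera.pixel_pitch_um * 1e-6
    focal_length_m = camera.focal_length_mm * 1e-3
    return 100.0 * pixel_pitch_m * height_agl_m / focal_length_m


def height_for_gsd_m(camera: Camera, target_gsd_cm: float) -> float:
    """Height above ground (m) needed to obtain a target GSD (cm/pixel)."""
    pixel_pitch_m = camera.pixel_pitch_um * 1e-6
    focal_length_m = camera.focal_length_mm * 1e-3
    return (target_gsd_cm / 100.0) * focal_length_m / pixel_pitch_m


def terrain_following_altitudes(terrain_elev_m: Sequence[float],
                                height_agl_m: float) -> List[float]:
    """Waypoint altitudes (same datum as the terrain model) that keep a
    constant height above ground, and therefore a uniform GSD."""
    return [z + height_agl_m for z in terrain_elev_m]


def gsd_at_constant_altitude(camera: Camera,
                             terrain_elev_m: Sequence[float],
                             takeoff_elev_m: float,
                             height_agl_m: float) -> List[float]:
    """GSD along the track when the aircraft holds a fixed altitude that is
    defined relative to the take-off point instead of following the terrain."""
    flight_altitude_m = takeoff_elev_m + height_agl_m
    return [gsd_cm(camera, flight_altitude_m - z) for z in terrain_elev_m]


if __name__ == "__main__":
    cam = Camera(pixel_pitch_um=3.9, focal_length_mm=16.0)
    terrain = [100.0, 130.0, 160.0]  # illustrative terrain elevations, metres

    print("GSD at 120 m AGL (cm):", gsd_cm(cam, 120.0))
    print("Terrain-following altitudes (m):",
          terrain_following_altitudes(terrain, 120.0))
    print("GSD at constant altitude (cm):",
          gsd_at_constant_altitude(cam, terrain, 100.0, 120.0))
```

With a fixed altitude, the GSD becomes finer as the ground rises towards the aircraft, whereas the terrain-following altitudes keep the height above ground, and hence the GSD, constant. The same formula shows why a legal ceiling on flight height also caps the area imaged per frame for a given camera.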

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Available Sensors and Cameras

| Manufacturer and Model | Sensor Type and Resolution (MPx) | Format Type * | Sensor Size (mm²) | Pixel Pitch (μm) | Weight (kg) | Frame Rate (fps) | Max Shutter Speed (s⁻¹) | Approx. Price ($) |

|---|---|---|---|---|---|---|---|---|

| Canon EOS 5DS | CMOS 51 | FF | 36.0 × 24.0 | 4.1 | 0.930 | 5.0 | 8000 | 3400 |

| Sony Alpha 7R II | CMOS 42 | FF MILC | 35.9 × 24.0 | 4.5 | 0.625 | 5.0 | 8000 | 3200 |

| Pentax 645D | CCD 40 | FF | 44.0 × 33.0 | 6.1 | 1.480 | 1.1 | 4000 | 3400 |

| Nikon D750 | CMOS 24 | FF | 35.9 × 24.0 | 6.0 | 0.750 | 6.5 | 4000 | 2000 |

| Nikon D7200 | CMOS 24 | SF | 23.5 × 15.6 | 3.9 | 0.675 | 6.0 | 8000 | 1100 |

| Sony Alpha a6300 | CMOS 24 | SF MILC | 23.5 × 15.6 | 3.9 | 0.404 | 11.0 | 4000 | 1000 |

| Pentax K-3 II | CMOS 24 | SF | 23.5 × 15.6 | 3.9 | 0.800 | 8.3 | 8000 | 800 |

| Foxtech Map-01 | CMOS 24 | APS-C | 23.5 × 15.6 | 3.9 | 0.155 | 6 | 4000 | 880 |

| Canon EOS 7D Mark II | CMOS 20 | SF | 22.3 × 14.9 | 4.1 | 0.910 | 10.0 | 8000 | 1500 |

| Panasonic Lumix DMC GX8 | CMOS 20 | SF MILC | 17.3 × 13.0 | 3.3 | 0.487 | 10.0 | 8000 | 1000 |

| Sony QX1 | CMOS 20 | APS-C | 23.2 × 15.4 | 4.3 | 0.216 | 3.5 | 4000 | 500 |

| Ricoh GXR A16 | CMOS 16 | SF | 23.6 × 15.7 | 4.8 | 0.550 | 2.5 | 3200 | 650 |
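
The pixel pitch column above can be cross-checked against the sensor size and resolution columns. The sketch below is a rough consistency check only: it assumes square pixels that tile the whole sensor area, and the function name is hypothetical.

```python
import math


def approx_pixel_pitch_um(width_mm: float, height_mm: float,
                          megapixels: float) -> float:
    """Approximate pixel pitch (micrometres) from sensor size and resolution,
    assuming square pixels that cover the full sensor area."""
    area_um2 = (width_mm * 1e3) * (height_mm * 1e3)
    return math.sqrt(area_um2 / (megapixels * 1e6))


if __name__ == "__main__":
    # Sensor size (mm) and resolution (MPx) as listed in the table above.
    cameras = {
        "Canon EOS 5DS": (36.0, 24.0, 51.0),
        "Nikon D750": (35.9, 24.0, 24.0),
        "Sony Alpha a6300": (23.5, 15.6, 24.0),
    }
    for name, (w, h, mpx) in cameras.items():
        print(f"{name}: {approx_pixel_pitch_um(w, h, mpx):.2f} um")
```

Small differences from the tabulated values are expected, because the nominal megapixel count is rounded and not every pixel on a sensor is necessarily active.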

| Manufacturer and Model | Resolution (Mpx) | Size (mm) | Pixel Size (μm) | Weight (kg) | Number of Spectral Bands | Spectral Range (nm) | Approx. Price ($) |

|---|---|---|---|---|---|---|---|

| Tetracam MCAW6 (Global shutter) | 1.3 | - | 4.8 × 4.8 | 0.55 | 6 | 450–1000 (*) | 16,995 |

| Tetracam MCAW12 (Global shutter) | 1.3 | - | 4.8 × 4.8 | 0.6 | 12 | 450–1000 (*) | 34,000 |

| Tetracam MicroMCA4 Snap (Global shutter) | 1.3 | 115.6 × 80.3 × 68.1 | 4.8 × 4.8 | 0.497 | 4 | 450–1000 (*) | 9995 |

| Tetracam MicroMCA6 Snap (Global shutter) | 1.3 | 115.6 × 80.3 × 68.1 | 4.8 × 4.8 | 0.53 | 6 | 450–1000 (*) | 14,995 |

| Tetracam MicroMCA12 Snap (Global shutter) | 1.3 | 115.6 × 155 × 68.1 | 4.8 × 4.8 | 1 | 12 | 450–1000 (*) | 29,995 |

| Tetracam MicroMCA6 RS (Rolling shutter) | 1.3 | 115.6 × 80.3 × 68.1 | 4.8 × 4.8 | 0.53 | 6 | 450–1000 (*) | 12,995 |

| Tetracam MicroMCA12 RS (Rolling shutter) | 1.3 | 115.6 × 155 × 68.1 | 4.8 × 4.8 | 1 | 12 | 450–1000 (*) | 25,995 |

| Tetracam ADC micro | 3.2 | 75 × 59 × 33 | 3.2 × 3.2 | 0.9 | 6 | 520–920 (Equiv. to Landsat TM2, 3, 4) | 2995 |

| Quest Innovations Condor-5 ICX 285 | 7 | 150 × 130 × 177 | 6.45 × 6.45 | 1.4 | 5 | 400–1000 | - |

| Parrot Sequoia | 1.2 | 59 × 41 × 28 | 3.75 × 3.75 | 0.72 | 4 | 550–810 | 5300 |

| MicaSense RedEdge | - | 120 × 66 × 46 | - | 0.18 | 5 | 475–840 | 4900 |

| Sentera Quad | 1.2 | 76 × 62 × 48 | 3.75 × 3.75 | 0.170 | 4 | 400–825 | 8500 |

| Sentera High Precision NDVI and NDRE | 1.2 | 25.4 × 33.8 × 37.3 | 3.75 × 3.75 | 0.030 | 2 | 525–890 | - |

| Sentera Multispectral Double 4K | 12.3 | 59 × 41 × 44.5 | - | 0.080 | 5 | 386–860 | 5000 |

| SLANTRANGE 3P NDVI | 3 | 146 × 69 × 57 | - | 0.350 | 4 | 410–950 | 4500 |

| Mappir Survey2 | 16 | 59 × 41 × 30 | 1.34 × 1.34 | 0.047 | 1–6 (filters)—one lens | 395–945 | 280 |

| Mappir Survey3 | 12 | 59 × 41.5 × 36 | 1.55 × 1.55 | 0.050 | 1–4 (filters)—one lens | 395–945 | 400 |

| Mappir Kernel | 14.4 | 34 × 34 × 40 | 1.4 × 1.4 | 0.045 | 19+ (filters)—six array lens | 395–945 | 1299 |

| Manufacturer and Model | Lens | Size (mm2) | Pixel Size (μm) | Weight (kg) | Spectral Range (nm) | Spectral Bands (N) (Resolution, nm) | Peak SNR | Approx. Price ($) |

|---|---|---|---|---|---|---|---|---|

| Rikola Ltd. hyperspectral camera | CMOS | 5.6 × 5.6 | 5.5 | 0.6 | 500–900 | 40 (10 nm) | - | 40,000 |

| Headwall Photonics Micro-hyperspec X-series NIR | InGaAs | 9.6 × 9.6 | 30 | 1.025 | 900–1700 | 62 (12.9 nm) | - | - |

| BaySpec’s OCI-UAV-1000 | C-mount | 10 × 10 × 10 | N/A | 0.272 | 600–1000 | 100 (5 nm)/20–12 (15 nm) | - | - |

| HySpex Mjolnir V-1240 | - | 25 × 17.5 × 17 | 0.27 mrad | 4.0 | 400–1000 | 200 (3 nm) | >180 | - |

| HySpex Mjolnir S-620 | - | 25.4 × 17.5 × 17 | 0.54 mrad | 4.5 | 970–2500 | 300 (5.1 nm) | >900 | - |

| Specim-AISA KESTREL16 | push-broom | 99 × 215 × 240 | - | 2.3 | 600–1640 | Up to 350 (3–8 nm) | 400–600 | - |

| Corning microHSI 410 SHARK | CCD/CMOS | 136 × 87 × 70.35 | 11.7 | 0.68 | 400–1000 | 300 (2 nm) | - | - |

| Resonon Pika L | - | 10.0 × 12.5 × 5.3 | 5.86 | 0.6 | 400–1000 | 281 (2.1 nm) | 368–520 | - |

| CUBERT (S185) | Snapshot + PAN | 19 × 42 × 65 | - | 0.49 | 450–995 | 125 (8 nm) | - | 50,000 |

| Manufacturer and Model | Resolution (Px) | Sensor Size (mm2) | Pixel Pitch (μm) | Weight (kg) | Spectral Range (μm) | Thermal Sensitivity (mK) | Approx. Price ($) |

|---|---|---|---|---|---|---|---|

| FLIR Duo Pro 640 | 640 × 512 | 10.8 × 8.7 | 17 | <0.115 | 7.5–13.5 | 50 | 10,500 |

| FLIR Duo Pro 336 | 336 × 256 | 5.7 × 4.4 | 17 | <0.115 | 7.5–13.5 | 50 | 7500 |

| FLIR Duo R | 160 × 120 | - | - | 0.084 | 7.5–13.5 | 50 | 2200 |

| FLIR Tau2 640 | 640 × 512 | N/A | 17 | <0.112 | 7.5–13.5 | 50 | 9000 |

| FLIR Tau2 336 | 336 × 256 | N/A | 17 | <0.112 | 7.5–13.5 | 50 | 4000 |

| Optris PI 450 | 382 × 288 | - | - | 0.320 | 7.5–13 | 130 | 7000 |

| Optris PI 640 | 640 × 480 | - | - | 0.320 | 7.5–13 | 130 | 9700 |

| Thermoteknix Miricle 307 K | 640 × 480 | 16.0 × 12.0 | 25 | <0.170 | 8.0–12.0 | 50 | - |

| Thermoteknix Miricle 110 K | 384 × 288 | 9.6 × 7.2 | 25 | <0.170 | 8.0–12.0 | 50/70 | - |

| Workswell WIRIS 640 | 640 × 512 | 16.0 × 12.8 | 25 | <0.400 | 7.5–13.5 | 30/50 | - |

| Workswell WIRIS 336 | 336 × 256 | 8.4 × 6.4 | 25 | <0.400 | 7.5–13.5 | 30/50 | - |

| YUNCGOETEU | 160 × 120 | 81 × 108 × 138 | 12 | 0.278 | 8.0–14.0 | <50 | - |

| Manufacturer and Model | Scanning Pattern | Range (m) | Weight (kg) | Angular Res. (deg) | FOV (deg) | Laser Class and λ (nm) | Frequency (kp/s) | Approx. Price ($) |

|---|---|---|---|---|---|---|---|---|

| ibeo Automotive Systems IBEO LUX | 4 Scanning parallel lines | 200 | 1 | (H) 0.125 (V) 0.8 | (H) 110 (V) 3.2 | Class A 905 | 22 | - |

| Velodyne HDL-32E | 32 Laser/detector pairs | 100 | 2 | (H)–(V) 1.33 | (H) 360 (V) 41 | Class A 905 | 700 | - |

| RIEGL VQ-820-GU | 1 Scanning line | >1000 | 25.5 | (H) 0.01 (V) N/A | (H) 60 (V) N/A | Class 3B 532 | 200 | - |

| Hokuyo UTM-30LX-EW | 1080 distances in a plane | 30 | 0.37 | (H) 0.25 (V) N/A | (H) 270 (V) N/A | Class 1-905 | 200 | - |

| Velodyne Puck Hi-Res | Dual Returns | 100 | 0.590 | (H)–(V) 0.1–0.4 | (H) 360 (V) 20 | Class A-903 | - | - |

| RIEGL VUX-1UAV | Parallel scan lines | 150 | 3.5 | 0.001° | 330 | Class A-NIR | 200 | >120,000 |

| Routescene—UAV LidarPod | 32 Laser/detector pairs | 100 | 1.3 | (H)–(V) 1.33 | (H) 360 (V) 41 | Class A-905 | - | - |

| Quanergy M8-1 | 8 laser/detector pairs | 150 | 0.9 | 0.03–0.2° | (H) 360 (V) 20 | Class A-905 | - | - |

| Phoenix Scout | Dual Returns | 120 | 1.65 | - | (H) 360 (V) 15 | Class 1-905 | 300 | >66,000 |

| Phoenix ALS-32 | 32 Laser/detector pairs | 120 | 2.4 | - | (H) 360 (V) 10–30 | Class 1-905 | 700 | >120,500 |

| YellowScan Surveyor | Dual returns | 100 | 1.6 | 0.125 | 360 | Class 1-905 | 300 | >93,000 |

| YellowScan Vx | Parallel scan lines | 100 | 2.5–3 | - | 360 | Class 1-905 | 100 | >93,000 |

References

- Belward, A.S.; Skøien, J.O. Who Launched What, When and Why; Trends in Global Land-Cover Observation Capacity from Civilian Earth Observation Satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128.

- Hand, E. Startup Liftoff. Science 2015, 348, 172–177.

- Wekerle, T.; Bezerra Pessoa Filho, J.; Eduardo Vergueiro Loures da Costa, L.; Gonzaga Trabasso, L. Status and Trends of Smallsats and Their Launch Vehicles—An Up-to-Date Review. J. Aerosp. Technol. Manag. 2017, 9, 269–286.

- McCabe, M.F.; Rodell, M.; Alsdorf, D.E.; Miralles, D.G.; Uijlenhoet, R.; Wagner, W.; Lucieer, A.; Houborg, R.; Verhoest, N.E.C.; Franz, T.E.; et al. The future of Earth observation in hydrology. Hydrol. Earth Syst. Sci. 2017, 21, 3879–3914.

- McCabe, M.F.; Aragon, B.; Houborg, R.; Mascaro, J. CubeSats in hydrology: Ultrahigh-resolution insights into vegetation dynamics and terrestrial evaporation. Water Resour. Res. 2017, 53, 10017–10024.

- Pajares, G. Overview and Current Status of Remote Sensing Applications Based on Unmanned Aerial Vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–329.

- Matese, A.; Toscano, P.; Di Gennaro, S.F.; Genesio, L.; Vaccari, F.P.; Primicerio, J.; Belli, C.; Zaldei, A.; Bianconi, R.; Gioli, B. Intercomparison of UAV, Aircraft and Satellite Remote Sensing Platforms for Precision Viticulture. Remote Sens. 2015, 7, 2971–2990.

- Dustin, M.C. Monitoring Parks with Inexpensive UAVs: Cost Benefits Analysis for Monitoring and Maintaining Parks Facilities. Ph.D. Thesis, University of Southern California, Los Angeles, CA, USA, 2015.

- Lucieer, A.; Jong, S.M.D.; Turner, D. Mapping landslide displacements using Structure from Motion (SfM) and image correlation of multi-temporal UAV photography. Progr. Phys. Geog. 2014, 38, 97–116.

- Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned aircraft systems in remote sensing and scientific research: Classification and Considerations of use. Remote Sens. 2012, 4, 1671–1692.

- Van der Wal, T.; Abma, B.; Viguria, A.; Previnaire, E.; Zarco-Tejada, P.J.; Serruys, P.; van Valkengoed, E.; van der Voet, P. Fieldcopter: Unmanned aerial systems for crop monitoring services. Precis. Agric. 2013, 13, 169–175.

- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.

- Whitehead, K.; Hugenholtz, C.H. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 1: A review of progress and challenges. J. Unmanned Veh. Syst. 2014, 2, 69–85.

- Whitehead, K.; Hugenholtz, C.H.; Myshak, S.; Brown, O.; LeClair, A.; Tamminga, A.; Barchyn, T.E.; Moorman, B.; Eaton, B. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 2: Scientific and commercial applications. J. Unmanned Veh. Syst. 2014, 2, 86–102.

- Adão, T.; Hruška, J.; Pádua, L.; Bessa, J.; Peres, E.; Morais, R.; Sousa, J.J. Hyperspectral imaging: A review on UAV-based sensors, data processing and applications for agriculture and forestry. Remote Sens. 2017, 9, 1110.

- Tauro, F.; Selker, J.; van de Giesen, N.; Abrate, T.; Uijlenhoet, R.; Porfiri, M.; Manfreda, S.; Caylor, K.; Moramarco, T.; Benveniste, J.; et al. Measurements and Observations in the XXI century (MOXXI): Innovation and multidisciplinarity to sense the hydrological cycle. Hydrolog. Sci. J. 2018, 63, 169–196.

- Singh, K.K.; Frazier, A.E. A meta-analysis and review of unmanned aircraft system (UAS) imagery for terrestrial applications. Int. J. Remote Sens. 2018, 1–21.

- Bryson, M.; Reid, A.; Ramos, F.; Sukkarieh, S. Airborne Vision-Based Mapping and Classification of Large Farmland Environments. J. Field Robot. 2010, 27, 632–655.

- Akar, O. Mapping land use with using Rotation Forest algorithm from UAV images. Eur. J. Remote Sens. 2017, 50, 269–279.

- Bueren, S.K.; Burkart, A.; Hueni, A.; Rascher, U.; Tuohy, M.P.; Yule, I.J. Deploying four optical UAV-based sensors over grassland: Challenges and limitations. Biogeosciences 2015, 12, 163–175.

- Ludovisi, R.; Tauro, F.; Salvati, R.; Khoury, S.; Mugnozza Scarascia, G.; Harfouche, A. UAV-based thermal imaging for high-throughput field phenotyping of black poplar response to drought. Front. Plant Sci. 2017, 8, 1681.

- Zhu, J.; Wang, K.; Deng, J.; Harmon, T. Quantifying Nitrogen Status of Rice Using Low Altitude UAV-Mounted System and Object-Oriented Segmentation Methodology. In Proceedings of the ASME International Design Engineering Technical Conferences and Computers and Information in Engineering Conference, San Diego, CA, USA, 30 August–2 September 2009; pp. 1–7.

- Urbahs, A.; Jonaite, I. Features of the use of unmanned aerial vehicles for agriculture applications. Aviation 2013, 17, 170–175.

- Jeunnette, M.N.; Hart, D.P. Remote sensing for developing world agriculture: Opportunities and areas for technical development. In Proceedings of the Remote Sensing for Agriculture, Ecosystems, and Hydrology XVIII, Edinburgh, UK, 26–29 September 2016.

- Samseemoung, G.; Soni, P.; Jayasuriya, H.P.W.; Salokhe, V.M. An Application of low altitude remote sensing (LARS) platform for monitoring crop growth and weed infestation in a soybean plantation. Precis. Agric. 2012, 13, 611–627.

- Alvarez-Taboada, F.; Paredes, C.; Julián-Pelaz, J. Mapping of the Invasive Species Hakea sericea Using Unmanned Aerial Vehicle (UAV) and WorldView-2 Imagery and an Object-Oriented Approach. Remote Sens. 2017, 9, 913.

- Witte, B.M.; Singler, R.F.; Bailey, S.C.C. Development of an Unmanned Aerial Vehicle for the Measurement of Turbulence in the Atmospheric Boundary Layer. Atmosphere 2017, 8, 195.

- Stone, H.; D’Ayala, D.; Wilkinson, S. The Use of Emerging Technology in Post-Disaster Reconnaissance Missions; EEFIT Report; Institution of Structural Engineers: London, UK, 2017; 25p.

- Frankenberger, J.R.; Huang, C.; Nouwakpo, K. Low-altitude digital photogrammetry technique to assess ephemeral gully erosion. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2008), Boston, MA, USA, 7–11 July 2008; pp. 117–120.

- d’Oleire-Oltmanns, S.; Marzolff, I.; Peter, K.D.; Ries, J.B. Unmanned aerial vehicle (UAV) for monitoring soil erosion in Morocco. Remote Sens. 2012, 4, 3390–3416.

- Quiquerez, A.; Chevigny, E.; Allemand, P.; Curmi, P.; Petit, C.; Grandjean, P. Assessing the impact of soil surface characteristics on vineyard erosion from very high spatial resolution aerial images (Côte de Beaune, Burgundy, France). Catena 2014, 116, 163–172.

- Aldana-Jague, E.; Heckrath, G.; Macdonald, A.; van Wesemael, B.; Van Oost, K. UAS-based soil carbon mapping using VIS-NIR (480-1000 nm) multi-spectral imaging: Potential and limitations. Geoderma 2016, 275, 55–66.

- Niethammer, U.; James, M.R.; Rothmund, S.; Travelletti, J.; Joswig, M. UAV-based remote sensing of the Super Sauze landslide: Evaluation and results. Eng. Geol. 2012, 128, 2–11.

- Sieberth, T.; Wackrow, R.; Chandler, J.H. Automatic detection of blurred images in UAV image sets. ISPRS J. Photogramm. Remote Sens. 2016, 122, 1–16.

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97.

- Mesas-Carrascosa, F.J.; Rumbao, I.C.; Berrocal, J.A.B.; Porras, A.G.F. Positional quality assessment of orthophotos obtained from sensors on board multi-rotor UAV platforms. Sensors 2014, 14, 22394–22407.

- Ai, M.; Hu, Q.; Li, J.; Wang, M.; Yuan, H.; Wang, S. A robust photogrammetric processing method of low-altitude UAV images. Remote Sens. 2015, 7, 2302–2333.

- James, M.R.; Robson, S. Mitigating systematic error in topographic models derived from UAV and ground-based image networks. Earth Surf. Process. Landf. 2014, 39, 1413–1420.

- Peppa, M.; Mills, J.P.; Moore, P.; Miller, P.E.; Chambers, J.C. Accuracy assessment of a UAV-based landslide monitoring system. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 895–902.

- Eltner, A.; Schneider, D. Analysis of Different Methods for 3D Reconstruction of Natural Surfaces from Parallel-Axes UAV Images. Photogramm. Record 2015, 30, 279–299.

- James, M.R.; Robson, S.; d’Oleire-Oltmanns, S.; Niethammer, U. Optimising UAV topographic surveys processed with structure-from-motion: Ground control quality, quantity and bundle adjustment. Geomorphology 2017, 280, 51–66.

- Toth, C.; Jóźków, G. Remote sensing platforms and sensors: A survey. ISPRS J. Photogramm. Remote Sens. 2016, 115, 22–36.

- Geipel, J.; Link, J.; Claupein, W. Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 2014, 6, 10335–10355.

- Torres-Sanchez, J.; Pena, J.M.; de Castro, A.I.; Lopez-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

- Saberioon, M.M.; Amin, M.S.M.; Anuar, A.R.; Gholizadeh, A.; Wayayok, A.; Khairunniza-Bejo, S. Assessment of rice leaf chlorophyll content using visible bands at different growth stages at both the leaf and canopy scale. Int. J. Appl. Earth Obs. Geoinform. 2014, 32, 35–45.

- Jannoura, R.; Brinkmann, K.; Uteau, D.; Bruns, C.; Joergensen, R.G. Monitoring of crop biomass using true colour aerial photographs taken from a remote controlled hexacopter. Biosyst. Eng. 2015, 129, 341–351.

- Hunt, E.R.; Hively, W.D.; Fujikawa, S.J.; Linden, D.S.; Daughtry, C.S.T.; McCarty, G.W. Acquisition of NIR-green-blue digital photographs from unmanned aircraft for crop monitoring. Remote Sens. 2010, 2, 290–305.

- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551.

- Jhan, J.-P.; Rau, J.-Y.; Haala, N.; Cramer, M. Investigation of parallax issues for multi-lens multispectral camera band co-registration. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W6, 157–163.

- Lu, B.; He, Y. Species classification using Unmanned Aerial Vehicle (UAV)-acquired high spatial resolution imagery in a heterogeneous grassland. ISPRS J. Photogramm. 2017, 128, 73–85.

- Brook, A.; Ben-Dor, E. Supervised vicarious calibration (SVC) of hyperspectral remote-sensing data. Remote Sens. Environ. 2011, 115, 1543–1555.

- Zarco-Tejada, P.J.; Gonzalez-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a microhyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337.

- Honkavaara, E.; Rosnell, T.; Oliveira, R.; Tommaselli, A. Band registration of tuneable frame format hyperspectral UAV imagers in complex scenes. ISPRS J. Photogramm. 2017, 134, 96–109.

- Burkart, A.; Aasen, H.; Alonso, L.; Menz, G.; Bareth, G.; Rascher, U. Angular dependency of hyperspectral measurements over wheat characterized by a novel UAV based goniometer. Remote Sens. 2015, 7, 725–746.

- Ben-Dor, E.; Chabrillat, S.; Demattê, J.A.M.; Taylor, G.R.; Hill, J.; Whiting, M.L.; Sommer, S. Using imaging spectroscopy to study soil properties. Remote Sens. Environ. 2009, 113, S38–S55.

- Brook, A.; Ben-Dor, E. Supervised vicarious calibration (SVC) of multi-source hyperspectral remote-sensing data. Remote Sens. 2015, 7, 6196–6223.

- Smigaj, M.; Gaulton, R.; Suarez, J.C.; Barr, S.L. Use of miniature thermal cameras for detection of physiological stress in conifers. Remote Sens. 2017, 9, 20.

- Sankey, T.; Donager, J.; McVay, J.; Sankey, J.B. UAV LiDAR and hyperspectral fusion for forest monitoring in the southwestern USA. Remote Sens. Environ. 2017, 195, 30–43.

- James, M.R.; Robson, S.; Smith, M.W. 3-D uncertainty-based topographic change detection with structure-from-motion photogrammetry: Precision maps for ground control and directly georeferenced surveys. Earth Surf. Process. Landf. 2017, 42, 1769–1788.

- Manfreda, S.; Caylor, K.K. On the Vulnerability of Water Limited Ecosystems to Climate Change. Water 2013, 5, 819–833.

- Manfreda, S.; Caylor, K.K.; Good, S. An Ecohydrological framework to explain shifts in vegetation organization across climatological gradients. Ecohydrology 2017, 10, 1–14.

- Atzberger, C. Advances in remote sensing of agriculture: Context description, existing operational monitoring systems and major information needs. Remote Sens. 2013, 5, 949–981.

- Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693.

- Huang, Y.; Thomson, S.J.; Hoffmann, W.C.; Lan, Y.; Fritz, B.K. Development and prospect of unmanned aerial vehicle technologies for agricultural production management. Int. J. Agric. Biol. Eng. 2013, 6, 1–10.

- Link, J.; Senner, D.; Claupein, W. Developing and evaluating an aerial sensor platform (ASP) to collect multispectral data for deriving management decisions in precision farming. Comput. Electron. Agric. 2013, 94, 20–28.

- Zhang, C.; Walters, D.; Kovacs, J.M. Applications of low altitude remote sensing in agriculture upon farmer requests—A case study in northeastern Ontario, Canada. PLoS ONE 2014, 9, e112894.

- Helman, D.; Givati, A.; Lensky, I.M. Annual evapotranspiration retrieved from satellite vegetation indices for the Eastern Mediterranean at 250 m spatial resolution. Atmos. Chem. Phys. 2015, 15, 12567–12579.

- Gago, J.; Douthe, C.; Coopman, R.; Gallego, P.; Ribas-Carbo, M.; Flexas, J.; Escalona, J.; Medrano, H. UAVs challenge to assess water stress for sustainable agriculture. Agric. Water Manag. 2015, 153, 9–19.

- Helman, D.; Lensky, I.M.; Osem, Y.; Rohatyn, S.; Rotenberg, E.; Yakir, D. A biophysical approach using water deficit factor for daily estimations of evapotranspiration and CO2 uptake in Mediterranean environments. Biogeosciences 2017, 14, 3909–3926.

- Lacaze, B.; Caselles, V.; Coll, C.; Hill, H.; Hoff, C.; de Jong, S.; Mehl, W.; Negendank, J.F.; Riesebos, H.; Rubio, E.; Sommer, S.; et al. DeMon, Integrated approaches to desertification mapping and monitoring in the Mediterranean basin. Final report of De-Mon I Project, Joint Research Centre of European Commission: Ispra (VA), Italy, 1996.

- Gigante, V.; Milella, P.; Iacobellis, V.; Manfreda, S.; Portoghese, I. Influences of Leaf Area Index estimations on the soil water balance predictions in Mediterranean regions. Nat. Hazard Earth Syst. Sci. 2009, 9, 979–991.

- Helman, D. Land surface phenology: What do we really ‘see’ from space? Sci. Total Environ. 2018, 618, 665–673.

- Primicerio, J.; Di Gennaro, S.F.; Fiorillo, E.; Genesio, L.; Lugato, E.; Matese, A.; Vaccari, F.P. A Flexible Unmanned Aerial Vehicle for Precision Agriculture. Precis. Agric. 2012, 13, 517–523.

- McGwire, K.C.; Weltz, M.A.; Finzel, J.A.; Morris, C.E.; Fenstermaker, L.F.; McGraw, D.S. Multiscale Assessment of Green Leaf Cover in a Semi-Arid Rangeland with a Small Unmanned Aerial Vehicle. Int. J. Remote Sens. 2013, 34, 1615–1632.

- Hmimina, G.; Dufrene, E.; Pontailler, J.Y.; Delpierre, N.; Aubinet, M.; Caquet, B.; de Grandcourt, A.S.; Burban, B.T.; Flechard, C.; Granier, A. Evaluation of the potential of MODIS satellite data to predict vegetation phenology in different biomes: An investigation using ground-based NDVI measurements. Remote Sens. Environ. 2013, 132, 145–158.

- Johnson, L.F.; Herwitz, S.; Dunagan, S.; Lobitz, B.; Sullivan, D.; Slye, R. Collection of Ultra High Spatial and Spectral Resolution Image Data over California Vineyards with a Small UAV. In Proceedings of the 30th International Symposium on Remote Sensing of Environment, Honolulu, Hawaii, 10–14 November 2003; pp. 845–849.

- Zarco-Tejada, P.J.; Catalina, A.; Gonzalez, M.R.; Martin, P. Relationships between net photosynthesis and steady-state chlorophyll fluorescence retrieved from airborne hyperspectral imagery. Remote Sens. Environ. 2013, 136, 247–258.

- Zarco-Tejada, P.J.; Gonzalez-Dugo, V.; Williams, L.E.; Suarez, L.; Berni, J.A.J.; Goldhamer, D.; Fereres, E. A PRI-based water stress index combining structural and chlorophyll effects: Assessment using diurnal narrow-band airborne imagery and the CWSI thermal index. Remote Sens. Environ. 2013, 138, 38–50.

- Zarco-Tejada, P.J.; Guillen-Climent, M.L.; Hernandez-Clement, R.; Catalina, A.; Gonzalez, M.R.; Martin, P. Estimating leaf carotenoid content in vineyards using high resolution hyperspectral imagery acquired from an unmanned aerial vehicle (UAV). Agric. For. Meteorol. 2013, 171–172, 281–294.

- Zarco-Tejada, P.J.; Suarez, L.; Gonzalez-Dugo, V. Spatial resolution effects on chlorophyll fluorescence retrieval in a heterogeneous canopy using hyperspectral imagery and radiative transfer simulation. IEEE Geosci. Remote Sens. Lett. 2013, 10, 937–941.

- Hassan-Esfahani, L.; Torres-Rua, A.; Jensen, A.; McKee, M. Assessment of Surface Soil Moisture Using High Resolution Multi-Spectral Imagery and Artificial Neural Networks. Remote Sens. 2015, 7, 2627–2646. [Google Scholar] [CrossRef]

- Manfreda, S.; Brocca, L.; Moramarco, T.; Melone, F.; Sheffield, J. A physically based approach for the estimation of root-zone soil moisture from surface measurements. Hydrol. Earth Syst. Sci. 2014, 18, 1199–1212. [Google Scholar] [CrossRef]

- Baldwin, D.; Manfreda, S.; Keller, K.; Smithwick, E.A.H. Predicting root zone soil moisture with soil properties and satellite near-surface moisture data at locations across the United States. J. Hydrol. 2017, 546, 393–404. [Google Scholar] [CrossRef]

- Sullivan, D.G.; Fulton, J.P.; Shaw, J.N.; Bland, G. Evaluating the sensitivity of an unmanned thermal infrared aerial system to detect water stress in a cotton canopy. Trans. Am. Soc. Agric. Eng. 2007, 50, 1955–1962. [Google Scholar] [CrossRef]

- de Lima, J.L.M.P.; Abrantes, J.R.C.B. Can infrared thermography be used to estimate soil surface microrelief and rill morphology? Catena 2014, 113, 314–322. [Google Scholar] [CrossRef]

- Abrantes, J.R.C.B.; de Lima, J.L.M.P.; Prats, S.A.; Keizer, J.J. Assessing soil water repellency spatial variability using a thermographic technique: An exploratory study using a small-scale laboratory soil flume. Geoderma 2017, 287, 98–104. [Google Scholar] [CrossRef]

- De Lima, J.L.M.P.; Abrantes, J.R.C.B.; Silva, V.P., Jr.; de Lima, M.I.P.; Montenegro, A.A.A. Mapping soil surface macropores using infrared thermography: An exploratory laboratory study. Sci. World J. 2014. [Google Scholar] [CrossRef] [PubMed]

- de Lima, J.L.M.P.; Abrantes, J.R.C.B.; Silva, V.P., Jr.; Montenegro, A.A.A. Prediction of skin surface soil permeability by infrared thermography: A soil flume experiment. Quant. Infrared Thermogr. J. 2014, 11, 161–169. [Google Scholar] [CrossRef]

- de Lima, J.L.M.P.; Abrantes, J.R.C.B. Using a thermal tracer to estimate overland and rill flow velocities. Earth Surf. Process. Landf. 2014, 39, 1293–1300. [Google Scholar] [CrossRef]

- Abrantes, J.R.C.B.; Moruzzi, R.B.; Silveira, A.; de Lima, J.L.M.P. Comparison of thermal, salt and dye tracing to estimate shallow flow velocities: Novel triple tracer approach. J. Hydrol. 2018, 557, 362–377. [Google Scholar] [CrossRef]

- Jackson, R.D.; Idso, S.B.; Reginato, R.J. Canopy temperature as a crop water stress indicator. Water Resour. Res. 1981, 17, 1133–1138. [Google Scholar] [CrossRef]

- Cohen, Y.; Alchanatis, V.; Saranga, Y.; Rosenberg, O.; Sela, E.; Bosak, A. Mapping water status based on aerial thermal imagery: Comparison of methodologies for upscaling from a single leaf to commercial fields. Precis. Agric. 2017, 18, 801–822. [Google Scholar] [CrossRef]

- Baluja, J.; Diago, M.P.; Balda, P.; Zorer, R.; Meggio, M.; Morales, F.; Tardaguila, J. Assessment of vineyard water status variability by thermal and multispectral imagery using an unmanned aerial vehicle (UAV). Irrig. Sci. 2012, 30, 511–522. [Google Scholar]

- Gago, J.; Douthe, D.; Florez-Sarasa, I.; Escalona, J.M.; Galmes, J.; Fernie, A.R.; Flexas, J.; Medrano, H. Opportunities for improving leaf water use efficiency under climate change conditions. Plant Sci. 2014, 226, 108–119. [Google Scholar] [CrossRef] [PubMed]

- Gonzalez-Dugo, V.; Zarco-Tejada, P.; Nicolas, E.; Nortes, P.A.; Alarcon, J.J.; Intrigliolo, D.S.; Fereres, E. Using high resolution UAV thermal imagery to assess the variability in the water status of five fruit tree species within a commercial orchard. Precis. Agric. 2013, 14, 660–678. [Google Scholar] [CrossRef]

- Bellvert, J.; Zarco-Tejada, P.J.; Girona, J.; Fereres, E. Mapping crop water stress index in a ‘Pinot-noir’ vineyard: Comparing ground measurements with thermal remote sensing imagery from an unmanned aerial vehicle. Precis. Agric. 2014, 15, 361–376. [Google Scholar] [CrossRef]

- Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59. [Google Scholar] [CrossRef]

- Ben-Dor, E.; Banin, A. Visible and near-infrared (0.4–1.1 μm) analysis of arid and semiarid soils. Remote Sens. Environ. 1994, 48, 261–274. [Google Scholar] [CrossRef]

- Ben-Dor, E.; Banin, A. Evaluation of several soil properties using convolved TM spectra. In Monitoring Soils in the Environment with Remote Sensing and GIS; ORSTOM: Paris, France, 1996; pp. 135–149. [Google Scholar]

- Soriano-Disla, J.M.; Janik, L.J.; Viscarra Rossel, R.A.; Macdonald, L.M.; McLaughlin, M.J. The performance of visible, near-, and mid-infrared reflectance spectroscopy for prediction of soil physical, chemical, and biological properties. Appl. Spectrosc. Rev. 2014, 49, 139–186. [Google Scholar] [CrossRef]

- Costa, F.G.; Ueyama, J.; Braun, T.; Pessin, G.; Osorio, F.S.; Vargas, P.A. The use of unmanned aerial vehicles and wireless sensor network in agricultural applications. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS 2012), Munich, Germany, 22–27 July 2012; pp. 5045–5048. [Google Scholar]

- Peña, J.M.; Torres-Sanchez, J.; de Castro, A.I.; Kelly, M.; Lopez-Granados, F. Weed mapping in early-season maize fields using object-based analysis of unmanned aerial vehicle (UAV) images. PLoS ONE 2013, 8, e77151. [Google Scholar] [CrossRef] [PubMed]

- Peña, J.M.; Torres-Sanchez, J.; Serrano-Perez, A.; de Castro, A.I.; Lopez-Granados, F. Quantifying Efficacy and Limits of Unmanned Aerial Vehicle (UAV) Technology for Weed Seedling Detection as Affected by Sensor Resolution. Sensors 2015, 15, 5609–5626. [Google Scholar] [CrossRef] [PubMed]

- Tang, L.; Shao, G. Drone remote sensing for forestry research and practices. J. For. Res. 2015, 26, 791–797. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar]

- Ventura, D.; Bonifazi, A.; Gravina, M.F.; Ardizzone, G.D. Unmanned Aerial Systems (UASs) for Environmental Monitoring: A Review with Applications in Coastal Habitats. In Aerial Robots-Aerodynamics, Control and Applications; InTech: Rijeka, Croatia, 2017. [Google Scholar] [CrossRef]

- Jones, G.P.; Pearlstine, L.G.; Percival, H.F. An assessment of small unmanned aerial vehicles for wildlife research. Wildl. Soc. Bull. 2006, 34, 750–758. [Google Scholar] [CrossRef]

- Chabot, D.; Bird, D.M. Evaluation of an off-the-shelf unmanned aircraft system for surveying flocks of geese. Waterbirds 2012, 35, 170–174. [Google Scholar] [CrossRef]

- Getzin, S.; Wiegand, K.; Schöning, I. Assessing biodiversity in forests using very high-resolution images and unmanned aerial vehicles. Methods Ecol. Evol. 2012, 3, 397–404. [Google Scholar] [CrossRef]

- Koh, L.P.; Wich, S.A. Dawn of drone ecology: Low-cost autonomous aerial vehicles for conservation. Trop. Conserv. Sci. 2012, 5, 121–132. [Google Scholar] [CrossRef] [Green Version]

- Michez, A.; Piégay, H.; Jonathan, L.; Claessens, H.; Lejeune, P. Mapping of riparian invasive species with supervised classification of Unmanned Aerial System (UAS) imagery. Int. J. Appl. Earth Obs. Geoinform. 2016, 44, 88–94. [Google Scholar] [CrossRef]

- Reif, M.K.; Theel, H.J. Remote sensing for restoration ecology: Application for restoring degraded, damaged, transformed, or destroyed ecosystems. Integr. Environ. Assess. Manag. 2017, 13, 614–630. [Google Scholar] [CrossRef] [PubMed]

- McKenna, P.; Erskine, P.D.; Lechner, A.M.; Phinn, S. Measuring fire severity using UAV imagery in semi-arid central Queensland, Australia. Int. J. Remote Sens. 2017, 38, 4244–4264. [Google Scholar] [CrossRef]

- Klosterman, S.; Richardson, A.D. Observing Spring and Fall Phenology in a Deciduous Forest with Aerial Drone Imagery. Sensors 2017, 17, 2852. [Google Scholar] [CrossRef] [PubMed]

- Lehmann, J.R.K.; Nieberding, F.; Prinz, T.; Knoth, C. Analysis of unmanned aerial system-based CIR images in forestry—A new perspective to monitor pest infestation levels. Forests 2015, 6, 594–612. [Google Scholar] [CrossRef] [Green Version]

- Minařík, R.; Langhammer, J. Use of a multispectral UAV photogrammetry for detection and tracking of forest disturbance dynamics. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; p. 41. [Google Scholar]

- Ahmed, O.S.; Shemrock, A.; Chabot, D.; Dillon, C.; Williams, G.; Wasson, R.; Franklin, S.E. Hierarchical land cover and vegetation classification using multispectral data acquired from an unmanned aerial vehicle. Int. J. Remote Sens. 2017, 38, 2037–2052. [Google Scholar]

- Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276. [Google Scholar] [CrossRef]

- Puliti, S.; Ørka, H.O.; Gobakken, T.; Næsset, E. Inventory of small forest areas using an unmanned aerial system. Remote Sens. 2015, 7, 9632–9654. [Google Scholar] [CrossRef] [Green Version]

- Dittmann, S.; Thiessen, E.; Hartung, E. Applicability of different non-invasive methods for tree mass estimation: A review. For. Ecol. Manag. 2017, 398, 208–215. [Google Scholar] [CrossRef]

- Otero, V.; Van De Kerchove, R.; Satyanarayana, B.; Martínez-Espinosa, C.; Fisol, M.A.B.; Ibrahim, M.R.B.; Sulong, I.; Mohd-Lokman, H.; Lucas, R.; Dahdouh-Guebas, F. Managing mangrove forests from the sky: Forest inventory using field data and Unmanned Aerial Vehicle (UAV) imagery in the Matang Mangrove Forest Reserve, peninsular Malaysia. For. Ecol. Manag. 2018, 411, 35–45. [Google Scholar] [CrossRef]

- Calviño-Cancela, M.R.; Mendez-Rial, J.R.; Reguera-Salgado, J.; Martín-Herrero, J. Alien plant monitoring with ultralight airborne imaging spectroscopy. PLoS ONE 2014, 9, e102381. [Google Scholar]

- Hill, D.J.; Tarasoff, C.; Whitworth, G.E.; Baron, J.; Bradshaw, J.L.; Church, J.S. Utility of unmanned aerial vehicles for mapping invasive plant species: A case study on yellow flag iris (Iris pseudacorus L.). Int. J. Remote Sens. 2017, 38, 2083–2105. [Google Scholar] [CrossRef]

- Müllerová, J.; Bartaloš, T.; Brůna, J.; Dvořák, P.; Vítková, M. Unmanned aircraft in nature conservation—An example from plant invasions. Int. J. Remote Sens. 2017, 38, 2177–2198. [Google Scholar] [CrossRef]

- Müllerová, J.; Brůna, J.; Bartaloš, T.; Dvořák, P.; Vítková, M.; Pyšek, P. Timing is important: Unmanned aircraft versus satellite imagery in plant invasion monitoring. Front. Plant Sci. 2017, 8, 887. [Google Scholar] [CrossRef] [PubMed]

- Rocchini, D.; Andreo, V.; Förster, M.; Garzon Lopez, C.X.; Gutierrez, A.P.; Gillespie, T.W.; Hauffe, H.C.; He, K.S.; Kleinschmit, B.; Mairota, P.; et al. Potential of remote sensing to predict species invasions: A modelling perspective. Prog. Phys. Geogr. 2015, 39, 283–309. [Google Scholar] [CrossRef]

- Lehmann, J.R.; Prinz, T.; Ziller, S.R.; Thiele, J.; Heringer, G.; Meira-Neto, J.A.; Buttschardt, T.K. Open-source processing and analysis of aerial imagery acquired with a low-cost unmanned aerial system to support invasive plant management. Front. Environ. Sci. 2017, 5, 44. [Google Scholar] [CrossRef]

- Getzin, S.; Nuske, R.S.; Wiegand, K. Using unmanned aerial vehicles (UAV) to quantify spatial gap patterns in forests. Remote Sens. 2014, 6, 6988–7004. [Google Scholar] [CrossRef]

- Quilter, M.C.; Anderson, V.J. Low altitude/large scale aerial photographs: A tool for range and resource managers. Rangel. Arch. 2000, 22, 13–17. [Google Scholar] [CrossRef]

- Knoth, C.; Klein, B.; Prinz, T.; Kleinebecker, T. Unmanned aerial vehicles as innovative remote sensing platforms for high-resolution infrared imagery to support restoration monitoring in cut-over bogs. Appl. Veg. Sci. 2013, 16, 509–517. [Google Scholar] [CrossRef]

- Tralli, D.M.; Blom, R.G.; Zlotnicki, V.; Donnellan, A.; Evans, D.L. Satellite Remote Sensing of Earthquake, Volcano, Flood, Landslide and Coastal Inundation Hazards. ISPRS J. Photogramm. Remote Sens. 2005, 59, 185–198. [Google Scholar] [CrossRef]

- Gillespie, T.W.; Chu, J.; Frankenberg, E.; Thomas, D. Assessment and Prediction of Natural Hazards from Satellite Imagery. Prog. Phys. Geogr. 2007, 31, 459–470. [Google Scholar] [CrossRef] [PubMed]

- Joyce, K.E.; Belliss, S.E.; Samsonov, S.V.; McNeill, S.J.; Glassey, P.J. A Review of the Status of Satellite Remote Sensing and Image Processing Techniques for Mapping Natural Hazards and Disasters. Prog. Phys. Geogr. 2009, 33, 183–207. [Google Scholar] [CrossRef]

- Quaritsch, M.; Kruggl, K.; Wischounig-Strucl, D.; Bhattacharya, S.; Shah, M.; Rinner, B. Networked UAVs as aerial sensor network for disaster management applications. Elektrotech. Informationstech. 2010, 127, 56–63. [Google Scholar] [CrossRef]

- Erdelj, M.; Król, M.; Natalizio, E. Wireless sensor networks and multi-UAV systems for natural disaster management. Comput. Netw. 2017, 124, 72–86. [Google Scholar] [CrossRef]

- Syvitski, J.P.M.; Overeem, I.; Brakenridge, G.R.; Hannon, M. Floods, Floodplains, Delta Plains—A Satellite Imaging Approach. Sediment. Geol. 2012, 267–268, 1–14. [Google Scholar] [CrossRef]

- Yilmaz, K.K.; Adler, R.F.; Tian, Y.; Hong, Y.; Pierce, H.F. Evaluation of a Satellite-Based Global Flood Monitoring System. Int. J. Remote Sens. 2010, 31, 3763–3782. [Google Scholar] [CrossRef]

- D’Addabbo, A.; Refice, A.; Pasquariello, G.; Lovergine, F.; Capolongo, D.; Manfreda, S. A Bayesian Network for Flood Detection Combining SAR Imagery and Ancillary Data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 3612–3625. [Google Scholar] [CrossRef]

- Fujita, I.; Muste, M.; Kruger, A. Large-scale particle image velocimetry for flow analysis in hydraulic engineering applications. J. Hydraul. Res. 1997, 36, 397–414. [Google Scholar] [CrossRef]

- Brevis, W.; Niño, Y.; Jirka, G.H. Integrating cross-correlation and relaxation algorithms for particle tracking velocimetry. Exp. Fluids 2011, 50, 135–147. [Google Scholar] [CrossRef]

- Fujita, I.; Hino, T. Unseeded and seeded PIV measurements of river flows videotaped from a helicopter. J. Vis. 2003, 6, 245–252. [Google Scholar] [CrossRef]

- Fujita, I.; Kunita, Y. Application of aerial LSPIV to the 2002 flood of the Yodo River using a helicopter mounted high density video camera. J. Hydro-Environ. Res. 2011, 5, 323–331. [Google Scholar] [CrossRef]

- Detert, M.; Weitbrecht, V. A low-cost airborne velocimetry system: Proof of concept. J. Hydraul. Res. 2015, 53, 532–539. [Google Scholar] [CrossRef]

- Tauro, F.; Pagano, C.; Phamduy, P.; Grimaldi, S.; Porfiri, M. Large-scale particle image velocimetry from an unmanned aerial vehicle. IEEE/ASME Trans. Mechatron. 2015, 20, 3269–3275. [Google Scholar] [CrossRef]

- Tauro, F.; Porfiri, M.; Grimaldi, S. Surface flow measurements from drones. J. Hydrol. 2016, 540, 240–245. [Google Scholar] [CrossRef]

- Tauro, F.; Petroselli, A.; Arcangeletti, E. Assessment of drone-based surface flow observations. Hydrol. Process. 2016, 30, 1114–1130. [Google Scholar] [CrossRef]

- Tauro, F.; Piscopia, R.; Grimaldi, S. Streamflow observations from cameras: Large Scale Particle Image Velocimetry or Particle Tracking Velocimetry? Water Resour. Res. 2018, 53, 10374–10394. [Google Scholar] [CrossRef]

- Sanyal, J.; Lu, X.X. Application of Remote Sensing in Flood Management with Special Reference to Monsoon Asia: A Review. Nat. Hazards 2004, 33, 283–301. [Google Scholar] [CrossRef]

- Perks, M.T.; Russell, A.J.; Large, A.R.G. Technical Note: Advances in flash flood monitoring using unmanned aerial vehicles (UAVs). Hydrol. Earth Syst. Sci. 2016, 20, 4005–4015. [Google Scholar] [CrossRef]

- Ferreira, E.; Chandler, J.; Wackrow, R.; Shiono, K. Automated extraction of free surface topography using SfM-MVS photogrammetry. Flow Meas. Instrum. 2017, 54, 243–249. [Google Scholar] [CrossRef]

- Bandini, F.; Butts, M.; Jacobsen, T.V.; Bauer-Gottwein, P. Water level observations from unmanned aerial vehicles for improving estimates of surface water–groundwater interaction. Hydrol. Process. 2017, 31, 4371–4383. [Google Scholar] [CrossRef]

- Detert, M.; Johnson, E.D.; Weitbrecht, V. Proof-of-concept for low-cost and non-contact synoptic airborne river flow measurements. Int. J. Remote Sens. 2017, 38, 2780–2807. [Google Scholar] [CrossRef]

- Flynn, K.F.; Chapra, S.C. Remote sensing of submerged aquatic vegetation in a shallow non-turbid river using an unmanned aerial vehicle. Remote Sens. 2014, 6, 12815–12836. [Google Scholar] [CrossRef]

- Klemas, V.V. Coastal and Environmental Remote Sensing from Unmanned Aerial Vehicles: An Overview. J. Coast. Res. 2015, 31, 1260–1267. [Google Scholar] [CrossRef]

- Wigmore, O.; Mark, B. Monitoring tropical debris-covered glacier dynamics from high-resolution unmanned aerial vehicle photogrammetry, Cordillera Blanca, Peru. Cryosphere 2017, 11, 2463–2480. [Google Scholar] [CrossRef]

- Langridge, M.; Edwards, L. Future Batteries, Coming Soon: Charge in Seconds, Last Months and Power over the Air. Gadgets 2017. Available online: https://www.pocket-lint.com/ (accessed on 13 February 2017).

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Manfreda, S.; McCabe, M.F.; Miller, P.E.; Lucas, R.; Pajuelo Madrigal, V.; Mallinis, G.; Ben Dor, E.; Helman, D.; Estes, L.; Ciraolo, G.; et al. On the Use of Unmanned Aerial Systems for Environmental Monitoring. Remote Sens. 2018, 10, 641. https://0-doi-org.brum.beds.ac.uk/10.3390/rs10040641
