Determining Subarctic Peatland Vegetation Using an Unmanned Aerial System (UAS)

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Vegetation Field Plots

2.3. UAS Image Data Collection

2.4. Georectification

2.5. Texture Analysis

2.6. Data Extraction and Statistical Analysis

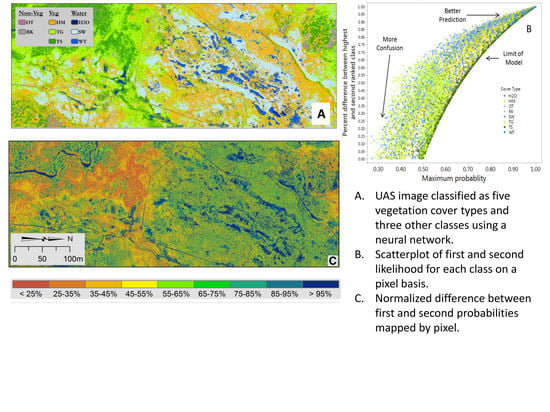

3. Results

Error Analysis and Cover Class Assessment

4. Discussion

Image Classification and Error Estimates

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Schuur, E.A.G.; McGuire, A.D.; Schädel, C.; Grosse, G.; Harden, J.W.; Hayes, D.J.; Hugelius, G.; Koven, C.D.; Kuhry, P.; Lawrence, D.M.; et al. Climate change and the permafrost carbon feedback. Nature 2015, 520, 171.
- Johansson, T.; Malmer, N.; Crill, P.M.; Friborg, T.; Åkerman, J.H.; Mastepanov, M.; Christensen, T.R. Decadal vegetation changes in a northern peatland, greenhouse gas fluxes and net radiative forcing. Glob. Chang. Biol. 2006, 12, 2352–2369.
- Christensen, T.R.; Johansson, T.; Åkerman, H.J.; Mastepanov, M.; Malmer, N.; Friborg, T.; Crill, P.; Svensson, B.H. Thawing sub-arctic permafrost: Effects on vegetation and methane emissions. Hydrol. Land Surf. Stud. 2004, 31.
- Bäckstrand, K.; Crill, P.M.; Jackowicz-Korczyński, M.; Mastepanov, M.; Christensen, T.R.; Bastviken, D. Annual carbon gas budget for a subarctic peatland, Northern Sweden. Biogeosciences 2010, 7, 95–108.
- Malhotra, A.; Roulet, N.T. Environmental correlates of peatland carbon fluxes in a thawing landscape: Do transitional thaw stages matter? Biogeosciences 2015, 12, 3119–3130.
- Hodgkins, S.B.; Tfaily, M.M.; McCalley, C.K.; Logan, T.A.; Crill, P.M.; Saleska, S.R.; Rich, V.I.; Chanton, J.P. Changes in peat chemistry associated with permafrost thaw increase greenhouse gas production. Proc. Natl. Acad. Sci. USA 2014, 111, 5819–5824.
- Ström, L.; Mastepanov, M.; Christensen, T.R. Species-specific Effects of Vascular Plants on Carbon Turnover and Methane Emissions from Wetlands. Biogeochemistry 2005, 75, 65–82.
- McCalley, C.K.; Woodcroft, B.J.; Hodgkins, S.B.; Wehr, R.A.; Kim, E.-H.; Mondav, R.; Crill, P.M.; Chanton, J.P.; Rich, V.I.; Tyson, G.W.; et al. Methane dynamics regulated by microbial community response to permafrost thaw. Nature 2014, 514, 478.
- Chambers, J.Q.; Asner, G.P.; Morton, D.C.; Anderson, L.O.; Saatchi, S.S.; Espirito-Santo, F.D.; Palace, M.; Souza, C., Jr. Regional ecosystem structure and function: Ecological insights from remote sensing of tropical forests. Trends Ecol. Evol. 2007, 22, 414–423.
- Harris, A.; Bryant, R.G. A multi-scale remote sensing approach for monitoring northern peatland hydrology: Present possibilities and future challenges. J. Environ. Manag. 2009, 90, 2178–2188.
- Hill, M.J.; Zhou, Q.; Sun, Q.; Schaaf, C.B.; Palace, M. Relationships between vegetation indices, fractional cover retrievals and the structure and composition of Brazilian Cerrado natural vegetation. Int. J. Remote Sens. 2017, 38, 874–905.
- Morton, D.C.; Nagol, J.; Carabajal, C.C.; Rosette, J.; Palace, M.; Cook, B.D.; Vermote, E.F.; Harding, D.J.; North, P.R.J. Amazon forests maintain consistent canopy structure and greenness during the dry season. Nature 2014, 506, 221.
- McMichael, C.H.; Bush, M.B.; Silman, M.R.; Piperno, D.R.; Raczka, M.; Lobato, L.C.; Zimmerman, M.; Hagen, S.; Palace, M. Historical fire and bamboo dynamics in western Amazonia. J. Biogeogr. 2012, 40, 299–309.
- Palace, M.W.; McMichael, C.N.H.; Braswell, B.H.; Hagen, S.C.; Bush, M.B.; Neves, E.; Tamanaha, E.; Herrick, C.; Frolking, S. Ancient Amazonian populations left lasting impacts on forest structure. Ecosphere 2017, 8, e02035.
- Lees, K.J.; Quaife, T.; Artz, R.R.E.; Khomik, M.; Clark, J.M. Potential for using remote sensing to estimate carbon fluxes across northern peatlands—A review. Sci. Total Environ. 2018, 615, 857–874.
- Liu, Y.; Key, J.R. Assessment of Arctic Cloud Cover Anomalies in Atmospheric Reanalysis Products Using Satellite Data. J. Clim. 2016, 29, 6065–6083.
- Arroyo-Mora, J.P.; Kalacska, M.; Soffer, R.J.; Moore, T.R.; Roulet, N.T.; Juutinen, S.; Ifimov, G.; Leblanc, G.; Inamdar, D. Airborne Hyperspectral Evaluation of Maximum Gross Photosynthesis, Gravimetric Water Content, and CO2 Uptake Efficiency of the Mer Bleue Ombrotrophic Peatland. Remote Sens. 2018, 10, 565.
- Pellissier, P.A.; Ollinger, S.V.; Lepine, L.C.; Palace, M.W.; McDowell, W.H. Remote sensing of foliar nitrogen in cultivated grasslands of human dominated landscapes. Remote Sens. Environ. 2015, 167, 88–97.
- Siewert, M.B. High-resolution digital mapping of soil organic carbon in permafrost terrain using machine learning: A case study in a sub-Arctic peatland environment. Biogeosciences 2018, 15, 1663–1682.
- Malmer, N.; Johansson, T.; Olsrud, M.; Christensen, T.R. Vegetation, climatic changes and net carbon sequestration in a North-Scandinavian subarctic mire over 30 years. Glob. Chang. Biol. 2005, 11, 1895–1909.
- Virtanen, T.; Ek, M. The fragmented nature of tundra landscape. Int. J. Appl. Earth Obs. 2014, 27, 4–12.
- Lovitt, J.; Rahman, M.M.; Saraswati, S.; McDermid, G.J.; Strack, M.; Xu, B. UAV Remote Sensing Can Reveal the Effects of Low-Impact Seismic Lines on Surface Morphology, Hydrology, and Methane (CH4) Release in a Boreal Treed Bog. J. Geophys. Res.-Biogeosci. 2018, 123, 1117–1129.
- Rahman, M.M.; McDermid, G.J.; Strack, M.; Lovitt, J. A New Method to Map Groundwater Table in Peatlands Using Unmanned Aerial Vehicles. Remote Sens. 2017, 9, 1057.
- Anderson, K.; Gaston, K.J. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146.
- Marris, E. Drones in science: Fly, and bring me data. Nature 2013, 498, 156–158.
- Bemis, S.P.; Micklethwaite, S.; Turner, D.; James, M.R.; Akciz, S.; Thiele, S.T.; Bangash, H.A. Ground-based and UAV-Based photogrammetry: A multi-scale, high-resolution mapping tool for structural geology and paleoseismology. J. Struct. Geol. 2014, 69, 163–178.
- Turner, D.; Lucieer, A.; Watson, C. An Automated Technique for Generating Georectified Mosaics from Ultra-High Resolution Unmanned Aerial Vehicle (UAV) Imagery, Based on Structure from Motion (SfM) Point Clouds. Remote Sens. 2012, 4, 1392–1410.
- Sona, G.; Pinto, L.; Pagliari, D.; Passoni, D.; Gini, R. Experimental analysis of different software packages for orientation and digital surface modelling from UAV images. Earth Sci. Inf. 2014, 7, 97–107.
- Laliberte, A.S.; Goforth, M.A.; Steele, C.M.; Rango, A. Multispectral Remote Sensing from Unmanned Aircraft: Image Processing Workflows and Applications for Rangeland Environments. Remote Sens. 2011, 3, 2529–2551.
- Strahler, A.H.; Woodcock, C.E.; Smith, J.A. On the nature of models in remote sensing. Remote Sens. Environ. 1986, 20, 121–139.
- Frolking, S.; Palace, M.W.; Clark, D.B.; Chambers, J.Q.; Shugart, H.H.; Hurtt, G.C. Forest disturbance and recovery: A general review in the context of spaceborne remote sensing of impacts on aboveground biomass and canopy structure. J. Geophys. Res.-Biogeosci. 2009, 114.
- Xie, Y.; Sha, Z.; Yu, M. Remote sensing imagery in vegetation mapping: A review. J. Plant Ecol. 2008, 1, 9–23.
- Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222.
- Pal, M.; Mather, P.M. Support vector machines for classification in remote sensing. Int. J. Remote Sens. 2005, 26, 1007–1011.
- Cracknell, M.J.; Reading, A.M. Geological mapping using remote sensing data: A comparison of five machine learning algorithms, their response to variations in the spatial distribution of training data and the use of explicit spatial information. Comput. Geosci. 2014, 63, 22–33.
- Engelmann, B.; Hayden, E.; Tasche, D. Measuring the Discriminative Power of Rating Systems. In Discussion Paper Series 2: Banking and Financial Studies; Deutsche Bundesbank: Frankfurt am Main, Germany, 2003; p. 24.
- Mahmon, N.A.; Ya’acob, N. A review on classification of satellite image using Artificial Neural Network (ANN). In Proceedings of the 2014 IEEE 5th Control and System Graduate Research Colloquium, Shah Alam, Malaysia, 11–12 August 2014; pp. 153–157.
- Atkinson, P.M.; Tatnall, A.R.L. Introduction Neural networks in remote sensing. Int. J. Remote Sens. 1997, 18, 699–709.
- Nogueira, K.; Penatti, O.A.B.; dos Santos, J.A. Towards better exploiting convolutional neural networks for remote sensing scene classification. Pattern Recognit. 2017, 61, 539–556.
- Turner, D.; Lucieer, A.; Malenovský, Z.; King, D.; Robinson, S. Spatial Co-Registration of Ultra-High Resolution Visible, Multispectral and Thermal Images Acquired with a Micro-UAV over Antarctic Moss Beds. Remote Sens. 2014, 6, 4003–4024.
- Wang, R.; Gamon, J.A.; Cavender-Bares, J.; Townsend, P.A.; Zygielbaum, A.I. The spatial sensitivity of the spectral diversity–biodiversity relationship: An experimental test in a prairie grassland. Ecol. Appl. 2017, 28, 541–556.
- Treat, C.C.; Marushchak, M.E.; Voigt, C.; Zhang, Y.; Tan, Z.; Zhuang, Q.; Virtanen, T.A.; Räsänen, A.; Biasi, C.; Hugelius, G.; et al. Tundra landscape heterogeneity, not inter-annual variability, controls the decadal regional carbon balance in the Western Russian Arctic. Glob. Chang. Biol. 2018.
- Jonasson, C.; Sonesson, M.; Christensen, T.R.; Callaghan, T.V. Environmental monitoring and research in the Abisko area-an overview. Ambio 2012, 41 (Suppl. 3), 178–186.
- Malhotra, A.; Moore, T.R.; Limpens, J.; Roulet, N.T. Post-thaw variability in litter decomposition best explained by microtopography at an ice-rich permafrost peatland. Arct. Antarct. Alp. Res. 2018, 50, e1415622.
- Jackowicz-Korczyński, M.; Christensen, T.R.; Bäckstrand, K.; Crill, P.; Friborg, T.; Mastepanov, M.; Ström, L. Annual cycle of methane emission from a subarctic peatland. J. Geophys. Res.-Biogeosci. 2010, 115.
- Mouillot, D.; Leprêtre, A. A comparison of species diversity estimators. Res. Popul. Ecol. 1999, 41, 203–215.
- Lupascu, M.; Wadham, J.L.; Hornibrook, E.R.C.; Pancost, R.D. Temperature Sensitivity of Methane Production in the Permafrost Active Layer at Stordalen, Sweden: A Comparison with Non-permafrost Northern Wetlands. Arct. Antarct. Alp. Res. 2012, 44, 469–482.
- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.
- Haralick, R.M.; Shanmugam, K.; Dinstein, I. Textural Features for Image Classification. IEEE Trans. Syst. Man Cyb. 1973, SMC-3, 610–621.
- Soares, J.V.; Rennó, C.D.; Formaggio, A.R.; da Costa Freitas Yanasse, C.; Frery, A.C. An investigation of the selection of texture features for crop discrimination using SAR imagery. Remote Sens. Environ. 1997, 59, 234–247.
- Hudak, A.T.; Wessman, C.A. Textural Analysis of Historical Aerial Photography to Characterize Woody Plant Encroachment in South African Savanna. Remote Sens. Environ. 1998, 66, 317–330.
- Ouma, Y.O.; Tetuko, J.; Tateishi, R. Analysis of co-occurrence and discrete wavelet transform textures for differentiation of forest and non-forest vegetation in very-high-resolution optical-sensor imagery. Int. J. Remote Sens. 2008, 29, 3417–3456.
- Palace, M.; Keller, M.; Asner, G.P.; Hagen, S.; Braswell, B. Amazon Forest Structure from IKONOS Satellite Data and the Automated Characterization of Forest Canopy Properties. Biotropica 2007, 40, 141–150.
- Gonzalez, P.; Asner, G.P.; Battles, J.J.; Lefsky, M.A.; Waring, K.M.; Palace, M. Forest carbon densities and uncertainties from Lidar, QuickBird, and field measurements in California. Remote Sens. Environ. 2010, 114, 1561–1575.
- Ahmed, A.; Gibbs, P.; Pickles, M.; Turnbull, L. Texture analysis in assessment and prediction of chemotherapy response in breast cancer. J. Magn. Reson. Imaging 2013, 38, 89–101.
- Yuan, J.; Wang, D.; Li, R. Remote Sensing Image Segmentation by Combining Spectral and Texture Features. IEEE Trans. Geosci. Remote 2014, 52, 16–24.
- Hall-Beyer, M. Practical guidelines for choosing GLCM textures to use in landscape classification tasks over a range of moderate spatial scales. Int. J. Remote Sens. 2017, 38, 1312–1338.
- Nagelkerke, N.J.D. A note on a general definition of the coefficient of determination. Biometrika 1991, 78, 691–692.
- Paliwal, M.; Kumar, U.A. Neural networks and statistical techniques: A review of applications. Expert Syst. Appl. 2009, 36, 2–17.
- Guisan, A.; Zimmermann, N.E. Predictive habitat distribution models in ecology. Ecol. Model. 2000, 135, 147–186.
- Nakagawa, S.; Schielzeth, H. A general and simple method for obtaining R2 from generalized linear mixed-effects models. Methods Ecol. Evol. 2012, 4, 133–142.
- Cragg, J.G.; Uhler, R.S. The Demand for Automobiles. Can. J. Econ. Rev. Can. d’Econ. 1970, 3, 386–406.
- Cox, D.R.; Snell, E.J. The Analysis of Binary Data, 2nd ed.; Chapman and Hall/CRC: London, UK, 1989; p. 240.
- Pontius, R.G.; Millones, M. Death to Kappa: Birth of quantity disagreement and allocation disagreement for accuracy assessment. Int. J. Remote Sens. 2011, 32, 4407–4429.
- Tapia, R.; Stein, A.; Bijker, W. Optimization of sampling schemes for vegetation mapping using fuzzy classification. Remote Sens. Environ. 2005, 99, 425–433.
- Mikola, J.; Virtanen, T.; Linkosalmi, M.; Vähä, E.; Nyman, J.; Postanogova, O.; Räsänen, A.; Kotze, D.J.; Laurila, T.; Juutinen, S.; et al. Spatial variation and linkages of soil and vegetation in the Siberian Arctic tundra—Coupling field observations with remote sensing data. Biogeosciences 2018, 15, 2781–2801.
- Palace, M.W.; Sullivan, F.B.; Ducey, M.J.; Treuhaft, R.N.; Herrick, C.; Shimbo, J.Z.; Mota-E-Silva, J. Estimating forest structure in a tropical forest using field measurements, a synthetic model and discrete return lidar data. Remote Sens. Environ. 2015, 161, 1–11.
- Palace, M.; Sullivan, F.B.; Ducey, M.; Herrick, C. Estimating Tropical Forest Structure Using a Terrestrial Lidar. PLoS ONE 2016, 11, e0154115.
- Howey, M.C.L.; Sullivan, F.B.; Tallant, J.; Kopple, R.V.; Palace, M.W. Detecting Precontact Anthropogenic Microtopographic Features in a Forested Landscape with Lidar: A Case Study from the Upper Great Lakes Region, AD 1000–1600. PLoS ONE 2016, 11, e0162062.

| Cover Type | Soils and Vegetation | Dominant Vegetative Species | % | Second Dominant Vegetative Species | % | Diversity Index | Connecting Letters Report |
|---|---|---|---|---|---|---|---|
| Tall Shrub | Ombrotrophic, found in dry areas | Dwarf Birch (Betula nana) | 18.7 | Cloudberry (Rubus chamaemorus) | 11.4 | 1.53 | A |
| Hummock | Ombrotrophic, on permafrost | Crowberry (Empetrum hermaphroditum) | 16.9 | Hare's Tail (Eriophorum vaginatum) | 16.1 | 1.44 | A |
| Semi-Wet | Ombrotrophic or minerotrophic | Sphagnum sp. | 43.1 | Hare's Tail (Eriophorum vaginatum) | 15.6 | 0.61 | B |
| Wet | Ombrotrophic | Open Water | 43.1 | Sphagnum sp. | 8.2 | 0.70 | B |
| Tall Graminoid | Wet minerotrophic | Carex sp. | 30.7 | Cotton Tail (Eriophorum angustifolium) | 11.5 | 0.90 | B |
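The Diversity Index column is not defined in this section. If it is the Shannon index H' (an assumption; a comparison of species diversity estimators by Mouillot and Leprêtre is cited, but the specific index is not named here), a plot-level value could be computed from percent cover roughly as in the Python sketch below. The function name `shannon_index` and the example cover values are hypothetical and are not field data from this study.

```python
import math
from typing import Mapping


def shannon_index(cover: Mapping[str, float]) -> float:
    """Shannon diversity H' = -sum(p_i * ln(p_i)).

    Assumption: p_i is each species' share of the total recorded cover in one
    plot. Species with zero cover are skipped, since p * ln(p) -> 0 as p -> 0.
    """
    total = sum(cover.values())
    if total <= 0:
        raise ValueError("total cover must be positive")
    h = 0.0
    for value in cover.values():
        if value > 0:
            p = value / total
            h -= p * math.log(p)
    return h


# Hypothetical plot: these percent-cover values are illustrative only.
example_plot = {"species_a": 40.0, "species_b": 30.0, "species_c": 20.0, "species_d": 10.0}
print(f"H' = {shannon_index(example_plot):.2f}")
```

The natural logarithm is used here; some studies use log base 2 or 10, which rescales H' by a constant, so the base would need to match the original analysis before comparing against the table.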

| Cover Type | Abbrev. | Pixels | Percent |
|---|---|---|---|
| Other | OT | 1,028,465 | 0.7% |
| Rock | RK | 4,882,573 | 3.1% |
| Tall Graminoid | TG | 38,379,784 | 24.4% |
| Hummock | HM | 42,193,103 | 26.8% |
| Tall Shrub | TS | 26,852,493 | 17.1% |
| Water | H2O | 787,946 | 0.5% |
| Wet | WT | 8,538,871 | 5.4% |
| Semi-Wet | SW | 34,574,233 | 22.0% |
| Total | TT | 157,237,468 | 100.0% |
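The Percent column is each cover class's share of all classified pixels. A short Python check, using the pixel counts transcribed from the table, recomputes those shares and confirms that the eight class counts add up to the stated total of 157,237,468 pixels. The helper name `cover_percentages` is illustrative.

```python
# Pixel counts per classified cover type, transcribed from the table above.
pixel_counts = {
    "OT": 1_028_465,
    "RK": 4_882_573,
    "TG": 38_379_784,
    "HM": 42_193_103,
    "TS": 26_852_493,
    "H2O": 787_946,
    "WT": 8_538_871,
    "SW": 34_574_233,
}


def cover_percentages(counts):
    """Return each class's share of all classified pixels, in percent."""
    total = sum(counts.values())
    return {cls: 100.0 * n / total for cls, n in counts.items()}


for cls, pct in cover_percentages(pixel_counts).items():
    print(f"{cls:>3}: {pct:5.1f}%")
print(f"Total pixels: {sum(pixel_counts.values()):,}")
```

Rounded to one decimal place, the computed shares match the Percent column.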

Training Prediction Rate

| Classes | H2O | HM | OT | RK | SW | TG | TS | WT |
|---|---|---|---|---|---|---|---|---|
| H2O | 1.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| HM | 0.00 | 0.82 | 0.00 | 0.00 | 0.07 | 0.08 | 0.02 | 0.00 |
| OT | 0.00 | 0.00 | 0.96 | 0.04 | 0.00 | 0.00 | 0.00 | 0.00 |
| RK | 0.00 | 0.01 | 0.02 | 0.79 | 0.01 | 0.03 | 0.13 | 0.02 |
| SW | 0.00 | 0.13 | 0.00 | 0.01 | 0.77 | 0.08 | 0.00 | 0.02 |
| TG | 0.00 | 0.13 | 0.00 | 0.01 | 0.11 | 0.50 | 0.25 | 0.00 |
| TS | 0.00 | 0.04 | 0.00 | 0.02 | 0.03 | 0.32 | 0.59 | 0.00 |
| WT | 0.00 | 0.19 | 0.00 | 0.00 | 0.13 | 0.12 | 0.01 | 0.55 |

Validation Prediction Rate

| Classes | H2O | HM | OT | RK | SW | TG | TS | WT |
|---|---|---|---|---|---|---|---|---|
| H2O | 1.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |
| HM | 0.00 | 0.84 | 0.00 | 0.00 | 0.07 | 0.08 | 0.02 | 0.01 |
| OT | 0.00 | 0.00 | 0.95 | 0.05 | 0.00 | 0.00 | 0.00 | 0.00 |
| RK | 0.00 | 0.01 | 0.02 | 0.79 | 0.01 | 0.03 | 0.13 | 0.02 |
| SW | 0.00 | 0.14 | 0.00 | 0.00 | 0.75 | 0.08 | 0.00 | 0.02 |
| TG | 0.00 | 0.14 | 0.00 | 0.00 | 0.11 | 0.50 | 0.25 | 0.00 |
| TS | 0.00 | 0.04 | 0.00 | 0.02 | 0.03 | 0.33 | 0.58 | 0.00 |
| WT | 0.00 | 0.20 | 0.00 | 0.00 | 0.13 | 0.11 | 0.01 | 0.55 |

| Class | Training Omission Error | Training Commission Error | Validation Omission Error | Validation Commission Error |
|---|---|---|---|---|
| H2O | 0.00 | 0.00 | 0.00 | 0.00 |
| HM | 0.29 | 0.18 | 0.30 | 0.16 |
| OT | 0.03 | 0.04 | 0.02 | 0.05 |
| RK | 0.20 | 0.21 | 0.17 | 0.21 |
| SW | 0.29 | 0.23 | 0.29 | 0.25 |
| TG | 0.52 | 0.50 | 0.52 | 0.50 |
| TS | 0.32 | 0.41 | 0.32 | 0.42 |
| WT | 0.13 | 0.45 | 0.15 | 0.46 |
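Each row of the training and validation prediction-rate tables sums to 1 within rounding, so those matrices appear to be row-normalized. The Python sketch below, assuming that orientation, recovers a per-class error rate as 1 minus the diagonal entry of each row. For every training class this reproduces the commission-error column of the error table, and for validation it agrees to within 0.01 (WT: 0.45 vs. 0.46), presumably because the published rates are rounded. Under the common convention in which rows are reference (field) classes, 1 minus the row diagonal is usually called omission error, so which orientation the original tables use is an assumption worth checking. The function name `row_error_rates` and the tolerance of 0.025 are illustrative choices.

```python
CLASSES = ["H2O", "HM", "OT", "RK", "SW", "TG", "TS", "WT"]

# Prediction-rate matrices transcribed from the tables above. Each row is one
# class and lists the proportion of its samples assigned to each column class.
TRAINING = [
    [1.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],
    [0.00, 0.82, 0.00, 0.00, 0.07, 0.08, 0.02, 0.00],
    [0.00, 0.00, 0.96, 0.04, 0.00, 0.00, 0.00, 0.00],
    [0.00, 0.01, 0.02, 0.79, 0.01, 0.03, 0.13, 0.02],
    [0.00, 0.13, 0.00, 0.01, 0.77, 0.08, 0.00, 0.02],
    [0.00, 0.13, 0.00, 0.01, 0.11, 0.50, 0.25, 0.00],
    [0.00, 0.04, 0.00, 0.02, 0.03, 0.32, 0.59, 0.00],
    [0.00, 0.19, 0.00, 0.00, 0.13, 0.12, 0.01, 0.55],
]
VALIDATION = [
    [1.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00, 0.00],
    [0.00, 0.84, 0.00, 0.00, 0.07, 0.08, 0.02, 0.01],
    [0.00, 0.00, 0.95, 0.05, 0.00, 0.00, 0.00, 0.00],
    [0.00, 0.01, 0.02, 0.79, 0.01, 0.03, 0.13, 0.02],
    [0.00, 0.14, 0.00, 0.00, 0.75, 0.08, 0.00, 0.02],
    [0.00, 0.14, 0.00, 0.00, 0.11, 0.50, 0.25, 0.00],
    [0.00, 0.04, 0.00, 0.02, 0.03, 0.33, 0.58, 0.00],
    [0.00, 0.20, 0.00, 0.00, 0.13, 0.11, 0.01, 0.55],
]


def row_error_rates(matrix, tol=0.025):
    """Return 1 - diagonal for each row of a row-normalized rate matrix.

    Each row is first checked to sum to 1 within `tol`, because the published
    rates are rounded to two decimal places.
    """
    errors = []
    for i, row in enumerate(matrix):
        if abs(sum(row) - 1.0) > tol:
            raise ValueError(f"row {i} does not sum to 1: {sum(row):.2f}")
        errors.append(round(1.0 - row[i], 2))
    return errors


for cls, e_tr, e_va in zip(CLASSES, row_error_rates(TRAINING), row_error_rates(VALIDATION)):
    print(f"{cls:>3}  training {e_tr:.2f}  validation {e_va:.2f}")
```

The other error column cannot be recovered from these rates alone: a column-wise error depends on how many samples each class contributed, and those counts are not given here.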

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Palace, M.; Herrick, C.; DelGreco, J.; Finnell, D.; Garnello, A.J.; McCalley, C.; McArthur, K.; Sullivan, F.; Varner, R.K. Determining Subarctic Peatland Vegetation Using an Unmanned Aerial System (UAS). Remote Sens. 2018, 10, 1498. https://doi.org/10.3390/rs10091498
