TopoLAP: Topology Recovery for Building Reconstruction by Deducing the Relationships between Linear and Planar Primitives

Abstract

1. Introduction

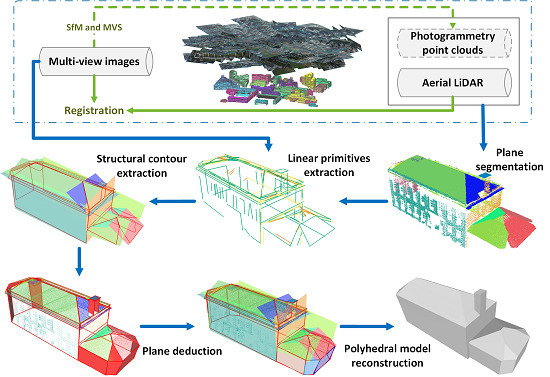

- Structural contour extraction from point clouds, taking full advantage of the characteristics of topological and textural information.

- Topology recovery for the incomplete planar primitives based on the relationship between the linear and planar primitives, which generates a complete set of candidate planes to describe the building.

- An optimized pipeline to reconstruct polyhedral models efficiently with high fitness to point clouds and high correctness of planar primitives.

2. Related Works

2.1. Linear Primitive Extraction and Generalization

2.2. Planar Primitive-Based Building Reconstruction

2.3. Topology Repair towards the Incomplete Dataset

3. Overview

4. Structural Planar Contour Extraction

4.1. Linear Feature Detection

| Algorithm 1. Boundary line contouring |
|---|
| Input: Concave hull point string; boundary lines and their endpoints |
| Output: Looped boundary line strings |
| 1 for each do |
| 2 if then |
| 3 Status() = UNUSED |
| 4 end |
| 5 end |
| 6 for each do |
| 7 closest to |
| 8 closest to |
| 9 for each Status() = UNUSED, do |
| 10 add to the final endpoint set |
| 11 end |
| 12 for each do |
| 13–14 add to |
| 15 end |
| 16 end |
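As a rough illustration of the idea behind Algorithm 1, the sketch below orders boundary lines along a concave hull and chains them into a closed loop. It is not the authors' procedure: the symbols of the original listing are not recoverable here, so the function names `closest_index` and `loop_boundary_lines`, the 2D tuple representation, and the nearest-hull-vertex rule are all assumptions made for this example.

```python
import math

def closest_index(hull, p):
    """Index of the hull vertex nearest to point p (hypothetical helper)."""
    return min(range(len(hull)), key=lambda i: math.dist(hull[i], p))

def loop_boundary_lines(hull, segments):
    """Chain unordered 2D segments into one loop that follows the hull order.

    hull     -- ordered list of (x, y) vertices of a closed concave hull
    segments -- list of ((x, y), (x, y)) boundary lines, in any order/direction
    """
    n = len(hull)
    oriented = []
    for a, b in segments:
        ia, ib = closest_index(hull, a), closest_index(hull, b)
        # Flip the segment if walking forward along the hull from a to b
        # would take the long way round.
        if (ib - ia) % n > n / 2:
            a, b, ia = b, a, ib
        oriented.append((ia, a, b))
    # Visit segments in the order their start points appear on the hull.
    oriented.sort(key=lambda item: item[0])
    loop = []
    for _, a, b in oriented:
        for v in (a, b):
            if not loop or loop[-1] != v:  # skip repeated shared endpoints
                loop.append(v)
    if len(loop) > 1 and loop[0] == loop[-1]:
        loop.pop()  # the loop is implicitly closed
    return loop
```

Where consecutive segments do not share an endpoint, this sketch simply keeps both endpoints, which amounts to bridging the gap with a straight connector; a fuller implementation would also need the status bookkeeping that the listing above refers to.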

4.2. Contour Optimization

4.2.1. Hypothesis Generation

4.2.2. Energy Formulation

5. Plane Deduction Based on the Relationships between Linear and Planar Primitives

5.1. Geometry Primitive Candidates

5.2. Plane Detection from Lines and Validation Criterion

5.2.1. Line-and-Plane (LaP) Group

5.2.2. Line-and-Line (LaL) Unit

- Connected junction. Border lines are considered connected and are snapped together if the two lines intersect and the sum of the distances from the intersection point to the closer endpoint of each segment is less than a distance threshold.

  - (a) If three or more border lines are connected as a triple junction, check whether the extended lines of the outward borders meet an existing plane candidate. If so, connect the intersection points on that plane; otherwise, connect the two outward endpoints of the triple junction as the contour that encloses the unit.

  - (b) If only two border lines are connected, check whether any planes intersect the junction. If planes are found, take the connections between the intersection points as contour segments. If no plane is nearby (see the third subfigure in Figure 9b), find inner edges, and points from UA, that lie inside the angle between the two segments. When more than the threshold number of candidates are found, close the group polygon with a convex hull; otherwise, add parallel segments but leave this LaP group pending in the candidate pools.

  - (c) For a junction where the segments intersect but their endpoints are not connected, split the segments at the intersection point, which divides the group into four areas, each of which has the form of type (b). Examine each sub-part as in (b) and take the one with the largest number of candidates as the final LaP group.

- Parallel pair. Border lines confirmed as a parallel pair are valid only if more than the threshold number of candidates can be attached to the LaP group. The borders are then connected in the same way as for the triple junction, as illustrated in Figure 9a.

- Scattering lines. A LaP group in which no connected junction or parallel pair can be detected is treated as a group of scattering lines (Figure 9e). It is validated simply by the number of lines and points attached to it; that is, a group to which fewer than the threshold number of candidates can be attached is abandoned.
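The connected-junction criterion can be sketched in a few lines. This is a minimal example under stated assumptions: segments are 2D tuples in the coordinate frame of their plane, "the two lines intersect" is read as the supporting (infinite) lines intersecting, and the names `line_intersection`, `is_connected_junction`, and `dist_threshold` are hypothetical.

```python
import math

def line_intersection(p1, p2, q1, q2, eps=1e-12):
    """Intersect the infinite lines through (p1, p2) and (q1, q2).

    Returns the intersection point, or None if the lines are parallel.
    """
    d1 = (p2[0] - p1[0], p2[1] - p1[1])
    d2 = (q2[0] - q1[0], q2[1] - q1[1])
    cross = d1[0] * d2[1] - d1[1] * d2[0]
    if abs(cross) < eps:
        return None
    # Solve p1 + t * d1 = q1 + s * d2 for t.
    t = ((q1[0] - p1[0]) * d2[1] - (q1[1] - p1[1]) * d2[0]) / cross
    return (p1[0] + t * d1[0], p1[1] + t * d1[1])

def is_connected_junction(seg_a, seg_b, dist_threshold):
    """True if the summed gap from the intersection point to the closer
    endpoint of each segment is below dist_threshold."""
    x = line_intersection(*seg_a, *seg_b)
    if x is None:
        return False
    gap = sum(min(math.dist(x, e) for e in seg) for seg in (seg_a, seg_b))
    return gap < dist_threshold

# Two nearly touching perpendicular borders: the gap is about 0.2.
a = ((0.0, 0.0), (1.0, 0.0))
b = ((1.1, 0.1), (1.1, 1.0))
print(is_connected_junction(a, b, dist_threshold=0.5))
```

Reading the criterion on the supporting lines rather than the finite segments lets two borders that stop just short of each other still qualify, which seems to be the intent of snapping them together.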

5.3. Deduction Iterations

1. Planes are detected using RANSAC, and new LaP groups are formed.

2. For each LaP group, the LaL unit is identified and evaluated according to its unit pattern. Once the LaL unit is validated, its lines are assembled into a new plane, and the line and point candidates that lie within the distance threshold of this plane are retrieved from their respective candidate sets and assigned to the group.

3. Subsequently, the structural contour of the plane is extracted with the algorithm proposed in Section 4, and the contour segments, if available, are added to the candidates.

4. Return to the start and repeat steps 1–3. Each new iteration starts from the enlarged candidate set, and the process stops when no more LaP groups can be detected and/or no more LaL units can be validated.
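The candidate-assignment part of the iteration (collecting the lines and points that lie close to a newly assembled plane) can be sketched as follows. This is an illustrative example only: the plane is assumed to be given as coefficients `(a, b, c, d)` of `ax + by + cz + d = 0`, a line is treated as close when both of its endpoints are within the threshold, and the names `point_plane_distance`, `split_by_plane`, and `eps` are hypothetical.

```python
import math

def point_plane_distance(point, plane):
    """Unsigned distance from a 3D point to the plane ax + by + cz + d = 0."""
    a, b, c, d = plane
    norm = math.sqrt(a * a + b * b + c * c)
    return abs(a * point[0] + b * point[1] + c * point[2] + d) / norm

def split_by_plane(plane, lines, points, eps):
    """Partition candidates into those within eps of the plane and the rest.

    lines  -- list of (endpoint, endpoint) pairs of 3D tuples
    points -- list of 3D tuples
    Returns ((near_lines, near_points), (far_lines, far_points)).
    """
    def close(p):
        return point_plane_distance(p, plane) <= eps

    near_lines, far_lines = [], []
    for seg in lines:
        # Assumption: a segment is attached only if both endpoints are close.
        (near_lines if all(close(e) for e in seg) else far_lines).append(seg)

    near_points, far_points = [], []
    for p in points:
        (near_points if close(p) else far_points).append(p)

    return (near_lines, near_points), (far_lines, far_points)
```

Returning the leftover candidates alongside the assigned ones makes it straightforward to feed the remainder into the next iteration.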

5.4. Watertight Polyhedral Model Reconstruction Based on Optimized PolyFit

6. Experimental Results and Discussion

6.1. Data Overview

6.2. Building Reconstruction Results

6.3. Performance Analysis of the Linear Primitives

6.4. Limitations

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


[Image grid: input planar segments, reference image, and output models for Dublin1, Dublin2, Dublin3, Dublin4, Ningbo, and MVS]

| Bld. | #Points/av. Spacing ¹ | #Images/H/GSD ² | #Planes/#Segments ³ | #Deduced/#Model Planes ⁴ | Runtime (s) |
|---|---|---|---|---|---|
| Dublin1 | 1.1M/0.11 | 115/300/3.4 | 103/65 | 125/109 | 35.5 |
| Dublin2 | 695K/0.09 | 118/300/3.4 | 26/19 | 30/22 | 20.3 |
| Dublin3 | 266K/0.07 | 41/300/3.4 | 23/14 | 29/25 | 25.6 |
| Dublin4 | 158K/0.11 | 49/300/3.4 | 24/26 | 35/16 | 18.8 |
| Ningbo | 17K/0.64 | 99/900/4.8 | 15/14 | 25/14 | 15.9 |
| MVS | 237K/0.24 | 50/300/3.8 | 20/10 | 28/23 | 23.6 |

| Parameter | Dublin | Ningbo | MVS | Representation |
|---|---|---|---|---|
|  | 0.1 m | 1 m | 1 m | Distance threshold in plane segmentation |
|  | 1000 | 200 | 1000 | Min points number to support a plane segment |
|  |  |  |  | Max square of circumradius of facets in α-shape mesh |
|  | 2 m | 2 m | 2 m | Max distance to intersect two planes |
|  | 0.5 m | 2 m | 2 m | Buffer threshold to support a line |
|  | 10 | 10 | 10 | Smooth scalar in contour optimization |
|  | 5 | 5 | 5 | The min number of the sum of lines and points to support a new LaP group |

| Bld. | Metric | 2.5D-DC ¹ |  | LoD2 |  | PolyFit |  | TopoLAP |  |
|---|---|---|---|---|---|---|---|---|---|
| Dublin4 |  | 0.060 | 0.187 | 0.222 | 0.429 | 0.343 | 0.583 | 0.238 | 0.442 |
|  |  | 6.56 × 10⁻³ | 0.093 | 4.23 × 10⁻³ | 0.069 | 1.86 × 10⁻³ | 0.01 | 1.53 × 10⁻³ | 0.024 |
|  | #Planes | 11,138 |  | 124 |  | 17 |  | 16 |  |
|  |  | 86.6% | 91.3% | 76.9% | 82.3% | 76.5% | 76.5% | 93.7% | 93.7% |
| Ningbo |  | 0.446 | 0.885 | 0.209 | 0.517 | 0.535 | 0.946 | 0.392 | 0.761 |
|  |  | 830 × 10⁻³ | 0.918 | 59 × 10⁻³ | 0.638 | 6.69 × 10⁻³ | 0.224 | 6.12 × 10⁻³ | 0.169 |
|  | #Planes | 1121 |  | 342 |  | 12 |  | 14 |  |
|  |  | 62.3% | 67.4% | 73.6% | 82.1% | 66.7% | 66.7% | 92.8% | 100.0% |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, X.; Zhang, Y.; Ling, X.; Wan, Y.; Liu, L.; Li, Q. TopoLAP: Topology Recovery for Building Reconstruction by Deducing the Relationships between Linear and Planar Primitives. Remote Sens. 2019, 11, 1372. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11111372
