Towards Automatic Segmentation and Recognition of Multiple Precast Concrete Elements in Outdoor Laser Scan Data

Abstract

1. Introduction

- For PCE shape, we simultaneously scan three PCEs of the same type but with different shapes.

- For PCE type, we simultaneously scan three PCEs of different types.

- For PCE amount, we simultaneously scan 16 PCEs covering nine different types.

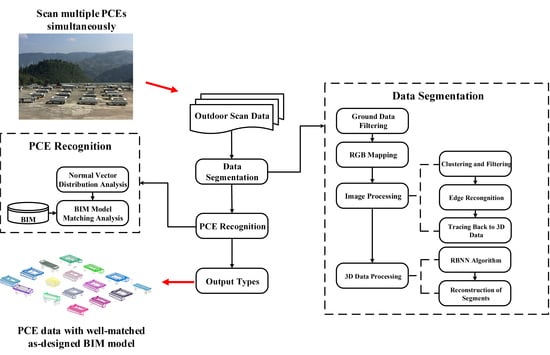

- To improve the efficiency of PCE quality inspection, we have proposed an automatic segmentation and recognition approach that extracts each PCE from outdoor laser scan data and identifies its type. To the best of our knowledge, this is the first attempt in the literature to automatically recognize multiple PCEs scanned simultaneously.

- To handle the heavy computational burden, we have proposed an approach based on image processing and the RBNN algorithm to segment the outdoor laser scan data.

- To trace 2D image clusters back to the 3D laser scan data, we have developed a novel active window algorithm that recovers the corresponding laser scan data from an edge image.

- To verify the effectiveness of the proposed approach, we have performed experiments on outdoor laser scan data containing multiple PCEs from three aspects: PCE shape, PCE type, and PCE amount. To the best of our knowledge, no previous study has reported experiments this comprehensive.
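The RBNN (radially bounded nearest neighbor graph) clustering mentioned above can be illustrated with a small, generic sketch following the idea of Klasing et al. (2008): points closer than a radius are linked, each connected group becomes a cluster, and clusters smaller than a minimum size are discarded. This is not the authors' implementation; the function name `rbnn`, the parameters `radius` and `min_points`, and the brute-force neighbor search are assumptions chosen for illustration.

```python
import numpy as np
from collections import Counter


def rbnn(points, radius, min_points=1):
    """Generic RBNN clustering sketch (hypothetical helper).

    Points closer than `radius` are linked; each connected group is a cluster.
    Clusters with fewer than `min_points` points are labelled -1 (noise).
    """
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    parent = list(range(n))  # union-find forest over point indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Brute-force pairwise squared distances: O(N^2) memory, small clouds only.
    diff = pts[:, None, :] - pts[None, :, :]
    close = (diff ** 2).sum(axis=-1) <= radius ** 2
    for i, j in zip(*np.nonzero(np.triu(close, k=1))):
        ri, rj = find(int(i)), find(int(j))
        if ri != rj:
            parent[rj] = ri  # merge the two clusters

    roots = [find(i) for i in range(n)]
    sizes = Counter(roots)

    labels = np.full(n, -1, dtype=int)
    relabel = {}
    for i, r in enumerate(roots):
        if sizes[r] >= min_points:
            # consecutive cluster ids in order of first appearance
            labels[i] = relabel.setdefault(r, len(relabel))
    return labels
```

For full outdoor scans with millions of points, the O(N²) distance matrix would have to be replaced by a spatial index such as a k-d tree; the linking and size-filtering logic stays the same.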

2. Preliminary

2.1. Research Background & Motivation

2.2. Related Works

2.2.1. Laser Scan Data Segmentation

2.2.2. Object recognition in the AEC industry

3. The Proposed Segmentation and Recognition Approach

3.1. Data Segmentation

3.1.1. Ground Data Filtering

3.1.2. RGB Mapping

3.1.3. Image Processing

Clustering and Filtering

Edge Recognition

Tracing Back to 3D Data

- The central element of the active window is placed on the calculated grid in the edge image, and the other elements of the active window are placed on the corresponding surrounding grids.

- When the calculated grid lies so close to the image border that its surrounding elements do not fill the active window, the missing elements are supplemented with 0.

- All elements of the edge image selected under the first rule are multiplied by the elements at the corresponding locations in the active window, and the products replace the selected elements.

- The algorithm stops when all elements in the edge image have become 0. The minimum rectangle enclosing all tracks of the active window during this process is taken as the output range of the elements in the edge image.
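The four rules above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' Algorithm 1: the active window is assumed to be an n × n array of zeros (so the multiplication in the third rule clears every edge element it covers), the next calculated grid is assumed to be any remaining non-zero element covered by the window, and one output rectangle is produced per traced edge. The names `active_window_ranges`, `select_points` and `grid_rc` are hypothetical; `grid_rc` stands in for the mapping image I by giving the (row, column) grid of each 3D point.

```python
import numpy as np
from collections import deque


def active_window_ranges(edge, n=3):
    """Trace edge elements with an n x n active window (hypothetical sketch).

    Returns one rectangle (r_min, r_max, c_min, c_max) per traced edge,
    in edge-image coordinates.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer (the window needs a centre)")
    h = n // 2
    rows, cols = np.shape(edge)
    # Rule 2: pad with h zeros so the window always fits, even at the border.
    P = np.pad(np.asarray(edge, dtype=np.int64), h)
    window = np.zeros((n, n), dtype=np.int64)  # assumed all-zero active window
    ranges = []
    while P.any():  # Rule 4: stop once every edge element is 0
        R, C = (int(v) for v in np.argwhere(P)[0])  # padded coordinates
        P[R, C] = 0
        queue = deque([(R, C)])
        r_min = r_max = R - h  # track window centres in original coordinates
        c_min = c_max = C - h
        while queue:
            R, C = queue.popleft()
            r_min, r_max = min(r_min, R - h), max(r_max, R - h)
            c_min, c_max = min(c_min, C - h), max(c_max, C - h)
            # Rule 1: centre the window on the calculated grid (R, C).
            block = P[R - h:R + h + 1, C - h:C + h + 1]
            # Remaining non-zero elements under the window become later centres.
            for dr, dc in np.argwhere(block):
                queue.append((R - h + int(dr), C - h + int(dc)))
            # Rule 3: multiply the covered elements by the window, write back.
            P[R - h:R + h + 1, C - h:C + h + 1] = block * window
        # Minimum rectangle covering every window position, clipped to the image.
        ranges.append((max(0, r_min - h), min(rows - 1, r_max + h),
                       max(0, c_min - h), min(cols - 1, c_max + h)))
    return ranges


def select_points(points, grid_rc, rect):
    """Keep the 3D points whose (row, col) grid in the mapping image lies in rect."""
    r0, r1, c0, c1 = rect
    g = np.asarray(grid_rc)
    mask = (g[:, 0] >= r0) & (g[:, 0] <= r1) & (g[:, 1] >= c0) & (g[:, 1] <= c1)
    return np.asarray(points)[mask]
```

Zero-padding the edge image once plays the role of the second rule, since padded elements are 0 and remain 0 after multiplication; the input edge image itself is left unchanged.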

| Algorithm 1: Active window algorithm |
|---|
| Input: Mapping image I, Edge image E, Active window size n |
| Output: Segmented data D |

3.1.4. 3D Data Processing

3.2. Precast Concrete Element Recognition

3.2.1. Normal Vector Distribution Analysis

3.2.2. BIM model Matching Analysis

4. Experiments on the Scanning of Multiple PCEs Simultaneously

4.1. Validation Experiment

Experimental Data Information

4.2. Segmentation Results

4.3. Recognition Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PCE | Precast concrete element |
| TLS | Terrestrial laser scanner |
| BIM | Building information modeling |
| RBNN | Radially bounded nearest neighbor graph |
| AEC | Architecture, engineering, and construction |
| RANSAC | Random sample consensus |
| PCA | Principal component analysis |
| DOC | Degree of completeness |


| Project | | | Tolerances (mm) | Measurement Methods |
|---|---|---|---|---|
| Length | Beam, Column, Slab, Truss | <12 m | ±5 | Ruler measurement |
| | | ≥12 m and <18 m | ±10 | |
| | | ≥18 m | ±20 | |
| | Wall panel | | ±4 | |
| Width, Height (Thickness) | The section of Beam, Column, Slab, Truss | | ±5 | Ruler measurement |
| | Wall panel | | ±3 | |
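The tolerance table above can be encoded as a simple lookup when checking dimensions extracted from segmented scan data. This sketch assumes that each ± value bounds the deviation of a measured dimension from its design value; the function names, the lowercase element labels, and the `FRAME_ELEMENTS` set are hypothetical.

```python
FRAME_ELEMENTS = {"beam", "column", "slab", "truss"}


def length_tolerance_mm(element, length_m):
    """Allowable length deviation (mm) per the tolerance table."""
    if element == "wall panel":
        return 4.0
    if element in FRAME_ELEMENTS:
        if length_m < 12:
            return 5.0
        if length_m < 18:
            return 10.0
        return 20.0
    raise ValueError(f"no length tolerance listed for {element!r}")


def section_tolerance_mm(element):
    """Allowable width/height (thickness) deviation (mm) per the tolerance table."""
    if element == "wall panel":
        return 3.0
    if element in FRAME_ELEMENTS:
        return 5.0
    raise ValueError(f"no section tolerance listed for {element!r}")


def within_tolerance(measured_mm, design_mm, tol_mm):
    """True if the measured dimension deviates from design by at most tol_mm."""
    return abs(measured_mm - design_mm) <= tol_mm


if __name__ == "__main__":
    tol = length_tolerance_mm("beam", 6.0)  # a 6 m beam falls in the <12 m band
    print(within_tolerance(6003.0, 6000.0, tol))  # True
```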

| Laser Scan Data | | | | |
|---|---|---|---|---|
| The data in Figure 10 | 281,270 | 14,542 | 5.17 | 2 |
| | | 4005 | 1.42 | |
| The data in Figure 11a | 99,747 | 76 | 0.08 | 0 |
| The data in Figure 11b | 184,866 | 29,055 | 15.72 | 1 |

| Experimental Data | Number of PCEs | PCE Type | Type Number |
|---|---|---|---|
| | 3 | Panel | |
| | 3 | Panel, Stair, Cassion toilet | |
| | 16 | Beam | |
| | | Column | |
| | | Stair | |
| | | Cassion toilet | |
| | | Slab | |
| | | Panel | T-08 |
| | | | T-07 |
| | | | T-06 |
| | | | T-02 |

| Experimental Data | | |
|---|---|---|
| | 10,111,120 | 3,164,312 |
| | 3,842,303 | 1,195,405 |
| | 11,340,361 | 3,115,780 |
| | 4,370,985 | 1,170,248 |
| | 141,342,932 | 28,571,678 |

| Experimental Data | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Number | 1 | 2 | 4 | 7 | 10 | 11 | 14 | 16 | 1 | 2 | 5 | 9 | 14 | 15 |
| | 534,529 | 4878 | 99,810 | 7029 | 114,124 | 103,919 | 2252 | 173,061 | 203,429 | 1859 | 38,089 | 1770 | 43,700 | 39,738 |
| | - | - | 45,928 85.23 | - | 46,555 86.40 | 46,143 85.63 | - | - | - | - | 41,092 76.26 | - | 42,907 79.63 | 42,600 79.06 |
| | - | - | 46,010 85.47 | - | 46,436 86.26 | 46,087 85.61 | - | - | - | - | 41,166 76.47 | - | 42,157 78.31 | 40,163 74.61 |
| Prediction | - | - | 2 | - | 1 | 1 | - | - | - | - | 2 | - | 1 | 1 |
| Actual type | - | - | 2 | - | 1 | 1 | - | - | - | - | 2 | - | 1 | 1 |
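The decision rule behind the Prediction row is not stated in this text, but in every filled column of the table above the predicted type is the one whose match row shows the higher percentage. Assuming the first match row corresponds to type 1, the second to type 2, and the second figure in each cell is the DOC (%), the rule reduces to an argmax. The sketch below is illustrative only; `predict_type` and `MATCHES` are hypothetical names.

```python
def predict_type(doc_by_type):
    """Pick the candidate type whose BIM model yields the highest DOC (%)."""
    if not doc_by_type:
        raise ValueError("no candidate models")
    return max(doc_by_type, key=doc_by_type.get)


# (element number, DOC of first match row, DOC of second match row), copied
# from the table above; mapping the rows to types 1 and 2 is an assumption.
MATCHES = [
    (4, 85.23, 85.47), (10, 86.40, 86.26), (11, 85.63, 85.61),
    (5, 76.26, 76.47), (14, 79.63, 78.31), (15, 79.06, 74.61),
]

if __name__ == "__main__":
    for number, doc1, doc2 in MATCHES:
        print(number, predict_type({1: doc1, 2: doc2}))
```

Under these assumptions the sketch reproduces the Prediction row (2, 1, 1, 2, 1, 1), even where the DOC values differ by only a few hundredths of a percent, as for element 11.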

| Experimental Data | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Number | 3 | 4 | 5 | 6 | 12 | 18 | 20 | 4 | 7 | 10 | 18 |
| | 24,455 | 301,777 | 119,004 | 132,635 | 87,637 | 3565 | 7182 | 45,946 | 50,595 | 115,313 | 31,444 |
| Matched model | - | | | | - | - | - | | | | - |
| | - | 53,886 | 33,409 | 46,587 | - | - | - | 33,409 | 46,587 | 53,886 | - |
| | - | 47,728 | 32,265 | 29,907 | - | - | - | 31,405 | 24,044 | 44,986 | - |
| | - | 88.57 | 96.58 | 64.20 | - | - | - | 94.00 | 51.61 | 83.48 | - |
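The abbreviations list defines DOC (degree of completeness). The percentages in the table above are consistent with DOC = (second count row) / (first count row) × 100 for each matched model; for example, 47,728 / 53,886 × 100 ≈ 88.57. The sketch below assumes the first row is the number of points of the matched BIM model and the second is the number of those model points matched by the scan data; this interpretation is inferred from the numbers, and `degree_of_completeness` and `REPORTED` are hypothetical names.

```python
def degree_of_completeness(matched_points, model_points):
    """DOC (%) of one match: matched model points over all model points."""
    if model_points <= 0:
        raise ValueError("model_points must be positive")
    return 100.0 * matched_points / model_points


# (model points, matched points, DOC % as reported) for the six matched
# elements in the table above; the meaning of each row is an inference.
REPORTED = [
    (53886, 47728, 88.57), (33409, 32265, 96.58), (46587, 29907, 64.20),
    (33409, 31405, 94.00), (46587, 24044, 51.61), (53886, 44986, 83.48),
]

if __name__ == "__main__":
    for model, matched, doc in REPORTED:
        print(f"{degree_of_completeness(matched, model):.2f} vs reported {doc:.2f}")
```

Recomputing all six reported values this way agrees to two decimal places, which supports, but does not prove, the assumed row meanings.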

| Experimental Data | | | | | | | |
|---|---|---|---|---|---|---|---|
| Number | 4 | 5 | 7 | 8 | 10 | 14 | 16 |
| | 1,500,955 | 1,542,872 | 1,665,727 | 1,692,552 | 1,729,999 | 1,974,485 | 2,113,982 |
| | - | - | - | - | 51,597 81.58 | 53,824 85.10 | 51,663 81.68 |
| | - | - | - | - | 50,175 80.32 | 49,673 79.52 | 52,283 83.70 |
| | - | - | - | - | 50,101 83.40 | 47,662 79.34 | 48,314 80.43 |
| | 45,007 74.93 | 45,480 75.72 | 47,273 78.70 | 45,721 76.12 | - | - | - |
| | 46,790 74.48 | 47,379 75.42 | 46,002 73.23 | 48,744 77.59 | - | - | - |
| | 46,458 74.31 | 50,897 81.41 | 47,167 75.44 | 47,502 75.98 | - | - | - |
| | 46,708 80.23 | 45,547 78.24 | 44,976 77.26 | 44,411 76.29 | - | - | - |
| Prediction | | | | | | | |
| Actual type | | | | | | | |

| Experimental Data | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Number | 1 | 2 | 3 | 6 | 9 | 11 | 12 | 13 | 15 | 17 | 18 |
| | 7804 | 8868 | 952,500 | 1,575,529 | 1,707,636 | 1,788,907 | 1,793,559 | 1,817,340 | 2,075,280 | 2,245,653 | 2,312,013 |
| Matched model | - | - | | | | | | | | | |
| | - | - | 28,612 | 53,226 | 33,409 | 30,038 | 19,963 | 46,275 | 14,339 | 61,033 | 53,287 |
| | - | - | 28,607 | 46,365 | 28,317 | 22,743 | 18,773 | 43,703 | 11,587 | 51,711 | 43,854 |
| | - | - | 99.98 | 87.11 | 84.76 | 75.71 | 94.04 | 94.44 | 80.81 | 84.73 | 82.30 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, J.; Li, D.; Feng, L.; Liu, P.; Wu, W. Towards Automatic Segmentation and Recognition of Multiple Precast Concrete Elements in Outdoor Laser Scan Data. Remote Sens. 2019, 11, 1383. https://doi.org/10.3390/rs11111383
