Unmanned Aerial Vehicle for Remote Sensing Applications—A Review

Abstract

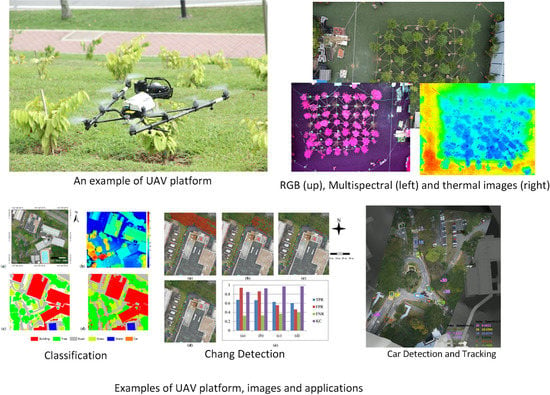

:1. Introduction

2. Overview of UAV Sensors

2.1. RGB Cameras

2.2. Light-Weight Multispectral Cameras

2.3. Light-Weight Hyperspectral Sensors

2.4. Light-Weight Thermal Infrared Sensors

2.5. UAV LiDAR

3. UAVs Remote Sensing Data Analysis

3.1. Land-Use/Land-Cover (LULC) Mapping

3.2. Change Detection

4. UAVs Remote Sensing Applications

4.1. Precision Agriculture and Vegetation

4.2. Urban Environment and Management

4.3. Disaster, Hazard, and Rescue

5. Conclusions and Future Trends

Author Contributions

Funding

Acknowledgments

Disclaimer

Conflicts of Interest

References

- Pajares, G. Overview and current status of remote sensing applications based on unmanned aerial vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–329. [Google Scholar] [CrossRef]

- Nex, F.; Remondino, F. UAV for 3D mapping applications: A review. Appl. Geomat. 2014, 6, 1–15. [Google Scholar] [CrossRef]

- Bhardwaj, A.; Sam, L.; Martín-Torres, F.J.; Kumar, R. UAVs as remote sensing platform in glaciology: Present applications and future prospects. Remote Sens. Environ. 2016, 175, 196–204. [Google Scholar] [CrossRef]

- Torresan, C.; Berton, A.; Carotenuto, F.; Di Gennaro, S.F.; Gioli, B.; Matese, A.; Miglietta, F.; Vagnoli, C.; Zaldei, A.; Wallace, L. Forestry applications of UAVs in Europe: A review. Int. J. Remote Sens. 2017, 38, 2427–2447. [Google Scholar] [CrossRef]

- Crommelinck, S.; Bennett, R.; Gerke, M.; Nex, F.; Yang, M.Y.; Vosselman, G. Review of automatic feature extraction from high-resolution optical sensor data for UAV-based cadastral mapping. Remote Sens. 2016, 8, 689. [Google Scholar] [CrossRef]

- Yan, W.Y.; Shaker, A.; El-Ashmawy, N. Urban land cover classification using airborne LiDAR data: A review. Remote Sens. Environ. 2015, 158, 295–310. [Google Scholar] [CrossRef]

- Zhang, Q.; Qin, R.; Huang, X.; Fang, Y.; Liu, L. Classification of ultra-high resolution orthophotos combined with DSM using a dual morphological top hat profile. Remote Sens. 2015, 7, 16422–16440. [Google Scholar] [CrossRef]

- Moranduzzo, T.; Melgani, F. Detecting cars in UAV images with a catalog-based approach. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6356–6367. [Google Scholar] [CrossRef]

- Rodríguez-Canosa, G.R.; Thomas, S.; Del Cerro, J.; Barrientos, A.; MacDonald, B. A real-time method to detect and track moving objects (DATMO) from unmanned aerial vehicles (UAVs) using a single camera. Remote Sens. 2012, 4, 1090–1111. [Google Scholar] [CrossRef]

- Colomina, I.; Molina, P. Unmanned aerial systems for photogrammetry and remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2014, 92, 79–97. [Google Scholar] [CrossRef] [Green Version]

- Korchenko, A.G.; Illyash, O.S. The generalized classification of Unmanned Air Vehicles. In Proceedings of the 2013 IEEE 2nd International Conference Actual Problems of Unmanned Air Vehicles Developments Proceedings (APUAVD), Kiev, Ukraine, 15–17 October 2013; pp. 28–34. [Google Scholar]

- Dalamagkidis, K. Classification of UAVs. In Handbook of Unmanned Aerial Vehicles; Valavanis, K.P., Vachtsevanos, G.J., Eds.; Springer: Dordrecht, The Netherlands, 2015; pp. 83–91. [Google Scholar] [CrossRef]

- Department of Defense of USA; Office of the Secretary of Defense. Army Roadmap for Unmanned Aircraft Systems, 2010–2035; U.S. Army UAS Center of Excellence and Fort Rucker: Dale County, AL, USA, 2010. [Google Scholar]

- Zhang, L.; Huang, X.; Huang, B.; Li, P. A pixel shape index coupled with spectral information for classification of high spatial resolution remotely sensed imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2950–2961. [Google Scholar] [CrossRef]

- Woodcock, C.E.; Strahler, A.H. The factor of scale in remote sensing. Remote Sens. Environ. 1987, 21, 311–332. [Google Scholar] [CrossRef]

- Westoby, M.; Brasington, J.; Glasser, N.; Hambrey, M.; Reynolds, J. ‘Structure-from-Motion’photogrammetry: A low-cost, effective tool for geoscience applications. Geomorphology 2012, 179, 300–314. [Google Scholar] [CrossRef]

- Turner, D.; Lucieer, A.; Wallace, L. Direct georeferencing of ultrahigh-resolution UAV imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2738–2745. [Google Scholar] [CrossRef]

- Yang, B.; Chen, C. Automatic registration of UAV-borne sequent images and LiDAR data. ISPRS J. Photogramm. Remote Sens. 2015, 101, 262–274. [Google Scholar] [CrossRef]

- Segl, K.; Roessner, S.; Heiden, U.; Kaufmann, H. Fusion of spectral and shape features for identification of urban surface cover types using reflective and thermal hyperspectral data. ISPRS J. Photogramm. Remote Sens. 2003, 58, 99–112. [Google Scholar] [CrossRef]

- Eisenbeiß, H. UAV Photogrammetry; ETH Zurich: Zürich, Switzerland, 2009. [Google Scholar]

- Du, S.; Zhang, Y.; Qin, R.; Yang, Z.; Zou, Z.; Tang, Y.; Fan, C. Building change detection using old aerial images and new LiDAR data. Remote Sens. 2016, 8, 1030. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87. [Google Scholar] [CrossRef] [Green Version]

- Adam, E.; Mutanga, O.; Rugege, D. Multispectral and hyperspectral remote sensing for identification and mapping of wetland vegetation: A review. Wetl. Ecol. Manag. 2010, 18, 281–296. [Google Scholar] [CrossRef]

- Calderón, R.; Montes-Borrego, M.; Landa, B.B.; Navas-Cortés, J.A.; Zarco-Tejada, P.J. Detection of downy mildew of opium poppy using high-resolution multi-spectral and thermal imagery acquired with an unmanned aerial vehicle. Precis. Agric. 2014, 15, 639–661. [Google Scholar] [CrossRef]

- Kislik, C.; Dronova, I.; Kelly, M. UAVs in Support of Algal Bloom Research: A Review of Current Applications and Future Opportunities. Drones 2018, 2, 35. [Google Scholar] [CrossRef]

- Suomalainen, J.; Anders, N.; Iqbal, S.; Roerink, G.; Franke, J.; Wenting, P.; Hünniger, D.; Bartholomeus, H.; Becker, R.; Kooistra, L. A lightweight hyperspectral mapping system and photogrammetric processing chain for unmanned aerial vehicles. Remote Sens. 2014, 6, 11013–11030. [Google Scholar] [CrossRef]

- Burkart, A.; Cogliati, S.; Schickling, A.; Rascher, U. A novel UAV-based ultra-light weight spectrometer for field spectroscopy. IEEE Sens. J. 2014, 14, 62–67. [Google Scholar] [CrossRef]

- Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352. [Google Scholar] [CrossRef]

- Psomas, A.; Kneubühler, M.; Huber, S.; Itten, K.; Zimmermann, N. Hyperspectral remote sensing for estimating aboveground biomass and for exploring species richness patterns of grassland habitats. Int. J. Remote Sens. 2011, 32, 9007–9031. [Google Scholar] [CrossRef]

- Van der Meer, F.D.; Van der Werff, H.M.; Van Ruitenbeek, F.J.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; Van Der Meijde, M.; Carranza, E.J.M.; De Smeth, J.B.; Woldai, T. Multi-and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128. [Google Scholar] [CrossRef]

- Benediktsson, J.A.; Palmason, J.A.; Sveinsson, J.R. Classification of hyperspectral data from urban areas based on extended morphological profiles. IEEE Trans. Geosci. Remote Sens. 2005, 43, 480–491. [Google Scholar] [CrossRef]

- Rufino, G.; Moccia, A. Integrated VIS-NIR hyperspectral/thermal-IR electro-optical payload system for a mini-UAV. In Infotech@ Aerospace; AIAA: Reston, VA, USA, 2005; p. 7009. [Google Scholar]

- Lucieer, A.; Malenovský, Z.; Veness, T.; Wallace, L. HyperUAS—Imaging spectroscopy from a multirotor unmanned aircraft system. J. Field Robot. 2014, 31, 571–590. [Google Scholar] [CrossRef]

- Aasen, H.; Burkart, A.; Bolten, A.; Bareth, G. Generating 3D hyperspectral information with lightweight UAV snapshot cameras for vegetation monitoring: From camera calibration to quality assurance. ISPRS J. Photogramm. Remote Sens. 2015, 108, 245–259. [Google Scholar] [CrossRef]

- Robles-Kelly, A.; Huynh, C.P. Imaging Spectroscopy for Scene Analysis; Springer Science & Business Media: Berlin, Germany, 2012. [Google Scholar]

- Prakash, A. Thermal remote sensing: Concepts, issues and applications. Int. Arch. Photogramm. Remote Sens. 2000, 33, 239–243. [Google Scholar]

- Sheng, H.; Chao, H.; Coopmans, C.; Han, J.; McKee, M.; Chen, Y. Low-cost UAV-based thermal infrared remote sensing: Platform, calibration and applications. In Proceedings of the 2010 IEEE/ASME International Conference on Mechatronics and Embedded Systems and Applications (MESA), Qingdao, China, 15–17 July 2010; pp. 38–43. [Google Scholar]

- Rudol, P.; Doherty, P. Human body detection and geolocalization for UAV search and rescue missions using color and thermal imagery. In Proceedings of the IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008; pp. 1–8. [Google Scholar]

- Ambrosia, V.G.; Wegener, S.S.; Sullivan, D.V.; Buechel, S.W.; Dunagan, S.E.; Brass, J.A.; Stoneburner, J.; Schoenung, S.M. Demonstrating UAV-acquired real-time thermal data over fires. Photogramm. Eng. Remote Sens. 2003, 69, 391–402. [Google Scholar] [CrossRef]

- Ibarguren, A.; Molina, J.; Susperregi, L.; Maurtua, I. Thermal tracking in mobile robots for leak inspection activities. Sensors 2013, 13, 13560–13574. [Google Scholar] [CrossRef] [PubMed]

- Berni, J.A.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738. [Google Scholar] [CrossRef]

- Laliberte, A.S.; Winters, C.; Rango, A. UAS remote sensing missions for rangeland applications. Geocarto Int. 2011, 26, 141–156. [Google Scholar] [CrossRef]

- Jensen, A.M.; Neilson, B.T.; McKee, M.; Chen, Y. Thermal remote sensing with an autonomous unmanned aerial remote sensing platform for surface stream temperatures. In Proceedings of the 2012 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Munich, Germany, 22–27 July 2012; pp. 5049–5052. [Google Scholar]

- Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337. [Google Scholar] [CrossRef]

- Hoffmann, H.; Nieto, H.; Jensen, R.; Guzinski, R.; Zarco-Tejada, P.; Friborg, T. Estimating evaporation with thermal UAV data and two-source energy balance models. Hydrol. Earth Syst. Sci. 2016, 20, 697–713. [Google Scholar] [CrossRef] [Green Version]

- Bendig, J.; Bolten, A.; Bareth, G. Introducing a low-cost mini-UAV for thermal-and multispectral-imaging. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci 2012, 39, 345–349. [Google Scholar] [CrossRef]

- Dalponte, M.; Coops, N.C.; Bruzzone, L.; Gianelle, D. Analysis on the use of multiple returns LiDAR data for the estimation of tree stems volume. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2009, 2, 310–318. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Watson, C.; Turner, D. Development of a UAV-LiDAR system with application to forest inventory. Remote Sens. 2012, 4, 1519–1543. [Google Scholar] [CrossRef]

- Rieke, M.; Foerster, T.; Geipel, J.; Prinz, T. High-Precision Positioning and Real-Time Data Processing of UAV Systems. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2011, 38, 119–124. [Google Scholar] [CrossRef]

- Campos-Taberner, M.; Romero-Soriano, A.; Gatta, C.; Camps-Valls, G.; Lagrange, A.; Saux, B.L.; Beaupère, A.; Boulch, A.; Chan-Hon-Tong, A.; Herbin, S.; et al. Processing of Extremely High-Resolution LiDAR and RGB Data: Outcome of the 2015 IEEE GRSS Data Fusion Contest–Part A: 2-D Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 5547–5559. [Google Scholar] [CrossRef]

- Vo, A.; Truong-Hong, L.; Laefer, D.F.; Tiede, D.; d’Oleire-Oltmanns, S.; Baraldi, A.; Shimoni, M.; Moser, G.; Tuia, D. Processing of Extremely High Resolution LiDAR and RGB Data: Outcome of the 2015 IEEE GRSS Data Fusion Contest—Part B: 3-D Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 5560–5575. [Google Scholar] [CrossRef]

- Belward, A.S.; Skøien, J.O. Who launched what, when and why; trends in global land-cover observation capacity from civilian earth observation satellites. ISPRS J. Photogramm. Remote Sens. 2015, 103, 115–128. [Google Scholar] [CrossRef]

- Hussain, M.; Chen, D.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106. [Google Scholar] [CrossRef]

- Ma, L.; Li, M.; Ma, X.; Cheng, L.; Du, P.; Liu, Y. A review of supervised object-based land-cover image classification. ISPRS J. Photogramm. Remote Sens. 2017, 130, 277–293. [Google Scholar] [CrossRef]

- Cheng, G.; Han, J.; Guo, L.; Liu, Z.; Bu, S.; Ren, J. Effective and efficient midlevel visual elements-oriented land-use classification using VHR remote sensing images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 4238–4249. [Google Scholar] [CrossRef]

- Carleer, A.; Wolff, E. Urban land cover multi-level region-based classification of VHR data by selecting relevant features. Int. J. Remote Sens. 2006, 27, 1035–1051. [Google Scholar] [CrossRef]

- Fry, J.; Coan, M.; Homer, C.; Meyer, D.; Wickham, J. Completion of the National Land Cover Database (NLCD) 1992–2001 Land cover Change Retrofit Product; US Geological Survey: Reston, VA, USA, 2009; pp. 1258–2331. [Google Scholar]

- Gong, P.; Wang, J.; Yu, L.; Zhao, Y.; Zhao, Y.; Liang, L.; Niu, Z.; Huang, X.; Fu, H.; Liu, S. Finer resolution observation and monitoring of global land cover: First mapping results with Landsat TM and ETM+ data. Int. J. Remote Sens. 2013, 34, 2607–2654. [Google Scholar] [CrossRef]

- Salazar, A.; Baldi, G.; Hirota, M.; Syktus, J.; McAlpine, C. Land use and land cover change impacts on the regional climate of non-Amazonian South America: A review. Glob. Planet. Chang. 2015, 128, 103–119. [Google Scholar] [CrossRef]

- Dash, J.P.; Watt, M.S.; Pearse, G.D.; Heaphy, M.; Dungey, H.S. Assessing very high resolution UAV imagery for monitoring forest health during a simulated disease outbreak. ISPRS J. Photogramm. Remote Sens. 2017, 131, 1–14. [Google Scholar] [CrossRef]

- Tamouridou, A.; Alexandridis, T.; Pantazi, X.; Lagopodi, A.; Kashefi, J.; Moshou, D. Evaluation of UAV imagery for mapping Silybum marianum weed patches. Int. J. Remote Sens. 2017, 38, 2246–2259. [Google Scholar] [CrossRef]

- Qin, R. An object-based hierarchical method for change detection using unmanned aerial vehicle images. Remote Sens. 2014, 6, 7911–7932. [Google Scholar] [CrossRef]

- Torres-Sánchez, J.; López-Granados, F.; Peña, J.M. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52. [Google Scholar] [CrossRef]

- Timm, B.C.; McGarigal, K. Fine-scale remotely-sensed cover mapping of coastal dune and salt marsh ecosystems at Cape Cod National Seashore using Random Forests. Remote Sens. Environ. 2012, 127, 106–117. [Google Scholar] [CrossRef]

- Hayes, M.M.; Miller, S.N.; Murphy, M.A. High-resolution landcover classification using Random Forest. Remote Sens. Lett. 2014, 5, 112–121. [Google Scholar] [CrossRef]

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16. [Google Scholar] [CrossRef] [Green Version]

- Baatz, M.; Benz, U.; Dehghani, S.; Heynen, M.; Höltje, A.; Hofmann, P.; Lingenfelder, I.; Mimler, M.; Sohlbach, M.; Weber, M. ECognition Professional User Manual 4; Definiens Imaging: München, Germany, 2004. [Google Scholar]

- Gaetano, R.; Scarpa, G.; Poggi, G. Hierarchical texture-based segmentation of multiresolution remote-sensing images. IEEE Trans. Geosci. Remote Sens. 2009, 47, 2129–2141. [Google Scholar] [CrossRef]

- Trias-Sanz, R.; Stamon, G.; Louchet, J. Using colour, texture, and hierarchial segmentation for high-resolution remote sensing. ISPRS J. Photogramm. Remote Sens. 2008, 63, 156–168. [Google Scholar] [CrossRef]

- Shackelford, A.K.; Davis, C.H. A hierarchical fuzzy classification approach for high-resolution multispectral data over urban areas. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1920–1932. [Google Scholar] [CrossRef] [Green Version]

- Zhang, P.; Lv, Z.; Shi, W. Object-based spatial feature for classification of very high resolution remote sensing images. IEEE Geosci. Remote Sens. Lett. 2013, 10, 1572–1576. [Google Scholar] [CrossRef]

- Ming, D.; Li, J.; Wang, J.; Zhang, M. Scale parameter selection by spatial statistics for GeOBIA: Using mean-shift based multi-scale segmentation as an example. ISPRS J. Photogramm. Remote Sens. 2015, 106, 28–41. [Google Scholar] [CrossRef]

- Drǎguţ, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871. [Google Scholar] [CrossRef]

- Kim, M.; Madden, M.; Warner, T. Estimation of optimal image object size for the segmentation of forest stands with multispectral IKONOS imagery. In Object-Based Image Analysis; Springer: Berlin/Heidelberg, Germany, 2008; pp. 291–307. [Google Scholar]

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F. Geographic object-based image analysis–towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191. [Google Scholar] [CrossRef]

- Agüera, F.; Aguilar, F.J.; Aguilar, M.A. Using texture analysis to improve per-pixel classification of very high resolution images for mapping plastic greenhouses. ISPRS J. Photogramm. Remote Sens. 2008, 63, 635–646. [Google Scholar] [CrossRef]

- Kim, M.; Madden, M.; Warner, T.A. Forest type mapping using object-specific texture measures from multispectral Ikonos imagery. Photogramm. Eng. Remote Sens. 2009, 75, 819–829. [Google Scholar] [CrossRef]

- Feng, Q.; Liu, J.; Gong, J. UAV remote sensing for urban vegetation mapping using random forest and texture analysis. Remote Sens. 2015, 7, 1074–1094. [Google Scholar] [CrossRef]

- Moranduzzo, T.; Mekhalfi, M.L.; Melgani, F. LBP-based multiclass classification method for UAV imagery. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 2362–2365. [Google Scholar]

- Smits, P.C.; Annoni, A. Updating land-cover maps by using texture information from very high-resolution space-borne imagery. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1244–1254. [Google Scholar] [CrossRef]

- Kurtz, C.; Passat, N.; Gancarski, P.; Puissant, A. Extraction of complex patterns from multiresolution remote sensing images: A hierarchical top-down methodology. Pattern Recognit. 2012, 45, 685–706. [Google Scholar] [CrossRef] [Green Version]

- Laliberte, A.S.; Rango, A. Texture and scale in object-based analysis of subdecimeter resolution unmanned aerial vehicle (UAV) imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 761–770. [Google Scholar] [CrossRef]

- Gevaert, C.; Persello, C.; Sliuzas, R.; Vosselman, G. Informal settlement classification using point-cloud and image-based features from UAV data. ISPRS J. Photogramm. Remote Sens. 2017, 125, 225–236. [Google Scholar] [CrossRef]

- Immitzer, M.; Stepper, C.; Böck, S.; Straub, C.; Atzberger, C. Use of WorldView-2 stereo imagery and National Forest Inventory data for wall-to-wall mapping of growing stock. For. Ecol. Manag. 2016, 359, 232–246. [Google Scholar] [CrossRef]

- Salehi, B.; Zhang, Y.; Zhong, M. Object-based land cover classification of urban areas using VHR imagery and photogrammetrically-derived DSM. In Proceedings of the ASPRS 2011 Annual Conference, Milwaukee, WI, USA, 1–5 May 2011. [Google Scholar]

- Sohn, G.; Dowman, I. Data fusion of high-resolution satellite imagery and LiDAR data for automatic building extraction. ISPRS J. Photogramm. Remote Sens. 2007, 62, 43–63. [Google Scholar] [CrossRef]

- Khan, S.; Aragão, L.; Iriarte, J. A UAV–lidar system to map Amazonian rainforest and its ancient landscape transformations. Int. J. Remote Sens. 2017, 38, 2313–2330. [Google Scholar] [CrossRef]

- Gao, Y.; Zhang, W. LULC classification and topographic correction of Landsat-7 ETM+ imagery in the Yangjia River Watershed: The influence of DEM resolution. Sensors 2009, 9, 1980–1995. [Google Scholar] [CrossRef] [PubMed]

- Watanachaturaporn, P.; Arora, M.K.; Varshney, P.K. Multisource Classification Using Support Vector Machines. Photogramm. Eng. Remote Sens. 2008, 74, 239–246. [Google Scholar] [CrossRef] [Green Version]

- Guo, L.; Chehata, N.; Mallet, C.; Boukir, S. Relevance of airborne lidar and multispectral image data for urban scene classification using Random Forests. ISPRS J. Photogramm. Remote Sens. 2011, 66, 56–66. [Google Scholar] [CrossRef]

- Pix4D, SA. Pix4Dmapper: Professional Drone Mapping and Photogrammetry Software | Pix4D. Available online: https://www.pix4d.com/ (accessed on 30 December 2018).

- Agisoft LLC. Agisoft Metashape. Available online: https://www.agisoft.com/ (accessed on 30 December 2018).

- Fonstad, M.A.; Dietrich, J.T.; Courville, B.C.; Jensen, J.L.; Carbonneau, P.E. Topographic structure from motion: A new development in photogrammetric measurement. Earth Surf. Process. Landf. 2013, 38, 421–430. [Google Scholar] [CrossRef]

- Huang, X.; Zhang, L.; Gong, W. Information fusion of aerial images and LIDAR data in urban areas: Vector-stacking, re-classification and post-processing approaches. Int. J. Remote Sens. 2011, 32, 69–84. [Google Scholar] [CrossRef]

- Qin, R. A mean shift vector-based shape feature for classification of high spatial resolution remotely sensed imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 1974–1985. [Google Scholar] [CrossRef]

- Vincent, L. Morphological grayscale reconstruction in image analysis: Applications and efficient algorithms. IEEE Trans. Image Process. 1993, 2, 176–201. [Google Scholar] [CrossRef]

- Zhang, X.; Xiao, P.; Feng, X. Toward combining thematic information with hierarchical multiscale segmentations using tree Markov random field model. ISPRS J. Photogramm. Remote Sens. 2017, 131, 134–146. [Google Scholar] [CrossRef]

- Crommelinck, S.; Bennett, R.; Gerke, M.; Koeva, M.; Yang, M.; Vosselman, G. SLIC superpixels for object delineation from UAV data. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 9. [Google Scholar] [CrossRef]

- Corcoran, P.; Winstanley, A. Using texture to tackle the problem of scale in land-cover classification. In Object-Based Image Analysis; Springer: Berlin/Heidelberg, Germany, 2008; pp. 113–132. [Google Scholar] [Green Version]

- Pal, M.; Mather, P. Support vector machines for classification in remote sensing. Int. J. Remote Sens. 2005, 26, 1007–1011. [Google Scholar] [CrossRef]

- Pal, M. Random forest classifier for remote sensing classification. Int. J. Remote Sens. 2005, 26, 217–222. [Google Scholar] [CrossRef]

- Paola, J.D.; Schowengerdt, R.A. A detailed comparison of backpropagation neural network and maximum-likelihood classifiers for urban land use classification. IEEE Trans. Geosci. Remote Sens. 1995, 33, 981–996. [Google Scholar] [CrossRef]

- Huang, X.; Zhang, L. An SVM Ensemble Approach Combining Spectral, Structural, and Semantic Features for the Classification of High-Resolution Remotely Sensed Imagery. IEEE Trans. Geosci. Remote Sens. 2013, 51, 257–272. [Google Scholar] [CrossRef]

- Merentitis, A.; Debes, C.; Heremans, R. Ensemble Learning in Hyperspectral Image Classification: Toward Selecting a Favorable Bias-Variance Tradeoff. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 1089–1102. [Google Scholar] [CrossRef]

- Bergado, J.R.; Persello, C.; Stein, A. Recurrent Multiresolution Convolutional Networks for VHR Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 6361–6374. [Google Scholar] [CrossRef] [Green Version]

- Mboga, N.; Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Wolff, E. Fully Convolutional Networks and Geographic Object-Based Image Analysis for the Classification of VHR Imagery. Remote Sens. 2019, 11, 597. [Google Scholar] [CrossRef]

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015. [Google Scholar]

- Yu, F.; Koltun, V. Multi-Scale Context Aggregation by Dilated Convolutions. arXiv 2015, arXiv:1511.07122. [Google Scholar]

- Liu, Y.; Fan, B.; Wang, L.; Bai, J.; Xiang, S.; Pan, C. Semantic labeling in very high resolution images via a self-cascaded convolutional neural network. ISPRS J. Photogramm. Remote Sens. 2018, 145, 78–95. [Google Scholar] [CrossRef] [Green Version]

- Marmanis, D.; Schindler, K.; Wegner, J.D.; Galliani, S.; Datcu, M.; Stilla, U. Classification with an edge: Improving semantic image segmentation with boundary detection. ISPRS J. Photogramm. Remote Sens. 2018, 135, 158–172. [Google Scholar] [CrossRef] [Green Version]

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239. [Google Scholar]

- Audebert, N.; Le Saux, B.; Lefèvre, S. Beyond RGB: Very high resolution urban remote sensing with multimodal deep networks. ISPRS J. Photogramm. Remote Sens. 2018, 140, 20–32. [Google Scholar] [CrossRef] [Green Version]

- Liu, Y.; Piramanayagam, S.; Monteiro, S.T.; Saber, E. Dense Semantic Labeling of Very-High-Resolution Aerial Imagery and LiDAR with Fully-Convolutional Neural Networks and Higher-Order CRFs. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1561–1570. [Google Scholar]

- Hu, F.; Xia, G.-S.; Hu, J.; Zhang, L. Transferring deep convolutional neural networks for the scene classification of high-resolution remote sensing imagery. Remote Sens. 2015, 7, 14680–14707. [Google Scholar] [CrossRef]

- Cheng, G.; Han, J.; Lu, X. Remote sensing image scene classification: Benchmark and state of the art. Proc. IEEE 2017, 105, 1865–1883. [Google Scholar] [CrossRef]

- Penatti, O.A.B.; Nogueira, K.; Santos, J.A.d. Do deep features generalize from everyday objects to remote sensing and aerial scenes domains? In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Boston, MA, USA, 7–12 June 2015; pp. 44–51. [Google Scholar]

- Wu, Z.; Han, X.; Lin, Y.-L.; Uzunbas, M.G.; Goldstein, T.; Lim, S.N.; Davis, L.S. DCAN: Dual Channel-Wise Alignment Networks for Unsupervised Scene Adaptation. In Proceedings of the Computer Vision—ECCV 2018, Munich, Germany, 8–14 September 2018; pp. 535–552. [Google Scholar]

- LTA. Maintaining Our Roads and Facilities. Available online: http://www.lta.gov.sg/content/ltaweb/en/roads-and-motoring/road-safety-and-regulations/maintaining-our-roads-and-facilities.html (accessed on 29 May 2019).

- Qin, R.; Gruen, A. 3D change detection at street level using mobile laser scanning point clouds and terrestrial images. ISPRS J. Photogramm. Remote Sens. 2014, 90, 23–35. [Google Scholar] [CrossRef]

- Saur, G.; Krüger, W. Change Detection in Uav Video Mosaics Combining a Feature Based Approach and Extended Image Differencing. In Proceedings of the 2016 International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Prague, Czech Republic, 12–19 July 2016; pp. 557–562. [Google Scholar]

- Ma, Y.; Wu, X.; Yu, G.; Xu, Y.; Wang, Y. Pedestrian detection and tracking from low-resolution unmanned aerial vehicle thermal imagery. Sensors 2016, 16, 446. [Google Scholar] [CrossRef]

- Gaszczak, A.; Breckon, T.P.; Han, J. Real-time people and vehicle detection from UAV imagery. In Proceedings of the IS&T/SPIE Electronic Imaging. International Society for Optics and Photonics, San Fransico, CA, USA, 23 January 2011; p. 78780B. [Google Scholar]

- Butenuth, M.; Burkert, F.; Schmidt, F.; Hinz, S.; Hartmann, D.; Kneidl, A.; Borrmann, A.; Sirmacek, B. Integrating pedestrian simulation, tracking and event detection for crowd analysis. In Proceedings of the 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops), Barcelona, Spain, 6–13 November 2011; pp. 150–157. [Google Scholar]

- De Smedt, F.; Hulens, D.; Goedemé, T. On-board real-time tracking of pedestrians on a UAV. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Boston, MA, USA, 7–12 July 2015; pp. 1–8. [Google Scholar]

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle adjustment—A modern synthesis. In International Workshop on Vision Algorithms; Springer: Berlin/Heidelberg, Germany, 2000; pp. 298–372. [Google Scholar]

- Walter, V. Object-based classification of remote sensing data for change detection. ISPRS J. Photogramm. Remote Sens. 2004, 58, 225–238. [Google Scholar] [CrossRef]

- Qin, R.; Tian, J.; Reinartz, P. 3D change detection–approaches and applications. ISPRS J. Photogramm. Remote Sens. 2016, 122, 41–56. [Google Scholar] [CrossRef]

- Lefebvre, A.; Corpetti, T.; Hubert-Moy, L. Object-oriented approach and texture analysis for change detection in very high resolution images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Boston, MA, USA, 7–11 July 2008; pp. IV-663–IV-666. [Google Scholar]

- Gamanya, R.; De Maeyer, P.; De Dapper, M. Object-oriented change detection for the city of Harare, Zimbabwe. Expert Syst. Appl. 2009, 36, 571–588. [Google Scholar] [CrossRef]

- Xian, G.; Homer, C. Updating the 2001 National Land Cover Database impervious surface products to 2006 using Landsat imagery change detection methods. Remote Sens. Environ. 2010, 114, 1676–1686. [Google Scholar] [CrossRef]

- Bontemps, S.; Bogaert, P.; Titeux, N.; Defourny, P. An object-based change detection method accounting for temporal dependences in time series with medium to coarse spatial resolution. Remote Sens. Environ. 2008, 112, 3181–3191. [Google Scholar] [CrossRef]

- Wu, C.; Du, B.; Cui, X.; Zhang, L. A post-classification change detection method based on iterative slow feature analysis and Bayesian soft fusion. Remote Sens. Environ. 2017, 199, 241–255. [Google Scholar] [CrossRef]

- Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14. [Google Scholar] [CrossRef] [Green Version]

- Frost, S. Study Analysing the Current Activities in the Field of UAV. ENTR/2007/065. 2007. Available online: https://ec.europa.eu/home-affairs/sites/homeaffairs/files/e-library/documents/policies/security/pdf/uav_study_element_2_en.pdf (accessed on 17 June 2019).

- Ivushkin, K.; Bartholomeus, H.; Bregt, A.K.; Pulatov, A.; Franceschini, M.H.D.; Kramer, H.; van Loo, E.N.; Jaramillo Roman, V.; Finkers, R. UAV based soil salinity assessment of cropland. Geoderma 2019, 338, 502–512. [Google Scholar] [CrossRef]

- Anderson, C. Agricultural Drones: Relatively cheap drones with advanced sensors and imaging capabilities are giving farmers new ways to increase yields and reduce crop damage. MIT Technol. Rev 2014, 17, 3–58. [Google Scholar]

- Sugiura, R.; Noguchi, N.; Ishii, K. Remote-sensing technology for vegetation monitoring using an unmanned helicopter. Biosyst. Eng. 2005, 90, 369–379. [Google Scholar] [CrossRef]

- Wallace, L.; Lucieer, A.; Malenovský, Z.; Turner, D.; Vopěnka, P. Assessment of forest structure using two UAV techniques: A comparison of airborne laser scanning and structure from motion (SfM) point clouds. Forests 2016, 7, 62. [Google Scholar] [CrossRef]

- Zhu, J.S.; Sun, K.; Jia, S.; Li, Q.Q.; Hou, X.X.; Lin, W.D.; Liu, B.Z.; Qiu, G.P. Urban Traffic Density Estimation Based on Ultrahigh-Resolution UAV Video and Deep Neural Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4968–4981. [Google Scholar] [CrossRef]

- Congress, S.S.C.; Puppala, A.J.; Lundberg, C.L. Total system error analysis of UAV-CRP technology for monitoring transportation infrastructure assets. Eng. Geol. 2018, 247, 104–116. [Google Scholar] [CrossRef]

- Malihi, S.; Zoej, M.J.V.; Hahn, M. Large-Scale Accurate Reconstruction of Buildings Employing Point Clouds Generated from UAV Imagery. Remote Sens. 2018, 10, 1148. [Google Scholar] [CrossRef]

- Tokarczyk, P.; Leitao, J.P.; Rieckermann, J.; Schindler, K.; Blumensaat, F. High-quality observation of surface imperviousness for urban runoff modelling using UAV imagery. Hydrol. Earth Syst. Sci. 2015, 19, 4215. [Google Scholar] [CrossRef]

- Bendea, H.; Boccardo, P.; Dequal, S.; Giulio Tonolo, F.; Marenchino, D.; Piras, M. Low cost UAV for post-disaster assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1373–1379. [Google Scholar]

- Fernandez Galarreta, J.; Kerle, N.; Gerke, M. UAV-based urban structural damage assessment using object-based image analysis and semantic reasoning. Nat. Hazards Earth Syst. Sci. 2015, 15, 1087–1101. [Google Scholar] [CrossRef] [Green Version]

- Boccardo, P.; Chiabrando, F.; Dutto, F.; Tonolo, F.G.; Lingua, A. UAV deployment exercise for mapping purposes: Evaluation of emergency response applications. Sensors 2015, 15, 15717–15737. [Google Scholar] [CrossRef] [PubMed]

- Schultjan, M. Towards the Deployment of UAVs for Fire Surveillance; Hamburg University of Technology: Hamburg, Germany, 2012. [Google Scholar]

- Lucieer, A.; Jong, S.M.d.; Turner, D. Mapping landslide displacements using Structure from Motion (SfM) and image correlation of multi-temporal UAV photography. Prog. Phys. Geogr. Earth Environ. 2014, 38, 97–116. [Google Scholar] [CrossRef]

- Turner, D.; Lucieer, A.; De Jong, S.M. Time Series Analysis of Landslide Dynamics Using an Unmanned Aerial Vehicle (UAV). Remote Sens. 2015, 7, 1736–1757. [Google Scholar] [CrossRef] [Green Version]

- Barlow, J.; Gilham, J.; Ibarra Cofrã, I. Kinematic analysis of sea cliff stability using UAV photogrammetry. Int. J. Remote Sens. 2017, 38, 2464–2479. [Google Scholar] [CrossRef]

- Sturdivant, E.J.; Lentz, E.E.; Thieler, E.R.; Farris, A.S.; Weber, K.M.; Remsen, D.P.; Miner, S.; Henderson, R.E. UAS-SfM for Coastal Research: Geomorphic Feature Extraction and Land Cover Classification from High-Resolution Elevation and Optical Imagery. Remote Sens. 2017, 9, 1020. [Google Scholar] [CrossRef]

- McBratney, A.; Pringle, M. Estimating average and proportional variograms of soil properties and their potential use in precision agriculture. Precis. Agric. 1999, 1, 125–152. [Google Scholar] [CrossRef]

- Atzberger, C. Advances in remote sensing of agriculture: Context description, existing operational monitoring systems and major information needs. Remote Sens. 2013, 5, 949–981. [Google Scholar] [CrossRef]

- Dunford, R.; Michel, K.; Gagnage, M.; Piégay, H.; Trémelo, M.-L. Potential and constraints of Unmanned Aerial Vehicle technology for the characterization of Mediterranean riparian forest. Int. J. Remote Sens. 2009, 30, 4915–4935. [Google Scholar] [CrossRef]

- Johnson, L.F. Temporal stability of an NDVI-LAI relationship in a Napa Valley vineyard. Aust. J. Grape Wine Res. 2003, 9, 96–101. [Google Scholar] [CrossRef]

- Steltzer, H.; Welker, J.M. Modeling the effect of photosynthetic vegetation properties on the NDVI–LAI relationship. Ecology 2006, 87, 2765–2772. [Google Scholar] [CrossRef]

- Wang, Q.; Adiku, S.; Tenhunen, J.; Granier, A. On the relationship of NDVI with leaf area index in a deciduous forest site. Remote Sens. Environ. 2005, 94, 244–255. [Google Scholar] [CrossRef]

- Fensholt, R.; Sandholt, I.; Rasmussen, M.S. Evaluation of MODIS LAI, fAPAR and the relation between fAPAR and NDVI in a semi-arid environment using in situ measurements. Remote Sens. Environ. 2004, 91, 490–507. [Google Scholar] [CrossRef]

- Wang, X.; Wang, M.; Wang, S.; Wu, Y. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 152–159. [Google Scholar]

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691. [Google Scholar] [CrossRef]

- Bendig, J.; Bolten, A.; Bareth, G. UAV-based imaging for multi-temporal, very high Resolution Crop Surface Models to monitor Crop Growth VariabilityMonitoring des Pflanzenwachstums mit Hilfe multitemporaler und hoch auflösender Oberflächenmodelle von Getreidebeständen auf Basis von Bildern aus UAV-Befliegungen. Photogramm. Fernerkund. Geoinf. 2013, 2013, 551–562. [Google Scholar]

- Dong, J.; Burnham, J.G.; Boots, B.; Rains, G.; Dellaert, F. 4d crop monitoring: Spatio-temporal reconstruction for agriculture. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, Singapore, 29 May–3 June 2017; pp. 3878–3885. [Google Scholar]

- UN DESA. World Urbanization Prospects: The 2014 Revision. 2015. Available online: https://esa.un.org/unpd/wup/Publications/Files/WUP2014-Report.pdf (accessed on 17 June 2019).

- Branco, L.H.C.; Segantine, P.C.L. MaNIAC-UAV-a methodology for automatic pavement defects detection using images obtained by Unmanned Aerial Vehicles. In Journal of Physics: Conference Series; IOP Publishing: Bristol, UK, 2015; p. 012122. [Google Scholar]

- Knyaz, V.; Chibunichev, A. Photogrammetric techniques for road surface analysis. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 515–520. [Google Scholar] [CrossRef]

- Phung, M.; Dinh, T.; Hoang, V.; Ha, Q. Automatic Crack Detection in Built Infrastructure Using Unmanned Aerial Vehicles. In Proceedings of the 2017 International Symposium on Automation and Robotics in Construction (ISARC), Taipei, Taiwan, 28 June–1 July 2017; pp. 823–829. [Google Scholar]

- Nishar, A.; Richards, S.; Breen, D.; Robertson, J.; Breen, B. Thermal infrared imaging of geothermal environments and by an unmanned aerial vehicle (UAV): A case study of the Wairakei–Tauhara geothermal field, Taupo, New Zealand. Renew. Energy 2016, 86, 1256–1264. [Google Scholar] [CrossRef]

- Saari, H.; Aallos, V.-V.; Akujärvi, A.; Antila, T.; Holmlund, C.; Kantojärvi, U.; Mäkynen, J.; Ollila, J. Novel miniaturized hyperspectral sensor for UAV and space applications. In Sensors, Systems, and Next-Generation Satellites XIII; SPIE: Bellingham, WA, USA, 2018; p. 74741M. [Google Scholar]

- Herold, M.; Roberts, D.; Smadi, O.; Noronha, V. Road condition mapping with hyperspectral remote sensing. In Proceedings of the 2004 AVIRIS Workshop, Pasadena, CA, USA, 31 March–2 April 2004. [Google Scholar]

- Joyce, K.E.; Belliss, S.E.; Samsonov, S.V.; McNeill, S.J.; Glassey, P.J. A review of the status of satellite remote sensing and image processing techniques for mapping natural hazards and disasters. Prog. Phys. Geogr. 2009, 33, 183–207. [Google Scholar] [CrossRef] [Green Version]

- Dong, L.; Shan, J. A comprehensive review of earthquake-induced building damage detection with remote sensing techniques. ISPRS J. Photogramm. Remote Sens. 2013, 84, 85–99. [Google Scholar] [CrossRef]

- Xu, Z.; Yang, J.; Peng, C.; Wu, Y.; Jiang, X.; Li, R.; Zheng, Y.; Gao, Y.; Liu, S.; Tian, B. Development of an UAS for post-earthquake disaster surveying and its application in Ms7. 0 Lushan Earthquake, Sichuan, China. Comput. Geosci. 2014, 68, 22–30. [Google Scholar] [CrossRef]

- Vetrivel, A.; Gerke, M.; Kerle, N.; Nex, F.; Vosselman, G. Disaster damage detection through synergistic use of deep learning and 3D point cloud features derived from very high resolution oblique aerial images, and multiple-kernel-learning. ISPRS J. Photogramm. Remote Sens. 2018, 140, 45–59. [Google Scholar] [CrossRef]

- Li, S.; Tang, H.; He, S.; Shu, Y.; Mao, T.; Li, J.; Xu, Z. Unsupervised detection of earthquake-triggered roof-holes from UAV images using joint color and shape features. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1823–1827. [Google Scholar]

- Leprince, S.; Ayoub, F.; Klinger, Y.; Avouac, J. Co-Registration of Optically Sensed Images and Correlation (COSI-Corr): An operational methodology for ground deformation measurements. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 1943–1946. [Google Scholar]

- Kotsis, I.; Kontoes, C.; Paradissis, D.; Karamitsos, S.; Elias, P.; Papoutsis, I. A Methodology to Validate the InSAR Derived Displacement Field of the September 7(th), 1999 Athens Earthquake Using Terrestrial Surveying. Improvement of the Assessed Deformation Field by Interferometric Stacking. Sensors (Basel) 2008, 8, 4119–4134. [Google Scholar] [CrossRef]

| Common and/or Example Camera and Its Spectral Range, Resolution, and Payload | Applications | Benefits and Obstacles in Practical Applications | |||||

|---|---|---|---|---|---|---|---|

| RGB cameras | Sony A9 | ~400–700 nm | 24.2 MP | 588 g | Visual analysis, mapping, land cover/land use, classification, pedestrians and vehicles detection and tracking, etc. | Advantages: (1) high availability in products ranging across different levels of cost, resolution, and weight; (2) easy to be integrated in different platforms (3) well-modeled camera geometry with a large number of software solutions; and (4) videos. Disadvantages: (1) Often come without radiometric/geometric calibration; and (2) lack of spectral information for many tasks. | |

| Canon EOS 5D mark IV | ~400–700 nm | 30.4 MP | ~800 g | ||||

| Nikon D850 | ~400–700 nm | 45.7 MP | 915 g | ||||

| Light-weight multispectral cameras | Sentera Quad Multispectral Sensor | ~400–700 nm | 1.2 MP | 170 g | Visual analysis, vegetation detection and analysis, crop monitoring, mining, soil moisture estimation, fires detection, water level measurement, land cover/land use mapping, etc. | Advantages: (1) wider spectrum range and narrower bandwidth; (2) often come with means of radiometric calibration; (3) most of the sensors still follow a perspective model that can be well-processed for geometric reconstruction; and (4) allow for sub-decimeter multispectral mapping. Disadvantages: (1) data format compatibility (sometimes 12 or 16-bit) for software packages; (2) as a component of a UAV system, its cost remains to be relatively high; (3) sensor compatibility to drones may be limited; and (4) videos may not be available. | |

| ~655 nm | |||||||

| ~725 nm | |||||||

| ~800 nm | |||||||

| Quest Condor5-UAV | 400–1000 nm | 2048 × 1088 (2.2 MP) | ~1450–1950 g | ||||

| Phaseone iXU/iXU-RS 1000 Aerial Cameras | ~400–700 nm | 100 MP | 1430–1700 g | ||||

| Hyperspectral sensors | Rikola Hyperspectral Camera | 500–900 nm | 1.05 MP | <600 g | Land cover/land use mapping, vegetation indices estimation, biophysical, physiological, or biochemical parameters estimation, agriculture and vegetation disease detection, disaster damage assessment, etc. | Advantages: abundant spectral information, 10 nm-level bandwidth for more advanced applications in material identification and so on. Disadvantages: (1) high cost; (2) most of them are linear-array and require specialized software, and the users may take care of the data format and geometric corrections; (3) dimension reduction is needed for typical classification tasks; (4) sensor compatibility to drones may be limited; and (5) videos may not be available. | |

| Resonon Pika NIR-640 | 900–1700 nm | 640 pixels | 2700 g | ||||

| High-Efficiency Hyperspec SWIR | 1000–2500 nm | 384 pixels | 4400 g | ||||

| Light-weight thermal infrared sensors | FLIR Vue Pro | 7.5–13.5 µm | 640 × 512 pixels | 72 g | Tracking creatures, volcanos detection, forest fire detection, hydrothermal studies, urban heat island measurement, etc. | Advantages: (1) well-targeted sensor for surface temperature measurement that drives a lot of new applications; (2) the camera model is normally perspective, and relatively easy to be processed than linear-array cameras. Disadvantages: (1) lack of texture information of its imageries brings difficulties in 3D reconstruction tasks; (2) for direct temperature measurement, it needs careful calibration; (3) cost is relatively high comparing to that of RGB cameras; (4) comparatively lower resolution than that of RGB cameras due to sensor design; (5) sensor compatibility to drones may be limited. | |

| Workswell WIRIS 640 | 7.5–13.5 μm | 640 × 512 pixels | <390 g | ||||

| YUNEEC CGOET thermal imaging camera and low-light camera | 8–14 μm | 2.1 MP | 278 g | ||||

| UAV LiDAR | RIEGL VUX-240 | Near -infrared | Up to 1,500,000 per second | ≤3800 g | Vegetation canopy analysis, estimation of forest carbon absorption, mapping cultural heritage, building information modeling, etc. | Advantages: (1) direct geometric measurement; (2) multiple returns of the signals are useful for terrain modeling under thin canopies. Disadvantages: (1) high equipment cost; (2) highly dependent on expensive onboard GPS/IMU measurement (potentially with external reference stations); (3) increased payload for surveying quality LiDAR; (4) may not work in GPS-denied regions. | |

| Velodyne Puck LITE | 903 nm | Up to ~600,000 per second | ~590 g | ||||

| Livox Mid-40 | 905 nm | 100,000 per second | 760 g | ||||

| LULC Mapping | Change Detection | ||

| Low-to-moderate-resolution satellite RS data |

|

| |

| High-to-very high-resolution satellite or airborne data |

|

| |

| Ultra-high-resolution UAV-borne data |

|

| |

| Selected Applications | Highlights | |

|---|---|---|

| Precision agriculture and vegetation | Soil property estimation [135]; crop/vegetation management [136,137]; forest structure assessment [138]. |

|

| Urban environment and management | Traffic control [139]; urban infrastructure management [140]; building observation [141]; urban environment mapping [142]. |

|

| Disaster hazard and rescue | Post-disaster assessment [143,144]; emergency responses [145]; fire surveillance [146]; landslide dynamic monitoring [147,148]; coastal vulnerability assessment [149,150] |

|

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yao, H.; Qin, R.; Chen, X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sens. 2019, 11, 1443. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11121443

Yao H, Qin R, Chen X. Unmanned Aerial Vehicle for Remote Sensing Applications—A Review. Remote Sensing. 2019; 11(12):1443. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11121443

Chicago/Turabian StyleYao, Huang, Rongjun Qin, and Xiaoyu Chen. 2019. "Unmanned Aerial Vehicle for Remote Sensing Applications—A Review" Remote Sensing 11, no. 12: 1443. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11121443