1. Introduction

The detection of straight and accurate building outlines is essential for urban mapping applications like 3D city modeling, disaster management, cadaster, and taxation. To accommodate the high demand of various applications, accurate building outline extraction requires an automated procedure. Rottensteiner and Briese [

1] stated that in the building reconstruction task, building boundary determination is a crucial but difficult step. In recent years, automatic approaches for detecting building roof outlines are still intensively studied. In urban remote sensing, automatic building line detection has a low success rate due to scene complexity, incomplete cue extraction, and sensor dependencies [

2].

LiDAR point clouds have become one of the most commonly used input data for large-scale mapping. For efficiency purposes, the need to optimize LiDAR data usage has increased rapidly. As LiDAR is able to provide accurate three-dimensional (x, y, z) point clouds free from relief displacement, the use of LiDAR data to extract building polygons automatically has become a key target for researchers and practitioners within the geospatial industry. However, extracting building boundaries from point clouds is still a challenging task because LiDAR points do not always exactly hit the edge of a building. As a result, LiDAR point clouds feature jagged edges instead of straight and continuous lines. In addition, different kind of building roof configurations (size, shape, color, etc.) and the surrounding context increase the difficulties to design an automated method. A robust approach is required to adapt to different kinds of buildings and overcome the influence of noise. Efforts on building outline extraction were also conducted on the combination of LiDAR point clouds and aerial images to use each of their advantages. Unfortunately, to fuse different input data is not easy as building representations may suffer from relief displacement or building distortion in image scenes [

3]. In many cases, the geometric position of images and LiDAR point clouds hardly match.

Machine learning approaches, such as Support Vector Machine (SVM), Random Forest (RF), deep learning, etc., undeniable provided a breakthrough in the field of point cloud processing. Machine learning has been widely used to improve object extraction (e.g., building, road, trees, etc.), classification, and segmentation. Many geo-applications (base map production, cadaster, road inventory, etc.) require fine object boundaries to generate Geographic Information System (GIS) vector data as a final product. However, machine learning methods use neighborhood information to obtain learned or handcrafted features. Notably at borders between segments, such as at building outlines, such features are fuzzy. As a consequence, extracting sharp edges is difficult for machine learning methods and results typically do not meet map production requirements. Therefore, post processing is necessary. Currently, a limited number of image-based building delineation tools exist, including BREC [

4], as well as point cloud-based commercial software such as TerraScan [

5] and ENVI [

6]. Nevertheless, the quality (geometric accuracy, straightness, and completeness) of the extracted building outline results need to be improved, especially for complex buildings [

7,

8]. This study aims to provide an alternative solution to extract accurate and straight building outlines from point clouds automatically.

The problem of line detection method is one of establishing meaningful groups of edge points that lies along straight lines. Hough transformation is a well-known model-based approach that uses length-angle or slope-intercept parameters to detect lines [

9]. Hough transform was introduced by Paul Hough in 1962 to detect curves in images and was applied to the field of computer vision by Duda and Hart [

10], who encouraged the use of the length from origin R and orientation angle θ for line parameterization. It was designed to solve a number of computer vision problems. Vosikis and Jansa [

11] stated that Hough transformation is a powerful tool for automated building extraction and creation of digital city models, but also that the degree of automation is still highly correlated to the quality of the input data. Another challenging problem of the use of the Hough transform is the limited accuracy of the object extraction, which is sensitive to the resolution of the accumulator space and to the noise in the data [

12,

13]. Performance on detecting different sizes and orientations of buildings automatically also remains a problem.

This study proposes a new method to extract accurate building outlines from ALS (Airborne Laser Scanner) point clouds automatically using an extension of Hough transform that exploits lists of ordered points to define line segments and corners. We provide the following three significant contributions to overcome common issues when dealing with the use of Hough transformation for line extraction:

Detection of arbitrary directions. Regularization should not hamper the extraction of consecutive roof edges that are not perpendicular or roof edges with an orientation not matching the overall orientation of a building;

Extraction of different interrupted segments of different lengths belonging to the same line. Instead of a line, collinear line segments should be distinguishable for preserving the original building geometry;

Robustness to noise, flaws, and irregularity. The shape and size of a building should be preserved in case jaggy points or due to objects exist (e.g., trees) adjacent to the building causing flaws in the building segmentation result.

The rest of this article is organized as follows:

Section 2 describes related work on 2D building outline extraction. The methodological framework is presented in

Section 3.

Section 4 describes different test sets and data preprocessing steps used in this study.

Section 5 presents the sensitivity analyses and experiments followed by

Section 6 that presents and discusses results. Finally, conclusions and recommendations are given in

Section 7.

2. Related Work

Several methods to extract building outlines from point clouds have been proposed in the past. This literature review first discusses building extraction methods using various remote sensing techniques, followed by the use of LiDAR data and regularization for building extraction. Second, the use of Hough transform for line extraction is discussed.

Recent studies on edge detection using deep learning techniques focus more on natural images [

14,

15,

16,

17,

18,

19] and remote sensing images [

20]. Resulting edges are often thick and noisy and require post processing before thinned and sharp boundaries are obtained [

21]. In 2018, Microsoft conducts building footprints extraction for the US and Canada areas from satellite images by first classify building pixels using a deep learning toolkit (called CNTK), followed by a polygonization step that converts building pixel into polygon. The polygonization is conducted by imposing a priori building properties that are manually defined and automatically tuned [

22]. Some of these a priori properties are

Building edge should have some minimal some minimal length, both relative and absolute, e.g., 3 m;

Consecutive edge angles are likely to be 90 degrees;

Consecutive angles cannot be very sharp, i.e., smaller than some auto-tuned threshold, e.g., 30 degrees;

Building angles likely have few dominant angles, meaning that all building edges are forming an angle of (dominant angle ± nπ/2).

Yu et al. [

23] claim to present the first edge-aware deep learning network for 3D reconstruction from point cloud data, namely EC-Net. Edge-aware means here that the network learns the geometry of edges from training data, and during testing, it identifies edge points and generates more points along edges (and over the surface). This method has limitations in cases of large holes and otherwise incomplete data. Sharp edges around tiny structures that are severely under-sampled may not be extracted because the training patches become too large for tiny structures.

Many studies combine different type of remote sensing to acquire accurate building outlines. Sohn and Downman [

2] proposed a method for building footprint extraction from a combination of IKONOS and LiDAR data. They apply a model-driven approach on a LiDAR point cloud and a data-driven approach on satellite images. Li et al. [

24] present an automatic boundary extraction method by combining LiDAR and aerial images to handle various building shapes. Their method consists of three main steps. First, roof patch points are detected from filtering, building detection, and removal of wall points. Second, initial edges are obtained using a Canny detector constrained by buffer areas of edges extracted in the first step. In the final step, roof patches and initial edges are fused using mathematical morphology to form complete building boundaries. It is stated that the boundary result contains redundancies, which need further simplification. The low point density causes a high number of false negatives and false positives.

Zhao et al. [

25] propose building footprint extraction and regularization using connected components from airborne LiDAR data and aerial images. Building candidates are separated from a LiDAR Digital Surface Model (DSM) using connected operators and trees are removed using NDVI values derived from the image. Building boundary lines are traced by a sleeve line simplification algorithm and are regularized based on the principal building direction. This study identifies different sources of errors like the regularization process, DSM interpolation, and vegetation points near the buildings. Awrangjeb [

26] determined building outlines from point cloud data by boundary tracing and regularization to preserve high detail boundaries and return high pixel-based completeness. Errors occur due to failures to estimate a dominant direction. The method is extended by Akbulut et al. [

27] to smooth jagged building boundaries generated by an active contour algorithm. LiDAR point clouds and aerial images were combined to improve the segmentation quality of the active contour method. Siddiqui et al. [

28] performed a gradient-based approach to extract building outlines from both LiDAR and photogrammetric images. Gradient information obtained from LiDAR height and local color matching is used to separate trees from buildings. Prominent building orientations are regularized based on the assumption that building edges are mainly oriented at 0° (parallel), 90° (perpendicular), 45° (diagonally), 22.5°, or 11.25° to each other. The proposed method is able to deliver consistent results. However, their method is designed to extract buildings with flat and sloped roofs. Xie et al. [

29] presented a method for hierarchical regularization of building boundaries in noisy ALS and photogrammetric point clouds consisting of two stages. First, boundary points are shifted along their refined normal vector and divided into piecewise smooth line segments. In the second stage, parallel and vertical relationships between line segments are discovered to further regularize edges. 2D building footprints extraction was tested on two ISPRS Toronto benchmark datasets and obtained 0.77 m and 0.68 m RMSE.

Several studies focus on the utilization of LiDAR data as a single input for building extraction and apply regularization to improve the result [

25,

28,

30,

31,

32,

33,

34]. Regularization is applied to enforce rectangularity and orthogonality of human-made objects. Lach and Kerekes [

35] report on boundary extraction from LiDAR point cloud using 2D α-shapes and apply consecutive regularization. Line simplification based on a sleeve-fitting approach is applied once the edge points are extracted. Then, regularization is used to force boundary line segments to be either parallel or perpendicular to dominant building orientations. In this study, a quantitative analysis and geometric accuracy of the result is not given. Dorninger and Pfeifer [

31] use mean shift segmentation to detect a building and use 2D α-shape generalization to extract initial roof outlines from an airborne LiDAR point cloud. Based on the angular direction of subsequent line segments and connected linear components of the α-shape, regularization is then applied to enforce orthogonality and parallelism of linear components. Hence, the adjusted building edges are either parallel or orthogonal, and the method is not applicable for a building that has more than two edge directions.

Sampath and Shan [

34] modified a convex hull algorithm to trace building boundaries from raw point cloud data and determine dominant directions. Then, regularization is applied using hierarchical least-squares to extract building outlines such that the slopes of line segments are either equal or perpendicular. However, the regularization quality was found to be dependent on the point density of the LiDAR data and only considers two dominant directions. Gilani et al. [

32] propose building detection and regularization using multisource data which are ALS point cloud data, orthoimages, and Digital Terrain Models (DTM). Candidate buildings are identified using connected component analysis from a building mask generated from ALS data. Building outlines are then detected by hierarchical clustering and filtering. Building footprints are generated using image lines and extracted building boundaries. Regularization begins with the selection of the longest line and it next adjusts nearby lines. The regularized building outlines may deviate from the correct building orientations since the result depends on the selection of longest lines.

A comprehensive review on the use of Hough transforms in image processing and computer vision is presented in Illingworth and Kittler [

36]. Mukhopadhyay and Chaudhuri [

37] present a comprehensive and up-to-date survey of Hough transform on various issues of Hough transforms, which even after 51 years of discovery is a lively topic of research and applications. Herout et al. [

38] specifically review the use of Hough transforms for line detection. Morgan and Habib [

39] used Hough transforms from a TIN model of LiDAR point clouds to determine building boundaries. Triangles incident to the building edges (internal breaklines) that connect buildings and ground points are selected and used to obtain triangle centers. These points are fed into a Hough transform to detect lines. Because of limited point density and smaller numbers of extracted triangles on some short building boundaries, the Hough transform detects less building lines than it should. Guercke and Sester [

40] used Hough transforms to simplify and straighten the shape of building footprints extracted from LiDAR data. A jagged building outline is divided into small line segments and is then transformed into Hough space. Line hypotheses are determined based on the dominant direction detected as peaks in Hough space. These hypothesis lines are then refined by least squares to form a closed polygon. The method has problems on buildings with multi-parallel short building edges (stair-like shape) due to peak detection failures.

Iterative Hough transform is proposed [

41] to detect building edges from 3D point clouds. Each line is optimized with an orthogonal least square fit. After a line is found, points belonging to this line are removed and the Hough transform procedure is repeated until no points are left or a sufficient number of lines is found. However, the proposed method has drawbacks such as memory insufficiency and overflow in the accumulator matrix because many points belong to a specific line. Another drawback is a premature stop of the iteration process due to many identical points with the same coordinates, which then yield no line direction due to zero covariance matrix. The Hough Transform suffers from several well-known problems including spurious peaks and quantization effects. Miller [

42] extracted building edges from a DSM generated from a LiDAR point cloud. Each edge is converted to lines using a Hough transform to get the building footprint. Inaccuracy of the extracted building footprint of a large dormer and tall extruding roof structure are mainly caused by rotation and bigger pixel size (down sampling) that ultimately reduced the performance of their approach. Oesau [

43] proposes a multiline extraction method for shape detection of mobile laser scanner (MLS) point cloud data. 2D line segments are extracted through a Hough Accumulator that combines both a Hough transform and global maxima in a discrete parameter space. However, over-simplification is introduced by the coarse resolution of the Hough Accumulator. Albers et al. [

44] use Hough transform to extract building line segments from airborne LiDAR point clouds. The building edge points are selected by a 2D α-shape algorithm and then repositioned based on energy minimization using three terms: distance estimated line to input points, angle between consecutive lines, and line segment length. The proposed method allows presenting more than one building orientation but is reported that to work for consecutive segments with 45° and 90° angle difference (angle ∈ {45°,90°,135°,180°}). Hohle [

45] generates straight building polygons from aerial images using Hough transform. It follows an orthogonality and parallelism scheme by assuming that consecutive building edges are orthogonal.

In summary, obtaining accurate building boundaries are still an open problem. Prior Hough transform works mentioned above have certain limitation either to determine arbitrary building orientation or in accurate peak detection. Thus, the absence of building detection of arbitrary directions from point cloud motivates us to develop an automatic approach to extract building outlines accurately from a given point cloud.

3. Methodology

The goal of this study is to obtain the 2D outline or bounding polygon of a building automatically. We propose a hierarchical approach to select accurate lines by generating a point accumulator matrix from ordered building edge points.

The expected result from this study is a set of 2D building polygons in vector format that meet the map following specifications:

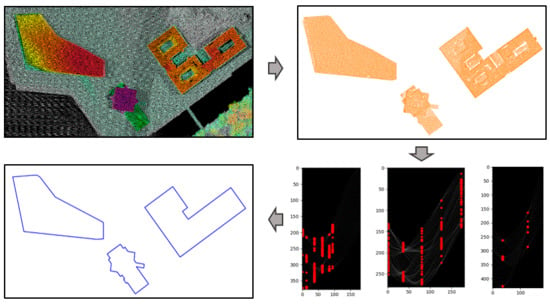

We propose a general framework for obtaining building outlines and demonstrate its ability by applying it to different test sets. The general framework of this study consists of five major steps: pre-processing, edge point selection, building line segment detection using Ordered point aided Hough Transform (OHT), line segment intersection, and 2D closed building polygon extraction from ordered building corners. The whole framework is shown in

Figure 1.

The novelty of our method lies in the use of ordered points, which to the best our knowledge has never been used to detect building lines of different length in Hough space. The capability of the proposed method to detect arbitrary building orientations provides another advantage over existing methods. The proposed OHT (approach consists of the following steps:

Extract ordered 2D edge points from a given building segment by applying K-NN concave hull;

Parameterize all possible lines through the 2D edge points, and store the distances to the origin r of these lines in a matrix R. A Hough accumulator matrix HA counts accumulated points of the same orientation angle and distance r;

Detect dominant building directions;

Identify candidate cells representing prominent lines along dominant directions;

Create an Ordered Point List matrix OPL to store lists of ordered points. OPL is then used for detecting and filtering line segments, generating building corners, and forming a closed polygon.

An overview of the proposed building outline extraction is illustrated in

Figure 2.

3.1. Edge Point Selection

Our method requires ordered building edge points. Only the 2D coordinates of the edge points will be kept to extract building outlines. For this task, we apply the concave hull K-Nearest Neighbor algorithm [

47] that uses the value of

k as the only parameter to control the smoothness of the result. In the beginning, this algorithm finds its first vertex (point A) based on the lowest Y value. Then it will search

k-nearest points (for

k = 3: point B, C, D), as candidate for the next vertex. Point C will be assigned as the next vertex if it has the largest angle of right-hand turn measured from the horizontal line through point A. In the next step, the

k nearest points of point C are queried, and the selected vertex is appointed once it has the largest angle of right-hand turn from line A–C. The process is repeated until the first vertex is selected once again as candidate.

Higher k will lead to smoother polygons. In this study, the k values vary from 3 to 11 depending on the point density. A building with irregular point intervals may require higher value of k to derive suitable edges.

3.2. Hough Accumulator Matrix

The key idea of the Hough transform is to select straight-line candidates based on a voting scheme in a parameter space. To parameterize a line, we use two parameters: distance to the origin,

r, and orientation angle,

. The mapping relations of a point in object space (

x, y) and (

) parameter space is specified in Equation (1).

The chosen polar parameters are advantageous over the slope-intercept (m, c) parameterization since the (m, c) may have a singularity when the slope of the line is infinite. The number of rows and columns of the matrix is adjusted according to the bin sizes of the two parameters. Each cell contains a number of lines having and r values.

As illustrated in

Figure 3a, one line defined by a pair

may contain different building edge points, here A, B, and C. The fan of all lines passing through one point (

Figure 3a) corresponds to a sinusoidal curve in Hough space (

Figure 3b), while each point in Hough space corresponds to one straight line in object space.

For a given set of n ordered LiDAR points Pi = (xi, yi), for i = 1, 2, …n, forming a building roof boundary, a matrix R containing line distance r parameter values is created as follows:

Fix origin O at (min xi, min yi) for (xi, yi), i = 1, 2, …, n and initiate a matrix R with dimension (n × 181);

For point Pi and for each {0°, 1°, 2°,.., 180°}, determine ri() using Equation (1);

Store ri in matrix R at position (i, ).

Matrix R stores r-values for lines of different angles (0° to 180°) through each point Pi. Next, it will be considered if points share lines. Lines common to many points are likely to define a building edge. To identify common lines, i.e., lines defined by different points but sharing the same values, the Hough Accumulator (HA) is created next. HA requires binning of r and θ to represent the location of its cells. Each cell in HA stores the number of points with matching θ and r. Different cells in HA represent different combinations of θ and r values. The higher the number in a HA cell, the more likely the cell produces a correct line for an edge.

HA is a 2D array of size (number of

binr × 180). The trade-off between the number of bins of the matrix and the number of available observations is crucial [

8]. Too many bins may lead to a sparse representation of the density that will decrease the ability to detect prominent lines. On the other hand, too few bins will reduce the resolution and accuracy of the building line results. We recommended that the bin width of

r, (

binr), is set according to the average point interval.

3.3. Detection of Arbitrary Building Directions

Building shapes and other man-made objects are often characterized by certain geometrical regularities [

45], mostly appearing as perpendicularity or parallelism. However, in a reality, not every building is constructed using such geometrical regularities. Therefore, determining possibly arbitrary building direction is an important strategy for the building extraction process.

One limitation of Hough Transform is that when the number of lines increases, the correlated error around the peak in the parameter space could cause ambiguities for line [

48]. To limit the search space for selecting line candidates, the proposed algorithm uses local maxima detection instead of global maxima. Local maxima are detected by identifying peaks in the graph of the variance of

along the columns of the matrix HA. The variance is defined as the average of the squared deviations from the mean number of lines along the

column of matrix HA. Finding local maxima means to detect accumulator cells that have higher vote than their neighborhood (peak). For detecting peaks, we first apply a Savitzky Golay filter [

49] to denoise the data. The basic idea of Savitzky Golay filtering is to replace each point by the corresponding value of a least squares fit of a low order polynomial fitted to points in a window centered at that point.

In the smoothed variance data, peaks are detected if they meet two criteria: normalized threshold (

amp) and minimum distance (

mindist) between each detected peak. The normalized threshold will select peaks with higher amplitude than the threshold.

Figure 4 illustrates the one-dimensional peak detection from a smoothed variance input (magenta graph) to define the direction. In most cases, the difference between two dominant building directions is close to 90°.

3.4. Hotspot Selection

After dominant directions are detected, the algorithm will next search along the corresponding columns for cells in matrix HA that have at least minL edge points. These cells are then preserved as initial hotspots. An initial hotspot is a candidate cell to represent a building line. The minimum edge length (minL) parameter is set based on the required building length, ℓ, as minL = , where d denotes the point interval. If, for example, the point interval is 0.5 m and the minimum length of the building edge to be extracted ℓ is 2.5 m, the required threshold minL = 5.

However, in HA, different column adjacent hotspots (one cell difference) may represent lines belonging to the same edge. This happens because some noise from the same edge points result in slightly different

combinations. Hotspot filtering is applied by searching for any adjacent hotspots along a dominant direction. Only the hotspot that has maximal

HA(i,j) value is kept.

Figure 2d,e, respectively, present the result of initial hotspot detection and hotspot filtering.

3.5. Ordered Point List

One of our main contributions is to extract building outlines using a so-called Ordered Point List (OPL) matrix. This matrix is generated based on the classic Hough accumulator. OPL has the same dimension as HA and the same () parameters. The difference is that HA stores just the number of accumulated points voting for a line while OPL stores the actual ordered lists of points voting for a line. This means that each cell in OPL () contains an ordered list of points Pi that are on the parametric line r = .

To obtain more accurate and complete building edges, in OPL, the contents of each filtered hotspot is merged with its adjacent cells of the same column (Δ

binr = 0 and Δ

binr = 1). Matrix OPL

m contains the merged point members. The difference cell value between HA, OPL, HA

m, and OPL

m of specific (

) is llustrated in

Figure 5. Points accumulated in HA (

Figure 5a) are specified in OPL (

Figure 5b). The red cell marks one of the hotspots. Point members of hotspot cells of merged OPL

m (a red cell in

Figure 5d) are adapted by include neighboring cells. As an example, OPL

m(105,87) has 11 additional points that complement the existing ordered point members of OPL

(105,87).

3.6. Segment Detection and Filtering

Our algorithm yields arbitrary main directions that are used to select prominent lines. For some buildings with a complex shape, a false main direction may get detected because the corresponding θ has the highest vote in HA. Therefore, we apply hierarchical filtering to eliminate false lines resulting from a wrong main direction.

Using filtered hotspots and the merged Point Accumulator matrix OPLm as main input, the algorithm measures the distance of each point belonging to a filtered hotspot, to the hotspot line parameterized by the pair (). Then, it counts the number of hotspot points that have a distance more than binr. A hotspot will be removed from the list if one of two following conditions holds:

This mean distance threshold is set based on an empirical observation for detecting false lines and distinguishing those from correct but noisy lines.

A line resulting from the traditional Hough transform cannot distinguish different segments belonging to the same line. In this case, two different building edge segments that share the same () will not be detected. The proposed edge extraction algorithm requires segments, instead of lines, for producing a closed polygon. In this study, a segment is defined as a part of a line that is bounded by two building corner points. Note that a building line may contain more than one segment.

Segment detection exploits ordered edge points stored in OPLm. Each segment has a θ, an r, and a number of ordered points. Multiple segments on a line are identified by a gap or point jump between ordered points in the list. We set the gap threshold to 2 (two points jump). In this case, a gap is detected if there are at least two consecutive points missing from the list of ordered points.

An example of segment detection is illustrated in

Figure 6. The red line in

Figure 6a consists of two segments as a gap exists in the list of ordered points (L9) as presented in

Figure 6b. Line L9 has two different segments. Point members of segment

L9A are marked in red (from 58 to 77), while the point members of segment

L9B are marked in blue (from 116 to 135). The two segments are separated by a large point interval.

A segment is then identified and selected as a list containing a minimum number of points

minL. The minimum segment length is adjusted according to the output requirements. As an example, L1 in

Figure 6b consists of two lists of ordered points separated by a big gap. Nevertheless, it will return one segment only because the first list of ordered points only has two members, points 0 and 1.

After all segments are identified, matrix OPLm contains different segments. These segments are sorted based on the first element of the list of points. Finally, segment filtering is performed. This last filtering step is needed to remove false segments that may remain in the result. A shorter segment having all points the same as a longer segment will be removed if one of two following conditions holds:

Lists of points are input for corner extraction. First, all building segments are sorted based on their lowest participating point label. Then, the algorithm extracts intersections from consecutive segments. A closed building polygon is formed by connecting all consecutive segment intersections.

From two given parametric lines,

The intersection point

c (

xc, yc) is computed as

Table 1 summarizes different kind of point and line representations used in our proposed method. A point in object space is translated into a row in R, as a curve in HA, and as part of a list in OPL. Lines and segments in object space are represented by a subset of a column in R and as a cell in HA. A cell in HA shows the number of points belonging to the same line, while a cell in OPL shows the list of points of a line. Hence, in OPL, lines and segments are represented by list of several points.

3.7. Validation

Two different quantitative analyses are performed to evaluate the result of the building outline extraction: performance metrics and positional accuracy. The ground truth used as reference to assess our results is described in

Section 4. We used three performance metrics [

50] to evaluate the building polygon results, completeness (

Cp), correctness (

Cr), and quality (

Q). The performance metrics are calculated based on an area comparison of buildings in the reference data and in the result in the unit m

2.

The positional accuracy is a geometric validation that evaluates if the quality of the extracted building polygons meets the geometric accuracy criteria. The positional accuracy is determined using a Root Mean Square Error (RMSE) value. The squared root of the average of the squared differences between corner positions (X and Y coordinate) in the reference and in the result is calculated to estimate the RMSE.

where

= Coordinates of resulting corner points

= Coordinates of corner points in the ground truth

n = Total number of corner points

6. Results and Discussion

In the following, we will discuss the overall results of the proposed method. A more detailed discussion of the experiments for the Makassar test set and the Vaihingen test sets respectively, are given next. Finally, a comparison to previous results on the benchmark test set (Vaihingen-B) is presented.

6.1. General Evaluation

Goal of this study is to provide a robust procedure for automatic building outline extraction from airborne LiDAR point clouds. Using the ground truth described in

Section 4, we evaluate our method. Our method is able to achieve high completeness and correctness for both study areas as shown in

Table 4. For Makassar, building polygons results achieve 91.8% completeness, 99.2% correctness and 91.1% quality. The quality metrics of the Vaihingen-A test set are also high, 96.4%, 96.5%, and 93.2%, respectively. Vaihingen-B achieves slightly less good quality metrics of 90.1% completeness, 99.4% correctness and 89.6% quality.

We use different methods of point cloud filtering, classification, and segmentation to assure that our building extraction method is able to adapt to and is not limited to a specific processing workflow. The proposed workflow for extracting building outlines requires segmentation as a pre-processing step. The quality of the segmentation result influences the extracted building outlines. Irregular concave hull outlines as shown in

Figure 2b, are not suitable as input for map products.

Table 2 shows a comparison between an object-based evaluation of our results and the concave hull of the segmented building points. Our results have better quality and correctness, with an increase of between 1% and 3%, except for Vaihingen-B. This proves that our algorithm is able to increase the quality of building outlines resulting from the concave hull and improve the segmentation result quality.

It should also be noted that the computational performance of the proposed method is not an issue. The average computation time of the proposed OHT method for delineating 2D building outlines on an Intel Core 2Duo CPU with a 2.4 GHz processor is about 0.579s per-building.

The completeness of our building outlines results is lower than the correctness. This means that, on average, building polygons are smaller than the ground truth data. As LiDAR points rarely sample a building edge, only points located within the building roof are used, which causes a shrinking of the polygons. We estimated the percentage of shrinking area using all buildings of simple (square) size that are well-segmented in our test sets. The average of the building shrinking is consistent at 4.24% for the Makassar test set and 4.36% for the Vaihingen test sets.

6.2. Results for Makassar

Indonesian base map specifications [

46] require that each building edge of at least 2.5 m should be presented on the map. Accordingly, the minimum length

minL of a segment for the Makassar test set is five points (2.5m/0.5m). The

binr size is set to 0.5 m. The ground truth data used as reference for Makassar is the Indonesian base map at a scale of 1:10,000. The base map is obtained by manual 3D-compilation of stereo-photos acquired at the same time and from the same platform as the LiDAR data we use.

The comparison between the extracted building polygons of Makassar and the ground truth is presented in

Figure 16b. From 42 buildings present on the base map, 37 buildings are extracted. The five missing buildings are indicated by blue stars. In addition, our method is able to extract one building that is not present in the ground truth (as indicated by a black arrow in

Figure 16b). This building has a size of 15.5 m by 43 m and should have been present in the base map.

Even though some noise exists in the segmented building points, the proposed method is still able to extract accurate building polygons. As highlighted in

Figure 16a, most filtered building segments (showed in blue) are noisy. High vegetation points connecting to the buildings cause most of the noise. Six buildings have a size or shape different from the base map (indicated by black stars) due to imperfect filtering and segmentation of building points. Low elevation of building roof points is assumed as the main cause why five buildings (indicated by blue arrows) are completely missing.

Positional accuracy is measured for buildings that exist in our result and the base map. RMSE is measured based on the coordinate differences between building corners from our result and reference data. The RMSE for complete building polygons is between 0.38–0.57 m. For a building where some parts of its roof are not completely detected (as indicated by the black circle in

Figure 16b), the building corners shift reaches 10.84 m.

During the experiment, the proposed method can actually extract building edges of a length less than the required 2.5 m from the Makassar data. The two buildings, as indicated by red arrows in

Figure 16b, are annexes of a size of 1.3 m by 6 m. These building annexes are likely not included in the map, as their size does not meet the 1:10,000 base map specification. Our method is able to extract such small building parts by applying the minimum edge length paramater

minL to

minL = 3.

6.3. Results for Vaihingen

The chosen test sets of Vaihingen consist of high-residential buildings that have complex shape and are surrounded by trees. Several buildings have multiple roof layers of different heights and various length of edges. We set 1.5 m as the minimum length of a building line segment corresponding to minL = 3 for both of the Vaihingen test sets. The binr size setting is between 0.3 and 0.6 m.

We succeeded to extract all buildings in the Vaihingen-A and the Vaihingen-B test set. For the Vaihingen-A test set, the reference data is acquired by Open Street Map (OSM) validated by aerial orthophotos provided by ISPRS. For the Vaihingen-B test set, we use a set of building outlines provided by ISPRS as the ground truth. The RMSE result of buildings in the Vaihingen-A data that are completely segmented is between 0.2–0.37 m. As shown in

Figure 17b, five out of 27 buildings have different shape due to over-segmentation (three red stars) or under-segmentation (three black stars) due to dense trees above the building roof.

The RMSE of extracted building corners is between 0.19–0.96 m. According to the ground truth, the Vaihingen-B results have the lowest quality metrics among our three test sets. The main cause for a lower quality metric is mis-detection of a vegetated building roof part of significant size (8.5 m by 9.5 m), marked by the black circle in

Figure 17c, which is likely a low roof covering an underground basement. The filtering process failed to detect this roof part as a building as the height difference between this basement roof and the ground is less than 1 m. The Digital Surface Model (DSM) of this subset area, as presented in

Figure 18b, shows that height information may not help to detect this kind of building (inside the white circle). In addition, some vegetated building roofs are not completely detected (indicated by brown circles in

Figure 18a,b). This happens because the trees and their surroundings disturb the expected planarity.

In the results, several building polygons have a shape and size different from the ground truth because of tree points allocated to the segmented building points. High vegetation adjacent to buildings is the main cause for the high false positive rate of the building polygon results. Moreover, neighboring trees that cover some parts of the roof induce some false negatives. For example, in

Figure 19a, the building segment contains parts of four adjacent trees. These tree points have similar height as the building roof, which range from 275.5 to 276.5 m. The height difference to the mean is about 40 cm as shown in

Figure 19c. However, our line extraction algorithm is able to ignore 1 out of 4 trees as indicated by the yellow circle in

Figure 19a. An extra feature, such as intensity as shown in

Figure 19d may not work for obtaining a correct outline for this building case. A trade off in using the intensity value to remove trees will reduce the building completeness as there is a roof part covered by a big tree. An additional clustering step (using e.g., DBSCAN) may work to remove trees. Nevertheless, when a building roof is covered by dense trees (e.g., as marked by the purple circle in

Figure 19a), orthogonal input data (such as ALS point cloud data or airborne images) may not work to detect the building boundaries accurately.

6.4. Comparison to Previous Building Outline Works

An analysis is performed to compare the performance of the proposed method to previous methods. Several previous results are selected from the Vaihingen-B evaluation, which is available on the ISPRS web page (

http://www2.isprs.org/commissions/comm3/wg4/results/a2_detect.html) and confirmed by the corresponding articles. We only consider methods aiming at obtaining straight building outlines that implement a data-driven approach and apply a regularization approach similar to our research objective. The selection is also limited to works that use ALS point clouds or a combination of ALS point clouds and aerial photos as input. We also include two results that are not presented on the ISPRS web page [

25,

33] in our comparison.

As shown in

Table 5, the MON3 represents Siddiqui et al. [

28] method has highest completeness but this method uses additional color information from aerial photos to get straight lines. Building boundaries in the Vaihingen-B test set are clearly recognizable as bold white pixels in the aerial photos. Hence, the low building roof (as marked by the white circle in

Figure 18a) as well as vegetated roofs (as marked by the brown circle in

Figure 18a) can still be detected. However, the lower correctness metric of the MON3 method indicates that some buildings may be over-segmented. On the contrary, our method has best correctness and quality metric for this test set. The higher quality metric indicates that our method delivers complete and accurate building polygon results.

Our proposed method requires building roof points as input. Then, the 2D edge points are transformed to Hough space to obtain prominent building outlines. Based on this scheme, as long as building points are given, our method should work for arbitrary point cloud data including point clouds generated from images by for example dense image matching process.

7. Conclusions and Future Work

We have presented a framework for delineating 2D building outlines automatically from segmented ALS point clouds. An adaptive approach to obtain boundary lines of different building shapes and sizes is developed based on an extension of Hough transformation exploiting the order of points forming a building outline. Point votes for all possible lines passing through given edge points are stored in a Hough accumulator matrix. Based on the accumulator matrix, the algorithm uses local maxima for detecting dominant building orientations. The prominent lines are selected based on detected dominant directions. A hierarchical filtering approach by empowering the Hough transform with ordered edge points is introduced to select correct building segments and derive accurate building corners. Many problems that occur with the original Hough Transform are avoided.

Our enhanced Hough transformation method, which exploits the ordered points and regularity, gives a substantial improvement in the quality of building outline extraction as concluded from a comparison to existing benchmark results. Based on our extensive evaluation, the proposed procedure is able to deliver high completeness and correctness as well as high positional accuracy. Even though the Hough transformation involves many matrices, aside from the pre-processing step, the processing speed to process the building outline is not an issue as the proposed algorithm works per single building. Another advantage of the proposed method is that it directly uses the point cloud. No data conversion or additional data is required. The proposed procedure is tested on different areas to verify the robustness of our method to the variation of different data specifications, and urban landscape characteristics. In case noise and small flaws exist in the data, the voting scheme of the Hough transform makes our method feasible for preserving the actual building shape and size. Implementation of the proposed outline extraction on different building segmentation attests that the use of the algorithm is not limited to a particular segmentation method.

We implemented a data-driven method that involves directed regularization that works effectively to detect multiple building orientations and derive accurate straight outlines. Our algorithm, accordingly, has limitation to detect curved outlines. As the algorithm requires edge points for delineating outlines, it is sensitive to the pre-processing steps, which are segmentation and concave hull. It may require parameter tuning for different dataset and different output requirements to achieve satisfactory results. Therefore, to guarantee an optimal result, understanding the input data and determining output criteria is necessary.

Extension of the present work should consider the implementation and performance evaluation of the proposed method for massive map production. Applying a robust classification and segmentation method for a larger area may become one of the challenges in future, as it may influence the outline extraction result. Application of Hough transform for curved buildings should also be elaborated.