1. Introduction

Slope failures are dangerous mass movements that occur frequently in mountainous terrain, causing extensive damage to natural features as well as to economic and social infrastructure [1]. They can also have direct, long-term physical impacts on major infrastructure such as roads, bridges, and human habitations, with severe effects on local infrastructure development and land use management [2]. Slope failures can occur for a variety of reasons; they can, for example, be triggered by heavy rainfall, slope disturbance during road construction, or earthquake shocks. There have been a number of investigations into different types of slope failure susceptibility analysis, and mitigation strategies are increasingly being applied [3,4,5]. Slope failure analysis generally aims not only to detect slope failures but also to delineate the areas affected and compile inventories. Recent susceptibility analyses have aimed to determine hazard-prone areas on the basis of previously generated inventory data sets [6]. To complete a slope failure susceptibility analysis, it is crucial first to analyze any available inventory of previous events and their areal extent, and to investigate the relationship between their spatial distribution and the conditioning factors, so that other hazard-prone areas can then be identified [3,7]. The results depend heavily on the accuracy of the inventory data set, especially when machine learning is used for susceptibility analysis, as both training and testing are based on selected portions of the inventory [8]. Although most knowledge-based susceptibility analysis approaches do not depend on inventory data sets, the predicted hazard-prone areas still need to be validated, for which a reliable inventory data set is required [9]. Both machine learning and knowledge-based mass movement susceptibility analyses therefore require an accurate inventory data set in order to model and map hazard-prone areas [10].

Slope failures are often dangerous, particularly those that occur along a road. Falling masses of soil and rock require a rapid response [1]. Since such mass movements often occur in remote mountainous areas, there can be considerable time pressure to delineate the areas affected so that any victims can be reached in good time and provided with humanitarian support [11]. Remote sensing and GIS are therefore considered fundamental for slope failure analysis [12]. However, while remote sensing provides frequently updateable data for almost all parts of the world, the data products generated often suffer from relatively low resolution.

In recent years, unmanned aerial vehicle (UAV) technologies have become widely accepted as a low-cost way of obtaining critical, up-to-date, and accurate field data. This technology is still developing and is being used for an increasing number of applications [12]. UAV-based field surveys have been assisted by improvements in the global navigation satellite system (GNSS) [13]. Enhanced data production workflows allow emergency response teams to obtain the required images in a timely manner, in order to respond to emergency situations. The potential for UAV use in natural hazard and emergency situations is clearly growing, and the latest technological developments have been described in recent publications [1,4,14,15]. Compared to traditional mapping methods, UAVs have the potential to acquire data with higher spatial and temporal resolutions, as well as offering flexible deployment capabilities [16].

As well as the possibility of using UAVs to obtain very high resolution (VHR) imagery, software such as Agisoft PhotoScan or Pix4D Processes has also helped in the compilation of mosaics, as well as with image georeferencing and post-processing, allowing users to obtain, for example, digital elevation models (DEMs) [13]. UAVs have in the past been used on a number of occasions to collect data for slope failure inventories [12,17,18], but analysis and classification of remotely sensed (UAV-derived) imagery in order to extract slope failures along roads is a new area of research.

The strategies used for image analysis and classification are generally either object-based or pixel-based. Both have been integrated into different machine learning models for semi-automated feature extraction [19]. Although object-based analysis has become more common [20], pixel-based methods have long been the mainstay for remotely sensed image classification [19]. A number of object-based and pixel-based semi-automated approaches have already been developed for mass movement detection, using different machine learning models [6,21,22].

Over the last decade, deep-learning models, and in particular convolutional neural networks (CNNs), have been successfully used for classification and segmentation of remotely sensed imagery [23,24,25], for scene annotation, and for a broad range of object detection applications [26]. Although there are some ways of using deep learning approaches in an unsupervised mode, CNNs are supervised machine learners in which large numbers of labelled sample patches are used to train learnable filters so as to minimize the applied loss function [27]. The performance of these approaches is strongly dependent on their network architecture, the sample patches selected for input, and on graphics processing unit (GPU) speed [28]. While CNNs have been shown to have superior capabilities for extracting features from remotely sensed images, only a limited number of investigations have been carried out to date using CNN approaches for slope failure detection. Ghorbanzadeh et al. [28] evaluated different CNN approaches using different input data sets and sample patch window sizes for their research into landslide detection in the high Himalayas of Nepal. They trained their CNN approaches on two sets of spectral band data together with topographic information. Their highest detection accuracy, measured by the mean intersection-over-union (mIOU), was obtained using a small window size for the CNN sample patches. Ding et al. [29] used a CNN approach to detect landslides in Shenzhen, China, with optical data from GF-1 imagery, but only obtained a relatively low overall landslide detection accuracy of 67%.

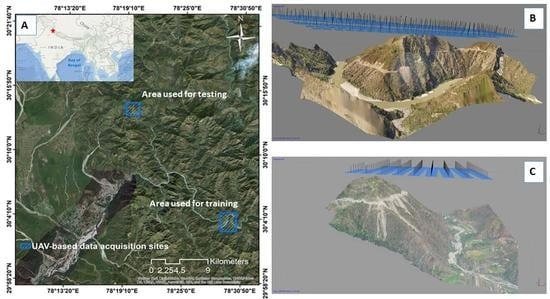

The literature review has revealed that we are not the first to use CNN approaches for mass movement detection. However, the potential of CNN approaches using data from UAV remotely sensed imagery for slope failure detection remains under-explored. We have therefore investigated the use of CNN approaches for slope failure detection from UAV remote sensing imagery. By using the spectral information from the UAV imagery together with slope data, we have demonstrated the performance of CNN approaches and the advantages of using topographic data, in particular slope data, for slope failure detection. We compared the maps produced using different CNN approaches with manually extracted slope failure inventory data sets obtained from a variety of different sources. The maps were evaluated using a frequency area distribution (FAD) technique. The detected slope failures were then validated using conventional computer vision validation methods.
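The idea of feeding a CNN with sample patches that combine spectral bands and a slope layer can be illustrated with a minimal sketch. It is not the authors' pipeline: the function names `stack_bands_with_slope` and `extract_patch`, the three-band image, the random synthetic rasters, and the 48-pixel window are all assumptions chosen for illustration only.

```python
import numpy as np


def stack_bands_with_slope(spectral, slope):
    """Append a slope raster as an extra channel to an (H, W, C) spectral image."""
    if spectral.shape[:2] != slope.shape:
        raise ValueError("spectral image and slope raster must share height and width")
    return np.concatenate([spectral, slope[..., np.newaxis]], axis=-1)


def extract_patch(image, row, col, window):
    """Return the window x window patch around (row, col), or None near the border."""
    half = window // 2
    top, left = row - half, col - half
    inside = (
        top >= 0
        and left >= 0
        and top + window <= image.shape[0]
        and left + window <= image.shape[1]
    )
    return image[top:top + window, left:left + window, :] if inside else None


# Synthetic stand-ins (assumed shapes) for a UAV orthomosaic and a slope layer.
rng = np.random.default_rng(0)
spectral = rng.random((128, 128, 3), dtype=np.float32)
slope = rng.random((128, 128), dtype=np.float32)

stacked = stack_bands_with_slope(spectral, slope)
patch = extract_patch(stacked, row=64, col=64, window=48)
print(stacked.shape, patch.shape)
```

Changing `window` changes how much spatial context each sample patch carries; dropping the call to `stack_bands_with_slope` gives the spectral-only variant of the same patch.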

5. Comparison of Results Obtained by Manual Detection with Those Obtained Using CNNs

A number of accuracy assessment measures relevant to this study were used to comprehensively evaluate the performance of the applied CNNs by analyzing the conformity between the manually detected slope failure inventory dataset and the dataset derived using CNNs. The performances were compared using the positive predictive value (PPV), the true positive rate (TPR), and the F-score metrics. These accuracy assessment measures were calculated using three different pixel classifications: true positive (TP), false positive (FP), and false negative (FN) (see Table 5). The PPV, also known as the precision (Prec) [45], is the proportion of pixels detected as slope failure by the CNNs that were correctly identified, which can be calculated as:

$$\mathrm{PPV} = \frac{TP}{TP + FP} \tag{3}$$

where TP is the number of pixels that were correctly detected as slope failure areas (i.e., the number of true positives) and FP is the number of false positives, i.e., pixels that were incorrectly identified as slope failure areas (see Figure 12).

The true positive rate (TPR), also known as the recall (Rec), is the proportion of slope failure pixels in the polygons extracted manually from the slope failure inventory that were correctly detected by the CNNs, which is derived as follows:

$$\mathrm{TPR} = \frac{TP}{TP + FN} \tag{4}$$

in which FN is the number of inventory slope failure pixels that were not detected by the CNNs, i.e., the number of false negatives (see Figure 12). The F-score was used to reach an overall model accuracy assessment. The F-score, also known as the F1 measure (F1), is defined as the weighted harmonic mean of the PPV and the TPR, which is calculated using Equation (5):

$$F = \frac{1}{\alpha \, \dfrac{1}{\mathrm{PPV}} + (1 - \alpha) \, \dfrac{1}{\mathrm{TPR}}} \tag{5}$$

where α is a weight used to define the desired balance between the PPV and the TPR. When α = 0.5, the PPV and the TPR are in balance, a compromise that gave the highest level of accuracy in our CNN results. The overprediction rate (OPR), also known as the commission error, and the unpredicted presence rate (UPR) [46] are the other relevant accuracy assessment measures used to assess the similarity between manually extracted slope failure areas and CNN-detected areas. These measures are also based on matches and mismatches between the inventory and the CNN-detected polygons of slope failure areas using TPs, FPs, and FNs, and can be estimated using Equations (6) and (7):

$$\mathrm{OPR} = \frac{FP}{TP + FP} \tag{6}$$

$$\mathrm{UPR} = \frac{FN}{TP + FN} \tag{7}$$
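The pixel-based measures described above can be sketched in a few lines. This is a minimal illustration rather than the authors' code: it assumes the usual definitions PPV = TP/(TP+FP) and TPR = TP/(TP+FN), the weighted harmonic mean F = 1/(α/PPV + (1−α)/TPR), and OPR = FP/(TP+FP), UPR = FN/(TP+FN). The function names and the toy masks are hypothetical.

```python
def confusion_counts(reference, predicted):
    """Count TP, FP and FN over two equally long sequences of 0/1 pixel labels."""
    tp = fp = fn = 0
    for ref, pred in zip(reference, predicted):
        if pred and ref:
            tp += 1  # detected and present in the inventory
        elif pred and not ref:
            fp += 1  # detected but absent from the inventory
        elif ref and not pred:
            fn += 1  # present in the inventory but missed
    return tp, fp, fn


def accuracy_measures(tp, fp, fn, alpha=0.5):
    """Return PPV, TPR, weighted F-score, OPR and UPR from pixel counts."""
    if tp == 0:
        raise ValueError("at least one true positive is needed for these ratios")
    ppv = tp / (tp + fp)
    tpr = tp / (tp + fn)
    f_score = 1.0 / (alpha / ppv + (1.0 - alpha) / tpr)
    return {
        "ppv": ppv,
        "tpr": tpr,
        "f_score": f_score,
        "opr": fp / (tp + fp),  # assumed definition: commission error
        "upr": fn / (tp + fn),  # assumed definition: missed inventory share
    }


# Toy flattened masks: 1 = slope failure pixel, 0 = background.
reference = [1, 1, 1, 1, 0, 0, 0, 0, 1, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0, 1, 0]

tp, fp, fn = confusion_counts(reference, predicted)
for name, value in accuracy_measures(tp, fp, fn).items():
    print(f"{name}: {value:.3f}")
```

Under these assumed definitions, OPR and UPR are the complements of PPV and TPR, so reporting all four mainly serves readability; with `alpha=0.5` the F-score reduces to the familiar 2·PPV·TPR/(PPV+TPR).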

The mean intersection-over-union (mIOU) is another accuracy assessment metric that was applied in this study. The mIOU is a well-known accuracy assessment metric in the computer vision domain, particularly for semantic segmentation and object detection studies. The application of the mIOU for landslide detection validation is fully described in [28] and [40]. In general, the mIOU is appropriate for validating any approach that produces bounding polygons (see Figure 13) against a ground truth dataset. It is specified as the mean of Equation (8):

$$\mathrm{IOU} = \frac{\text{Area of overlap}}{\text{Area of union}} \tag{8}$$
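As a rough illustration of the metric, the intersection-over-union of a detected area and its ground truth counterpart can be computed on sets of pixel coordinates and then averaged. The sketch below assumes that the mean is taken over pairs of (ground truth, detected) areas; the helper names `rectangle`, `iou`, and `mean_iou` and the toy rectangles are hypothetical.

```python
def rectangle(top, left, height, width):
    """Return the set of (row, col) pixels covered by an axis-aligned rectangle."""
    return {(r, c) for r in range(top, top + height) for c in range(left, left + width)}


def iou(reference_pixels, predicted_pixels):
    """Intersection-over-union of two pixel sets."""
    union = reference_pixels | predicted_pixels
    if not union:
        raise ValueError("IOU is undefined when both areas are empty")
    return len(reference_pixels & predicted_pixels) / len(union)


def mean_iou(pairs):
    """Average the IOU over (ground truth, detected) pairs of pixel sets."""
    scores = [iou(reference, predicted) for reference, predicted in pairs]
    return sum(scores) / len(scores)


# Two toy areas: one partially overlapping detection and one exact match.
pairs = [
    (rectangle(0, 0, 4, 4), rectangle(0, 2, 4, 4)),
    (rectangle(10, 10, 2, 2), rectangle(10, 10, 2, 2)),
]
print(f"mIOU: {mean_iou(pairs):.3f}")
```

Working on pixel sets keeps the example independent of any GIS library; with real polygons the same ratio would be taken on polygon areas instead.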

The […] approach achieved the highest overall accuracy, with an F-score of 85.46%, followed by the […] and […] approaches, which achieved F-scores of 80.67% and 79.42%, respectively (see Table 6).

The […] and […] approaches resulted in the lowest F-scores, both having values of just over 59%. All CNNs apart from those that used a sample patch size of 80 × 80 pixels yielded higher F-scores when the slope data was taken into account in the training and testing processes. The greatest increase in accuracy obtained by including the slope data was in the CNN with a sample patch size of 48 × 48 pixels, whose F-score increased by 2%; the mIOU value of results from the same CNN when including the slope data increased by about 35. The increases for CNNs with sample patch sizes of 32 × 32 and 64 × 64 pixels were smaller, at about 18 and 19 percent, respectively. The PPV for […] was 83%, whereas that for […] was 89.9%; their TPR values decreased to 78.46% and 81.85%, respectively.