Cropland Mapping Using Fusion of Multi-Sensor Data in a Complex Urban/Peri-Urban Area

Abstract

1. Introduction

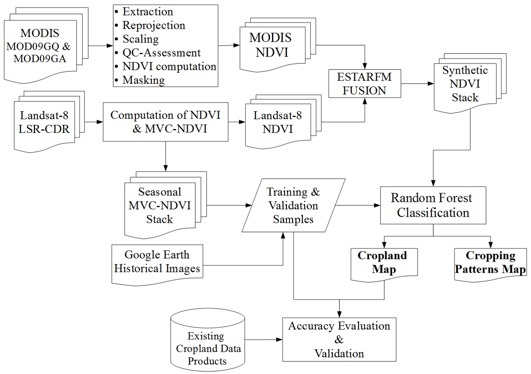

2. Data and Methods

2.1. Site Description

2.2. Data Acquisition and Pre-Processing

2.3. Spatio-Temporal Image Fusion

2.4. Training and Validation Data Collection

2.5. Time Series Classification

2.6. Accuracy Assessment

3. Results

3.1. Fusion Results

3.2. Classification Results

3.2.1. Cropland Extent

3.2.2. Cropping Regimes

3.2.3. Peanuts and Other Crops

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Date (Year 2015) | Day of Year (DOY) | Land Cloud Cover (%) |
|---|---|---|
| 10th January | 10 | 16.31 |
| 26th January | 26 | 50.37 |
| 11th February | 42 | 50.63 |
| 27th February | 58 | 31.79 |
| 15th March | 74 | 83.76 |
| 31st March | 90 | 3.71 |
| 16th April | 106 | 9.11 |
| 2nd May | 122 | 1.92 |
| 18th May | 138 | 59.24 |
| 3rd June | 154 | 100 |
| 19th June | 170 | 100 |
| 5th July | 186 | 100 |
| 21st July | 202 | 10.38 |
| 6th August | 218 | 3.52 |
| 22nd August | 234 | 52.59 |
| 7th September | 250 | 100 |
| 23rd September | 266 | 19.39 |
| 9th October | 282 | 0.92 |
| 25th October | 298 | 2.28 |
| 10th November | 314 | 68.93 |
| 26th November | 330 | 42.17 |
| 12th December | 346 | 12.42 |
| 28th December | 362 | 15.26 |

| | Day of Year (DOY) | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Available Landsat 8 Images (Cloud Cover < 20%) | 10 | 90 | 106 | 122 | 202 | 218 | 282 | 298 |
| MODIS 8-day Composite | 9 | 89 | 105 | 121 | 201 | 217 | 281 | 297 |
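
The pairing in the table above can be reproduced with a little arithmetic. The sketch below is illustrative only and is not taken from the paper's processing chain: it assumes that MODIS 8-day composite periods start on DOY 1, 9, 17, and so on, and that each Landsat 8 acquisition is matched to the composite whose window contains it. The function name `modis_composite_start` is hypothetical.

```python
def modis_composite_start(doy: int, period: int = 8) -> int:
    """Return the first day of year of the composite window containing `doy`.

    Assumes composite windows start at DOY 1 and repeat every `period` days.
    """
    if not 1 <= doy <= 366:
        raise ValueError(f"day of year out of range: {doy}")
    return ((doy - 1) // period) * period + 1


# Landsat 8 acquisition days of year listed in the table.
landsat_doys = [10, 90, 106, 122, 202, 218, 282, 298]

# Matching MODIS composite start days under the assumption stated above.
modis_doys = [modis_composite_start(d) for d in landsat_doys]

for landsat, modis in zip(landsat_doys, modis_doys):
    print(f"Landsat DOY {landsat:3d} -> MODIS composite starting DOY {modis:3d}")
```

Under that assumption every Landsat date falls on the second day of its composite window, which matches the one-day offset between the two rows of the table.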

| | Cropland | Forest | Grassland | Paddy | Urban & Water | Total | User’s Accuracy (UA) (%) |
|---|---|---|---|---|---|---|---|
| Cropland | 542 | 2 | 38 | 15 | 30 | 627 | 86.4 |
| Forest | 7 | 691 | 4 | 0 | 0 | 702 | 98.4 |
| Grassland | 38 | 0 | 638 | 27 | 0 | 703 | 90.8 |
| Paddy | 36 | 0 | 11 | 597 | 7 | 651 | 91.7 |
| Urban & Water | 56 | 0 | 0 | 12 | 640 | 708 | 90.4 |
| Total | 679 | 693 | 691 | 651 | 677 | 3391 | |
| Producer’s Accuracy (PA) (%) | 79.8 | 99.7 | 92.3 | 91.7 | 94.5 | | |
| Overall Accuracy (OA) (%) | 91.7 | | | | | | |
| Kappa | 0.9 | | | | | | |
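
The accuracy figures in the confusion matrix above follow from the cell counts. The sketch below is an illustration rather than the authors' code: it assumes that rows hold the mapped classes and columns hold the reference classes (consistent with user's accuracy being reported per row), and the function name `accuracy_metrics` is hypothetical. It uses the standard definitions of user's accuracy, producer's accuracy, overall accuracy, and Cohen's kappa.

```python
# Cell counts copied from the cropland extent confusion matrix.
labels = ["Cropland", "Forest", "Grassland", "Paddy", "Urban & Water"]
matrix = [
    [542, 2, 38, 15, 30],
    [7, 691, 4, 0, 0],
    [38, 0, 638, 27, 0],
    [36, 0, 11, 597, 7],
    [56, 0, 0, 12, 640],
]


def accuracy_metrics(matrix):
    """Compute UA, PA, OA and kappa from a square confusion matrix.

    Assumes rows are mapped classes and columns are reference classes.
    """
    n = sum(sum(row) for row in matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    diagonal = [matrix[i][i] for i in range(len(matrix))]

    users = [d / r for d, r in zip(diagonal, row_totals)]      # per mapped class
    producers = [d / c for d, c in zip(diagonal, col_totals)]  # per reference class
    overall = sum(diagonal) / n

    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n ** 2
    kappa = (overall - expected) / (1 - expected)
    return users, producers, overall, kappa


users, producers, overall, kappa = accuracy_metrics(matrix)

for name, ua, pa in zip(labels, users, producers):
    print(f"{name:14s} UA = {ua * 100:5.1f}%  PA = {pa * 100:5.1f}%")
print(f"Overall accuracy = {overall * 100:.1f}%")
print(f"Kappa = {kappa:.3f}")
```

With these counts the overall accuracy comes out at 91.7% and kappa at 0.896, which rounds to the 0.9 reported in the table.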

| | Double Cropping | Forest | Grassland | Paddy | Single Cropping | Urban & Water | Total | User’s Accuracy (UA) (%) |
|---|---|---|---|---|---|---|---|---|
| Double Cropping | 1546 | 4 | 65 | 17 | 716 | 48 | 2396 | 64.5 |
| Forest | 11 | 3217 | 1 | 0 | 104 | 0 | 3333 | 96.5 |
| Grassland | 124 | 1 | 3015 | 109 | 312 | 0 | 3561 | 84.7 |
| Paddy | 49 | 0 | 44 | 2467 | 82 | 41 | 2683 | 91.9 |
| Single Cropping | 735 | 27 | 173 | 80 | 1295 | 84 | 2394 | 54.1 |
| Urban & Water | 125 | 0 | 0 | 60 | 328 | 3068 | 3581 | 85.7 |
| Total | 2590 | 3249 | 3298 | 2733 | 2837 | 3241 | 17,948 | |
| Producer’s Accuracy (PA) (%) | 59.7 | 99.0 | 91.4 | 90.3 | 45.6 | 94.7 | | |
| Overall Accuracy (OA) (%) | 81.4 | | | | | | | |
| Kappa | 0.776 | | | | | | | |

| Crop Type | Market Availability |
|---|---|
| * Cabbage | March∼May; September and October |
| * Carrot | April and May; September∼December |
| * Spinach | April and May; September∼December |
| * Taro | September∼December |
| * Turnip | May; October∼January |
| ** Peanuts | September∼December |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nduati, E.; Sofue, Y.; Matniyaz, A.; Park, J.G.; Yang, W.; Kondoh, A. Cropland Mapping Using Fusion of Multi-Sensor Data in a Complex Urban/Peri-Urban Area. Remote Sens. 2019, 11, 207. https://doi.org/10.3390/rs11020207
