Detecting Building Changes between Airborne Laser Scanning and Photogrammetric Data

Abstract

1. Introduction

- We propose a method to detect building changes between ALS data and photogrammetric data. First, we provide an effective solution to convert and normalize multimodal point clouds to 2D image patches. The converted image patches are fed into a lightweight pseudo-Siamese convolutional neural network (PSI-CNN) to quickly detect change locations.

- The proposed PSI-CNN is compared to five other CNN variants with different inputs and configurations. In particular, the pseudo-Siamese and feed-forward architectures are compared quantitatively and qualitatively, as are different configurations of the multimodal inputs.
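To make the conversion step more concrete, here is a minimal sketch of turning a point cloud into a normalized height patch. It assumes a regular grid, keeps the highest point per cell, and applies min-max scaling; the function names `rasterize_dsm` and `normalize`, the cell size, and both rules are illustrative assumptions, not the procedure specified in this article.

```python
import math

def rasterize_dsm(points, cell_size, width, height, origin=(0.0, 0.0)):
    """Grid (x, y, z) points into a height raster, keeping the highest z per cell.

    Cells that receive no point stay None (a data gap).
    """
    grid = [[None] * width for _ in range(height)]
    x0, y0 = origin
    for x, y, z in points:
        col = math.floor((x - x0) / cell_size)
        row = math.floor((y - y0) / cell_size)
        if 0 <= row < height and 0 <= col < width:
            current = grid[row][col]
            if current is None or z > current:
                grid[row][col] = z
    return grid

def normalize(grid):
    """Min-max scale the valid heights to [0, 1]; gaps stay None."""
    values = [v for row in grid for v in row if v is not None]
    if not values:
        return grid  # nothing to scale
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0  # avoid division by zero on flat patches
    return [[None if v is None else (v - lo) / span for v in row] for row in grid]

# Four hypothetical points falling into a 2 x 2 grid of 1 m cells.
points = [(0.2, 0.3, 5.0), (0.4, 0.1, 7.0), (1.5, 0.5, 3.0), (1.2, 1.8, 9.0)]
dsm = rasterize_dsm(points, cell_size=1.0, width=2, height=2)
dsm_norm = normalize(dsm)
```

Running the same gridding on both point clouds would give two co-registered rasters that can be cut into patches of equal size.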

2. Related Work

2.1. Multimodal Change Detection

2.2. Deep Learning for Multimodal Data Processing

3. Materials and Methods

3.1. Preprocessing: Registration, Conversion, and Normalization

3.2. Network Architecture

4. Experiments

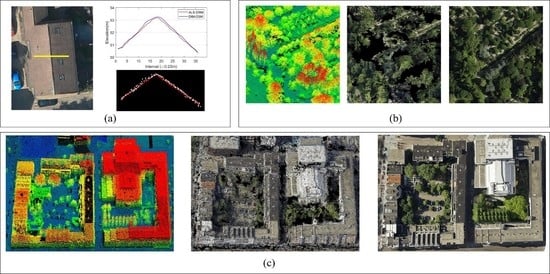

4.1. Descriptions of Experimental Data

4.2. Experimental Setup

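- (1) If the ratio of water and data-gap pixels in a patch exceeds the corresponding threshold (0.1 in the threshold table), the patch is eliminated.

- (2) If the ratio of changed pixels exceeds the corresponding threshold (also 0.1 in the threshold table), the patch is labeled as changed; otherwise it is labeled as unchanged.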
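The two rules above can be sketched as a small labeling function. This is an illustration only: the names `label_patch` and `make_mask` are hypothetical, the masks are assumed to be boolean grids of equal shape, and the default thresholds of 0.1 are taken from the threshold table.

```python
def label_patch(invalid_mask, changed_mask,
                max_invalid_ratio=0.1, min_changed_ratio=0.1):
    """Return None for a discarded patch, else 'changed' or 'unchanged'.

    invalid_mask marks water or data-gap pixels, changed_mask marks changed
    pixels; both are 2D lists of booleans with the same shape.
    """
    total = sum(len(row) for row in invalid_mask)
    invalid_ratio = sum(sum(row) for row in invalid_mask) / total
    if invalid_ratio > max_invalid_ratio:
        return None  # rule (1): too much water or too many gaps
    changed_ratio = sum(sum(row) for row in changed_mask) / total
    # rule (2): strictly larger than the threshold means 'changed'
    return "changed" if changed_ratio > min_changed_ratio else "unchanged"

def make_mask(rows, cols, n_true):
    """Hypothetical helper: a rows x cols mask whose first n_true pixels are True."""
    flat = [i < n_true for i in range(rows * cols)]
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

# A 4 x 5 patch has 20 pixels, so 3 changed pixels give a ratio of 0.15.
label_a = label_patch(make_mask(4, 5, 1), make_mask(4, 5, 3))
label_b = label_patch(make_mask(4, 5, 1), make_mask(4, 5, 2))
label_c = label_patch(make_mask(4, 5, 3), make_mask(4, 5, 10))
```

Here `label_a` is `"changed"`, `label_b` is `"unchanged"` because a ratio of exactly 0.1 does not exceed the threshold, and `label_c` is `None` because 15% of the patch is invalid.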

4.3. Contrast Experiments

- PSI-HHC: The proposed PSI-DC takes the difference between the two DSMs directly at the input. We also implemented an architecture that uses the ALS-DSM and DIM-DSM together as one branch (two channels) and R, G, B as the other branch (three channels). Compared with PSI-DC, this architecture, called PSI-HHC, works as a late fusion of the two DSMs.

- PSI-HH: In this PSI-CNN architecture, one branch is the ALS-DSM and the other is the DIM-DSM. Color channels are not used.

- FF-HHC: It is interesting to compare the performance of feed-forward architecture and pseudo-Siamese architecture for our task. A feed-forward CNN (FF-HHC) is adopted as shown in Figure 7. The five channels are stacked in the beginning and then fed into convolutional blocks and fully connected layers for feature extraction. HHC (height–height–color) indicates that two DSM patches and one orthoimage patch are taken as input. For more details, the readers are referred to [47].

- FF-DC: This feed-forward architecture takes four channels as input: DiffDSM, R, G, and B.

- FF-HH: This feed-forward architecture takes the ALS-DSM and DIM-DSM as input. Color channels are not applied.

- DSM-Diff: Given two DSM patches from ALS data and DIM data over the same area, simple DSM differencing produces a differential DSM. Averaging the height difference over all pixels of the patch gives the average height difference (AHD) between the two patches. Intuitively, the two patches are more likely to be changed if the AHD is high, and vice versa. The optimal AHD threshold can be obtained with Otsu’s thresholding algorithm [48], so this method classifies the patches as changed or unchanged in an unsupervised way.
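The DSM-Diff baseline lends itself to a short sketch. The code below assumes that the AHD is the mean absolute per-pixel difference and applies a plain histogram-based Otsu search to a list of AHD values; the function names, the 64-bin histogram, and the sample values are illustrative assumptions rather than the settings used in the experiments.

```python
def average_height_difference(dsm_a, dsm_b):
    """Mean absolute per-pixel height difference of two equally sized patches."""
    diffs = [abs(a - b)
             for row_a, row_b in zip(dsm_a, dsm_b)
             for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)

def otsu_threshold(values, bins=64):
    """Otsu's method on a 1D sample: the cut maximizing between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    total_sum = sum(c * h for c, h in zip(centers, hist))
    best_var, best_cut = -1.0, lo
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]
        sum0 += centers[i] * hist[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0 = sum0 / w0
        mean1 = (total_sum - sum0) / w1
        between = w0 * w1 * (mean0 - mean1) ** 2  # proportional to the variance
        if between > best_var:
            best_var, best_cut = between, lo + (i + 1) * width
    return best_cut

# AHD of one hypothetical 2 x 2 patch pair (heights in metres).
ahd_example = average_height_difference([[10, 12], [11, 10]], [[10, 15], [11, 15]])

# Hypothetical AHD values for eight patch pairs: five small, three large.
ahds = [0.1, 0.2, 0.15, 0.3, 0.25, 4.0, 5.0, 4.5]
threshold = otsu_threshold(ahds)
changed = [ahd > threshold for ahd in ahds]
```

With these sample values the threshold falls between the two clusters, so only the last three patch pairs are flagged as changed. No labels are needed at any point, which is what makes the baseline unsupervised.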

4.4. Evaluation Metrics

5. Results and Discussion

5.1. Results

5.2. Visualization of Feature Maps

5.3. Impact of Patch Size

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Tran, T.H.G.; Ressl, C.; Pfeifer, N. Integrated change detection and classification in urban areas based on airborne laser scanning point clouds. Sensors 2018, 18, 448.

- Matikainen, L.; Hyyppä, J.; Kaartinen, H. Automatic detection of changes from laser scanner and aerial image data for updating building maps. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 434–439.

- Qin, R.; Gruen, A. 3D change detection at street level using mobile laser scanning point clouds and terrestrial images. ISPRS J. Photogramm. Remote Sens. 2014, 90, 23–35.

- Zhang, Z.; Gerke, M.; Vosselman, G.; Yang, M.Y. A patch-based method for the evaluation of dense image matching quality. Int. J. Appl. Earth Obs. Geoinf. 2018, 70, 25–34.

- Remondino, F.; Spera, M.G.; Nocerino, E.; Menna, F.; Nex, F. State of the art in high density image matching. Photogramm. Rec. 2014, 29, 144–166.

- Nex, F.; Gerke, M.; Remondino, F.; Przybilla, H.J.; Bäumker, M.; Zurhorst, A. ISPRS benchmark for multi-platform photogrammetry. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, 2, 135–142.

- Ressl, C.; Brockmann, H.; Mandlburger, G.; Pfeifer, N. Dense image matching vs. airborne laser scanning–comparison of two methods for deriving terrain models. Photogramm. Fernerkund. Geoinf. 2016, 2, 57–73.

- Mandlburger, G.; Wenzel, K.; Spitzer, A.; Haala, N.; Glira, P.; Pfeifer, N. Improved topographic models via concurrent airborne lidar and dense image matching. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W4, 259–266.

- Hirschmüller, H. Stereo processing by semi-global matching and mutual information. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 328–341.

- Rothermel, M.; Wenzel, K.; Fritsch, D.; Haala, N. SURE: Photogrammetric surface reconstruction from imagery. In Proceedings of the LC3D Workshop, Berlin, Germany, December 2012; Volume 8, p. 2.

- Xu, S.; Vosselman, G.; Oude Elberink, S. Detection and classification of changes in buildings from airborne laser scanning data. Remote Sens. 2015, 7, 17051–17076.

- Singh, A. Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

- Vosselman, G.; Gorte, B.G.H.; Sithole, G. Change detection for updating medium scale maps using laser altimetry. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 34, 207–212.

- Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change detection based on deep Siamese Convolutional Network for optical aerial images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

- Wu, C.; Du, B.; Cui, X.; Zhang, L. A post-classification change detection method based on iterative slow feature analysis and Bayesian soft fusion. Remote Sens. Environ. 2017, 199, 241–255.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning spectral-spatial-temporal features via a recurrent convolutional neural network for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 924–935.

- Choi, K.; Lee, I.; Kim, S. A feature based approach to automatic change detection from LiDAR data in urban areas. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2009, 18, 259–264.

- Volpi, M.; Camps-Valls, G.; Tuia, D. Spectral alignment of multi-temporal cross-sensor images with automated kernel canonical correlation analysis. ISPRS J. Photogramm. Remote Sens. 2015, 107, 50–63.

- Gong, M.; Zhan, T.; Zhang, P.; Miao, Q. Superpixel-based difference representation learning for change detection in multispectral remote sensing images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2658–2673.

- Volpi, M.; Tuia, D.; Bovolo, F.; Kanevski, M.; Bruzzone, L. Supervised change detection in VHR images using contextual information and support vector machines. Int. J. Appl. Earth Obs. Geoinf. 2013, 20, 77–85.

- Malpica, J.A.; Alonso, M.C.; Papí, F.; Arozarena, A.; Martínez De Agirre, A. Change detection of buildings from satellite imagery and lidar data. Int. J. Remote Sens. 2013, 34, 1652–1675.

- Chen, L.C.; Lin, L.J. Detection of building changes from aerial images and light detection and ranging (LIDAR) data. J. Appl. Remote Sens. 2010, 4, 41870.

- Tian, J.; Cui, S.; Reinartz, P. Building change detection based on satellite stereo imagery and digital surface models. IEEE Trans. Geosci. Remote Sens. 2014, 52, 406–417.

- Basgall, P.L.; Kruse, F.A.; Olsen, R.C. Comparison of LiDAR and stereo photogrammetric point clouds for change detection. In Laser Radar Technology and Applications XIX; and Atmospheric Propagation XI; International Society for Optics and Photonics: Bellingham, WA, USA, 2014; Volume 9080, p. 90800R.

- Du, S.; Zhang, Y.; Qin, R.; Yang, Z.; Zou, Z.; Tang, Y.; Fan, C. Building change detection using old aerial images and new LiDAR data. Remote Sens. 2016, 8, 1030.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 91–99.

- Sherrah, J. Fully convolutional networks for dense semantic labelling of high-resolution aerial imagery. arXiv 2016, arXiv:1606.02585.

- Bromley, J.; Guyon, I.; LeCun, Y.; Säckinger, E.; Shah, R. Signature verification using a “Siamese” time delay Neural Network. Adv. Neural Inf. Process. Syst. 1994, 737–744.

- Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 20–26 June 2005; Volume 1, pp. 539–546.

- Taigman, Y.; Yang, M.; Ranzato, M.A.; Wolf, L. Deepface: Closing the gap to human-level performance in face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Washington, DC, USA, 23–28 June 2014; pp. 1701–1708.

- Koch, G.; Zemel, R.; Salakhutdinov, R. Siamese Neural Networks for One-Shot Image Recognition. Master’s Thesis, University of Toronto, Toronto, ON, Canada, 2015.

- Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 14 April 2015; pp. 4353–4361.

- Eitel, A.; Springenberg, J.T.; Spinello, L.; Riedmiller, M.; Burgard, W. Multimodal deep learning for robust rgb-d object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 681–687.

- Zbontar, J.; LeCun, Y. Computing the stereo matching cost with a convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1592–1599.

- Luo, W.; Schwing, A.G.; Urtasun, R. Efficient deep learning for stereo matching. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 5695–5703.

- He, H.; Chen, M.; Chen, T.; Li, D. Matching of Remote Sensing Images with Complex Background Variations via Siamese Convolutional Neural Network. Remote Sens. 2018, 10, 355.

- Lefèvre, S.; Tuia, D.; Wegner, J.D.; Produit, T.; Nassaar, A.S. Toward seamless multiview scene analysis from satellite to street level. Proc. IEEE 2017, 105, 1884–1899.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 2012, 1097–1105.

- Mou, L.; Schmitt, M.; Wang, Y.; Zhu, X.X. A CNN for the identification of corresponding patches in SAR and optical imagery of urban scenes. In Proceedings of the Urban Remote Sensing Event (JURSE), Dubai, UAE, 6–8 March 2017; pp. 1–4.

- Daudt, R.C.; Le Saux, B.; Boulch, A. Fully convolutional siamese networks for change detection. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 4063–4067.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Volume 1.

- Cyclomedia. Available online: https://www.cyclomedia.com (accessed on 11 October 2019).

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Urban change detection for multispectral earth observation using convolutional neural networks. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Valencia, Spain, 22–27 July 2018.

- Hu, X.; Yuan, Y. Deep-learning-based classification for DTM extraction from ALS point cloud. Remote Sens. 2016, 8, 730.

- PyTorch. Available online: https://pytorch.org/ (accessed on 11 October 2019).

- Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M.Y. Change detection between digital surface models from airborne laser scanning and dense image matching using convolutional neural networks. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2/W5, 453–460.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

| Value | Description |
|---|---|
| 0.1 | A sample is valid only if the ratio of water and data gaps is smaller than this threshold. |
| 0.1 | A sample is changed if the ratio of changed pixels is larger than this threshold; otherwise it is unchanged. |
| 2 m | The minimum height change of a building we aim to detect. |
| 10 m | Considering the data quality from dense image matching, we aim to detect building changes longer than 10 m. |

| Data Set | Changed | Unchanged | Total Samples | Ratio (Changed:Unchanged) |
|---|---|---|---|---|
| Training | 22,398 | 116,061 | 138,459 | 1:5.18 |
| Validation | 2,925 | 104,111 | 107,036 | 1:35.6 |
| Testing | 6,192 | 129,026 | 135,218 | 1:20.8 |
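As a quick arithmetic check, the totals and ratios in the data set table can be recomputed from the changed and unchanged counts. The dictionary layout and the function name `imbalance` are only illustrative.

```python
# (changed, unchanged) sample counts per split, copied from the table above.
splits = {
    "Training": (22398, 116061),
    "Validation": (2925, 104111),
    "Testing": (6192, 129026),
}

def imbalance(changed, unchanged):
    """Return (total samples, unchanged samples per changed sample)."""
    return changed + unchanged, unchanged / changed

summary = {name: imbalance(*counts) for name, counts in splits.items()}

for name, (total, ratio) in summary.items():
    print(f"{name}: total={total}, ratio=1:{ratio:.2f}")
```

The recomputed values match the table after rounding and show that the validation and testing sets are far more imbalanced than the training set.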

| Network | Recall (%) | Precision (%) | F1-Score (%) |
|---|---|---|---|
| DSM-Diff | 89.12 | 37.61 | 52.90 |
| FF-HH | 81.43 | 62.65 | 70.81 |
| FF-HHC | 82.17 | 67.17 | 73.92 |
| FF-DC | 82.33 | 65.09 | 72.70 |
| PSI-HH | 80.26 | 62.49 | 70.27 |
| PSI-HHC | 84.63 | 61.03 | 70.92 |
| PSI-DC | 86.17 | 68.16 | 76.13 |
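The F1-score column can be cross-checked against the other two columns using the standard definition of F1 as the harmonic mean of precision and recall. The sketch below assumes that this standard definition is the one used for the table; the variable names are illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same unit as the inputs)."""
    return 2 * precision * recall / (precision + recall)

# (recall, precision, reported F1) in percent, copied from the table above.
results = {
    "DSM-Diff": (89.12, 37.61, 52.90),
    "FF-HH": (81.43, 62.65, 70.81),
    "FF-HHC": (82.17, 67.17, 73.92),
    "FF-DC": (82.33, 65.09, 72.70),
    "PSI-HH": (80.26, 62.49, 70.27),
    "PSI-HHC": (84.63, 61.03, 70.92),
    "PSI-DC": (86.17, 68.16, 76.13),
}

recomputed = {name: f1_score(p, r) for name, (r, p, _) in results.items()}
deviation = {name: abs(recomputed[name] - results[name][2]) for name in results}

for name in results:
    print(f"{name}: recomputed F1 = {recomputed[name]:.2f}")
```

The recomputed scores agree with the reported column to within a few hundredths of a percentage point, which is consistent with the recall and precision values having been rounded before publication.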

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M.Y. Detecting Building Changes between Airborne Laser Scanning and Photogrammetric Data. Remote Sens. 2019, 11, 2417. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11202417
