Deep Multi-Scale Recurrent Network for Synthetic Aperture Radar Images Despeckling

Abstract

1. Introduction

2. Review of Speckle Model and Neural Network

2.1. Speckle Model of SAR Images

2.2. Convolutional Long Short-Term Memory

3. Proposed Method

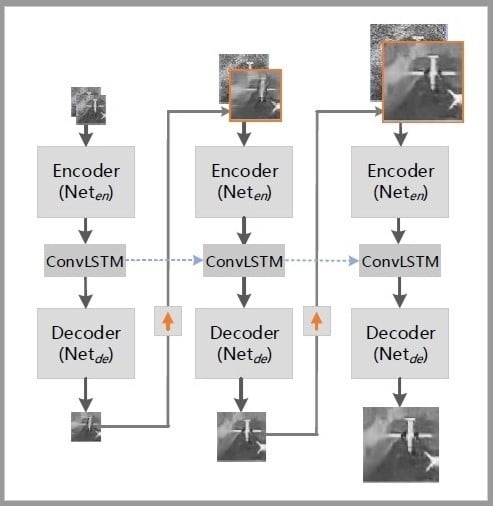

3.1. Architecture

3.2. Single Scale Network

3.3. Sub-Pixel Convolution

3.4. Proposed Evaluation Criterion

Edge Feature Keep Ratio and Feature Point Keep Ratio

1. Apply an edge detection algorithm, such as the Sobel [47], Canny [48], Prewitt [49], or Roberts [50] method, to both the clean image and the test image. This yields two binary edge maps in which pixels on an edge are set to 1 and all other pixels are set to 0.

2. Perform a bit-wise AND operation on the two edge maps from step 1. Positions where the edges coincide are set to 1; all other positions are set to 0.

3. Count the number of 1s in the edge map of the clean image and the number of 1s in the result of step 2, and calculate the ratio of the two numbers.
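The three steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not the authors' implementation: the function names `sobel_edges` and `efkr`, the gradient-magnitude threshold `thresh`, and the choice of a plain Sobel detector are assumptions made for the example. The ratio is taken here as coincident edge pixels divided by clean-image edge pixels, which is an interpretation consistent with the reported EFKR values lying between 0 and 1.

```python
def sobel_edges(img, thresh):
    """Step 1: binary edge map (1 = edge, 0 = background) from Sobel gradient magnitude.

    `img` is a list of rows of numbers; border pixels are left at 0.
    `thresh` is a hypothetical tuning parameter, not a value from the paper.
    """
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal and vertical Sobel responses at (y, x).
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            if (gx * gx + gy * gy) ** 0.5 > thresh:
                edges[y][x] = 1
    return edges


def efkr(clean, test, thresh=1.0):
    """Edge feature keep ratio of `test` with respect to `clean`."""
    e_clean = sobel_edges(clean, thresh)
    e_test = sobel_edges(test, thresh)
    # Step 2: bit-wise AND of the two edge maps, counted directly.
    kept = sum(a & b
               for row_c, row_t in zip(e_clean, e_test)
               for a, b in zip(row_c, row_t))
    # Step 3: ratio of coincident edge pixels to clean-image edge pixels
    # (assumed direction of the ratio).
    total = sum(sum(row) for row in e_clean)
    return kept / total if total else 0.0


# Usage: a 6x6 image with a vertical step edge, compared against itself
# and against a featureless image.
step = [[0, 0, 0, 10, 10, 10] for _ in range(6)]
flat = [[0] * 6 for _ in range(6)]
print(efkr(step, step), efkr(step, flat))
```

Any of the other detectors named in step 1 could replace `sobel_edges`, as long as it returns a binary map of the same size for both images.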

4. Experiments and Results

4.1. Dataset

4.2. Experimental Settings

4.3. Experimental Results

4.3.1. Results on Synthetic Images

4.3.2. Results on Real SAR Images

4.3.3. Runtime Comparisons

5. Discussion

5.1. Choice of Scales

5.2. Loss Function

6. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

References

- Ranjani, J.J.; Thiruvengadam, S. Dual-tree complex wavelet transform based SAR despeckling using interscale dependence. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2723–2731.

- Ahishali, M.; Kiranyaz, S.; Ince, T.; Gabbouj, M. Dual and Single Polarized SAR Image Classification Using Compact Convolutional Neural Networks. Remote Sens. 2019, 11, 1340.

- Jun, S.; Long, M.; Xiaoling, Z. Streaming BP for non-linear motion compensation SAR imaging based on GPU. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2035–2050.

- Tang, X.; Zhang, X.; Shi, J.; Wei, S.; Tian, B. Ground Moving Target 2-D Velocity Estimation and Refocusing for Multichannel Maneuvering SAR with Fixed Acceleration. Sensors 2019, 19, 3695.

- Lee, J.S.; Wen, J.H.; Ainsworth, T.L.; Chen, K.S.; Chen, A.J. Improved sigma filter for speckle filtering of SAR imagery. IEEE Trans. Geosci. Remote Sens. 2008, 47, 202–213.

- Yue, D.X.; Xu, F.; Jin, Y.Q. SAR despeckling neural network with logarithmic convolutional product model. Int. J. Remote Sens. 2018, 39, 7483–7505.

- Xie, H.; Pierce, L.E.; Ulaby, F.T. Statistical properties of logarithmically transformed speckle. IEEE Trans. Geosci. Remote Sens. 2002, 40, 721–727.

- Lee, J.S.; Hoppel, K.W.; Mango, S.A.; Miller, A.R. Intensity and phase statistics of multilook polarimetric and interferometric SAR imagery. IEEE Trans. Geosci. Remote Sens. 1994, 32, 1017–1028.

- Lattari, F.; Gonzalez Leon, B.; Asaro, F.; Rucci, A.; Prati, C.; Matteucci, M. Deep Learning for SAR Image Despeckling. Remote Sens. 2019, 11, 1532.

- Lopes, A.; Touzi, R.; Nezry, E. Adaptive speckle filters and scene heterogeneity. IEEE Trans. Geosci. Remote Sens. 1990, 28, 992–1000.

- Frost, V.S.; Stiles, J.A.; Shanmugan, K.S.; Holtzman, J.C. A model for radar images and its application to adaptive digital filtering of multiplicative noise. IEEE Trans. Pattern Anal. Mach. Intell. 1982, PAMI-4, 157–166.

- Kuan, D.T.; Sawchuk, A.A.; Strand, T.C.; Chavel, P. Adaptive noise smoothing filter for images with signal-dependent noise. IEEE Trans. Pattern Anal. Mach. Intell. 1985, PAMI-7, 165–177.

- Lopes, A.; Nezry, E.; Touzi, R.; Laur, H. Structure detection and statistical adaptive speckle filtering in SAR images. Int. J. Remote Sens. 1993, 14, 1735–1758.

- Kaur, L.; Gupta, S.; Chauhan, R. Image Denoising Using Wavelet Thresholding. ICVGIP Indian Conf. Comput. Vision Graph. Image Process. 2002, 2, 16–18.

- Cohen, A.; Daubechies, I.; Feauveau, J.C. Biorthogonal bases of compactly supported wavelets. Commun. Pure Appl. Math. 1992, 45, 485–560.

- Donovan, G.C.; Geronimo, J.S.; Hardin, D.P.; Massopust, P.R. Construction of orthogonal wavelets using fractal interpolation functions. SIAM J. Math. Anal. 1996, 27, 1158–1192.

- Xie, H.; Pierce, L.E.; Ulaby, F.T. SAR speckle reduction using wavelet denoising and Markov random field modeling. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2196–2212.

- Candes, E.J.; Donoho, D.L. Curvelets: A Surprisingly Effective Nonadaptive Representation for Objects with Edges; Technical Report; Stanford University, Department of Statistics: Stanford, CA, USA, 2000.

- Meyer, F.G.; Coifman, R.R. Brushlets: A tool for directional image analysis and image compression. Appl. Comput. Harmon. Anal. 1997, 4, 147–187.

- Do, M.N.; Vetterli, M. The contourlet transform: an efficient directional multiresolution image representation. IEEE Trans. Image Process. 2005, 14, 2091–2106.

- Buades, A.; Coll, B.; Morel, J.M. A non-local algorithm for image denoising. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 2, pp. 60–65.

- Parrilli, S.; Poderico, M.; Angelino, C.V.; Verdoliva, L. A nonlocal SAR image denoising algorithm based on LLMMSE wavelet shrinkage. IEEE Trans. Geosci. Remote Sens. 2011, 50, 606–616.

- Deledalle, C.A.; Denis, L.; Tupin, F.; Reigber, A.; Jäger, M. NL-SAR: A unified nonlocal framework for resolution-preserving (Pol)(In) SAR denoising. IEEE Trans. Geosci. Remote Sens. 2014, 53, 2021–2038.

- Kervrann, C.; Boulanger, J.; Coupé, P. Bayesian non-local means filter, image redundancy and adaptive dictionaries for noise removal. In Proceedings of the International Conference on Scale Space and Variational Methods in Computer Vision, Ischia, Italy, 30 May–2 June 2007; pp. 520–532.

- Wang, P.; Zhang, H.; Patel, V.M. SAR image despeckling using a convolutional neural network. IEEE Signal Process. Lett. 2017, 24, 1763–1767.

- Bai, Y.C.; Zhang, S.; Chen, M.; Pu, Y.F.; Zhou, J.L. A Fractional Total Variational CNN Approach for SAR Image Despeckling. In Proceedings of the International Conference on Intelligent Computing, Bengaluru, India, 6 July 2018; pp. 431–442.

- Zhang, Q.; Yuan, Q.; Li, J.; Yang, Z.; Ma, X. Learning a dilated residual network for SAR image despeckling. Remote Sens. 2018, 10, 196.

- Kaplan, L.M. Analysis of multiplicative speckle models for template-based SAR ATR. IEEE Trans. Aerosp. Electron. Syst. 2001, 37, 1424–1432.

- Oliver, C.; Quegan, S. Understanding Synthetic Aperture Radar Images; SciTech Publishing: Noida, India, 2004.

- Wang, C.; Shi, J.; Yang, X.; Zhou, Y.; Wei, S.; Li, L.; Zhang, X. Geospatial Object Detection via Deconvolutional Region Proposal Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3014–3027.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Xingjian, S.; Chen, Z.; Wang, H.; Yeung, D.Y.; Wong, W.K.; Woo, W.C. Convolutional LSTM network: A machine learning approach for precipitation nowcasting. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2015; pp. 802–810.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chen, Q.; Koltun, V. Photographic image synthesis with cascaded refinement networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 1511–1520.

- Srivastava, R.K.; Greff, K.; Schmidhuber, J. Highway networks. arXiv 2015, arXiv:1505.00387.

- He, K.; Sun, J. Convolutional neural networks at constrained time cost. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Santiago, Chile, 7–13 December 2015; pp. 5353–5360.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Dumoulin, V.; Visin, F. A guide to convolution arithmetic for deep learning. arXiv 2016, arXiv:1603.07285.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Santiago, Chile, 7–13 December 2015; pp. 3431–3440.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

- Odena, A.; Dumoulin, V.; Olah, C. Deconvolution and checkerboard artifacts. Distill 2016, 1, e3.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1874–1883.

- Goodman, J.W. Statistical properties of laser speckle patterns. In Laser Speckle and Related Phenomena; Springer: Berlin/Heidelberg, Germany, 1975; pp. 9–75.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: from error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Anfinsen, S.N.; Doulgeris, A.P.; Eltoft, T. Estimation of the equivalent number of looks in polarimetric synthetic aperture radar imagery. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3795–3809.

- Sobel, I. History and definition of the Sobel operator. Retrieved World Wide Web. 2014. Available online: https://www.researchgate.net/publication/239398674 (accessed on 23 October 2019).

- Bao, P.; Zhang, L.; Wu, X. Canny edge detection enhancement by scale multiplication. IEEE Trans. Pattern Anal. Mach. Intell. 2005, 27, 1485–1490.

- Dim, J.R.; Takamura, T. Alternative approach for satellite cloud classification: edge gradient application. Adv. Meteorol. 2013, 2013, 584816.

- Davis, L.S. A survey of edge detection techniques. Comput. Graph. Image Process. 1975, 4, 248–270.

- Tareen, S.A.K.; Saleem, Z. A comparative analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the IEEE 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–10.

- Yang, Y.; Newsam, S. Bag-of-visual-words and spatial extensions for land-use classification. In Proceedings of the 18th SIGSPATIAL International Conference on Advances in Geographic Information Systems, San Jose, CA, USA, 2–5 November 2010; pp. 270–279.

- Couturier, R.; Perrot, G.; Salomon, M. Image Denoising Using a Deep Encoder-Decoder Network with Skip Connections. In Proceedings of the International Conference on Neural Information Processing, Siem Reap, Cambodia, 13–16 December 2018; pp. 554–565.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Kaplan, A.; Avraham, T.; Lindenbaum, M. Interpreting the ratio criterion for matching SIFT descriptors. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–16 October 2016; pp. 697–712.

| Classes | Index | SAR-BM3D | ID-CNN | RED-Net | SS-Net | MSR-Net |
|---|---|---|---|---|---|---|
| building | PSNR | 20.343 ± 0.043 | 21.639 ± 0.073 | 22.321 ± 0.065 | 22.467 ± 0.039 | 22.768 ± 0.052 |
| building | SSIM | 0.579 ± 0.005 | 0.599 ± 0.002 | 0.672 ± 0.005 | 0.663 ± 0.002 | 0.684 ± 0.004 |
| building | EFKR | 0.3872 ± 0.061 | 0.3888 ± 0.058 | 0.5131 ± 0.053 | 0.4950 ± 0.040 | 0.5322 ± 0.049 |
| freeway | PSNR | 22.478 ± 0.081 | 23.17 ± 0.046 | 25.157 ± 0.033 | 25.255 ± 0.057 | 25.526 ± 0.064 |
| freeway | SSIM | 0.581 ± 0.002 | 0.536 ± 0.003 | 0.662 ± 0.003 | 0.663 ± 0.048 | 0.680 ± 0.002 |
| freeway | EFKR | 0.3046 ± 0.052 | 0.3376 ± 0.061 | 0.5071 ± 0.061 | 0.4929 ± 0.064 | 0.5065 ± 0.057 |
| airplane | PSNR | 21.351 ± 0.045 | 24.006 ± 0.058 | 23.97 ± 0.062 | 24.166 ± 0.046 | 24.433 ± 0.049 |
| airplane | SSIM | 0.602 ± 0.004 | 0.641 ± 0.003 | 0.638 ± 0.001 | 0.636 ± 0.002 | 0.649 ± 0.005 |
| airplane | EFKR | 0.3048 ± 0.069 | 0.2965 ± 0.073 | 0.4929 ± 0.063 | 0.4800 ± 0.058 | 0.5123 ± 0.065 |

| Classes | Index | SAR-BM3D | ID-CNN | RED-Net | SS-Net | MSR-Net |
|---|---|---|---|---|---|---|
| building | PSNR | 21.789 ± 0.046 | 24.319 ± 0.101 | 24.366 ± 0.059 | 24.279 ± 0.032 | 24.370 ± 0.038 |
| building | SSIM | 0.694 ± 0.003 | 0.721 ± 0.005 | 0.737 ± 0.006 | 0.722 ± 0.001 | 0.735 ± 0.005 |
| building | EFKR | 0.5029 ± 0.059 | 0.5459 ± 0.057 | 0.5646 ± 0.050 | 0.5742 ± 0.0423 | 0.6021 ± 0.051 |
| freeway | PSNR | 24.351 ± 0.059 | 26.008 ± 0.051 | 26.821 ± 0.063 | 26.734 ± 0.030 | 26.893 ± 0.049 |
| freeway | SSIM | 0.645 ± 0.003 | 0.670 ± 0.002 | 0.718 ± 0.005 | 0.711 ± 0.003 | 0.722 ± 0.003 |
| freeway | EFKR | 0.4897 ± 0.061 | 0.5019 ± 0.060 | 0.5469 ± 0.057 | 0.5511 ± 0.065 | 0.5608 ± 0.051 |
| airplane | PSNR | 23.261 ± 0.041 | 25.747 ± 0.067 | 25.849 ± 0.050 | 25.905 ± 0.046 | 26.046 ± 0.064 |
| airplane | SSIM | 0.657 ± 0.003 | 0.677 ± 0.005 | 0.691 ± 0.004 | 0.686 ± 0.002 | 0.693 ± 0.005 |
| airplane | EFKR | 0.4976 ± 0.061 | 0.5149 ± 0.058 | 0.5484 ± 0.056 | 0.5728 ± 0.039 | 0.5871 ± 0.055 |

| Classes | Index | SAR-BM3D | ID-CNN | RED-Net | SS-Net | MSR-Net |
|---|---|---|---|---|---|---|
| building | PSNR | 23.851 ± 0.071 | 26.004 ± 0.055 | 25.685 ± 0.043 | 25.989 ± 0.064 | 26.026 ± 0.048 |
| building | SSIM | 0.752 ± 0.003 | 0.780 ± 0.002 | 0.769 ± 0.05 | 0.774 ± 0.004 | 0.782 ± 0.004 |
| building | EFKR | 0.5992 ± 0.065 | 0.6191 ± 0.055 | 0.6305 ± 0.050 | 0.6261 ± 0.0525 | 0.6476 ± 0.060 |
| freeway | PSNR | 26.374 ± 0.081 | 27.738 ± 0.049 | 27.785 ± 0.068 | 28.027 ± 0.053 | 28.111 ± 0.057 |
| freeway | SSIM | 0.714 ± 0.003 | 0.740 ± 0.006 | 0.751 ± 0.006 | 0.753 ± 0.004 | 0.757 ± 0.003 |
| freeway | EFKR | 0.5432 ± 0.041 | 0.5809 ± 0.053 | 0.5990 ± 0.052 | 0.5890 ± 0.057 | 0.5954 ± 0.048 |
| airplane | PSNR | 25.31 ± 0.064 | 27.438 ± 0.051 | 27.161 ± 0.044 | 27.404 ± 0.061 | 27.443 ± 0.042 |
| airplane | SSIM | 0.71 ± 0.005 | 0.731 ± 0.004 | 0.720 ± 0.004 | 0.728 ± 0.005 | 0.732 ± 0.002 |
| airplane | EFKR | 0.5872 ± 0.057 | 0.6039 ± 0.047 | 0.6113 ± 0.053 | 0.6187 ± 0.038 | 0.6293 ± 0.051 |

| Classes | Index | SAR-BM3D | ID-CNN | RED-Net | SS-Net | MSR-Net |
|---|---|---|---|---|---|---|
| building | PSNR | 24.979 ± 0.060 | 26.891 ± 0.043 | 26.879 ± 0.056 | 26.929 ± 0.071 | 26.942 ± 0.052 |
| building | SSIM | 0.781 ± 0.004 | 0.805 ± 0.0002 | 0.805 ± 0.004 | 0.802 ± 0.005 | 0.806 ± 0.003 |
| building | EFKR | 0.6184 ± 0.051 | 0.6441 ± 0.049 | 0.6528 ± 0.047 | 0.6596 ± 0.054 | 0.6664 ± 0.058 |
| freeway | PSNR | 27.444 ± 0.042 | 28.643 ± 0.059 | 28.905 ± 0.047 | 28.787 ± 0.066 | 28.893 ± 0.050 |
| freeway | SSIM | 0.747 ± 0.003 | 0.768 ± 0.006 | 0.782 ± 0.007 | 0.776 ± 0.004 | 0.778 ± 0.005 |
| freeway | EFKR | 0.5975 ± 0.056 | 0.6130 ± 0.053 | 0.6218 ± 0.056 | 0.6247 ± 0.067 | 0.6197 ± 0.051 |
| airplane | PSNR | 26.463 ± 0.053 | 28.362 ± 0.64 | 28.352 ± 0.078 | 28.349 ± 0.059 | 28.407 ± 0.065 |
| airplane | SSIM | 0.737 ± 0.005 | 0.756 ± 0.003 | 0.758 ± 0.003 | 0.754 ± 0.005 | 0.756 ± 0.006 |
| airplane | EFKR | 0.6008 ± 0.059 | 0.6275 ± 0.057 | 0.6432 ± 0.052 | 0.6465 ± 0.052 | 0.6433 ± 0.053 |

| Data | Original | SAR-BM3D | ID-CNN | RED-Net | SS-Net | MSR-Net |
|---|---|---|---|---|---|---|
| region1 | 3 | 51 | 104 | 317 | 394 | 540 |
| region2 | 51 | 195 | 173 | 413 | 2906 | 644 |
| region3 | 3 | 80 | 101 | 333 | 339 | 622 |
| region4 | 4 | 99 | 95 | 235 | 369 | 560 |

| Method | fp1 | fp2 | FPKR (r = 0.25) | FPKR (r = 0.30) | FPKR (r = 0.35) |
|---|---|---|---|---|---|
| original | 5637 | 4531 | 0.0004 (2) | 0.0077 (35) | 0.0362 (164) |
| SAR-BM3D | 4554 | 5947 | 0.0046 (21) | 0.0212 (110) | 0.0894 (407) |
| ID-CNN | 3233 | 3981 | 0.0049 (16) | 0.0294 (95) | 0.1148 (371) |
| RED-Net | 3636 | 4644 | 0.0055 (20) | 0.0292 (106) | 0.0976 (355) |
| SS-Net | 3429 | 4357 | 0.0052 (18) | 0.0286 (98) | 0.1155 (396) |
| MSR-Net | 3080 | 4216 | 0.0071 (22) | 0.0425 (106) | 0.0981 (302) |

| Method | fp1 | fp2 | FPKR (r = 0.25) | FPKR (r = 0.30) | FPKR (r = 0.35) |
|---|---|---|---|---|---|
| original | 4511 | 3476 | 0.0012 (4) | 0.0118 (41) | 0.0437 (152) |
| SAR-BM3D | 3878 | 5289 | 0.0044 (17) | 0.0273 (106) | 0.0952 (369) |
| ID-CNN | 2021 | 2533 | 0.0124 (25) | 0.0574 (116) | 0.1366 (267) |
| RED-Net | 2137 | 2797 | 0.0140 (30) | 0.0543 (116) | 0.1526 (326) |
| MSR-Net | 2021 | 2533 | 0.0166 (33) | 0.0674 (134) | 0.1579 (314) |

| Size | SAR-BM3D | ID-CNN | RED-Net | SS-Net | MSR-Net |
|---|---|---|---|---|---|
| | 12.79 | 0.448 | 2.241 | 0.339 | 0.586 |
| | 52.813 | 1.562 | 8.943 | 1.342 | 2.375 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhou, Y.; Shi, J.; Yang, X.; Wang, C.; Kumar, D.; Wei, S.; Zhang, X. Deep Multi-Scale Recurrent Network for Synthetic Aperture Radar Images Despeckling. Remote Sens. 2019, 11, 2462. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11212462
