An Efficient Encoding Voxel-Based Segmentation (EVBS) Algorithm Based on Fast Adjacent Voxel Search for Point Cloud Plane Segmentation

Abstract

1. Introduction

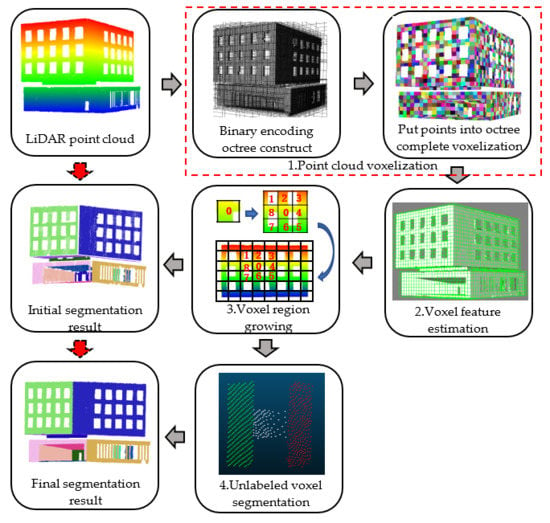

2. Methods

2.1. Point Cloud Voxelization

2.1.1. Voxelization Based on Binary Coding Octree

- Extract the bits of the code that relate to X, i.e., the first bit (value 1) and the fourth bit (value 0). Together they form the binary number 10, which is 2 in decimal, so the X coordinate of the voxel is 2 × 1 = 2 m.

- Similarly, Y = 3 × 1 = 3 m and Z = 3 × 1 = 3 m.
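
The decoding steps above can be sketched in Python. This is a minimal illustration under stated assumptions: each octree level is assumed to contribute one bit triple in the order x, y, z, most significant level first, so the X bits sit at positions 1, 4, 7, ... of the code. The code string `"111011"` and the 1 m voxel size are hypothetical values chosen to reproduce the worked example, and `encode_voxel`, `decode_voxel` and `voxel_coordinates` are illustrative names, not the paper's implementation.

```python
def encode_voxel(ix: int, iy: int, iz: int, depth: int) -> str:
    """Interleave the bits of integer voxel indices into an octree code string.

    Assumed layout: one (x, y, z) bit triple per octree level,
    most significant level first.
    """
    bits = []
    for level in range(depth - 1, -1, -1):
        bits.append(str((ix >> level) & 1))
        bits.append(str((iy >> level) & 1))
        bits.append(str((iz >> level) & 1))
    return "".join(bits)


def decode_voxel(code: str) -> tuple:
    """Recover integer voxel indices by reading every third bit."""
    ix = int(code[0::3], 2)  # bits 1, 4, 7, ... (1-based) belong to X
    iy = int(code[1::3], 2)  # bits 2, 5, 8, ... belong to Y
    iz = int(code[2::3], 2)  # bits 3, 6, 9, ... belong to Z
    return ix, iy, iz


def voxel_coordinates(code: str, voxel_size: float = 1.0) -> tuple:
    """Scale the decoded indices by the voxel edge length (in meters)."""
    ix, iy, iz = decode_voxel(code)
    return ix * voxel_size, iy * voxel_size, iz * voxel_size


print(voxel_coordinates("111011", voxel_size=1.0))  # (2.0, 3.0, 3.0)
```

Because the bits are interleaved level by level, the first two bits of each axis identify the coarse octant and the remaining bits refine it, which is what lets the coordinate of a voxel be read off its code without traversing the tree.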

2.1.2. Searching Adjacent Voxels

2.2. Voxel Feature Estimation

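- (1) The spatial position of a voxel is represented by its centroid $\bar{\mathbf{p}}$, i.e., the average of the coordinates of all $n$ points $\mathbf{p}_i$ in the voxel:

  $$\bar{\mathbf{p}} = \frac{1}{n}\sum_{i=1}^{n}\mathbf{p}_i$$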

- (2) The normal vector of a voxel is calculated by principal component analysis (PCA). First, the covariance matrix $\mathbf{M}$ is computed from the $n$ points $\mathbf{p}_i$ in the voxel:

  $$\mathbf{M} = \frac{1}{n}\sum_{i=1}^{n}(\mathbf{p}_i - \bar{\mathbf{p}})(\mathbf{p}_i - \bar{\mathbf{p}})^{\mathsf{T}}$$

  where $\bar{\mathbf{p}}$ is the centroid of the points in the voxel. $\mathbf{M}$ is a symmetric positive semi-definite matrix, so it can be decomposed into real eigenvalues and orthogonal eigenvectors:

  $$\mathbf{M}\mathbf{v}_j = \lambda_j\mathbf{v}_j, \quad j = 1, 2, 3$$

  where $\lambda_1 \ge \lambda_2 \ge \lambda_3 \ge 0$ are the three eigenvalues of $\mathbf{M}$ and $\mathbf{v}_1, \mathbf{v}_2, \mathbf{v}_3$ are the corresponding eigenvectors. The first eigenvector $\mathbf{v}_1$ points in the direction of maximum variance of the points. The second eigenvector $\mathbf{v}_2$ points in the direction of largest variance orthogonal to $\mathbf{v}_1$, and so on. For plane estimation, $\mathbf{v}_1$ and $\mathbf{v}_2$ span the fitted plane, and $\mathbf{v}_3$, which is orthogonal to both, defines the plane normal [29]. In other words, the eigenvector corresponding to the smallest eigenvalue of $\mathbf{M}$ is taken as the normal vector of the plane fitted to the points in the voxel.

- (3) The residual of plane fitting arises from noise points and from points that do not conform to the plane model. It is calculated from $d_i$, the distance from point $\mathbf{p}_i$ to the fitting plane. For a single voxel, the residual indicates how widely the points are dispersed about the fitting plane, as shown in Figure 4. The smaller the residual of a voxel, the flatter the point cloud within it.
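
A minimal NumPy sketch of the three voxel features follows. It assumes the points of one voxel are given as an (n, 3) array with n ≥ 3, and it takes the residual to be the root-mean-square point-to-plane distance; that definition is an assumption, since the exact residual formula is not reproduced here. `numpy.linalg.eigh` is used because the covariance matrix is symmetric; it returns eigenvalues in ascending order, so the first eigenvector column belongs to the smallest eigenvalue.

```python
import numpy as np


def voxel_features(points):
    """Centroid, PCA normal and plane-fit residual for the points of one voxel.

    points: array-like of shape (n, 3), n >= 3.
    The residual is the RMS point-to-plane distance (assumed definition).
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)                  # (1) spatial position
    centered = pts - centroid
    cov = centered.T @ centered / len(pts)       # 3x3 covariance matrix M (1/n scaling)
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigenvalues sorted ascending
    normal = eigvecs[:, 0]                       # (2) eigenvector of the smallest eigenvalue
    d = centered @ normal                        # signed distances d_i to the fitted plane
    residual = float(np.sqrt(np.mean(d ** 2)))   # (3) RMS residual
    return centroid, normal, residual


centroid, normal, residual = voxel_features(
    [[0, 0, 0.1], [1, 0, -0.1], [0, 1, -0.1], [1, 1, 0.1]]
)
print(centroid, normal, residual)
```

With the 1/n scaling used here, the RMS residual equals the square root of the smallest eigenvalue $\lambda_3$, so under this assumed definition it could also be read directly from the decomposition. The sign of the returned normal is arbitrary, which matters when normals are compared later.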

2.3. Voxel-Based Region Growing

2.3.1. Selection of Seed Voxel

2.3.2. Selection of Growing Conditions

- (1) Smoothness constraint: Equation (7) is the equation of a plane in 3D space, where (A, B, C) is the normal of the plane:

  $$Ax + By + Cz + D = 0 \tag{7}$$

  If the points in two voxels lie on the same plane, the normals of the planes fitted to the two voxels should be approximately parallel. This is called the smoothness constraint, and it is measured by the angle between the normal vectors of the two voxels. As shown in Figure 5a, consider two adjacent voxels A and B whose normals $\mathbf{n}_A$ and $\mathbf{n}_B$ have already been computed during voxel feature estimation (Section 2.2). The angle $\theta$ between them is obtained from their dot product, as shown in Equations (8) and (9):

  $$\mathbf{n}_A \cdot \mathbf{n}_B = \lVert\mathbf{n}_A\rVert\,\lVert\mathbf{n}_B\rVert\cos\theta \tag{8}$$

  $$\theta = \arccos\frac{\mathbf{n}_A \cdot \mathbf{n}_B}{\lVert\mathbf{n}_A\rVert\,\lVert\mathbf{n}_B\rVert} \tag{9}$$

- (2) Continuity constraint: The smoothness constraint alone is insufficient to decide whether the points in two adjacent voxels belong to the same plane. As shown in Figure 6b, the normal vectors of two adjacent voxels can be parallel, satisfying the smoothness constraint, while the points of the two voxels still lie on different planes. Therefore, the parameter D in the plane equation must also satisfy a corresponding condition to ensure that the points in the two voxels belong to the same plane. We call this the continuity constraint: if the points in two voxels belong to the same plane, the two groups of points are continuous, as shown in Figure 6a. To measure the continuity between two voxels, we compute the centroids $\bar{\mathbf{p}}_A$ and $\bar{\mathbf{p}}_B$ of voxels A and B and connect them to obtain the vector $\mathbf{v}_{AB} = \bar{\mathbf{p}}_B - \bar{\mathbf{p}}_A$. The continuity constraint is only tested on voxel pairs that already satisfy the smoothness constraint, so the normal vectors of the two voxels are approximately parallel at this stage. If $\lvert\mathbf{n}_A \cdot \mathbf{v}_{AB}\rvert < \varepsilon$, where $\mathbf{n}_A$ is the unit normal of voxel A, the points in the two voxels are considered continuous and are clustered into the same plane. If $\lvert\mathbf{n}_A \cdot \mathbf{v}_{AB}\rvert \ge \varepsilon$, the points in the two voxels do not satisfy the continuity constraint. Here, $\varepsilon$ is the threshold. This prevents parallel planes that are close to each other from being clustered into the same plane (see Section 4.3).
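
The two growing conditions can be sketched as predicates on a pair of adjacent voxels. This is an illustration under assumptions: `angle_threshold_deg` and `distance_threshold` are hypothetical parameter names, the absolute value of the dot product is taken because PCA normals have an arbitrary sign, and the continuity test is written as the offset of centroid B from the plane through centroid A with normal $\mathbf{n}_A$, which is one reading of the continuity condition rather than a confirmed formula.

```python
import numpy as np


def satisfies_smoothness(n_a, n_b, angle_threshold_deg):
    """Smoothness constraint: the normals of voxels A and B are nearly parallel."""
    n_a = np.asarray(n_a, dtype=float)
    n_b = np.asarray(n_b, dtype=float)
    # abs(): PCA normals have an arbitrary sign, so n and -n are treated alike (assumption)
    cos_theta = abs(np.dot(n_a, n_b)) / (np.linalg.norm(n_a) * np.linalg.norm(n_b))
    theta_deg = np.degrees(np.arccos(np.clip(cos_theta, 0.0, 1.0)))
    return bool(theta_deg < angle_threshold_deg)


def satisfies_continuity(c_a, c_b, n_a, distance_threshold):
    """Continuity constraint (assumed form): the vector joining the centroids
    has only a small component along the unit normal of voxel A."""
    c_a = np.asarray(c_a, dtype=float)
    c_b = np.asarray(c_b, dtype=float)
    n_a = np.asarray(n_a, dtype=float)
    n_unit = n_a / np.linalg.norm(n_a)
    v_ab = c_b - c_a  # vector from centroid A to centroid B
    return bool(abs(np.dot(n_unit, v_ab)) < distance_threshold)


def can_merge(c_a, n_a, c_b, n_b, angle_threshold_deg, distance_threshold):
    """Apply smoothness first, then continuity, as in the growing conditions."""
    return (satisfies_smoothness(n_a, n_b, angle_threshold_deg)
            and satisfies_continuity(c_a, c_b, n_a, distance_threshold))


# Two parallel planes 0.2 apart: smooth, but not continuous.
print(can_merge([0, 0, 0], [0, 0, 1], [1, 0, 0.2], [0, 0, 1], 10.0, 0.05))
```

`can_merge` evaluates smoothness before continuity, matching the order in which the constraints are applied; the continuity test only makes sense once the normals are known to be nearly parallel, because the offset along $\mathbf{n}_A$ then measures the gap between two parallel planes.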

2.4. Unlabeled Voxel Segmentation

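| Algorithm 1: Unlabeled voxel segmentation. |
|---|
| Data: octree $O$, coarse segmentation plane set $S$, distance threshold $d_{th}$. |
| Result: the points in the unsegmented voxels are placed into the correct planes. |
| Initialization: place the unsegmented voxels of $O$ into the set $U$. |
| begin: |
| for each voxel $u \in U$ do: find the adjacent voxels $B$ of $u$; |
| for each point $p$ in $u$ do: for each voxel $b \in B$, compute the distance $d$ between $p$ and the plane in $S$ to which $b$ belongs, to find the minimum distance $d_{min}$ and its plane $s$; |
| if $d_{min} < d_{th}$ then insert $p$ into $s$. |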
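
Algorithm 1 can be sketched in Python as follows. The paper locates adjacent voxels through the binary octree codes; here, as a simplifying assumption, voxels are keyed by integer (i, j, k) indices and adjacency is taken as the 26-neighborhood. The dictionaries `voxel_points`, `voxel_label` and `planes`, and the representation of each plane by its coefficients (A, B, C) and D from Equation (7), are hypothetical choices for illustration only.

```python
from itertools import product

import numpy as np


def neighbors_26(key):
    """Integer (i, j, k) keys of the 26 voxels sharing a face, edge or corner with key."""
    i, j, k = key
    return [(i + di, j + dj, k + dk)
            for di, dj, dk in product((-1, 0, 1), repeat=3)
            if (di, dj, dk) != (0, 0, 0)]


def assign_unlabeled(voxel_points, voxel_label, planes, distance_threshold):
    """Sketch of Algorithm 1 (unlabeled voxel segmentation).

    voxel_points: dict voxel key -> (n, 3) array-like of points
    voxel_label:  dict voxel key -> plane id, for voxels labeled by region growing
    planes:       dict plane id -> ((A, B, C), D) with Ax + By + Cz + D = 0
    Returns a dict plane id -> list of points taken from unlabeled voxels.
    """
    added = {pid: [] for pid in planes}
    unlabeled = [key for key in voxel_points if key not in voxel_label]
    for u in unlabeled:
        # Planes owning at least one labeled voxel adjacent to u
        candidates = {voxel_label[b] for b in neighbors_26(u) if b in voxel_label}
        if not candidates:
            continue
        for p in np.asarray(voxel_points[u], dtype=float):
            best_pid, best_d = None, np.inf
            for pid in candidates:
                abc, d_coef = planes[pid]
                abc = np.asarray(abc, dtype=float)
                dist = abs(abc @ p + d_coef) / np.linalg.norm(abc)  # point-to-plane distance
                if dist < best_d:
                    best_pid, best_d = pid, dist
            if best_d < distance_threshold:
                added[best_pid].append(p)
    return added
```

Only planes that own a labeled voxel next to the unlabeled one are considered, so a point is never attached to a distant plane merely because it happens to lie near that plane's infinite extension.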

3. Experiments and Analysis

3.1. Results of Terrestrial Laser Scanner Data

3.2. Results of Mobile Laser Scanner Data

4. Discussion

4.1. Voxel Size Setting

4.2. Curved Point Cloud Surface

4.3. Two Parallel Planes Which are Close to Each Other

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Liu, M. Robotic Online Path Planning on Point Cloud. IEEE Trans. Cybern. 2015, 46, 1217–1228.

- Boulch, A.; Guerry, J.; Le Saux, B.; Audebert, N. SnapNet: 3D point cloud semantic labeling with 2D deep segmentation networks. Comput. Graph. 2018, 71, 189–198.

- Hu, Q.; Wang, S.; Fu, C.; Yu, D.; Wang, W.T. Fine Surveying and 3D Modeling Approach for Wooden Ancient Architecture via Multiple Laser Scanner Integration. Remote Sens. 2016, 8, 270.

- Ma, L.; Favier, R.; Do, L.; Bondarev, E.; De With, P.H.N. Plane segmentation and decimation of point clouds for 3D environment reconstruction. In Proceedings of the IEEE Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 11–14 January 2013; pp. 43–49.

- Xu, Y.; Hoegner, L.; Tuttas, S.; Stilla, U. A voxel- and graph-based strategy for segmenting man-made infrastructures using perceptual grouping laws: Comparison and evaluation. Photogramm. Eng. Remote Sens. 2018, 84, 377–391.

- Rebolj, D.; Pučko, Z.; Babič, N.Č.; Bizjak, M.; Mongus, D. Point cloud quality requirements for Scan-vs-BIM based automated construction progress monitoring. Autom. Constr. 2017, 84, 323–334.

- Xu, Y.; Hoegner, L.; Tuttas, S.; Stilla, U. Voxel- and graph-based point cloud segmentation of 3d scenes using perceptual grouping laws. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 4, 43–50.

- Filin, S. Surface clustering from airborne laser scanning data. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2002, 34, 119–124.

- Lerma, J.; Biosca, J. Segmentation and filtering of laser scanner data for cultural heritage. In Proceedings of the CIPA 2005 XX International Symposium, Torino, Italy, 26 September–1 October 2005; Volume 26.

- Chehata, N.; David, N.; Bretar, F. Lidar data classification using hierarchical k-means clustering. In Proceedings of the ISPRS Congress, Beijing, China, 3–11 July 2008; pp. 325–330.

- Wang, Y.; Hao, W.; Ning, X.; Zhao, M.; Zhao, M.H.; Zhang, J.L.; Shi, Z.H.; Zhang, X.P. Automatic segmentation of urban point clouds based on the Gaussian map. Photogramm. Rec. 2013, 28, 342–361.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Sveier, A.; Kleppe, A.L.; Tingelstad, L.; Egeland, O. Object detection in point clouds using conformal geometric algebra. Adv. Appl. Clifford Algebras 2017, 27, 1961–1976.

- Zeineldin, R.A.; El-Fishawy, N.A. Fast and accurate ground plane detection for the visually impaired from 3D organized point clouds. In Proceedings of the 2016 SAI Computing Conference (SAI), London, UK, 13–15 July 2016; pp. 373–379.

- Torr, P.H.S.; Zisserman, A. MLESAC: A New Robust Estimator with Application to Estimating Image Geometry. Comput. Vis. Image Underst. 2000, 78, 138–156.

- Li, L.; Yang, F.; Zhu, H.; Li, D.; Li, Y.; Tang, L. An improved RANSAC for 3D point cloud plane segmentation based on normal distribution transformation cells. Remote Sens. 2017, 9, 433.

- Schnabel, R.; Wahl, R.; Klein, R. Efficient RANSAC for Point-Cloud Shape Detection. Comput. Graph. Forum 2007, 26, 214–226.

- Besl, P.J.; Jain, R.C. Segmentation through variable-order surface fitting. IEEE Trans. Pattern Anal. Mach. Intell. 1988, 10, 167–192.

- Rabbani, T.; Van Den Heuvel, F.; Vosselman, G. Segmentation of point clouds using smoothness constraint. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2006, 36, 248–253.

- Cai, Z.; Ma, H.; Zhang, L. A Building Detection Method Based on Semi-Suppressed Fuzzy C-Means and Restricted Region Growing Using Airborne LiDAR. Remote Sens. 2019, 11, 848.

- Tovari, D.; Pfeifer, N. Segmentation based robust interpolation-a new approach to laser data filtering. Remote Sens. Spat. Inf. Sci. 2005, 36, 79–84.

- Woo, H.; Kang, E.; Wang, S.; Lee, K. A new segmentation method for point cloud data. Int. J. Mach. Tools Manuf. 2002, 42, 167–178.

- Su, Y.T.; Bethel, J.; Hu, S. Octree-based segmentation for terrestrial LiDAR point cloud data in industrial applications. ISPRS J. Photogramm. Remote Sens. 2016, 113, 59–74.

- Vo, A.V.; Truong-Hong, L.; Laefer, D.F.; Bertolotto, M. Octree-based region growing for point cloud segmentation. ISPRS J. Photogramm. Remote Sens. 2015, 104, 88–100.

- Lin, Y.; Wang, C.; Zhai, D.; Li, W.; Li, J. Toward better boundary preserved supervoxel segmentation for 3D point clouds. ISPRS J. Photogramm. Remote Sens. 2018, 143, 39–47.

- Marx, A.; Chou, Y.H.; Mercy, K.; Windisch, R. A Lightweight, Robust Exploitation System for Temporal Stacks of UAS Data: Use Case for Forward-Deployed Military or Emergency Responders. Drones 2019, 3, 29.

- Jiang, X.T.; Dai, N.; Cheng, X.S.; Zang, C.D.; Guo, B.S. Fast Neighborhood Search of Large-Scale Scattered Point Cloud Based on the Binary-Encoding Octree. Comput.-Aided Des. Comput. Graph. 2018, 30, 80–88.

- Keling, N.; Yusoff, I.M.; Ujang, U.; Lateh, H. Highly Efficient Computer Oriented Octree Data Structure and Neighbours Search in 3D GIS. In Advances in 3D Geoinformation; Springer: Cham, Switzerland, 2017; pp. 285–303.

- Nurunnabi, A.; Belton, D.; West, G. Robust Segmentation in Laser Scanning 3D Point Cloud Data. In Proceedings of the International Conference on Digital Image Computing Techniques and Applications (DICTA), Fremantle, Australia, 3–5 December 2012; pp. 1–8.

- Xu, Y.; Tuttas, S.; Hoegner, L.; Stilla, U. Geometric primitive extraction from point clouds of construction sites using VGS. IEEE Geosci. Remote Sens. Lett. 2017, 14, 424–428.

- Horvat, D.; Žalik, B.; Mongus, D. Context-dependent detection of non-linearly distributed points for vegetation classification in airborne lidar. ISPRS J. Photogramm. Remote Sens. 2016, 116, 1–14.

- Filin, S.; Pfeifer, N. Neighborhood systems for airborne laser data. Photogramm. Eng. Remote Sens. 2005, 71, 743–755.

- Hackel, T.; Savinov, N.; Ladicky, L.; Wegner, J.D.; Schindler, K.; Pollefeys, M. SEMANTIC3D.NET: A new large-scale point cloud classification benchmark. In Proceedings of the ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, Hannover, Germany, 6–9 June 2017; pp. 91–98.

- Vallet, B.; Brédif, M.; Serna, A.; Marcotegui, B.; Paparoditis, N. TerraMobilita/iQmulus urban point cloud analysis benchmark. Comput. Graph. 2015, 49, 126–133.

- Li, Y.; Li, L.; Li, D.; Yang, F.; Liu, Y. A density-based clustering method for urban scene mobile laser scanning data segmentation. Remote Sens. 2017, 9, 331.

- Cabo, C.; Ordoñez, C.; García-Cortés, S.; Martínez, J. An algorithm for automatic detection of pole-like street furniture objects from mobile laser scanner point clouds. ISPRS J. Photogramm. Remote Sens. 2014, 87, 47–56.

- Deschaud, J.E.; Goulette, F. A fast and accurate plane detection algorithm for large noisy point clouds using filtered normals and voxel growing. In Proceedings of the 3D Processing, Visualization and Transmission Conference (3DPVT2010), Paris, France, 17–20 May 2010.

- Feng, C.; Taguchi, Y.; Kamat, V.R. Fast plane extraction in organized point clouds using agglomerative hierarchical clustering. In Proceedings of the 2014 IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China, 31 May–7 June 2014; pp. 6218–6225.

- Liu, P.; Li, Y.; Hu, W.; Ding, X. Segmentation and Reconstruction of Buildings with Aerial Oblique Photography Point Clouds. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-7/W4, 109–112.

- Pan, Y.; Dong, Y.; Wang, D.; Chen, A.; Ye, Z. Three-dimensional reconstruction of structural surface model of heritage bridges using UAV-based photogrammetric point clouds. Remote Sens. 2019, 11, 1204.

- Xu, Y.; Boerner, R.; Yao, W.; Hoegner, L.; Stilla, U. Automated coarse registration of point clouds in 3D urban scenes using voxel based plane constraint. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, IV-2/W4, 185–191.

| ER | 0.034 | - | 25.8 | 0.034 |

| SCRG | - | 0.05 | 25.8 | |

| VGS | 0.1 | - | - | - |

| EVBS | 0.1 | 0.05 | 25.8 | 0.05 |

| Method | Precision | Recall | F1 | Time (s) |
|---|---|---|---|---|
| ER | 0.78 | 0.70 | 0.73 | 329.6 |
| SCRG | 0.51 | 0.53 | 0.52 | 749.3 |
| VGS | 0.80 | 0.62 | 0.70 | 77.3 |
| EVBS | 0.85 | 0.85 | 0.85 | 7.8 |

| Method | Precision | Recall | F1 | Time (s) |
|---|---|---|---|---|
| ER | 0.85 | 0.71 | 0.77 | 249.8 |
| SCRG | 0.66 | 0.29 | 0.40 | 295.1 |
| VGS | 0.86 | 0.57 | 0.69 | 49.3 |
| EVBS | 0.86 | 0.77 | 0.81 | 5.1 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, M.; Wei, P.; Liu, X. An Efficient Encoding Voxel-Based Segmentation (EVBS) Algorithm Based on Fast Adjacent Voxel Search for Point Cloud Plane Segmentation. Remote Sens. 2019, 11, 2727. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11232727


Huang, Ming, Pengcheng Wei, and Xianglei Liu. 2019. "An Efficient Encoding Voxel-Based Segmentation (EVBS) Algorithm Based on Fast Adjacent Voxel Search for Point Cloud Plane Segmentation" Remote Sensing 11, no. 23: 2727. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11232727