4.1. Airborne Laser Scanning vs. Hyperspectral Images

The single-sensor classifications (ALS15, HS15, ALS17, and HS17) confirm the high relevance of both data types, yielding Kappa values between 0.70 and 0.79 (Table 6). These results agree with the findings of other researchers who successfully classified Natura 2000 habitats using only ALS [8,41,42] or only HS data [43,44] with similar accuracies. For the classification of non-forest Natura 2000 habitats, both sensors can therefore be useful, provided that the data sets are correctly matched in terms of spatial resolution (2 m²) and point cloud density (8 pts./m²). Moreover, depending on the year and manner of data collection (MFDF, IF), ALS and HS data alternate in achieving the higher Kappa accuracy (Figure 2). Thus, it is not possible to conclude that either HS or ALS data are more relevant. This has significant practical implications for planning remote sensing data collection for non-forest vegetation classification: while an HS or ALS campaign is being planned and executed, there is no way of telling which single-sensor data source will yield the more accurate classification. We are confident that the parameters and conditions of ALS and HS data collection in the different years were comparable, yet the classification results remain statistically different (Table 6). The Kappa values spanned a range of about 0.09, and the results were not reproduced from one year to the next. This lack of reproducibility is attributed to an unknown variable that determines the performance of remote sensing data.
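Since the whole comparison rests on Kappa values, a minimal sketch of how Cohen's Kappa is derived from a confusion matrix may be helpful. The matrix below is purely hypothetical (it is not taken from Table 6), and the function name `cohen_kappa` is an illustrative choice rather than the code used in the study.

```python
def cohen_kappa(confusion):
    """Cohen's Kappa for a square confusion matrix (rows: reference, columns: predicted)."""
    total = sum(sum(row) for row in confusion)
    # Observed agreement: share of samples on the diagonal.
    observed = sum(confusion[i][i] for i in range(len(confusion))) / total
    # Expected chance agreement from the row and column marginals.
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(col) for col in zip(*confusion)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (observed - expected) / (1.0 - expected)


# Hypothetical 3-class confusion matrix, for illustration only.
example = [
    [40, 5, 5],
    [4, 42, 4],
    [6, 3, 41],
]
print(round(cohen_kappa(example), 2))
```

Kappa corrects the observed agreement for the agreement expected by chance, which is why a difference of 0.09 between two classifications is not directly comparable to a 9 percentage point difference in overall accuracy.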

For the adopted data collection parameters, ALS (8 pts./m²) and HS (GSD = 2 m), the analysis of aerial data collection efficiency (Figure 3) indicates that ALS data collection was approximately 40 per cent more efficient. It should be noted that the speed of sensor data collection depends on technical parameters, which, in turn, depend on technological development [45]. Sensor efficiency can also be compared in terms of the conditions required for aerial data collection. As an active sensor, ALS can be used regardless of lighting conditions or the presence of cloud shadows [46]. For the passive HS sensor, by contrast, lighting conditions, the altitude and azimuth of the sun, and cloud shadows significantly reduce the time window for data collection [47]. Because these conditions determine HS data quality, they significantly limit the time window in which the data can be collected effectively [48].

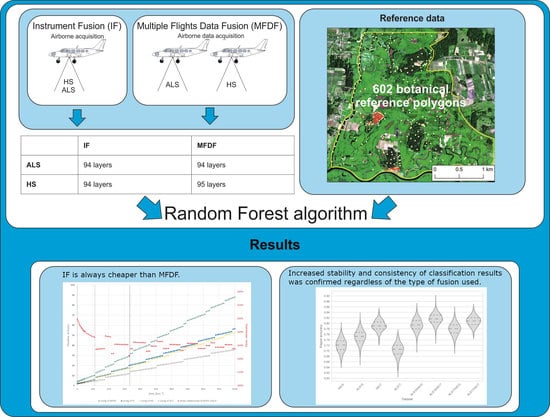

4.2. Instrument Fusion (IF) vs. Multiple Flights Data Fusion (MFDF)

To our knowledge, no studies have so far compared the results of classifications carried out using real data collected through MFDF or IF. Theoretical and experimental considerations, which focus on the influence of the data’s geometric accuracy, suggest that IF data would yield higher accuracy [15,21]. In light of the conclusions from that research, this study achieved subpixel co-registration accuracy for all integrated data in both the MFDF and IF approaches. The results shown in Figure 2 and Table 6 indicate that the Kappa accuracy of the classification of three non-forest Natura 2000 habitats increases when MFDF or IF is used instead of SS data. Data fusion increases Kappa values regardless of the fusion type (IF, MFDF). Similar findings were reported by other researchers who used IF to increase the accuracy of tree species identification [49] or invasive species classification [50] compared to SS data classification.

MFDF fusion of the ALS17HS15 data, whose SS accuracy was inherently low, yielded an average increase in Kappa of 0.10. By comparison, the lowest increase, an average of 0.07, was recorded for MFDF fusion of ALS15HS17, whose SS accuracy was inherently high (Figure 2, Table 6). Similar trends were observed in the classification of tree species, where fusing data of varying accuracy (0.53 using ALS, 0.73 using HS and 0.74 using IF) produced an average Kappa increase of 0.21 [49]. The classifications of non-forest Natura 2000 habitats obtained with data fusion stayed within a Kappa range of 0.04 (Table 6). This confirms that fusion stabilizes the classification results, regardless of the type of data fusion used and of the information load of the SS data, for which Kappa varied within a range of 0.09 (Table 6).

The two top classification results (ALS15HS17 and ALS17HS17) differed by only 0.01, in favor of MFDF over IF (Figure 2, Table 6). It should be noted, however, that the MFDF result (ALS15HS17) was obtained by artificially combining highly accurate SS data from different years. Conversely, when MFDF was executed on SS data from the same year (ALS15HS15), the resulting classification accuracy was below that of IF (ALS17HS17). At the same time, the ALS17HS17 fusion, which combined SS data of significantly differing accuracy, yielded the highest increase in Kappa, 0.16 compared to ALS17 (Figure 2, Table 6). As long as the influence of aerial data collection conditions on SS data relevance is not fully understood, applying data fusion reduces the risk of inconsistent results that fall short of the expected classification accuracy. This finding is inconsistent with that of researchers studying savanna ecosystems [49], who argue, based on their tree classification results, that the “classification with HS achieved results close enough to a fused classification approach and as such negated the need to include costly LiDAR”. Those conclusions, however, were drawn from a single repetition, without considering the influence of data fusion on output stability and without ensuring comparable HS and ALS data parameters.

The differences in classification between MFDF and IF were expected to result from data incoherence caused by changes occurring in the study area between the individual MFDF data collection runs. In the dynamic environment of a river valley, natural habitats can change within days due to varying water conditions or agricultural use, or within 1–2 years due to the growth of expansive species or differing patterns of land use [51]. The 0.01 difference in Kappa between the best MFDF (ALS15HS17) and IF (ALS17HS17) results might suggest that the data coherence provided by simultaneous data collection (IF) matters less than the data relevance of MFDF. However, when the set of reference polygons was prepared for MFDF, samples exhibiting real change between 2015 and 2017 were eliminated. The number of reference polygons consequently dropped by 17% (Table 4), which mitigated any inconsistency in the training and validation data sets at the machine learning stage. The data inconsistency problem nevertheless remained unresolved at the stage of extrapolating the model onto the map, outside the reference polygon areas. Considering this, and given the Kappa difference of only 0.01 between the two top results using MFDF (ALS15HS17) and IF (ALS17HS17), IF classification seems to prevail over MFDF in terms of effectiveness.

The most natural and simple way to apply data fusion is to combine data collected in MFDF [52,53,54]. This kind of data fusion does not require non-standard technical solutions. However, there is evidence that this method of data collection is prone to geometric incoherence [21] in the terrain coverage of the data being fused. It therefore became necessary to identify a solution that mitigates this risk. That solution was IF, which yielded higher Kappa accuracy.

In addition, the cost-effectiveness analysis of data fusion favors IF over MFDF. The costs of collecting aerial data were compared following the assumptions used in similar analyses [55]. It was assumed that the data collection flights account for the highest cost in the remote sensing analysis budget [56] and that the costs of processing and analyzing the aerial remote sensing data are the same for MFDF and IF. Consequently, comparing the data collection flight times of MFDF and IF illustrates the benefit of the IF method. The MFDF data collection time equals the sum of the HS and ALS data collection times, while the same data collected using IF require 106% of the HS data collection time. The time required to collect the data also depends on the size of the studied area: the greater the area, the greater the difference between the times needed by the two data fusion methods. The greatest difference in efficiency between IF and MFDF, nearly 200%, occurs when the collection area allows simultaneous data collection using IF during a single flight. For the sensors used in the study, this was a study area of 110 km². For areas larger than 330 km², the difference in effectiveness between MFDF and IF stabilizes, oscillating (±10%) at around 160%. The efficiency thresholds connected with the size of the studied area depend on attainable airspeed, single flight time, and the selection of sensors with comparable efficiency at the given flight parameters. This is confirmed by similar findings [56] comparing the effectiveness of UAV, airborne, and orbital platforms. This is of particular importance because aerial remote sensing analyses using HS and ALS data fusion are most commonly conducted for areas up to 100 km², e.g., [18,38], and rarely reach 300 km², e.g., [57,58].
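The flight-time relations stated above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the 10-hour HS time are hypothetical, and the reading of "approximately 40 per cent more efficient" as an ALS flight time of the HS time divided by 1.4 is our interpretation, not a figure given in the text. The sketch also ignores the per-flight thresholds (110 km² and 330 km²) discussed above.

```python
# From the text: IF data collection takes 106% of the HS data collection time.
IF_TIME_FACTOR = 1.06


def mfdf_time(t_hs: float, t_als: float) -> float:
    """MFDF: HS and ALS are flown separately, so the flight times add up."""
    return t_hs + t_als


def if_time(t_hs: float) -> float:
    """IF: both sensors are flown together; time is expressed relative to HS time."""
    return IF_TIME_FACTOR * t_hs


def mfdf_to_if_ratio(t_hs: float, t_als: float) -> float:
    """How many times longer MFDF data collection takes than IF."""
    return mfdf_time(t_hs, t_als) / if_time(t_hs)


t_hs = 10.0         # hypothetical HS flight time in hours
t_als = t_hs / 1.4  # ASSUMPTION: "40 per cent more efficient" read as t_hs / 1.4
ratio = mfdf_to_if_ratio(t_hs, t_als)
print(f"MFDF takes {ratio:.2f} times the IF flight time")
```

Under this reading the ratio comes out near 1.6, which is in line with the value of around 160% reported for large areas. The ratio does not depend on the chosen HS time, only on the relative ALS efficiency and the 106% factor.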

The shorter time required for IF aerial data collection reduces the risk of the data collection being interrupted by atmospheric conditions or other force majeure events. Furthermore, IF ensures data consistency with respect to changes of the studied object over time. This is particularly important for areas where vegetation changes dynamically, even within days (due to agricultural use or floods).