Deep Learning Based Fossil-Fuel Power Plant Monitoring in High Resolution Remote Sensing Images: A Comparative Study

Abstract

1. Introduction

2. Related Works

2.1. Deep Learning Based Object Detection

2.2. Deep Learning Based Object Detection in RSIs

2.3. Fossil-Fuel Power Plant Monitoring in RSIs

3. Deep Learning Based Fossil-Fuel Power Plant Monitoring

3.1. Deep Learning Models for Comparative Study

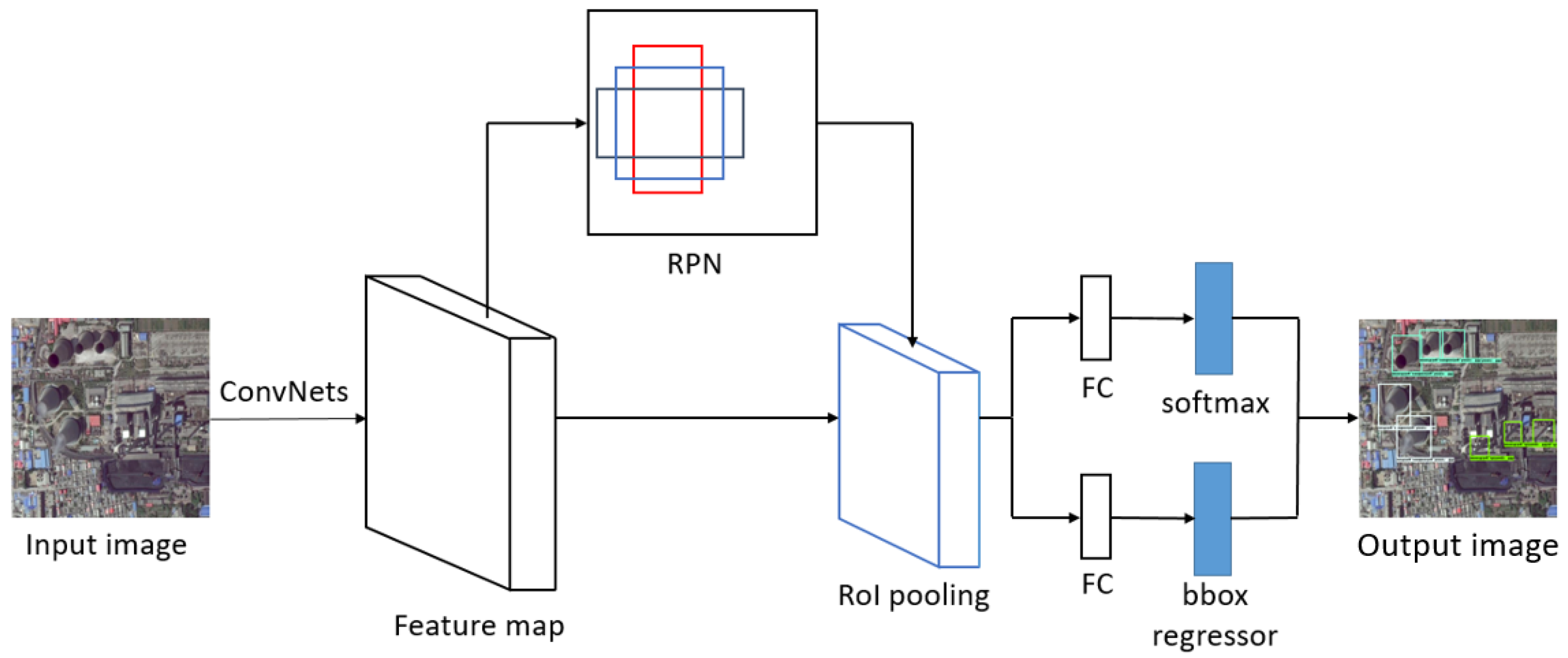

3.1.1. Faster R-CNN

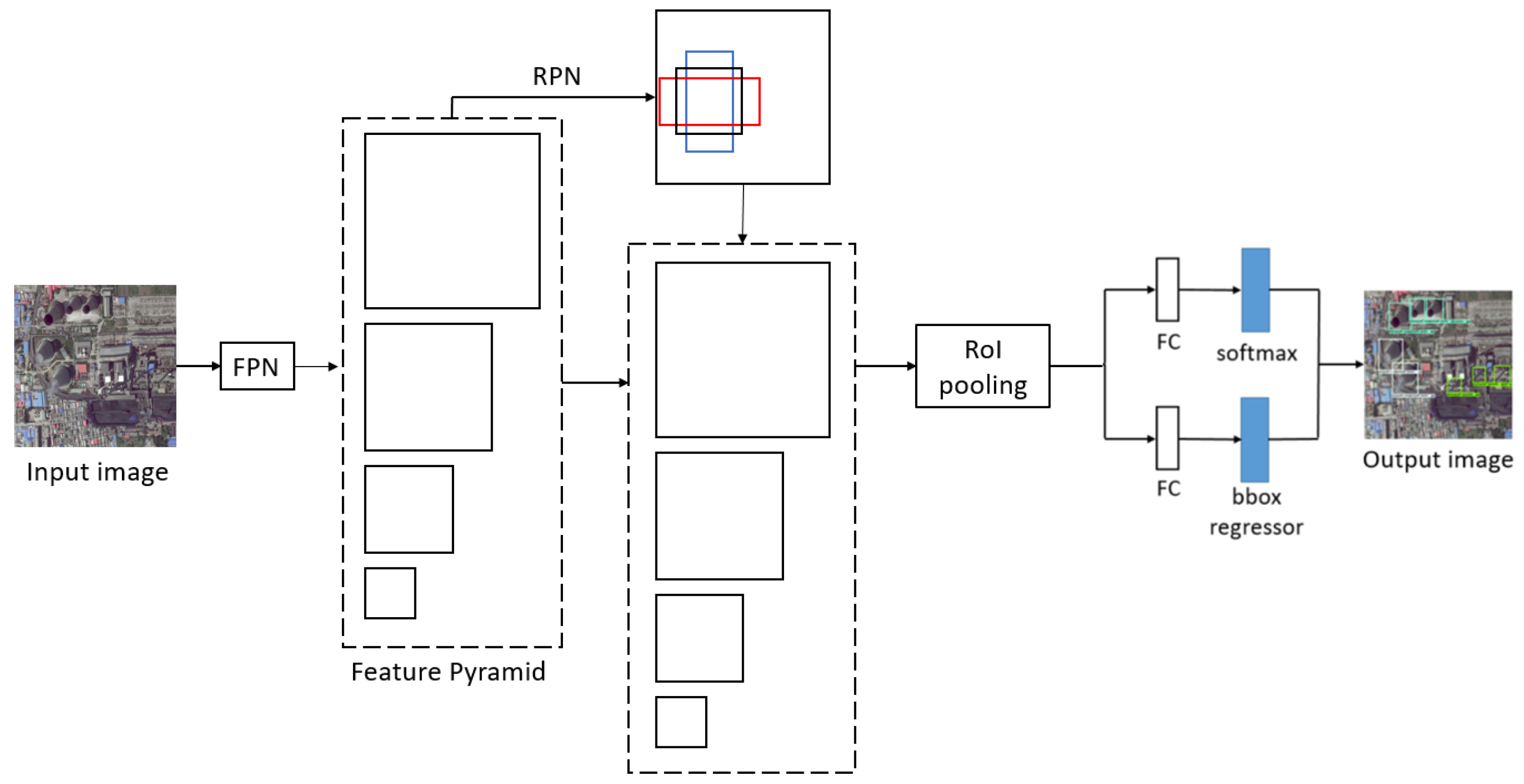

3.1.2. FPN

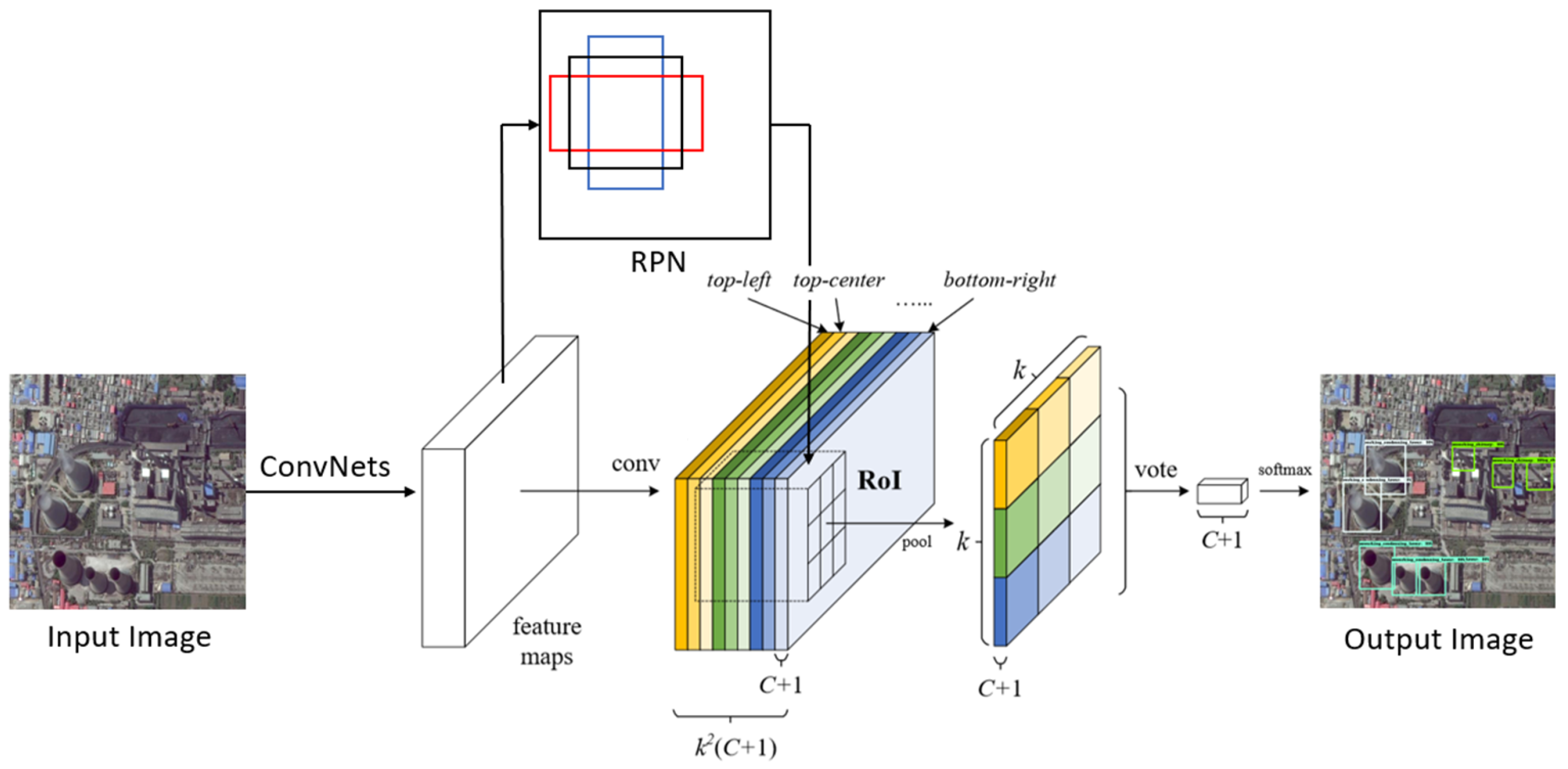

3.1.3. R-FCN

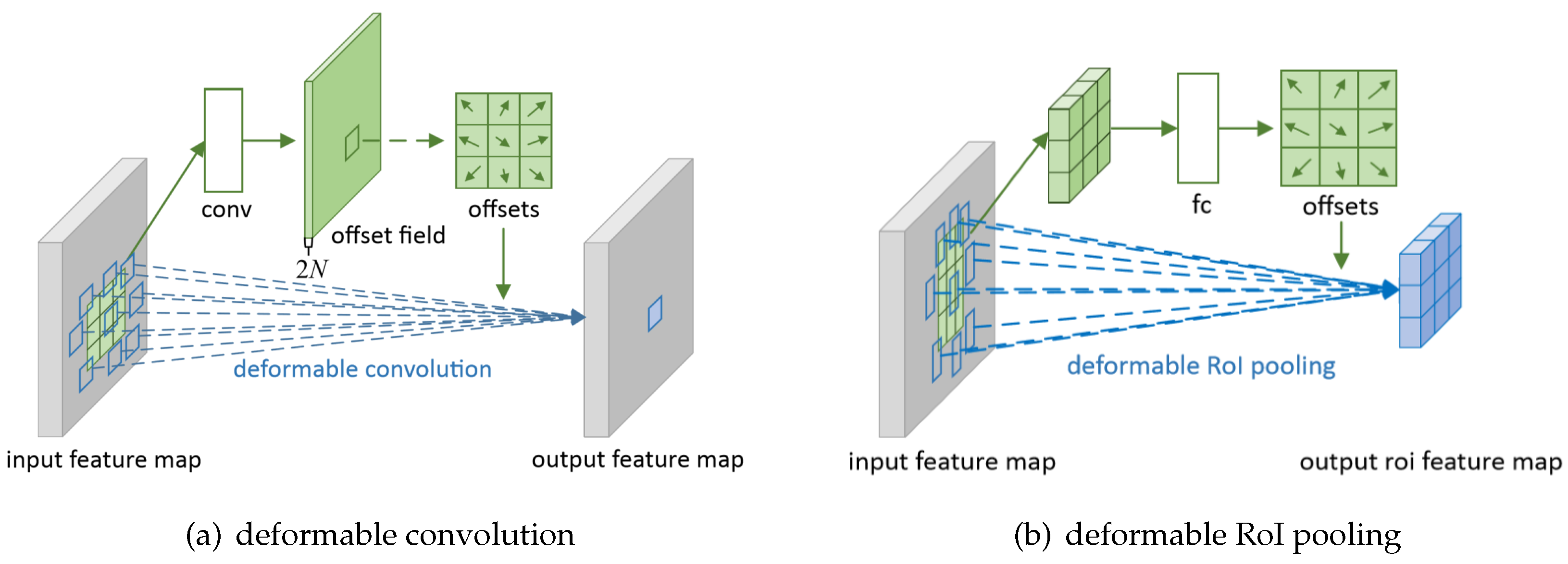

3.1.4. DCN

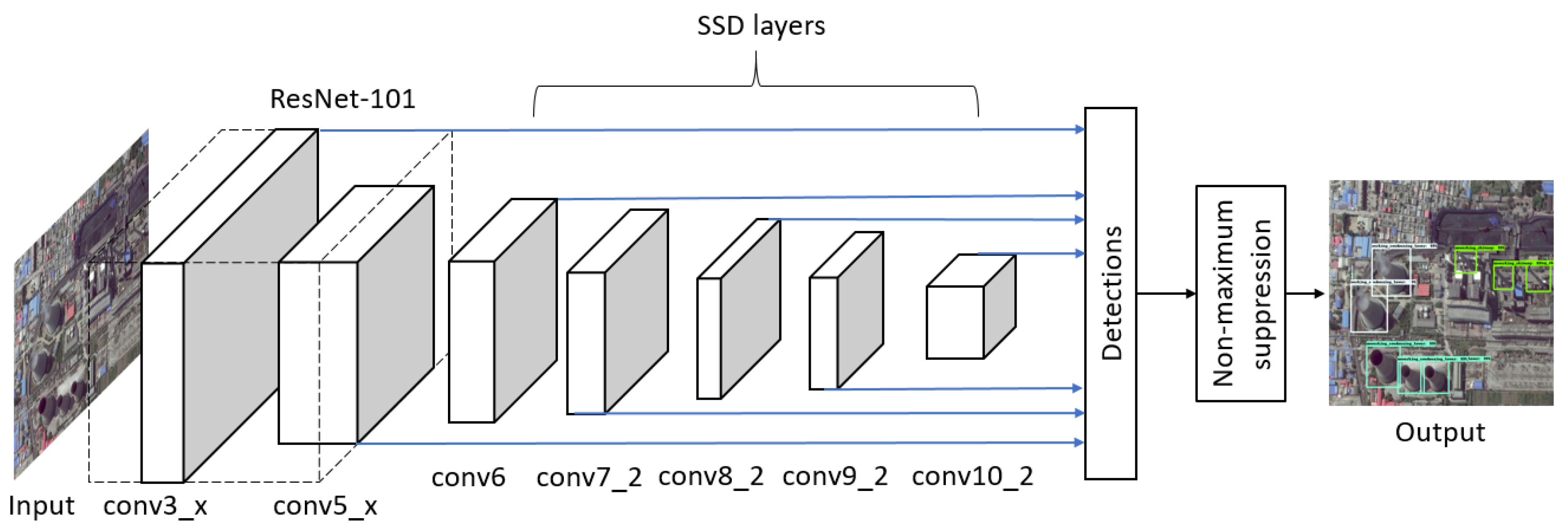

3.1.5. SSD

3.1.6. DSSD

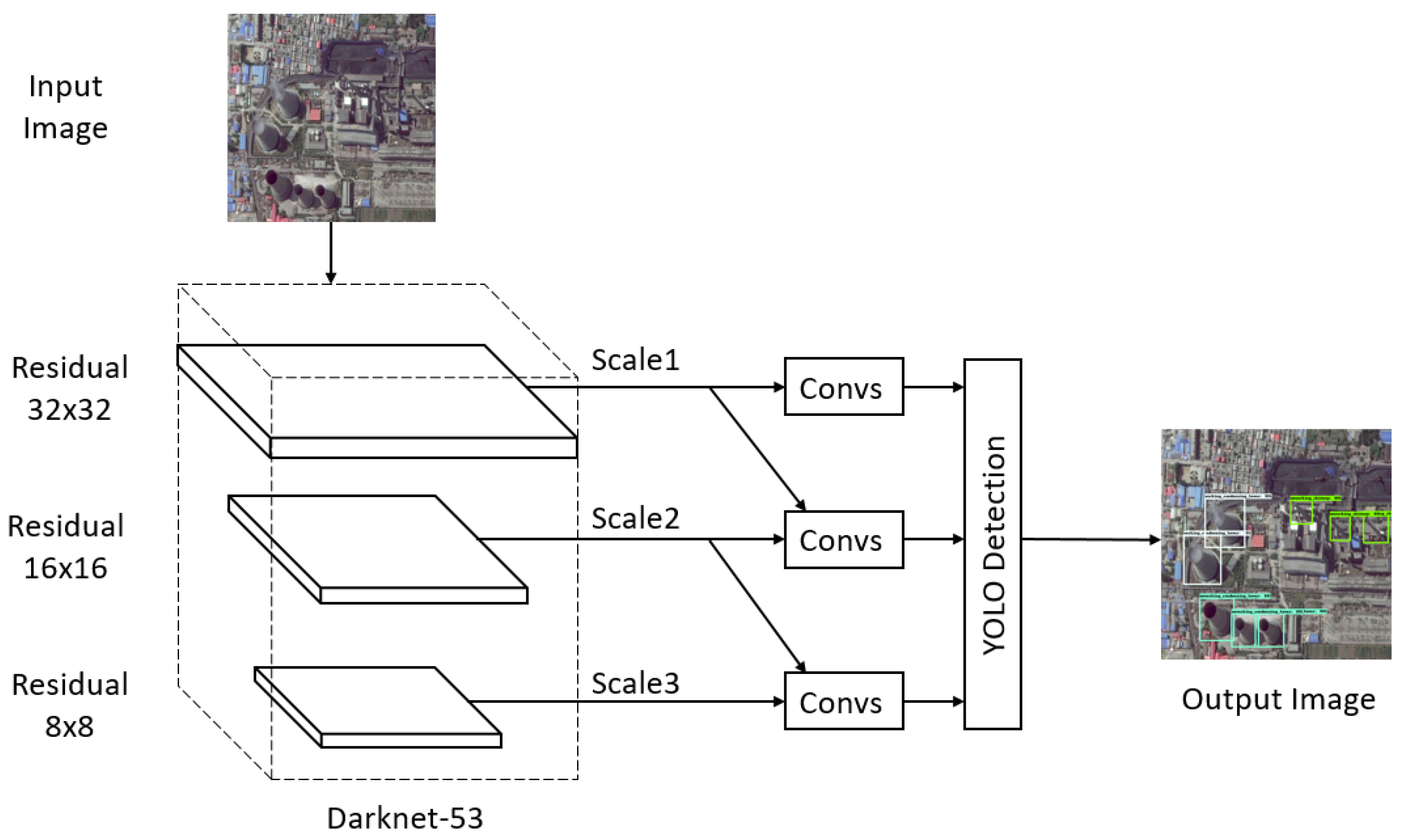

3.1.7. YOLO

3.1.8. RetinaNet

3.2. Implementation Details

3.2.1. Backbone Network

3.2.2. Training Details

4. Experimental Results

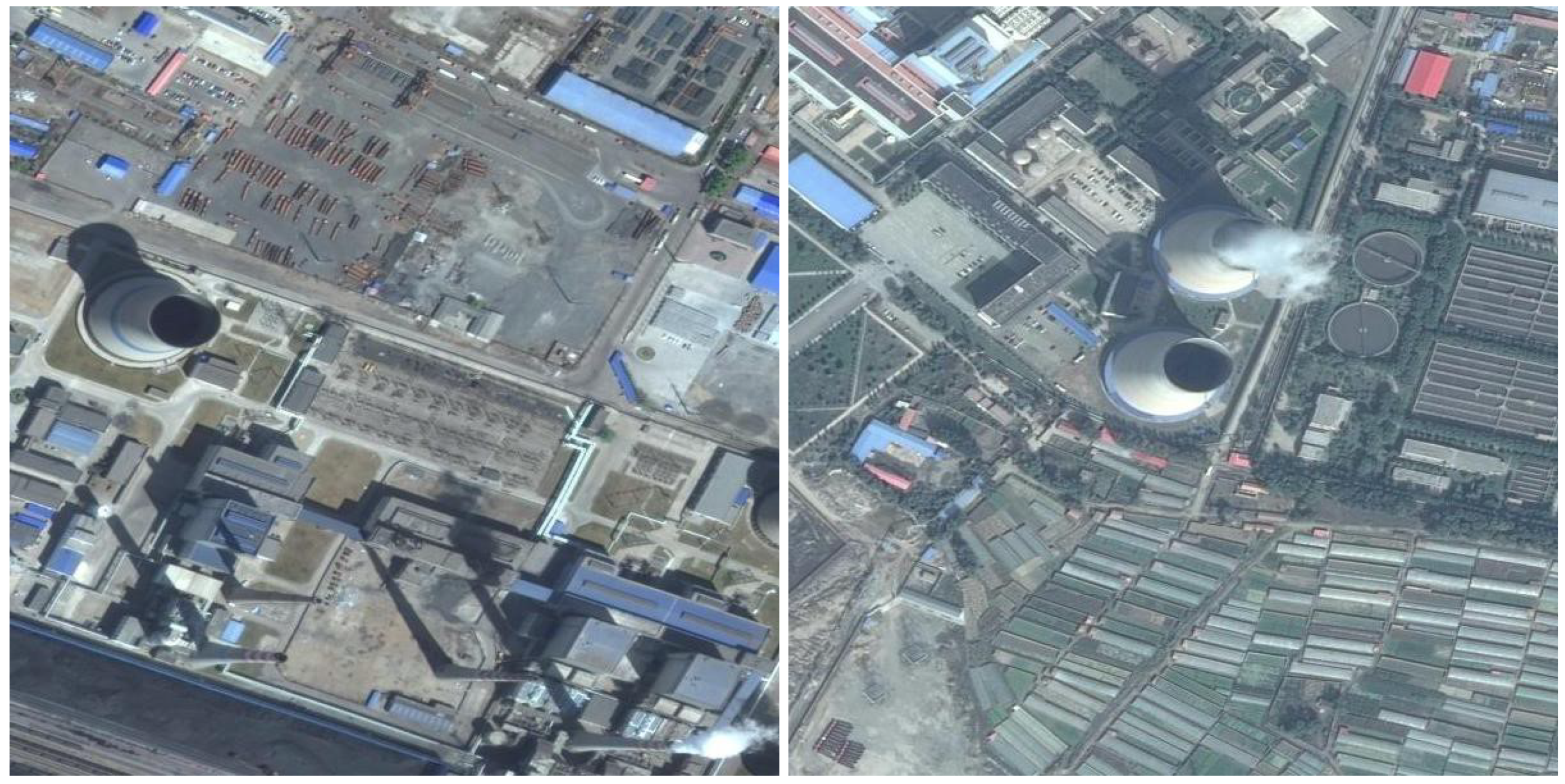

4.1. BUAA-FFPP60 Dataset

4.2. Index for Evaluation

4.3. Training Loss

4.4. Accuracy Comparison

4.5. Model Size and Memory Cost

4.6. Running Time

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- He, X.; Xue, Y.; Guang, J.; Shi, Y.; Xu, H.; Cai, J.; Mei, L.; Li, C. The analysis of the haze event in the North China Plain in 2013. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Quebec City, QC, Canada, 13–18 July 2014; pp. 5002–5005.

- Feng, X.; Li, Q.; Zhu, Y. Bounding the role of domestic heating in haze pollution of Beijing based on satellite and surface observations. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Quebec City, QC, Canada, 13–18 July 2014; pp. 5727–5728.

- Kai, Q.; Hu, M.; Lixin, W.; Rao, L.; Lang, H.; Wang, L.; Yang, B. Satellite remote sensing of aerosol optical depth, SO2 and NO2 over China’s Beijing-Tianjin-Hebei region during 2002–2013. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 5727–5728.

- Yao, Y.; Jiang, Z.; Zhang, H.; Cai, B.; Meng, G.; Zuo, D. Chimney and condensing tower detection based on Faster R-CNN in high resolution remote sensing images. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3329–3332.

- Zhang, F.; Du, B.; Zhang, L.; Xu, M. Weakly supervised learning based on coupled convolutional neural networks for aircraft detection. IEEE Trans. Geosci. Remote Sens. 2016, 5553–5563.

- Cai, B.; Jiang, Z.; Zhang, H.; Yao, Y.; Nie, S. Online Exemplar-Based Fully Convolutional Network for Aircraft Detection in Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1095–1099.

- Zou, Z.; Shi, Z. Ship detection in spaceborne optical image with SVD networks. IEEE Trans. Geosci. Remote Sens. 2016, 5832–5845.

- Yao, Y.; Jiang, Z.; Zhang, H.; Zhao, D.; Cai, B. Ship detection in optical remote sensing images based on deep convolutional neural networks. J. Appl. Remote Sens. 2017, 11, 11–12.

- Yang, X.; Sun, H.; Fu, K.; Yang, J.; Sun, X.; Yan, M.; Guo, Z. Automatic Ship Detection in Remote Sensing Images from Google Earth of Complex Scenes Based on Multiscale Rotation Dense Feature Pyramid Networks. Remote Sens. 2018, 10, 132.

- Zhang, L.; Shi, Z.; Wu, J. A Hierarchical Oil Tank Detector With Deep Surrounding Features for High-Resolution Optical Satellite Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4895–4909.

- Yao, Y.; Jiang, Z.; Zhang, H. Oil tank detection based on salient region and geometric features. Proc. SPIE 2016, 92731G.

- Wang, W.; Zhao, D.; Jiang, Z. Oil Tank Detection via Target-Driven Learning Saliency Model. In Proceedings of the 2017 4th IAPR Asian Conference on Pattern Recognition (ACPR), Nanjing, China, 26–29 November 2017; pp. 156–161.

- Zhu, X.X.; Tuia, D.; Mou, L.; Xia, G.; Zhang, L.; Xu, F.; Fraundorfer, F. Deep Learning in Remote Sensing: A Comprehensive Review and List of Resources. IEEE Geosci. Remote Sens. Mag. 2017, 5, 8–36.

- Cheng, G.; Han, J. A survey on object detection in optical remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 11–28.

- Zou, Z.; Shi, Z. Random Access Memories: A New Paradigm for Target Detection in High Resolution Aerial Remote Sensing Images. IEEE Trans. Image Process. 2018, 27, 1100–1111.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–22 June 2018.

- Ding, J.; Xue, N.; Long, Y.; Xia, G.S.; Lu, Q. Learning RoI Transformer for Detecting Oriented Objects in Aerial Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems 28; Cortes, C., Lawrence, N.D., Lee, D.D., Sugiyama, M., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2015; pp. 91–99.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- Lin, T.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-FCN: Object Detection via Region-based Fully Convolutional Networks. In Advances in Neural Information Processing Systems 29; Lee, D.D., Sugiyama, M., Luxburg, U.V., Guyon, I., Garnett, R., Eds.; Curran Associates, Inc.: Red Hook, NY, USA, 2016; pp. 379–387.

- Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable Convolutional Networks. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 764–773.

- Sermanet, P.; Eigen, D.; Zhang, X.; Mathieu, M.; Fergus, R.; Lecun, Y. OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks. In Proceedings of the ICLR 2014, Banff, AB, Canada, 14–16 April 2014.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Computer Vision–ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 21–37.

- Redmon, J.; Divvala, S.K.; Girshick, R.B.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 779–788.

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional Single Shot Detector. arXiv 2017, arXiv:1701.06659.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Lin, T.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- Xu, Z.; Xu, X.; Wang, L.; Yang, R.; Pu, F. Deformable ConvNet with Aspect Ratio Constrained NMS for Object Detection in Remote Sensing Imagery. Remote Sens. 2017, 9, 1312.

- Ren, Y.; Zhu, C.; Xiao, S. Deformable Faster R-CNN with Aggregating Multi-Layer Features for Partially Occluded Object Detection in Optical Remote Sensing Images. Remote Sens. 2018, 10, 1470.

- Liu, X.; Tian, Y.; Yuan, C.; Zhang, F.; Yang, G. Opium Poppy Detection Using Deep Learning. Remote Sens. 2018, 10, 1886.

- Tao, Y.; Xu, M.; Zhang, F.; Du, B.; Zhang, L. Unsupervised-Restricted Deconvolutional Neural Network for Very High Resolution Remote-Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 6805–6823.

- Chang, Y.L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.Y.; Lee, W.H. Ship Detection Based on YOLOv2 for SAR Imagery. Remote Sens. 2019, 11, 786.

- Wang, Y.; Wang, C.; Zhang, H.; Dong, Y.; Wei, S. Automatic Ship Detection Based on RetinaNet Using Multi-Resolution Gaofen-3 Imagery. Remote Sens. 2019, 11, 531.

- Foody, G.M.; Ling, F.; Boyd, D.S.; Li, X.; Wardlaw, J. Earth Observation and Machine Learning to Meet Sustainable Development Goal 8.7: Mapping Sites Associated with Slavery from Space. Remote Sens. 2019, 11, 266.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

| Model | Backbone | Category | Application in Remote Sensing |
|---|---|---|---|
| Faster R-CNN [18] | ResNet-101 | two-stage | [4,8,38] |
| FPN [22] | ResNet-101 | two-stage | [9] |
| R-FCN [23] | ResNet-101 | two-stage | [6,32] |
| DCN [24] | ResNet-101 | two-stage | [33] |
| SSD [26] | ResNet-101 | one-stage | [34] |
| DSSD [28] | ResNet-101 | one-stage | [35] |
| YOLOv3 [30] | Darknet-53 | one-stage | [36] |
| RetinaNet [31] | ResNet-101 | one-stage | [37] |

| Model | Warm-up Step | Train Step | Initial lr | lr Schedule | Batch Size |
|---|---|---|---|---|---|
| Faster R-CNN [18] | 0 | 72000 | | STEP | 1 |
| FPN [22] | 0 | 60000 | | STEP | 1 |
| R-FCN [23] | 0 | 60000 | | STEP | 1 |
| DCN [24] | 1000 | 60000 | | STEP | 1 |
| SSD [26] | 200 | 5600 | | EXPONENTIAL | 24 |
| DSSD [28] | 0 | 30000 | | STEP | 8 |
| YOLOv3 [30] | 500 | 30000 | | ADAM | 6 |
| RetinaNet [31] | 0 | 30000 | | ADAM | 2 |

| Class | Chimney (Working) | Chimney (Not Working) | Condensing Tower (Working) | Condensing Tower (Not Working) | RSI |
|---|---|---|---|---|---|
| Number in training set (augmented + original) | 198 | 435 | 408 | 426 | 861 |
| Number in testing set (original) | 21 | 36 | 65 | 28 | 31 |
| Total number (augmented + original) | 219 | 471 | 473 | 454 | 892 |
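
The counts in the dataset table above can be cross-checked with a few lines of Python. This is only an illustrative sketch: the names `COLUMNS`, `TRAIN`, `TEST`, `TOTAL`, and `split_summary` are hypothetical, and the test fraction it reports is relative to totals that include augmented training samples, so it is not the split ratio of the original images.

```python
# Counts copied from the dataset table above (instances per class, then images).
COLUMNS = [
    "chimney_working",
    "chimney_not_working",
    "tower_working",
    "tower_not_working",
    "rsi_images",
]
TRAIN = [198, 435, 408, 426, 861]   # augmented + original
TEST = [21, 36, 65, 28, 31]         # original only
TOTAL = [219, 471, 473, 454, 892]   # as reported in the table


def split_summary(train, test):
    """Return a (total, test_fraction) pair for each column."""
    return [(tr + te, te / (tr + te)) for tr, te in zip(train, test)]


if __name__ == "__main__":
    for name, (total, frac) in zip(COLUMNS, split_summary(TRAIN, TEST)):
        print(f"{name:22s} total={total:4d} test share={frac:.3f}")
```

Under these assumptions, each computed total equals the sum of the training and testing rows and matches the reported total row.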

| Model | Chimney Precision | Chimney Recall | Condensing Tower Precision | Condensing Tower Recall |
|---|---|---|---|---|
| Faster R-CNN [18] | 0.7342 | 0.8681 | 0.9293 | 0.9683 |
| FPN [22] | 0.7361 | 0.8526 | 0.9525 | 0.9711 |
| R-FCN [23] | 0.7022 | 0.8092 | 0.9223 | 0.9576 |
| DCN [24] | 0.6469 | 0.7813 | 0.8673 | 0.9287 |
| SSD [26] | 0.6557 | 0.7861 | 0.8661 | 0.9478 |
| DSSD [28] | 0.6432 | 0.7627 | 0.8593 | 0.9341 |
| YOLOv3 [30] | 0.6751 | 0.7892 | 0.8723 | 0.9583 |
| RetinaNet [31] | 0.7254 | 0.8461 | 0.9428 | 0.9731 |
| Yao et al. [4] | 0.6710 | 0.8390 | 0.9080 | 0.9570 |
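
Precision and recall are reported separately in the table above, which makes it awkward to compare models at a glance. One common way to combine them, not used in the table itself and introduced here only as an illustration, is the F1 score (the harmonic mean of precision and recall). The sketch below applies it to the tabulated values; `f1_score`, `PR_TABLE`, and `best_by_f1` are hypothetical names.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall) pairs copied from the table above.
PR_TABLE = {
    "Faster R-CNN": {"chimney": (0.7342, 0.8681), "tower": (0.9293, 0.9683)},
    "FPN": {"chimney": (0.7361, 0.8526), "tower": (0.9525, 0.9711)},
    "R-FCN": {"chimney": (0.7022, 0.8092), "tower": (0.9223, 0.9576)},
    "DCN": {"chimney": (0.6469, 0.7813), "tower": (0.8673, 0.9287)},
    "SSD": {"chimney": (0.6557, 0.7861), "tower": (0.8661, 0.9478)},
    "DSSD": {"chimney": (0.6432, 0.7627), "tower": (0.8593, 0.9341)},
    "YOLOv3": {"chimney": (0.6751, 0.7892), "tower": (0.8723, 0.9583)},
    "RetinaNet": {"chimney": (0.7254, 0.8461), "tower": (0.9428, 0.9731)},
    "Yao et al.": {"chimney": (0.6710, 0.8390), "tower": (0.9080, 0.9570)},
}


def best_by_f1(table, target):
    """Name of the model with the highest F1 for the given target class."""
    return max(table, key=lambda name: f1_score(*table[name][target]))


if __name__ == "__main__":
    for name, row in PR_TABLE.items():
        print(f"{name:12s} chimney F1={f1_score(*row['chimney']):.4f} "
              f"tower F1={f1_score(*row['tower']):.4f}")
```

Because F1 weights precision and recall equally, it is only one possible summary. An application that cares more about missed plants than false alarms would weight recall more heavily.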

| Model | AP of Chimney (Working) | AP of Chimney (Not Working) | AP of Condensing Tower (Working) | AP of Condensing Tower (Not Working) | mAP |
|---|---|---|---|---|---|
| Faster R-CNN [18] | 0.6979 | 0.6602 | 0.9283 | 0.9648 | 0.8128 |
| FPN [22] | 0.6878 | 0.7239 | 0.9245 | 0.9730 | 0.8273 |
| R-FCN [23] | 0.5952 | 0.6786 | 0.9144 | 0.9502 | 0.7846 |
| DCN [24] | 0.5426 | 0.5696 | 0.8672 | 0.9152 | 0.7234 |
| SSD [26] | 0.5323 | 0.6031 | 0.8964 | 0.9510 | 0.7457 |
| DSSD [28] | 0.5336 | 0.6042 | 0.8877 | 0.9149 | 0.7376 |
| YOLOv3 [30] | 0.5532 | 0.5851 | 0.8765 | 0.9396 | 0.7386 |
| RetinaNet [31] | 0.6564 | 0.6725 | 0.9308 | 0.9603 | 0.8075 |
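
The mAP column above can be related to the four per-class AP columns with a short calculation. The sketch below assumes that mAP is the unweighted mean of the four per-class APs. That definition is an assumption, since the text defining the evaluation index is not reproduced here, and `AP_TABLE`, `mean_ap`, and `rank_by_map` are hypothetical names.

```python
# Per-class AP values and reported mAP copied from the table above.
# AP order: chimney working, chimney not working,
#           condensing tower working, condensing tower not working.
AP_TABLE = {
    "Faster R-CNN": ([0.6979, 0.6602, 0.9283, 0.9648], 0.8128),
    "FPN": ([0.6878, 0.7239, 0.9245, 0.9730], 0.8273),
    "R-FCN": ([0.5952, 0.6786, 0.9144, 0.9502], 0.7846),
    "DCN": ([0.5426, 0.5696, 0.8672, 0.9152], 0.7234),
    "SSD": ([0.5323, 0.6031, 0.8964, 0.9510], 0.7457),
    "DSSD": ([0.5336, 0.6042, 0.8877, 0.9149], 0.7376),
    "YOLOv3": ([0.5532, 0.5851, 0.8765, 0.9396], 0.7386),
    "RetinaNet": ([0.6564, 0.6725, 0.9308, 0.9603], 0.8075),
}


def mean_ap(per_class_ap):
    """Unweighted mean of per-class AP values (assumed definition of mAP)."""
    return sum(per_class_ap) / len(per_class_ap)


def rank_by_map(table):
    """Model names sorted by reported mAP, best first."""
    return sorted(table, key=lambda name: table[name][1], reverse=True)


if __name__ == "__main__":
    for name, (aps, reported) in AP_TABLE.items():
        print(f"{name:12s} computed={mean_ap(aps):.4f} reported={reported:.4f}")
    print("ranking:", ", ".join(rank_by_map(AP_TABLE)))
```

Under this assumption the computed mean agrees with the reported mAP to within about 0.003 for every model, and the ranking by reported mAP places FPN first, followed by Faster R-CNN and RetinaNet.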

| Model | Space Occupancy | Memory Cost |
|---|---|---|
| Faster R-CNN [18] | 425.6 MB | 4.61 GB |
| FPN [22] | 464.2 MB | 4.36 GB |
| R-FCN [23] | 443.1 MB | 3.60 GB |
| DCN [24] | 494.6 MB | 0.95 GB |
| SSD [26] | 218.7 MB | 0.86 GB |
| DSSD [28] | 246.6 MB | 1.76 GB |
| YOLOv3 [30] | 235.6 MB | 2.61 GB |
| RetinaNet [31] | 635.1 MB | 3.79 GB |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, H.; Deng, Q. Deep Learning Based Fossil-Fuel Power Plant Monitoring in High Resolution Remote Sensing Images: A Comparative Study. Remote Sens. 2019, 11, 1117. https://0-doi-org.brum.beds.ac.uk/10.3390/rs11091117
