Towards Benthic Habitat 3D Mapping Using Machine Learning Algorithms and Structures from Motion Photogrammetry

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Benthic Cover Field Data

2.3. Methodology

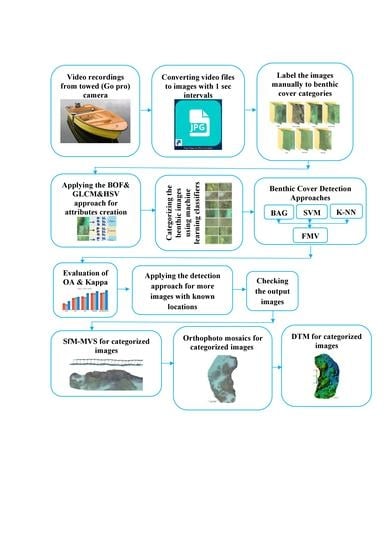

- All four hours of video recordings were converted to geolocated JPG images with a free video-to-JPG converter, sampling one frame per second and synchronizing each frame with the DGPS-recorded locations.

- A total of 3000 converted images were labeled individually by a human expert according to seven benthic cover categories: corals (Acropora and Porites), blue corals (H. coerulea), brown algae, blue algae, soft sand, hard sediments (pebbles, cobbles, and boulders), and seagrass.

- These labeled, geolocated images were used as inputs to the Bag of Features (BOF), Hue Saturation Value (HSV), and Gray Level Co-occurrence Matrix (GLCM) approaches to create the attributes for the semiautomatic classification.

- The attributes extracted by the BOF, HSV, and GLCM approaches were used as the inputs for training three machine learning soft classifiers, namely bagging (BAG), support vector machine (SVM), and k-nearest neighbor (k-NN), with the image labels as the outputs.

- The outputs of the three classifiers were combined using the fuzzy majority voting (FMV) algorithm to classify the benthic cover categories.

- The entire classifier evaluation process was conducted using 2250 independent randomly sampled images (75%) for training and 750 images (25%) for testing.

- After the FMV algorithm was trained and validated, it was used to categorize additional images, and the resulting classifications were checked individually.

- Structure from Motion and Multi-View Stereo (SfM-MVS) techniques were applied to produce 3D mosaics and digital terrain models (DTMs) for each habitat class from the correctly categorized geolocated JPG images.
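
The split and fusion steps listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the authors' implementation: the fusion uses an ordered weighted averaging (OWA) operator driven by a linguistic quantifier, which is one common formulation of fuzzy majority voting, and the quantifier parameters (0.3, 0.8) are assumed because the text does not give them. The function names and the three probability vectors are hypothetical.

```python
import random

# Seven benthic cover categories from the labeling step.
CLASSES = ["corals", "blue corals", "brown algae", "blue algae",
           "soft sand", "hard sediments", "seagrass"]


def split_indices(n_images, train_fraction=0.75, seed=0):
    """Randomly split image indices into training and testing subsets."""
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)
    n_train = round(n_images * train_fraction)
    return indices[:n_train], indices[n_train:]


def quantifier(r, a=0.3, b=0.8):
    """Piecewise-linear linguistic quantifier; (a, b) are assumed values."""
    if r <= a:
        return 0.0
    if r >= b:
        return 1.0
    return (r - a) / (b - a)


def owa_weights(n_classifiers, a=0.3, b=0.8):
    """OWA weights w_i = Q(i/n) - Q((i-1)/n); they sum to one."""
    n = n_classifiers
    return [quantifier(i / n, a, b) - quantifier((i - 1) / n, a, b)
            for i in range(1, n + 1)]


def fuse(prob_vectors, weights):
    """Fuse per-class probabilities from several soft classifiers.

    For each class, the classifier outputs are sorted in descending
    order and combined with the OWA weights; the class with the
    highest fused score is selected.
    """
    n_classes = len(prob_vectors[0])
    fused = []
    for c in range(n_classes):
        ranked = sorted((p[c] for p in prob_vectors), reverse=True)
        fused.append(sum(w * v for w, v in zip(weights, ranked)))
    best = max(range(n_classes), key=lambda c: fused[c])
    return best, fused


# 3000 labeled images: 75% for training, 25% for testing.
train_idx, test_idx = split_indices(3000)

# Hypothetical soft outputs of BAG, SVM, and k-NN for one image.
bag = [0.60, 0.10, 0.10, 0.05, 0.05, 0.05, 0.05]
svm = [0.20, 0.50, 0.10, 0.05, 0.05, 0.05, 0.05]
knn = [0.55, 0.20, 0.05, 0.05, 0.05, 0.05, 0.05]

weights = owa_weights(3)
best, fused = fuse([bag, svm, knn], weights)
print(len(train_idx), len(test_idx), CLASSES[best])
```

With three classifiers and the assumed quantifier, most of the weight falls on the middle-ranked probability, so a class needs support from at least two classifiers to win.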

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Descriptor | Selected Attributes |
|---|---|
| Texture Parameters | 26 Gray Level Co-occurrence Matrix (GLCM) parameters, including energy, entropy, contrast, correlation, homogeneity, cluster prominence, cluster shade, dissimilarity, image mean, image standard deviation, image mean variance, etc. |
| BOF | A total of 250 attributes extracted from each input image, with a grid step of 16, a block width of 32, the strongest 80% of features kept from each category, and the grid method for selecting feature point locations |
| HSV | A total of 256 HSV values extracted from each input image |
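
To make the texture descriptors in the table more concrete, the following minimal sketch builds a GLCM for a tiny grayscale image and derives four of the listed parameters. It is an illustration only: the 4 x 4 image, the four gray levels, and the horizontal one-pixel offset are assumptions, and energy is taken here as the sum of squared matrix entries (some libraries report its square root instead).

```python
import math


def glcm(image, levels, dx=1, dy=0):
    """Normalized gray level co-occurrence matrix for one pixel offset."""
    counts = [[0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < rows and 0 <= c2 < cols:
                counts[image[r][c]][image[r2][c2]] += 1
    total = sum(sum(row) for row in counts)
    return [[v / total for v in row] for row in counts]


def texture_features(p):
    """Contrast, energy, homogeneity, and entropy of a normalized GLCM."""
    contrast = energy = homogeneity = entropy = 0.0
    for i, row in enumerate(p):
        for j, v in enumerate(row):
            contrast += v * (i - j) ** 2
            energy += v * v
            homogeneity += v / (1 + abs(i - j))
            if v > 0:
                entropy -= v * math.log2(v)
    return {"contrast": contrast, "energy": energy,
            "homogeneity": homogeneity, "entropy": entropy}


# Hypothetical 4 x 4 image quantized to four gray levels (0 to 3).
IMAGE = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]

features = texture_features(glcm(IMAGE, levels=4))
for name, value in features.items():
    print(f"{name}: {value:.4f}")
```

In practice several offsets and directions are usually computed and their features concatenated or averaged, which is one way a descriptor can grow to a couple of dozen parameters per image.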

| Metric | BAG | k-NN | SVM | FMV |
|---|---|---|---|---|
| OA % | 85.6 | 86.5 | 89.7 | 93.5 |
| Kappa | 0.82 | 0.83 | 0.87 | 0.92 |

| Classification Data \ Reference Data | A | Br A | Cor | Bl Cor | Sed | SS | Seag | Row Total | UA |
|---|---|---|---|---|---|---|---|---|---|
| A | 133 | 1 | 2 | 2 | 0 | 0 | 0 | 138 | 0.96 |
| Br A | 1 | 48 | 0 | 5 | 0 | 0 | 0 | 54 | 0.89 |
| Cor | 1 | 0 | 137 | 10 | 0 | 0 | 0 | 148 | 0.93 |
| Bl Cor | 1 | 1 | 2 | 24 | 0 | 0 | 0 | 28 | 0.86 |
| Sed | 0 | 0 | 0 | 1 | 137 | 14 | 2 | 154 | 0.89 |
| SS | 0 | 0 | 0 | 0 | 1 | 36 | 0 | 37 | 1.00 |
| Seag | 2 | 0 | 1 | 3 | 0 | 0 | 185 | 191 | 0.97 |
| Col. Total | 138 | 50 | 142 | 45 | 138 | 50 | 187 | OA = 93.5% | |
| PA | 0.96 | 0.96 | 0.96 | 0.53 | 0.99 | 0.72 | 0.99 | Kappa val. = 0.92 | |

Rows are the classified categories and columns are the reference categories. Abbreviations: A, algae; Br A, brown algae; Cor, corals; Bl Cor, blue corals; Sed, hard sediments; SS, soft sand; Seag, seagrass; UA, user's accuracy; PA, producer's accuracy; OA, overall accuracy.
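
The accuracy measures in this confusion matrix follow standard definitions, and the short sketch below shows how they can be recomputed from the cell counts. It assumes rows are classified categories and columns are reference categories; the function name is hypothetical. Values recomputed this way may differ slightly from the printed summary figures, so the output is best read as a cross-check.

```python
LABELS = ["A", "Br A", "Cor", "Bl Cor", "Sed", "SS", "Seag"]

# Cell counts from the table (rows: classified, columns: reference).
MATRIX = [
    [133, 1, 2, 2, 0, 0, 0],
    [1, 48, 0, 5, 0, 0, 0],
    [1, 0, 137, 10, 0, 0, 0],
    [1, 1, 2, 24, 0, 0, 0],
    [0, 0, 0, 1, 137, 14, 2],
    [0, 0, 0, 0, 1, 36, 0],
    [2, 0, 1, 3, 0, 0, 185],
]


def accuracy_metrics(matrix):
    """Overall, user's, and producer's accuracy plus Cohen's kappa."""
    n = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    total = sum(row_totals)
    diagonal = [matrix[i][i] for i in range(n)]

    overall = sum(diagonal) / total
    users = [d / r for d, r in zip(diagonal, row_totals)]      # per row
    producers = [d / c for d, c in zip(diagonal, col_totals)]  # per column

    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return {"OA": overall, "UA": users, "PA": producers, "kappa": kappa}


metrics = accuracy_metrics(MATRIX)
print(f"OA = {metrics['OA']:.3f}, kappa = {metrics['kappa']:.3f}")
for label, ua, pa in zip(LABELS, metrics["UA"], metrics["PA"]):
    print(f"{label:7s} UA = {ua:.2f}  PA = {pa:.2f}")
```

User's accuracy divides each diagonal count by its row total, while producer's accuracy divides by the column total, which is why the blue coral class can have a fairly high UA and a much lower PA at the same time.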

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Mohamed, H.; Nadaoka, K.; Nakamura, T. Towards Benthic Habitat 3D Mapping Using Machine Learning Algorithms and Structures from Motion Photogrammetry. Remote Sens. 2020, 12, 127. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12010127
