1. Introduction

Over the past decades, remote sensing has seen dramatic improvements in data quality, spatial resolution, revisit time, and area covered. Emery and Camps [1] reported that our ability to observe the Earth from low Earth orbit and geostationary satellites has been improving continuously. Such an increase in capability requires a significant change in the way we use and manage remote-sensing images. Zhou et al. [2] noted that the increased spatial resolution makes it possible to develop novel approaches, providing new opportunities for advancing remote-sensing image analysis and understanding, thus allowing us to study the ground surface in greater detail. However, the increase in available data has resulted in important challenges in terms of how to properly manage the imagery collection.

One of the fundamental remote-sensing tasks is scene classification. Cheng et al. [3] defined scene classification as the categorization of remote-sensing images into a discrete set of meaningful land-cover and land-use classes. Scene classification is important for many practical remote-sensing applications, such as urban planning [4], land management [5], and wildfire characterization [6,7], among other applications. Such ample use of remote-sensing image classification has led many researchers to investigate techniques to quickly classify remote-sensing data and accelerate image retrieval.

Conventional scene classification techniques rely on low-level visual features to represent the images of interest. Such low-level features can be global or local. Global features, such as color (spectral) features [8,9], texture features [10], and shape features [11], are extracted from the entire remote-sensing image. Local features, such as the scale-invariant feature transform (SIFT) [12], are extracted from image patches centered on a point of interest. Zhou et al. [2] observed that the remote-sensing community makes use of the properties of local features and proposed several methods for remote-sensing image analysis. However, these global and local features are hand-crafted. Furthermore, the development of such features is time consuming and often depends on ad hoc or heuristic design decisions. For these reasons, the extraction of low-level global and local features is suboptimal for some scene classification tasks. Hu et al. [13] remarked that the performance of remote-sensing scene classification has only slightly improved in recent years. The main reason for this marginal improvement is that approaches relying on low-level features are incapable of generating sufficiently powerful feature representations for remote-sensing scenes. Hu et al. [13] concluded that more representative, higher-level features, which are abstractions of the lower-level features, are desirable and play a dominant role in the scene classification task. The extraction of high-level features promises to be one of the main advantages of deep-learning methods. As observed by Yang et al. [14], one of the reasons for the attractiveness of deep-learning models is their capacity to discover effective feature transformations for the desired task.

Recently, deep-learning (DL) methods [15] have been applied in many fields of science and industry. Current progress in deep-learning models, specifically deep convolutional neural network (CNN) architectures, has improved the state of the art in visual object recognition and detection, speech recognition, and many other fields of study [15]. The model described by Krizhevsky et al. [16], frequently referred to as AlexNet, is considered a breakthrough and influenced the rapid adoption of DL in the computer vision field [15]. CNNs are currently the dominant method in the vast majority of image classification, segmentation, and detection tasks due to their remarkable performance in many benchmarks, e.g., the MNIST handwritten digit database [17] and the ImageNet dataset [18], a large dataset with millions of natural images. In 2012, AlexNet used a CNN with five convolutional layers to win the ImageNet Large Scale Visual Recognition Challenge. Now, many CNN models use from 20 to hundreds of layers, and Huang et al. [19] proposed models with thousands of layers. Due to the vast number of operations performed in deep CNN models, it is often difficult to discuss their interpretability, that is, the degree to which a decision taken by a model can be interpreted. Thus, CNN interpretability itself remains a research topic (e.g., [20,21,22,23]).

Despite CNNs’ powerful feature extraction capabilities, Hu et al. [13] and others found that in practice it is difficult to train CNNs with small datasets. However, Yosinski et al. [24] and Yin et al. [21] observed that the parameters learned by the layers in many CNN models trained on images exhibit a very common behavior. The layers closer to the input data tend to learn general features, resulting in convolutional operators akin to edge detection filters, smoothing, or color filters. Deeper in the network, there is a transition to features more specific to the dataset on which the model is trained. This general-to-specific transition of CNN layer features led to the development of transfer learning [24,25,26]. In transfer learning, the filters learned by a CNN model on a primary task are applied to an unrelated secondary task. The primary CNN model can be used as a feature extractor, or as a starting point for a secondary CNN model.

Even though large datasets help the performance of CNN models, the use of transfer learning facilitated the application of CNN techniques to other scientific fields that have less available data. For example, Carranza-Rojas et al. [27] used transfer learning for the classification of herbarium specimens, Esteva et al. [28] for dermatologist-level classification of skin cancer, Pires de Lima and Suriamin et al. [29] for oil field drill core images, Duarte-Coronado et al. [30] for the estimation of porosity in thin section images, and Pires de Lima et al. [31,32] for the classification of a variety of geoscience images. Minaee et al. [33] stated that many of the deep neural network models for biometric recognition are based on transfer learning. Razavian et al. [34] used a model trained for image classification and conducted a series of transfer learning experiments to investigate a wide range of recognition tasks such as object image classification, scene recognition, and image retrieval. Transfer learning is also widely used in the remote-sensing field. For example, Hu et al. [13] analyzed the use of transfer learning from pretrained CNN models for remote-sensing scene classification, Chen et al. [35] used transfer learning for airplane detection, Rostami et al. [36] for classifying synthetic aperture radar images, and Weinstein et al. [37] for the localization of tree-crowns using Light Detection and Ranging RGB (red, green, blue) images.

Despite the success of transfer learning in applications in which the secondary task is significantly different from the primary task (e.g., [28,38,39]), the remark that the effectiveness of transfer learning is expected to decline as the primary and secondary tasks become less similar [24] is commonly made and remains present in many research fields. Although Yosinski et al. [24] concluded that transfer learning from distant tasks performs better than training CNN models from scratch (with randomly initialized weights), it remains unclear how the amount of data or the model used influences the models’ performance.

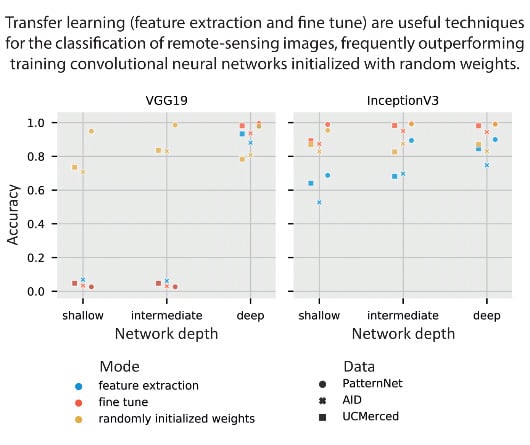

Here we investigate the performance of transfer learning from CNNs pre-trained on natural images for remote-sensing scene classification versus CNNs trained from scratch only on the remote-sensing scene classification datasets themselves. We evaluate different depths of two popular CNN models, VGG19 [40] and Inception V3 [41], using three remote-sensing datasets of different sizes.

Section 2 provides a short glossary for easier reference. Section 3 describes the datasets. Section 4 provides a brief overview of CNNs, and Section 5 provides details on the methods we apply for analysis. Section 6 shows the results, followed by a discussion in Section 7. We summarize our findings in Section 8.

2. Glossary

This short glossary defines terms that are common in machine-learning applications and used throughout the manuscript. Please refer to Google’s machine learning glossary for a more detailed list of terms [42].

Accuracy: the ratio between the number of correct classifications and the total number of classifications performed. Values range from 0.0 to 1.0 (equivalently, 0% to 100%). A perfect score of 1.0 means all classifications were correct whereas a score of 0.0 means all classifications were incorrect.

Convolution: a mathematical operation that combines input data and a convolutional kernel producing an output. In machine learning applications, a convolutional layer uses the convolutional kernel and the input data to train the convolutional kernel weights.

Convolutional neural networks (CNN): a neural network architecture in which at least one layer is a convolutional layer.

Deep neural networks (DNN): an artificial neural network model containing multiple hidden layers.

Fine tuning: a secondary training step to further adjust the weights of a previously trained model so the model can better achieve a secondary task.

Label: the names applied to an instance, sample, or example (for image classification, an image) associating it with a given class.

Layer: a group of neurons in a machine learning model that processes a set of input features.

Machine learning (ML): a model or algorithm that is trained and learns from input data rather than from externally specified parameters.

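Softmax: a function that calculates probabilities for each possible class over all different classes. The probabilities sum to 1.0. The softmax $\sigma(\mathbf{z})_i$ for class $i$, computed over $K$ classes, is given by:

$$\sigma(\mathbf{z})_i = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}},$$

where $z_i$ is the score that the model outputs for class $i$.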

Training: the iterative process of finding the most appropriate weights of a machine-learning model.

Transfer Learning: a technique that uses information learned in a primary machine learning task to perform a secondary machine learning task.

Weights: the coefficients of a machine learning model. In a simple linear equation, the slope and intercept are the weights of the model. In CNNs, the weights are the convolutional kernel values. The training objective is to find the ideal weights of the machine-learning model.
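As an illustration of two of the glossary entries above, the following minimal Python sketch computes a softmax over a set of class scores and the accuracy of a set of predictions. The function names and the example scores are hypothetical and are not taken from the experiments in this manuscript.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1.0."""
    # Subtracting the maximum leaves the result unchanged but avoids overflow in exp().
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def accuracy(true_labels, predicted_labels):
    """Ratio of correct classifications to total classifications (0.0 to 1.0)."""
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)


probs = softmax([2.0, 1.0, 0.1])       # hypothetical scores for three classes
predicted = probs.index(max(probs))    # index of the most probable class
```

The predicted class is simply the one with the highest softmax probability; accuracy then compares such predictions with the labels.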

5. Methods

To better understand the effects of different approaches and techniques used for transfer learning with remote-sensing datasets, we perform two major experiments using the models presented in Section 5.1. The first experiment (Section 5.2) compares different optimization methods. The second experiment (Section 5.3) investigates the sensitivity of transfer learning to the level of specialization of the originally trained CNN model, and also compares the results of transfer learning with those of training a model with randomly initialized weights.

The choice of hyperparameters can have a strong influence on CNN performance. Nonetheless, our main objective here is to investigate transfer learning results rather than maximize performance. Therefore, unless otherwise noted, we maintain the same hyperparameters specified in Table 4 for all training in all experiments. The models are trained using Keras [56] with TensorFlow as its backend [57]. When kernels are initialized, we draw the weights from the Glorot uniform distribution [58]. The images are rescaled from their original size to the model’s input size using nearest-neighbor interpolation.
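To make the rescaling step concrete, the sketch below resizes a small single-channel image with nearest-neighbor interpolation in plain Python. It is an illustration only: the function name is hypothetical, and the index mapping used here (scaling and truncating) is one common convention. The exact pixel-center convention of the library used in the experiments is not stated here and may differ.

```python
def resize_nearest(image, new_height, new_width):
    """Resize a 2-D image (list of rows) by copying the nearest source pixel."""
    old_height, old_width = len(image), len(image[0])
    resized = []
    for r in range(new_height):
        # Map the output row index back to a source row index (scale, then truncate).
        src_r = min(old_height - 1, int(r * old_height / new_height))
        row = []
        for c in range(new_width):
            src_c = min(old_width - 1, int(c * old_width / new_width))
            row.append(image[src_r][src_c])
        resized.append(row)
    return resized


small = [[1, 2],
         [3, 4]]
large = resize_nearest(small, 4, 4)   # each source pixel becomes a 2 x 2 block
```

Because no new pixel values are computed, nearest-neighbor rescaling preserves the original intensities but can produce blocky edges when upsampling.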

5.1. Model Split

To evaluate the transfer learning process from natural images to remote-sensing datasets, we use the VGG19 and Inception V3 models and train a small classification network on top of them. We refer to the original CNN model structure, part of VGG19 or part of Inception V3, as the “base model”, and to the small classification network as the “top model” (Figure 1). The top model is composed of an average pooling layer, followed by one fully connected layer with 512 neurons, a dropout layer [59] used during training, and a final fully connected layer with a softmax output in which the number of neurons depends on the number of classes for the task (i.e., 21 for UCMerced, 30 for AID, 38 for PatternNet). Dropout is a simple technique to reduce overfitting in which random connections are disabled during training. Note that the top model is specific to the secondary task and to each one of the datasets, whereas the base model, when containing the weights learned during training for the primary task, has layers that present the transition from general to specific features. The models we used were primarily trained on the ImageNet dataset and are available online (e.g., through the Keras or TensorFlow websites). We evaluate how dependent the transfer learning process is on the transition from general to specific features by extracting features at three different positions in each of the retrained models, which we call “shallow”, “intermediate”, and “deep” (Figure 2). The shallow experiment uses the initial blocks of the base model to extract features and adds the top model. The intermediate experiment extracts features from a block near the middle of the base model. Finally, the deep experiment uses all the blocks of the original base model except the original final classification layers.
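The forward pass of the top model described above can be sketched in plain Python as follows. This is a simplified illustration, not the Keras implementation used in the experiments. Several details are assumptions that the text does not specify: the ReLU activation on the 512-neuron layer, the dropout rate of 0.5, the "inverted" dropout formulation that zeroes whole neurons rather than individual connections, and the tiny 4 x 4 x 8 feature map standing in for the base model output.

```python
import math
import random


def global_average_pool(feature_map):
    """Average an H x W x C feature map over its spatial dimensions, giving C values."""
    height, width, channels = len(feature_map), len(feature_map[0]), len(feature_map[0][0])
    return [
        sum(feature_map[i][j][c] for i in range(height) for j in range(width)) / (height * width)
        for c in range(channels)
    ]


def dense(inputs, weights, biases):
    """Fully connected layer; weights[k] holds the incoming weights of neuron k."""
    return [sum(x * w for x, w in zip(inputs, row)) + b for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def dropout(values, rate, rng, training):
    """Inverted dropout: zero random neurons during training and rescale the rest."""
    if not training:
        return list(values)
    return [0.0 if rng.random() < rate else v / (1.0 - rate) for v in values]


def softmax(logits):
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def init_dense(n_in, n_out, rng):
    """Glorot uniform initialization: U(-limit, limit), limit = sqrt(6 / (n_in + n_out))."""
    limit = math.sqrt(6.0 / (n_in + n_out))
    weights = [[rng.uniform(-limit, limit) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def top_model(feature_map, params, rng, training=False, dropout_rate=0.5):
    w1, b1, w2, b2 = params
    x = global_average_pool(feature_map)
    x = relu(dense(x, w1, b1))                    # ReLU is an assumption
    x = dropout(x, dropout_rate, rng, training)   # active only during training
    return softmax(dense(x, w2, b2))


rng = random.Random(0)
n_channels, n_classes = 8, 21   # 21 classes as in UCMerced; 8 channels is illustrative
params = (*init_dense(n_channels, 512, rng), *init_dense(512, n_classes, rng))
feature_map = [[[rng.random() for _ in range(n_channels)] for _ in range(4)] for _ in range(4)]
probabilities = top_model(feature_map, params, rng)
```

In this sketch only the size of the final layer changes between datasets, which mirrors the statement that the top model is specific to each secondary task.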

5.2. Stochastic Gradient Descent versus Adaptive Optimization Methods

In the search for the global minimum of the loss function, optimization algorithms frequently use the gradient descent strategy. To compute the gradient of the loss function, we sum the error of each sample. Using our PatternNet data split as an example, we first loop through the entire training set of 21,280 samples before updating the weights. Therefore, to move a single step towards the minimum, we compute the error 21,280 times. A common approach to avoid computing the error for all training samples before moving a step is to use stochastic gradient descent (SGD).

SGD uses a straightforward approach: instead of using the sum of all training errors (the loss), SGD uses the error gradient of a single sample at each iteration. Bottou [60] observed that SGD shows good performance for large-scale problems. SGD is the building block used by many optimization algorithms that apply some variation to achieve better convergence rates (e.g., [61,62]). Kingma and Ba [63] observed that SGD has great practical importance in many fields of science and engineering and proposed Adam, a method for efficient stochastic optimization. Ruder [64] recommended Adam as the best overall optimization choice.
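The difference between full (batch) gradient descent and SGD can be sketched on a toy problem. The example below fits a single weight w so that w * x matches y. It is illustrative only: the data, step size, and function names are assumptions, and the batch version averages the per-sample gradients rather than summing them so that the same step size can be used in both loops.

```python
import random


def gradient(w, x, y):
    """Gradient of the squared error 0.5 * (w * x - y)**2 with respect to w."""
    return (w * x - y) * x


def batch_gradient_descent(data, w, lr, epochs):
    """One weight update per pass: all samples are visited before each step."""
    for _ in range(epochs):
        total = sum(gradient(w, x, y) for x, y in data)
        w -= lr * total / len(data)
    return w


def stochastic_gradient_descent(data, w, lr, epochs, rng):
    """One weight update per sample, visiting the samples in random order."""
    for _ in range(epochs):
        for x, y in rng.sample(data, len(data)):
            w -= lr * gradient(w, x, y)
    return w


data = [(x, 3.0 * x) for x in (0.5, 1.0, 1.5, 2.0)]   # noiseless samples of y = 3x
w_batch = batch_gradient_descent(data, w=0.0, lr=0.1, epochs=50)
w_sgd = stochastic_gradient_descent(data, w=0.0, lr=0.1, epochs=50, rng=random.Random(0))
```

Both loops see each sample 50 times, but the batch version performs 50 weight updates whereas SGD performs 200, which is why SGD ends closer to the true value of 3.0 on this toy problem.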

However, Wilson et al. [65] reported that the solutions found by adaptive methods (such as Adam) generalize worse than those found by SGD, even though solutions found by adaptive optimization methods perform better on the training set. Our optimization experiment is straightforward: we compare the training and validation losses and the test accuracy for the UCMerced dataset using different optimization methods: SGD, Adam, and Adamax, a variant of Adam that makes use of the infinity norm, also described by Kingma and Ba [63]. We perform this analysis using the shallow, intermediate, and deep VGG19 and Inception V3 models to fit the UCMerced dataset, starting the models with randomly initialized weights.
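For reference, the update rules of Adam and Adamax can be sketched for a single scalar parameter as below. The sketch follows the algorithms as published by Kingma and Ba, with their suggested default settings. The function names, the dictionary used to hold the optimizer state, the guard against a zero denominator in Adamax, and the toy loss are illustrative assumptions, not part of the experiments.

```python
import math


def adam_step(theta, grad, state, lr=1e-3, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a single scalar parameter."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad          # first-moment estimate
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad * grad   # second-moment estimate
    m_hat = state["m"] / (1 - beta1 ** state["t"])                # bias corrections
    v_hat = state["v"] / (1 - beta2 ** state["t"])
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)


def adamax_step(theta, grad, state, lr=2e-3, beta1=0.9, beta2=0.999):
    """One Adamax update: the second moment is replaced by an infinity-norm term."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad
    state["u"] = max(beta2 * state["u"], abs(grad))   # exponentially weighted infinity norm
    if state["u"] == 0.0:
        return theta                                  # only zero gradients so far
    return theta - (lr / (1 - beta1 ** state["t"])) * state["m"] / state["u"]


def minimize(step_fn, state, steps=2000):
    """Minimize the toy loss (theta - 1)**2 starting from theta = 0."""
    theta = 0.0
    for _ in range(steps):
        grad = 2.0 * (theta - 1.0)
        theta = step_fn(theta, grad, state)
    return theta


theta_adam = minimize(adam_step, {"t": 0, "m": 0.0, "v": 0.0})
theta_adamax = minimize(adamax_step, {"t": 0, "m": 0.0, "u": 0.0})
```

In both methods the very first step has a magnitude close to the step size regardless of the gradient scale, which is one reason the step size matters so much when a pre-trained model is being fine-tuned.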

5.3. General to Specific Layer Transition of CNN Models

As mentioned above, many CNN models trained on natural images show a very common characteristic. Layers closer to the input data tend to learn general features, then there is a transition to more specific dataset features. For example, a CNN trained to classify the 21 classes of the UCMerced dataset has 21 softmax outputs in its final layer, with each output specifically identifying one of the 21 classes. Therefore, the final layer in this example is very specific to the UCMerced task; the final layer receives a set of features coming from the previous layers and outputs a set of probabilities accounting for the 21 UCMerced classes. These intuitive notions of general vs. specific features are sufficient for the experiments to be performed. Yosinski et al. [24] provide a rigorous definition of general and specific features.

To observe how the transition from general to specific features can affect the transfer learning process of remote-sensing datasets, we use the shallow, intermediate, and deep VGG19 and Inception V3 models described in Section 5.1. Three training modes are performed: feature extraction, fine tuning, and randomly initialized weights. Feature extraction “locks” (or “freezes”) the pre-trained layers extracted from the base models. Fine tuning starts as feature extraction, with the base model frozen, but eventually allows all the layers of the model to learn. The randomly initialized weights mode starts the entire model with randomly initialized weights, after which all the weights are updated during training. The randomly initialized weights mode is ordinary CNN training, not a transfer learning process. For the sake of standardization, all modes train the model for 100 epochs. In fine tuning, the first step (part of the model is frozen) is trained for 50 epochs, and the second step (all layers of the model are free to learn) for another 50 epochs.
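The three training modes differ only in how the base model is initialized and in when its weights are allowed to change. The sketch below encodes that schedule in plain Python under the epoch counts given above. The function names, the mode strings, and the 0-based epoch counting are hypothetical choices made for this illustration.

```python
def initial_base_weights(mode):
    """How the base model starts: pre-trained for both transfer learning modes."""
    return "random" if mode == "random_init" else "pretrained"


def trainable_parts(mode, epoch, total_epochs=100, unfreeze_epoch=50):
    """Return which parts of the network update their weights at a 0-based epoch."""
    if not 0 <= epoch < total_epochs:
        raise ValueError("epoch outside the training schedule")
    if mode == "feature_extraction":
        # The pre-trained base stays frozen; only the new top model learns.
        return {"base": False, "top": True}
    if mode == "fine_tuning":
        # Starts as feature extraction, then every layer is free to learn.
        return {"base": epoch >= unfreeze_epoch, "top": True}
    if mode == "random_init":
        # Ordinary CNN training: all weights are updated from the first epoch.
        return {"base": True, "top": True}
    raise ValueError(f"unknown mode: {mode}")


frozen_epochs = sum(
    1 for epoch in range(100) if not trainable_parts("fine_tuning", epoch)["base"]
)
```

With these settings the fine-tuning mode keeps the base model frozen for the first 50 epochs and trains all layers for the remaining 50, so every mode sees the same total of 100 epochs.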

7. Discussion

Using ImageNet data, Yosinski et al. [24] found that transfer learning, even when applied to a secondary task not similar to the primary task, performs better than training CNN models with randomly initialized weights. Using medical image data, Tajbakhsh et al. [66] found that fine tuning achieved results comparable to or better than results from training a CNN model with randomly initialized weights. Our results align with their findings. Both Figure 8 and Table 7 show the fine-tuning mode of training outperforming randomly initialized weights when using SGD (step size 1e-3) with momentum 0.9. Results in Figure 9 and Table 9 show that transfer learning performs best with the Adamax (step size 2e-3) optimizer. However, it seems that this step size is too large for fine tuning the VGG19 model, such that the VGG19 intermediate and deep models trained in the fine-tuning and randomly initialized weights modes fall into local minima. The primary task (ImageNet, composed of natural images) is not very similar to the secondary task (remote-sensing scene classification). Although the datasets of the primary and secondary tasks share some similarities, such as the number of channels (red-green-blue components), the use of images from the visible spectrum, and some objects that might be present in both tasks (e.g., airplanes), the tasks are fundamentally different.

Figure 5, however, shows how feature extraction can be limited by the difference in tasks. When the initial layers are frozen, the model cannot properly learn and starts to overfit. With the layers unfrozen, the overfitting is reduced and the accuracy increases. We observed a similar behavior for most of the fine-tuning tests (all of the loss and accuracy values per epoch can be accessed in the supplemental materials). Despite the limitations of feature extraction, the results show that transfer learning is an effective deep-learning approach that should not be discarded when the secondary task is not similar to the primary task. In fact, Table 8 presents striking results. Fine-tuned models initially trained on PatternNet underperformed fine-tuned models initially trained on ImageNet. Perhaps the first explanation for such underperformance would be that the models are overfitting the PatternNet dataset. However, the PatternNet models performed well on the PatternNet test set, which indicates they are not overfitting the training data. We hypothesize that the weaker performance is due to the difference in complexity between the datasets. PatternNet was created to provide researchers with clear examples of different remote-sensing scenes, whereas ImageNet is a complex dataset in which the intra-class variance, i.e., how different the samples within a single class are, is very high. As observed by Cheng et al. [3], many remote-sensing scene classification datasets lack intra-class sample variation and diversity. These limitations severely hinder the development of new approaches, especially deep-learning-based methods. Thus, CNN models trained on ImageNet need to develop more generic, perhaps more robust, filters to be able to identify ImageNet’s classes properly.

8. Conclusions

Our objective with this paper was to investigate the use of transfer learning in the analysis of remote-sensing data, as well as how CNN performance depends on the depth of the network and on the amount of training data available. Our experiments, based on three distinct remote-sensing datasets and two popular CNN models, show that transfer learning, specifically fine tuning CNNs, is a powerful tool for remote-sensing scene classification. Much like the findings in other experiments, our results show that transfer from natural images (ImageNet) to remote-sensing imagery is possible. Despite the relatively large difference between primary and secondary tasks, the transfer learning training modes generally outperformed training a CNN with randomly initialized weights and achieved the best results overall. Curiously, fine-tuned models primarily trained on the generic ImageNet dataset outperformed fine-tuned models primarily trained on the PatternNet dataset. As expected, our results also indicate that, for a particular application, the amount of training data available plays a significant role in the performance of the CNN models. In general, we observed a larger accuracy difference between transfer learning and training with randomly initialized weights when using the smaller UCMerced dataset, whereas accuracy differences were smaller when using the larger PatternNet dataset. Differences in model robustness were also clear in the results. In several instances, VGG19 ended up stuck in local minima, both during optimization testing and during transfer learning testing. The results of the VGG19 shallow and intermediate models exhibit a performance degradation caused by splitting the primary trained CNN model between co-adapted neurons on neighboring layers. The VGG19 shallow and intermediate models in the randomly initialized mode, however, performed satisfactorily. In spite of our simplistic model split, made without detailed attention to the co-adaptation of neurons between layers, Inception V3 passed all experiments without falling into local minima.

The results seem to corroborate that feature extraction or fine tuning of well-established CNN models offers a practical way to achieve the best performance for remote-sensing scene classification. Although fine tuning the originally more complex deep models might present satisfactory results, splitting the original model can perhaps improve performance. Note that the fine-tuned Inception V3 intermediate model outperformed the Inception V3 deep model. With datasets large enough, randomly initialized weights are also an appropriate choice for training. However, it is often hard to know when a dataset is large enough. Our recommendation is to start from the deep models and try to reduce the model’s size, as it is easier to overfit models with too many weights.