Change Detection Based on Artificial Intelligence: State-of-the-Art and Challenges

Abstract

1. Introduction

- Visual analysis: the change map is obtained by manual interpretation, which can provide highly reliable results based on expert knowledge but is time-consuming and labor-intensive;

- Algebra-based methods: the change map is obtained by performing algebraic operations or transformations on multi-temporal data, such as image differencing, image regression, image ratioing, and change vector analysis (CVA);

- Transformation: data reduction methods, such as principal component analysis (PCA), Tasseled Cap (KT), multivariate alteration detection (MAD), Gram–Schmidt (GS), and Chi-Square, are used to suppress correlated information and highlight variance in multi-temporal data;

- Classification-based methods: changes are identified by comparing multiple classification maps (i.e., post-classification comparison), or using a trained classifier to directly classify data from multiple periods (i.e., multidate classification or direct classification);

- Advanced models: models such as the Li-Strahler reflectance model, the spectral mixture model, and the biophysical parameter method convert the spectral reflectance values of multi-period data into physically based parameters or fractions for change analysis; the results are more intuitive and physically meaningful, but the approach is complicated and time-consuming;

- Others: hybrid approaches and further methods, such as knowledge-based, spatial-statistics-based, and integrated GIS and RS methods.
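As an illustration of the algebra-based family listed above, the following is a minimal sketch of change vector analysis on two co-registered multi-band images. It assumes NumPy arrays of shape (H, W, B); the function name `change_vector_analysis` and the fixed `threshold` argument are hypothetical choices made for this example, not part of any cited method.

```python
import numpy as np


def change_vector_analysis(img_t1, img_t2, threshold):
    """Return (magnitude, binary change map) for two co-registered images.

    img_t1, img_t2: arrays of shape (H, W, B) with B spectral bands.
    threshold: hypothetical, user-chosen cut-off on the change magnitude.
    """
    # Per-band image differencing gives one change vector per pixel.
    diff = img_t2.astype(np.float64) - img_t1.astype(np.float64)
    # The Euclidean length of that vector is the change magnitude.
    magnitude = np.sqrt(np.sum(diff ** 2, axis=-1))
    return magnitude, magnitude > threshold


# Toy data: identical images except for one pixel.
t1 = np.zeros((4, 4, 3))
t2 = t1.copy()
t2[1, 2] = [3.0, 4.0, 0.0]
magnitude, change_map = change_vector_analysis(t1, t2, threshold=1.0)
```

In practice the threshold is usually estimated from the magnitude distribution rather than fixed by hand; the constant here only keeps the sketch short.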

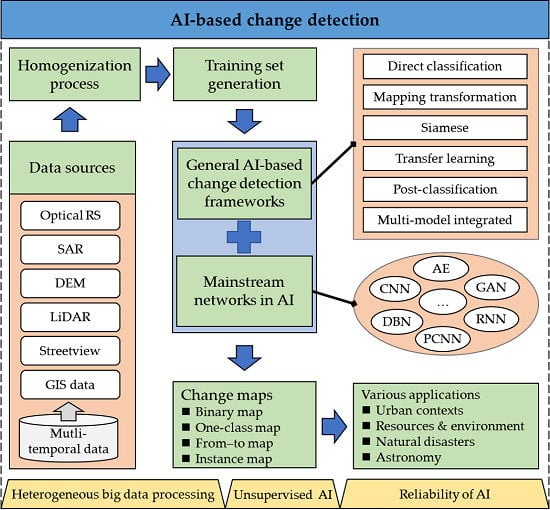

- We introduce the implementation process of AI-based change detection and summarize common implementation strategies that can help beginners understand this research field;

- We present the data from different sensors used for AI-based change detection in detail, mainly including optical RS data, SAR data, street-view images, and combined heterogeneous data. More practically, we list the available open datasets with annotations, which can be used as benchmarks for training and evaluating AI models in future change detection studies;

- By systematically reviewing and analyzing the process of AI-based change detection methods, we summarize their general frameworks in a practical way, which can help to design change detection approaches in the future. Furthermore, the unsupervised schemes used in AI-based change detection are analyzed to help address the problem of lack of training samples in practical applications;

- We describe the commonly used networks in AI for change detection. Analyzing their applicability is helpful for the selection of AI models in practical applications;

- We review the applications of AI-based change detection in various fields and subdivide them by data type, which helps those interested in these areas to find relevant AI-based change detection approaches;

- We delineate and discuss the challenges and prospects of AI for change detection from three major directions, i.e., heterogeneous big data processing, unsupervised AI, and the reliability of AI, providing a useful reference for future research.

2. Implementation Process of AI-Based Change Detection

- Homogenization: Due to differences in illumination, atmospheric conditions, season, and sensor attitude at the time of acquisition, multi-period data usually need to be homogenized before change detection. Geometric and radiometric correction are two commonly used methods [14,15]. The former geometrically aligns two or more datasets, which can be achieved through registration or co-registration; a comparison between corresponding positions in two-period data is meaningful only when the data are correctly overlaid [16]. The latter eliminates radiance or reflectance differences caused by the digitization process of the sensors and by atmospheric attenuation distortion due to absorption and scattering in the atmosphere [4], which helps to reduce false alarms caused by these radiometric errors in change detection. For heterogeneous data, a special AI model structure can be designed for feature space transformation to achieve change detection (see Section 4.1.2);

- Training set generation: Developing an AI model requires a large, high-quality training set from which the algorithm can learn which patterns correspond to which outcomes. Multi-period data are labeled or annotated using certain techniques (e.g., manual annotation [17], pre-classification [18], use of thematic data [19]) so that the AI model can learn the characteristics of the changed objects. Figure 2 presents an annotated example for building change detection, which is composed of two-period RS images and a corresponding ground truth labeled with building changes at the pixel level. Based on the ground truth, i.e., prior knowledge, the AI model can be trained in a supervised manner. To alleviate the lack of training data, data augmentation is a widely used strategy: operations such as horizontal or vertical flipping, rotation, scaling, cropping, translation, or adding noise can significantly increase the diversity of the data available for training without actually collecting new data;

- Model training: After annotation, the samples are usually divided into two datasets according to the number of samples or the geographic area: a training set for AI model training and a test set for accuracy evaluation during the training process [20]. The training and testing processes are performed alternately and iteratively. During the training process, the model is optimized according to a learning criterion, which can be a loss function in deep learning (e.g., softmax loss [21], contrastive loss [22], Euclidean loss [23], or cross-entropy loss [24]). Monitoring the training process and the test accuracy reveals the convergence state of the AI model, which helps in adjusting its hyperparameters (such as the learning rate) and in judging whether the model has reached its best performance (i.e., the termination condition);

- Model serving: By deploying a trained AI model, change maps can be generated more intelligently and automatically for practical applications. Moreover, this can help validate the generalization ability and robustness of the model, which is an important aspect of evaluating the practicality of the AI-based change detection technique.
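The data augmentation step described under training set generation has one practical subtlety for change detection: a geometric transform must be applied identically to both dates and to the ground truth, otherwise the labels no longer line up with the images. The following is a minimal sketch of this idea, assuming square NumPy patches; the function name `augment_pair` and the restriction to 90-degree rotations and horizontal flips are illustrative assumptions.

```python
import numpy as np


def augment_pair(img_t1, img_t2, label, rng):
    """Apply one random rotation/flip identically to both dates and the label."""
    k = int(rng.integers(0, 4))      # number of 90-degree rotations
    flip = bool(rng.integers(0, 2))  # whether to flip horizontally
    augmented = []
    for arr in (img_t1, img_t2, label):
        arr = np.rot90(arr, k=k, axes=(0, 1))
        if flip:
            arr = np.flip(arr, axis=1)
        augmented.append(np.ascontiguousarray(arr))
    return tuple(augmented)


# Toy bi-temporal sample: one changed pixel, marked in the label.
rng = np.random.default_rng(0)
t1 = rng.random((8, 8, 3))
t2 = t1.copy()
t2[2, 5] += 1.0
label = np.zeros((8, 8), dtype=np.uint8)
label[2, 5] = 1

aug_t1, aug_t2, aug_label = augment_pair(t1, t2, label, rng)
```

Radiometric augmentations such as added noise can, by contrast, be drawn independently for each date, since they do not move pixels.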

3. Data Sources for Change Detection

3.1. Data Used for Change Detection

3.1.1. Optical RS Images

3.1.2. SAR Images

3.1.3. Street View Images

3.1.4. Combining Heterogeneous Data

3.2. Open Data Sets

4. General AI-Based Change Detection Frameworks

4.1. Single-Stream Framework

4.1.1. Direct Classification Structure

4.1.2. Mapping Transformation-Based Structure

4.2. Double-Stream Framework

4.2.1. Siamese Structure

4.2.2. Transfer Learning-Based Structure

4.2.3. Post-Classification Structure

4.3. Multi-Model Integrated Structure

4.4. Unsupervised Schemes in Change Detection Frameworks

5. Mainstream Networks in AI

5.1. Autoencoder

5.2. Deep Belief Network

5.3. Convolutional Neural Network

5.4. Recurrent Neural Network

5.5. Pulse-Coupled Neural Network

5.6. Generative Adversarial Networks

5.7. Other Artificial Neural Networks and AI Methods

6. Applications

- Urban contexts: urban expansion, public space management, and building change detection;

- Resources and environment: human-driven environmental changes, hydro-environmental changes, sea ice, surface water, and forest monitoring;

- Natural disasters: landslide mapping and damage assessment;

- Astronomy: planetary surfaces.

7. Challenges and Opportunities for AI-Based Change Detection

- The development of various platforms and sensors brings significant challenges such as high-dimensional datasets (high spatial resolution and hyperspectral features), complex data structures (nonlinear and overlapping distributions), and nonlinear optimization problems (high computational complexity). The complexity of multi-source data makes it considerably harder to learn robust and discriminative representations from training data with AI techniques. This can be considered a challenge of heterogeneous big data processing;

- Supervised AI methods require massive numbers of training samples, which are usually obtained by time-consuming and labor-intensive processes such as human interpretation of RS products and field surveys. Obtaining a robust AI model from insufficient training samples is a major challenge. Unsupervised AI techniques need to be developed;

- There are various efficient and accurate AI models and frameworks, as we review in Section 4 and Section 5, and researchers continue to propose novel AI-based change detection approaches. Still, choosing an efficient approach and ensuring its accuracy for a given application remains a great challenge. The reliability of AI needs to be considered in practical applications.

7.1. Heterogeneous Big Data Processing

- Although some AI-based change detection methods based on heterogeneous data have achieved satisfactory results, as summarized in Section 3.1.4, the sensor types and data sizes covered by these studies are relatively limited. Moreover, they mainly consider change detection between data from different sources rather than the fusion of data from the same period. Making full use of multi-source data from the same period (e.g., optical RS images and DEM) and of data fusion theories (i.e., mutual compensation of various types of data), combined with AI techniques, would help improve the accuracy of change detection;

- Current change detection methods mainly depend on 2D information. With the development of 3D reconstruction techniques, using 3D data to detect changes in buildings, etc., is also a direction of future development [6]. Among such techniques, 3D reconstruction based on oblique images or laser point cloud data and 3D information integration based on aerial imagery and ground-level street view imagery (i.e., air–ground integration) are hot research topics. There are still no effective AI techniques that implement 3D change detection;

- The processing of RS big data requires a large amount of computing resources, which limits the implementation of AI models. For example, large-format data usually need to be processed in blocks, which easily leads to edge problems. A large amount of data also calls for a large number of trainable parameters in the AI model, resulting in a difficult training process that consumes considerable computing resources. Therefore, it is necessary to balance the amount of data and the number of trainable parameters. These constraints pose challenges to the design of AI-based change detection approaches.
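The block-wise processing mentioned in the last item can be sketched as follows. One common way to soften edge problems, assumed here for illustration rather than taken from the reviewed studies, is to let neighboring tiles overlap and average the predictions where they meet. The callable `predict_fn` is a hypothetical stand-in for a trained model, and the tile and overlap sizes are arbitrary defaults.

```python
import numpy as np


def predict_in_tiles(image, predict_fn, tile=256, overlap=32):
    """Run predict_fn on overlapping tiles and average overlapping scores.

    predict_fn: hypothetical callable mapping an (h, w, B) tile to an
    (h, w) score map; it stands in for a trained change detection model.
    """
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    height, width = image.shape[:2]
    step = tile - overlap
    score_sum = np.zeros((height, width), dtype=np.float64)
    count = np.zeros((height, width), dtype=np.float64)
    for top in range(0, height, step):
        for left in range(0, width, step):
            bottom = min(top + tile, height)
            right = min(left + tile, width)
            scores = predict_fn(image[top:bottom, left:right])
            score_sum[top:bottom, left:right] += scores
            count[top:bottom, left:right] += 1.0
    # Every pixel is covered by at least one tile, so count is never zero.
    return score_sum / count


# Toy check with a pixel-wise "model": tiling must not change the result.
rng = np.random.default_rng(0)
image = rng.random((100, 70, 3))
scores = predict_in_tiles(image, lambda t: t.mean(axis=-1), tile=32, overlap=8)
```

For a real network with a spatial receptive field, the tile-border predictions differ from the interior ones, which is why the overlap is needed; plain averaging is only one of several possible blending schemes.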

7.2. Unsupervised AI

- Labeled samples for training efficient AI models are scarce, and in the past few years many researchers have devoted great efforts to this problem and have consistently produced impressive results. New unsupervised AI techniques are constantly emerging, including GANs, transfer learning, and autoencoders (AEs), as summarized in Section 4.4. Although these techniques alleviate the lack of samples to a certain extent, there is still room for improvement;

- Change detection is generally regarded as a low-likelihood problem (i.e., the unchanged area in the change map is much larger than the changed area), with uncertainty in the location and direction of change. Current unsupervised AI techniques do not easily solve this problem because they lack prior knowledge. Short of fully supervised AI, weakly and semi-supervised AI techniques are feasible solutions, but further research is needed to improve their performance. Nevertheless, purely unsupervised AI techniques for change detection should be the ultimate goal;

- One of the reasons for studying unsupervised AI techniques is the lack of training samples, i.e., prior knowledge. Considering that the Internet has entered the Web 2.0 era (emphasizing user-generated content, ease of use, participatory culture, and interoperability for end users), using crowd-sourced data as a priori knowledge is a good alternative solution. For example, OpenStreetMap [32], a free wiki world map, can provide a large amount of annotation data labeled by volunteers for the training of AI models. Although the label precision of some crowd-sourced data is not high, the AI model can still be trained in a weakly supervised manner to achieve change detection.
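The imbalance between unchanged and changed pixels noted above also affects training whenever some labels are available. The following is a minimal sketch of a class-weighted binary cross-entropy in NumPy. The weighting scheme is a common generic remedy that we assume here for illustration, not a technique attributed to the reviewed works, and the name `weighted_bce` is hypothetical.

```python
import numpy as np


def weighted_bce(prob_changed, label, eps=1e-7):
    """Binary cross-entropy with inverse-frequency class weights.

    prob_changed: predicted probability of change per pixel, in (0, 1).
    label: ground truth, 1 = changed, 0 = unchanged.
    """
    label = label.astype(np.float64)
    p = np.clip(prob_changed, eps, 1.0 - eps)
    changed_fraction = label.mean()
    w_changed = 1.0 - changed_fraction  # the rare class gets the larger weight
    w_unchanged = changed_fraction
    loss = -(w_changed * label * np.log(p)
             + w_unchanged * (1.0 - label) * np.log(1.0 - p))
    return loss.mean()


# Toy label map: 1 changed pixel out of 100.
label = np.zeros((10, 10), dtype=np.uint8)
label[4, 4] = 1

missed = np.full((10, 10), 0.1)  # predicts "unchanged" everywhere
found = missed.copy()
found[4, 4] = 0.9                # detects the change ...
found[0, 0] = 0.9                # ... at the cost of one false alarm

loss_missed = weighted_bce(missed, label)
loss_found = weighted_bce(found, label)
```

With these weights, missing the single changed pixel costs more than raising one false alarm, which counteracts the tendency to predict "unchanged" everywhere. Note that the weights degenerate when a patch contains no changed pixels, so in practice they would be estimated over the whole training set.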

7.3. Reliability of AI

- Strategy 1: Reduce errors caused by data sources, such as using preprocessing (e.g., spectral and radiometric correction) to reduce the uncertainty of data caused by geometric errors and spectral differences, or fusing multiple data to improve the reliability of the original data, thereby improving the reliability of the change detection results. To date, there have been some studies considering the impact of registration [242] and algorithm fusion [243];

- Strategy 2: Improve the interpretability of AI models through a sub-modular model structure, which can help to understand the operation principle of the entire AI model by understanding the function of each sub-module. For example, the region-proposals component in R-CNN can be clearly understood as a generator to predict the regions of the objects;

- Strategy 4: Improve the practicality of the AI model results by integrating post-processing algorithms, such as the Markov random field [245], the conditional random field [246], and level set evolution [247], which can help remove noise points and provide accurate boundaries. This is critical for some cartographic applications;

- Strategy 5: Improve the fineness of change maps through refined detection units. By detection unit, change detection can be divided, from coarse to fine, into scene level, patch or super-pixel level, pixel level, and sub-pixel level. From the aspect of reliability, the sub-pixel level is the best choice because it alleviates the problem of mixed pixels in RS images. However, this easily leads to high computational complexity. Therefore, using different detection units for different land cover types is the best solution, which requires a well-designed AI model;

- Strategy 6: Improve the representation of change maps by detecting changes in each instance. As we introduced in Section 6, the change maps can be grouped into binary maps, one-class maps, from–to maps, and instance maps. The instance change map is more practical but still lacks research. It can provide change information for each instance, and is more reflective of real-world changes. Moreover, it can avoid the limitation of the binary map without semantic information and the restriction of the from–to map by the classification system, thereby improving the reliability of the final result.
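To make the post-processing idea in Strategy 4 concrete, the sketch below removes isolated noise points from a binary change map. It deliberately swaps in a much simpler technique than the Markov random field, conditional random field, or level set methods cited above: it drops 4-connected changed regions smaller than a minimum size. The function name `remove_small_regions` and the `min_pixels` parameter are hypothetical.

```python
from collections import deque

import numpy as np


def remove_small_regions(change_map, min_pixels):
    """Drop 4-connected changed regions with fewer than min_pixels pixels."""
    mask = change_map.astype(bool)
    cleaned = np.zeros_like(mask)
    visited = np.zeros_like(mask)
    height, width = mask.shape
    for r0 in range(height):
        for c0 in range(width):
            if not mask[r0, c0] or visited[r0, c0]:
                continue
            # Breadth-first search collects one connected region.
            region = []
            queue = deque([(r0, c0)])
            visited[r0, c0] = True
            while queue:
                r, c = queue.popleft()
                region.append((r, c))
                for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                    inside = 0 <= nr < height and 0 <= nc < width
                    if inside and mask[nr, nc] and not visited[nr, nc]:
                        visited[nr, nc] = True
                        queue.append((nr, nc))
            if len(region) >= min_pixels:
                rows, cols = zip(*region)
                cleaned[list(rows), list(cols)] = True
    return cleaned


# Toy change map: a 3 x 3 changed block plus one isolated noise pixel.
raw_map = np.zeros((6, 6), dtype=np.uint8)
raw_map[1:4, 1:4] = 1
raw_map[5, 5] = 1
clean_map = remove_small_regions(raw_map, min_pixels=4)
```

Unlike the random field models, this filter does not use the image content or refine boundaries; it only illustrates where a post-processing stage sits in the pipeline.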

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Singh, A. Digital change detection techniques using remotely sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

- Liu, S.; Marinelli, D.; Bruzzone, L.; Bovolo, F. A Review of Change Detection in Multitemporal Hyperspectral Images: Current Techniques, Applications, and Challenges. IEEE Geosci. Remote Sens. Mag. 2019, 7, 140–158.

- Tewkesbury, A.P.; Comber, A.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

- Lu, D.; Mausel, P.; Brondizio, E.; Morán, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2401.

- Chen, G.; Hay, G.J.; De Carvalho, L.M.T.; Wulder, M.A. Object-based change detection. Int. J. Remote Sens. 2012, 33, 4434–4457.

- Qin, R.; Tian, J.; Reinartz, P. 3D change detection–Approaches and applications. ISPRS J. Photogramm. Remote Sens. 2016, 122, 41–56.

- Hussain, M.; Chen, D.M.; Cheng, A.; Wei, H.; Stanley, D. Change detection from remotely sensed images: From pixel-based to object-based approaches. ISPRS J. Photogramm. Remote Sens. 2013, 80, 91–106.

- Kaplan, A.; Haenlein, M. Siri in my hand: Who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus. Horiz. 2019, 62, 15–25.

- Zhang, W.; Lu, X. The Spectral-Spatial Joint Learning for Change Detection in Multispectral Imagery. Remote Sens. 2019, 11, 240.

- Fang, B.; Pan, L.; Kou, R. Dual Learning-Based Siamese Framework for Change Detection Using Bi-Temporal VHR Optical Remote Sensing Images. Remote Sens. 2019, 11, 1292.

- Zhong, Y.; Ma, A.; Ong, Y.-S.; Zhu, Z.; Zhang, L. Computational intelligence in optical remote sensing image processing. Appl. Soft Comput. 2018, 64, 75–93.

- Ma, L.; Liu, Y.; Zhang, X.; Ye, Y.; Yin, G.; Johnson, B.A. Deep learning in remote sensing applications: A meta-analysis and review. ISPRS J. Photogramm. Remote Sens. 2019, 152, 166–177.

- Ball, J.E.; Anderson, D.T.; Chan, C.S. Comprehensive survey of deep learning in remote sensing: Theories, tools, and challenges for the community. J. Appl. Remote Sens. 2017, 11, 1.

- Chen, H.; Wu, C.; Du, B.; Zhang, L.; Wang, L. Change Detection in Multisource VHR Images via Deep Siamese Convolutional Multiple-Layers Recurrent Neural Network. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2848–2864.

- Wang, X.; Liu, S.; Du, P.; Liang, H.; Xia, J.; Li, Y. Object-Based Change Detection in Urban Areas from High Spatial Resolution Images Based on Multiple Features and Ensemble Learning. Remote Sens. 2018, 10, 276.

- Zhang, P.; Gong, M.; Su, L.; Liu, J.; Li, Z. Change detection based on deep feature representation and mapping transformation for multi-spatial-resolution remote sensing images. ISPRS J. Photogramm. Remote Sens. 2016, 116, 24–41.

- Zhao, W.; Mou, L.; Chen, J.; Bo, Y.; Emery, W.J. Incorporating Metric Learning and Adversarial Network for Seasonal Invariant Change Detection. IEEE Trans. Geosci. Remote Sens. 2020, 58, 2720–2731.

- Zhao, J.J.; Gong, M.G.; Liu, J.; Jiao, L.C. Deep learning to classify difference image for image change detection. In Proceedings of the 2014 International Joint Conference on Neural Networks, Beijing, China, 6–11 July 2014; IEEE: New York, NY, USA, 2014; pp. 397–403.

- Ji, M.; Liu, L.; Du, R.; Buchroithner, M.F. A Comparative Study of Texture and Convolutional Neural Network Features for Detecting Collapsed Buildings After Earthquakes Using Pre- and Post-Event Satellite Imagery. Remote Sens. 2019, 11, 1202.

- Lei, T.; Zhang, Y.; Lv, Z.; Li, S.; Liu, S.; Nandi, A.K. Landslide Inventory Mapping From Bitemporal Images Using Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1–5.

- Geng, J.; Ma, X.; Zhou, X.; Wang, H. Saliency-Guided Deep Neural Networks for SAR Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 7365–7377.

- Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change Detection Based on Deep Siamese Convolutional Network for Optical Aerial Images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

- Cao, G.; Wang, B.; Xavier, H.-C.; Yang, D.; Southworth, J. A new difference image creation method based on deep neural networks for change detection in remote-sensing images. Int. J. Remote Sens. 2017, 38, 7161–7175.

- Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change Detection in Synthetic Aperture Radar Images Based on Deep Neural Networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

- TensorFlow. Available online: https://www.tensorflow.org/ (accessed on 5 May 2020).

- Keras. Available online: https://keras.io/ (accessed on 5 May 2020).

- PyTorch. Available online: https://pytorch.org/ (accessed on 5 May 2020).

- Caffe. Available online: https://caffe.berkeleyvision.org/ (accessed on 5 May 2020).

- Ghouaiel, N.; Lefevre, S. Coupling ground-level panoramas and aerial imagery for change detection. Geospat. Inf. Sci. 2016, 19, 222–232.

- Regmi, K.; Shah, M. Bridging the Domain Gap for Ground-to-Aerial Image Matching. arXiv 2019, arXiv:1904.11045.

- Kang, J.; Körner, M.; Wang, Y.; Taubenböck, H.; Zhu, X.X. Building instance classification using street view images. ISPRS J. Photogramm. Remote Sens. 2018, 145, 44–59.

- OpenStreetMap. Available online: http://www.openstreetmap.org/ (accessed on 4 May 2020).

- ISPRS Benchmarks. Available online: http://www2.isprs.org/commissions/comm3/wg4/3d-semantic-labeling.html (accessed on 4 May 2020).

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 27–30 June 2016; pp. 3213–3223.

- Baumgardner, M.F.; Biehl, L.L.; Landgrebe, D.A. 220 Band AVIRIS Hyperspectral Image Data Set: June 12, 1992 Indian Pine Test Site 3. Purdue Univ. Res. Repos. 2015, 10, R7RX991C.

- Li, X.; Yuan, Z.; Wang, Q. Unsupervised Deep Noise Modeling for Hyperspectral Image Change Detection. Remote Sens. 2019, 11, 258.

- Huang, F.; Yu, Y.; Feng, T. Hyperspectral remote sensing image change detection based on tensor and deep learning. J. Vis. Commun. Image Represent. 2019, 58, 233–244.

- Song, A.; Choi, J.; Han, Y.; Kim, Y. Change Detection in Hyperspectral Images Using Recurrent 3D Fully Convolutional Networks. Remote Sens. 2018, 10, 1827.

- Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A General End-to-End 2-D CNN Framework for Hyperspectral Image Change Detection. IEEE Trans. Geosci. Remote Sens. 2018, 57, 3–13.

- He, Y.; Weng, Q. High Spatial Resolution Remote Sensing: Data, Analysis, and Applications; CRC Press: Boca Raton, FL, USA, 2018.

- Anees, A.; Aryal, J.; O’Reilly, M.M.; Gale, T.; Wardlaw, T. A robust multi-kernel change detection framework for detecting leaf beetle defoliation using Landsat 7 ETM+ data. ISPRS J. Photogramm. Remote Sens. 2016, 122, 167–178.

- Dai, X.L.; Khorram, S. Remotely sensed change detection based on artificial neural networks. Photogramm. Eng. Remote Sens. 1999, 65, 1187–1194.

- Bruzzone, L.; Cossu, R. An RBF neural network approach for detecting land-cover transitions. In Image and Signal Processing for Remote Sensing VII; Serpico, S.B., Ed.; SPIE-Int Soc Optical Engineering: Bellingham, WA, USA, 2002; Volume 4541, pp. 223–231.

- Abuelgasim, A.; Ross, W.; Gopal, S.; Woodcock, C. Change Detection Using Adaptive Fuzzy Neural Networks. Remote Sens. Environ. 1999, 70, 208–223.

- Deilmai, B.R.; Kanniah, K.D.; Rasib, A.W.; Ariffin, A. Comparison of pixel-based and artificial neural networks classification methods for detecting forest cover changes in Malaysia. In Proceedings of the 8th International Symposium of the Digital Earth, Univ Teknologi Malaysia, Inst Geospatial Sci & Technol, Kuching, Malaysia, 26–29 August 2013; IOP Publishing Ltd.: Bristol, UK, 2014; Volume 18.

- Feldberg, I.; Netanyahu, N.S.; Shoshany, M. A neural network-based technique for change detection of linear features and its application to a Mediterranean region. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, IGARSS, Toronto, ON, Canada, 24–28 June 2002; Volume 2, pp. 1195–1197.

- Ghosh, A.; Subudhi, B.N.; Bruzzone, L. Integration of Gibbs Markov Random Field and Hopfield-Type Neural Networks for Unsupervised Change Detection in Remotely Sensed Multitemporal Images. IEEE Trans. Image Process. 2013, 22, 3087–3096.

- Ghosh, S.; Bruzzone, L.; Patra, S.; Bovolo, F.; Ghosh, A. A Context-Sensitive Technique for Unsupervised Change Detection Based on Hopfield-Type Neural Networks. IEEE Trans. Geosci. Remote Sens. 2007, 45, 778–789.

- Ghosh, S.; Patra, S.; Ghosh, A. An unsupervised context-sensitive change detection technique based on modified self-organizing feature map neural network. Int. J. Approx. Reason. 2009, 50, 37–50.

- Han, M.; Zhang, C.; Zhou, Y. Object-wise joint-classification change detection for remote sensing images based on entropy query-by fuzzy ARTMAP. GISci. Remote Sens. 2018, 55, 265–284.

- Lyu, H.; Lu, H.; Mou, L. Learning a Transferable Change Rule from a Recurrent Neural Network for Land Cover Change Detection. Remote Sens. 2016, 8, 506.

- Lyu, H.; Lu, H.; Mou, L.; Li, W.; Wright, J.S.; Li, X.; Li, X.; Zhu, X.X.; Wang, J.; Yu, L.; et al. Long-Term Annual Mapping of Four Cities on Different Continents by Applying a Deep Information Learning Method to Landsat Data. Remote Sens. 2018, 10, 471.

- Mou, L.C.; Zhu, X.X. A recurrent convolutional neural network for land cover change detection in multispectral images. In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 4363–4366.

- Neagoe, V.E.; Ciotec, A.D.; Carata, S.V. A new multispectral pixel change detection approach using pulse-coupled neural networks for change vector analysis. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016; IEEE: New York, NY, USA, 2016; pp. 3386–3389.

- Neagoe, V.E.; Stoica, R.M.; Ciurea, A.I. A modular neural network model for change detection in earth observation imagery. In Proceedings of the 2013 IEEE International Geoscience and Remote Sensing Symposium, Melbourne, Australia, 21–26 July 2013; IEEE: New York, NY, USA, 2013; pp. 3321–3324.

- Nourani, V.; Roushangar, K.; Andalib, G. An inverse method for watershed change detection using hybrid conceptual and artificial intelligence approaches. J. Hydrol. 2018, 562, 371–384.

- Patra, S.; Ghosh, S.; Ghosh, A. Unsupervised Change Detection in Remote-Sensing Images Using Modified Self-Organizing Feature Map Neural Network; IEEE Computer Soc.: Los Alamitos, CA, USA, 2007; p. 716.

- Roy, M.; Ghosh, S.; Ghosh, A. A novel approach for change detection of remotely sensed images using semi-supervised multiple classifier system. Inf. Sci. 2014, 269, 35–47.

- Roy, M.; Ghosh, S.; Ghosh, A. A Neural Approach Under Active Learning Mode for Change Detection in Remotely Sensed Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 7, 1200–1206.

- Sadeghi, V.; Ahmadi, F.F.; Ebadi, H. A new fuzzy measurement approach for automatic change detection using remotely sensed images. Measurement 2018, 127, 1–14.

- Seto, K.C.; Liu, W. Comparing ARTMAP Neural Network with the Maximum-Likelihood Classifier for Detecting Urban Change. Photogramm. Eng. Remote Sens. 2003, 69, 981–990.

- Varamesh, S. Detection of land use changes in NorthEastern Iran by Landsat satellite data. Appl. Ecol. Environ. Res. 2017, 15, 1443–1454.

- Woodcock, C.E.; Macomber, S.A.; Pax-Lenney, M.; Cohen, W.B. Monitoring large areas for forest change using Landsat: Generalization across space, time and Landsat sensors. Remote Sens. Environ. 2001, 78, 194–203.

- Mou, L.; Bruzzone, L.; Zhu, X.X. Learning Spectral-Spatial-Temporal Features via a Recurrent Convolutional Neural Network for Change Detection in Multispectral Imagery. IEEE Trans. Geosci. Remote Sens. 2018, 57, 924–935.

- Li, X.; Ling, F.; Du, Y.; Feng, Q.; Zhang, Y. A spatial–temporal Hopfield neural network approach for super-resolution land cover mapping with multi-temporal different resolution remotely sensed images. ISPRS J. Photogramm. Remote Sens. 2014, 93, 76–87.

- Benedetti, A.; Picchiani, M.; Del Frate, F. Sentinel-1 and Sentinel-2 data fusion for urban change detection. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 1962–1965.

- Pomente, A.; Picchiani, M.; Del Frate, F. Sentinel-2 change detection based on deep features. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 6859–6862.

- Arabi, M.E.A.; Karoui, M.S.; Djerriri, K. Optical remote sensing change detection through deep siamese network. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 5041–5044.

- Chen, H.; Hua, Y.; Ren, Q.; Zhang, Y. Comprehensive analysis of regional human-driven environmental change with multitemporal remote sensing images using observed object-specified dynamic Bayesian network. J. Appl. Remote Sens. 2016, 10, 16021.

- Pacifici, F.; Del Frate, F.; Solimini, C.; Emery, W. An Innovative Neural-Net Method to Detect Temporal Changes in High-Resolution Optical Satellite Imagery. IEEE Trans. Geosci. Remote Sens. 2007, 45, 2940–2952.

- Pacifici, F.; Del Frate, F. Automatic Change Detection in Very High Resolution Images with Pulse-Coupled Neural Networks. IEEE Geosci. Remote Sens. Lett. 2009, 7, 58–62.

- Saha, S.; Bovolo, F.; Bruzzone, L. Unsupervised Deep Change Vector Analysis for Multiple-Change Detection in VHR Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3677–3693.

- Larabi, M.E.A.; Chaib, S.; Bakhti, K.; Hasni, K.; Bouhlala, M.A. High-resolution optical remote sensing imagery change detection through deep transfer learning. J. Appl. Remote Sens. 2019, 13, 18.

- Liu, R.; Cheng, Z.; Zhang, L.; Li, J. Remote Sensing Image Change Detection Based on Information Transmission and Attention Mechanism. IEEE Access 2019, 7, 156349–156359.

- Han, M.; Chang, N.-B.; Yao, W.; Chen, L.-C.; Xu, S. Change detection of land use and land cover in an urban region with SPOT-5 images and partial Lanczos extreme learning machine. J. Appl. Remote Sens. 2010, 4, 43551.

- Nemmour, H.; Chibani, Y. Neural Network Combination by Fuzzy Integral for Robust Change Detection in Remotely Sensed Imagery. EURASIP J. Adv. Signal Process. 2005, 2005, 2187–2195.

- Nemmour, H.; Chibani, Y. Fuzzy neural network architecture for change detection in remotely sensed imagery. Int. J. Remote Sens. 2006, 27, 705–717.

- Peng, D.; Guan, H. Unsupervised change detection method based on saliency analysis and convolutional neural network. J. Appl. Remote Sens. 2019, 13, 024512.

- Zhang, P.; Gong, M.; Zhang, H.; Liu, J.; Ban, Y. Unsupervised Difference Representation Learning for Detecting Multiple Types of Changes in Multitemporal Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2018, 57, 2277–2289.

- Fan, J.; Lin, K.; Han, M. A Novel Joint Change Detection Approach Based on Weight-Clustering Sparse Autoencoders. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 685–699.

- Bai, T.; Sun, K.; Deng, S.; Chen, Y. Comparison of four machine learning methods for object-oriented change detection in high-resolution satellite imagery. In MIPPR 2017: Remote Sensing Image Processing, Geographic Information Systems, and Other Applications; Sang, N., Ma, J., Chen, Z., Eds.; SPIE-Int Soc Optical Engineering: Bellingham, WA, USA, 2018; Volume 10611.

- Saha, S.; Bovolo, F.; Bruzzone, L. Unsupervised multiple-change detection in VHR optical images using deep features. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 1902–1905.

- Gong, M.; Yang, Y.; Zhan, T.; Niu, X.; Liu, C. A Generative Discriminatory Classified Network for Change Detection in Multispectral Imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 321–333.

- Gong, M.; Zhan, T.; Zhang, P.; Miao, Q. Superpixel-Based Difference Representation Learning for Change Detection in Multispectral Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2658–2673.

- Huang, F.; Yu, Y.; Feng, T. Automatic building change image quality assessment in high resolution remote sensing based on deep learning. J. Vis. Commun. Image Represent. 2019, 63, 10. [Google Scholar] [CrossRef]

- Nemoto, K.; Imaizumi, T.; Hikosaka, S.; Hamaguchi, R.; Sato, M.; Fujita, A. Building change detection via a combination of CNNs using only RGB aerial imageries. Remote Sens. Technol. Appl. Urban Environ. 2017, 10431. [Google Scholar] [CrossRef]

- Han, P.; Ma, C.; Li, Q.; Leng, P.; Bu, S.; Li, K. Aerial image change detection using dual regions of interest networks. Neurocomputing 2019, 349, 190–201. [Google Scholar] [CrossRef]

- Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction from an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2018, 57, 574–586. [Google Scholar] [CrossRef]

- Ji, S.; Shen, Y.; Lu, M.; Zhang, Y. Building Instance Change Detection from Large-Scale Aerial Images using Convolutional Neural Networks and Simulated Samples. Remote Sens. 2019, 11, 1343. [Google Scholar] [CrossRef] [Green Version]

- Sun, B.; Li, G.-Z.; Han, M.; Lin, Q.-H. A deep learning approach to detecting changes in buildings from aerial images. In Proceedings of the International Symposium on Neural Networks, Moscow, Russia, 10–12 July 2019; pp. 414–421. [Google Scholar]

- Zhang, Z.; Vosselman, G.; Gerke, M.; Persello, C.; Tuia, D.; Yang, M.Y. Detecting Building Changes between Airborne Laser Scanning and Photogrammetric Data. Remote Sens. 2019, 11, 2417. [Google Scholar] [CrossRef] [Green Version]

- Fujita, A.; Sakurada, K.; Imaizumi, T.; Ito, R.; Hikosaka, S.; Nakamura, R. Damage detection from aerial images via convolutional neural networks. In Proceedings of the 2017 Fifteenth IAPR International Conference on Machine Vision Applications (MVA), Nagoya Univ, Nagoya, Japan, 08–12 May 2017; pp. 5–8. [Google Scholar]

- Fang, B.; Chen, G.; Pan, L.; Kou, R.; Wang, L. GAN-Based Siamese Framework for Landslide Inventory Mapping Using Bi-Temporal Optical Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2020, 1–5. [Google Scholar] [CrossRef]

- Jiang, H.; Hu, X.; Li, K.; Zhang, J.; Gong, J.; Zhang, M. PGA-SiamNet: Pyramid Feature-Based Attention-Guided Siamese Network for Remote Sensing Orthoimagery Building Change Detection. Remote Sens. 2020, 12, 484. [Google Scholar] [CrossRef] [Green Version]

- Wiratama, W.; Sim, D. Fusion Network for Change Detection of High-Resolution Panchromatic Imagery. Appl. Sci. 2019, 9, 1441. [Google Scholar] [CrossRef] [Green Version]

- Chan, Y.K.; Koo, V.C. An introduction to synthetic aperture radar (SAR). Prog. Electromagn. Res. B 2008, 2, 27–60. [Google Scholar] [CrossRef] [Green Version]

- De, S.; Pirrone, D.; Bovolo, F.; Bruzzone, L.; Bhattacharya, A. A novel change detection framework based on deep learning for the analysis of multi-temporal polarimetric SAR images. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium, Fort Worth, TX, USA, 23–28 July 2017; IEEE: New York, NY, USA, 2017; pp. 5193–5196. [Google Scholar]

- Chen, H.; Jiao, L.; Liang, M.; Liu, F.; Yang, S.; Hou, B. Fast unsupervised deep fusion network for change detection of multitemporal SAR images. Neurocomputing 2019, 332, 56–70. [Google Scholar] [CrossRef]

- Geng, J.; Wang, H.Y.; Fan, J.C.; Ma, X.R. Change Detection of SAR Images Based on Supervised Contractive Autoencoders and Fuzzy Clustering. In Proceedings of the International Workshop on Remote Sensing with Intelligent Processing (RSIP), Shang Hai, China, 18–21 May 2017; IEEE: New York, NY, USA, 2017. [Google Scholar]

- Gong, M.; Yang, H.; Zhang, P. Feature learning and change feature classification based on deep learning for ternary change detection in SAR images. ISPRS J. Photogramm. Remote Sens. 2017, 129, 212–225. [Google Scholar] [CrossRef]

- Lei, Y.; Liu, X.; Shi, J.; Lei, C.; Wang, J. Multiscale Superpixel Segmentation with Deep Features for Change Detection. IEEE Access 2019, 7, 36600–36616. [Google Scholar] [CrossRef]

- Li, Y.Y.; Zhou, L.H.; Peng, C.; Jiao, L.C. Spatial fuzzy clustering and deep auto-encoder for unsupervised change detection in synthetic aperture radar images. In Proceedings of the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; IEEE: New York, NY, USA, 2018; pp. 4479–4482. [Google Scholar]

- Lv, N.; Chen, C.; Qiu, T.; Sangaiah, A.K. Deep Learning and Superpixel Feature Extraction Based on Contractive Autoencoder for Change Detection in SAR Images. IEEE Trans. Ind. Inform. 2018, 14, 5530–5538. [Google Scholar] [CrossRef]

- Planinšič, P.; Gleich, D. Temporal Change Detection in SAR Images Using Log Cumulants and Stacked Autoencoder. IEEE Geosci. Remote Sens. Lett. 2018, 15, 297–301. [Google Scholar] [CrossRef]

- Su, L.; Cao, X. Fuzzy autoencoder for multiple change detection in remote sensing images. J. Appl. Remote Sens. 2018, 12, 035014. [Google Scholar] [CrossRef]

- Su, L.Z.; Shi, J.; Zhang, P.Z.; Wang, Z.; Gong, M.G. Detecting multiple changes from multi-temporal images by using stacked denosing autoencoder based change vector analysis. In Proceedings of the 2016 International Joint Conference on Neural Networks, Vancouver, Canada, 24–29 July 2016; IEEE: New York, NY, USA, 2016; pp. 1269–1276. [Google Scholar]

- Luo, B.; Hu, C.; Su, X.; Wang, Y. Differentially Deep Subspace Representation for Unsupervised Change Detection of SAR Images. Remote Sens. 2019, 11, 2740. [Google Scholar] [CrossRef] [Green Version]

- Dong, H.; Ma, W.; Wu, Y.; Gong, M.; Jiao, L. Local Descriptor Learning for Change Detection in Synthetic Aperture Radar Images via Convolutional Neural Networks. IEEE Access 2018, 7, 15389–15403. [Google Scholar] [CrossRef]

- Liu, T.; Li, Y.; Cao, Y.; Shen, Q. Change detection in multitemporal synthetic aperture radar images using dual-channel convolutional neural network. J. Appl. Remote Sens. 2017, 11, 1. [Google Scholar] [CrossRef] [Green Version]

- Saha, S.; Bovolo, F.; Bruzzone, L. Destroyed-buildings detection from VHR SAR images using deep features. In Image and Signal Processing for Remote Sensing aXxiv; Bruzzone, L., Bovolo, F., Eds.; Spie-Int Soc Optical Engineering: Bellingham, WA, USA, 2018; Volume 10789. [Google Scholar]

- Li, Y.; Peng, C.; Chen, Y.; Jiao, L.; Zhou, L.; Shang, R. A Deep Learning Method for Change Detection in Synthetic Aperture Radar Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5751–5763. [Google Scholar] [CrossRef]

- Jaturapitpornchai, R.; Matsuoka, M.; Kanemoto, N.; Kuzuoka, S.; Ito, R.; Nakamura, R. Newly Built Construction Detection in SAR Images Using Deep Learning. Remote Sens. 2019, 11, 1444. [Google Scholar] [CrossRef] [Green Version]

- Cui, B.; Zhang, Y.; Yan, L.; Wei, J.; Wu, H. An Unsupervised SAR Change Detection Method Based on Stochastic Subspace Ensemble Learning. Remote Sens. 2019, 11, 1314. [Google Scholar] [CrossRef] [Green Version]

- Liu, F.; Jiao, L.; Tang, X.; Yang, S.; Ma, W.; Hou, B. Local Restricted Convolutional Neural Network for Change Detection in Polarimetric SAR Images. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 818–833. [Google Scholar] [CrossRef]

- Guo, E.; Fu, X.; Zhu, J.; Deng, M.; Liu, Y.; Zhu, Q.; Li, H. Learning to measure change: Fully convolutional Siamese metric networks for scene change detection. arXiv 2018, arXiv:1810.09111. [Google Scholar]

- Huélamo, C.G.; Alcantarilla, P.F.; Bergasa, L.M.; López-Guillén, E. Change detection tool based on GSV to help DNNs training. In Proceedings of the Workshop of Physical Agents, Madrid, Spain, 22–23 November 2018; pp. 115–131. [Google Scholar]

- Varghese, A.; Gubbi, J.; Ramaswamy, A.; Balamuralidhar, P. ChangeNet: A deep learning architecture for visual change detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 129–145. [Google Scholar]

- Sakurada, K.; Wang, W.; Kawaguchi, N.; Nakamura, R. Dense optical flow based change detection network robust to difference of camera viewpoints. arXiv 2017, arXiv:1712.02941. [Google Scholar]

- Sakurada, K.; Okatani, T. Change detection from a street image pair using CNN features and superpixel segmentation. In Proceedings of the British Machine Vision Conference (BMVC), Swansea, UK, 7–10 September 2015; pp. 61.1–61.12. [Google Scholar]

- Bu, S.; Li, Q.; Han, P.; Leng, P.; Li, K. Mask-CDNet: A mask based pixel change detection network. Neurocomputing 2020, 378, 166–178. [Google Scholar] [CrossRef]

- Liu, J.; Gong, M.; Qin, A.; Zhang, P. A Deep Convolutional Coupling Network for Change Detection Based on Heterogeneous Optical and Radar Images. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 545–559. [Google Scholar] [CrossRef]

- Zhan, T.; Gong, M.; Jiang, X.; Li, S. Log-Based Transformation Feature Learning for Change Detection in Heterogeneous Images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1352–1356. [Google Scholar] [CrossRef]

- Zhan, T.; Gong, M.; Liu, J.; Zhang, P. Iterative feature mapping network for detecting multiple changes in multi-source remote sensing images. ISPRS J. Photogramm. Remote Sens. 2018, 146, 38–51. [Google Scholar] [CrossRef]

- Ma, W.; Xiong, Y.; Wu, Y.; Yang, H.; Zhang, X.-R.; Jiao, L. Change Detection in Remote Sensing Images Based on Image Mapping and a Deep Capsule Network. Remote Sens. 2019, 11, 626. [Google Scholar] [CrossRef] [Green Version]

- Yang, M.; Jiao, L.; Liu, F.; Hou, B.; Yang, S. Transferred Deep Learning-Based Change Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6960–6973. [Google Scholar] [CrossRef]

- Gong, M.; Niu, X.; Zhan, T.; Zhang, M. A coupling translation network for change detection in heterogeneous images. Int. J. Remote Sens. 2018, 40, 3647–3672. [Google Scholar] [CrossRef]

- Niu, X.; Gong, M.; Zhan, T.; Yang, Y. A Conditional Adversarial Network for Change Detection in Heterogeneous Images. IEEE Geosci. Remote Sens. Lett. 2018, 16, 45–49. [Google Scholar] [CrossRef]

- Zhang, C.; Wei, S.; Ji, S.; Lu, M. Detecting Large-Scale Urban Land Cover Changes from Very High Resolution Remote Sensing Images Using CNN-Based Classification. ISPRS Int. J. Geo Inf. 2019, 8, 189. [Google Scholar] [CrossRef] [Green Version]

- Chen, Z.; Zhang, Y.; Ouyang, C.; Zhang, F.; Ma, J. Automated Landslides Detection for Mountain Cities Using Multi-Temporal Remote Sensing Imagery. Sensors 2018, 18, 821. [Google Scholar] [CrossRef] [Green Version]

- Huang, D.M.; Wei, C.T.; Yu, J.C.; Wang, J.L. A method of detecting land use change of remote sensing images based on texture features and DEM. In Proceedings of the International Conference on Intelligent Earth Observing and Applications, Guilin, China, 23–24 October 2015; Zhou, G., Kang, C., Eds.; Spie-Int Soc Optical Engineering: Bellingham, WA, USA, 2015; Volume 9808. [Google Scholar]

- Iino, S.; Ito, R.; Doi, K.; Imaizumi, T.; Hikosaka, S. CNN-based generation of high-accuracy urban distribution maps utilising SAR satellite imagery for short-term change monitoring. Int. J. Image Data Fusion 2018, 9, 302–318. [Google Scholar] [CrossRef]

- Goyette, N.; Jodoin, P.-M.; Porikli, F.; Konrad, J.; Ishwar, P. Changedetection. net: A new change detection benchmark dataset. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; pp. 1–8. [Google Scholar]

- Wang, Y.; Jodoin, P.-M.; Porikli, F.; Konrad, J.; Benezeth, Y.; Ishwar, P. CDnet 2014: An Expanded Change Detection Benchmark Dataset. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 23–28 June 2014; pp. 393–400. [Google Scholar]

- Goyette, N.; Jodoin, P.-M.; Porikli, F.; Konrad, J.; Ishwar, P. A Novel Video Dataset for Change Detection Benchmarking. IEEE Trans. Image Process. 2014, 23, 4663–4679. [Google Scholar] [CrossRef]

- Hyperspectral Change Detection Dataset. Available online: https://citius.usc.es/investigacion/datasets/hyperspectral-change-detection-dataset (accessed on 4 May 2020).

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Multitask learning for large-scale semantic change detection. Comput. Vis. Image Underst. 2019, 187, 102783. [Google Scholar] [CrossRef] [Green Version]

- Benedek, C.; Sziranyi, T. Change Detection in Optical Aerial Images by a Multilayer Conditional Mixed Markov Model. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3416–3430. [Google Scholar] [CrossRef] [Green Version]

- Benedek, C.; Sziranyi, T. A Mixed Markov model for change detection in aerial photos with large time differences. In Proceedings of the 2008 19th International Conference on Pattern Recognition, Tampa, FL, USA, 8–11 December 2008; pp. 1–4. [Google Scholar]

- Daudt, R.C.; Le Saux, B.; Boulch, A.; Gousseau, Y. Urban change detection for multispectral earth observation using convolutional neural networks. In Proceedings of the IGARSS 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 2115–2118. [Google Scholar]

- Zhang, M.; Shi, W. A Feature Difference Convolutional Neural Network-Based Change Detection Method. IEEE Trans. Geosci. Remote Sens. 2020, 1–15. [Google Scholar] [CrossRef]

- Wu, C.; Zhang, L.; Zhang, L. A scene change detection framework for multi-temporal very high resolution remote sensing images. Signal Process. 2016, 124, 184–197. [Google Scholar] [CrossRef]

- Gupta, R.; Goodman, B.; Patel, N.; Hosfelt, R.; Sajeev, S.; Heim, E.; Doshi, J.; Lucas, K.; Choset, H.; Gaston, M. Creating xBD: A dataset for assessing building damage from satellite imagery. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019; pp. 10–17. [Google Scholar]

- Bourdis, N.; Marraud, D.; Sahbi, H. Constrained optical flow for aerial image change detection. In Proceedings of the 2011 IEEE International Geoscience and Remote Sensing Symposium, Vancouver, BC, Canada, 24–29 July 2011; pp. 4176–4179. [Google Scholar] [CrossRef] [Green Version]

- Lebedev, M.A.; Vizilter, Y.V.; Vygolov, O.V.; Knyaz, V.A.; Rubis, A.Y. Change detection in remote sensing images using conditional adversarial networks. ISPRS Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, 565–571. [Google Scholar] [CrossRef] [Green Version]

- Alcantarilla, P.F.; Stent, S.; Ros, G.; Arroyo, R.; Gherardi, R. Street-view change detection with deconvolutional networks. Auton. Robot. 2018, 42, 1301–1322. [Google Scholar] [CrossRef]

- Sakurada, K.; Okatani, T.; Deguchi, K. Detecting changes in 3D structure of a scene from multi-view images captured by a vehicle-mounted camera. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 137–144. [Google Scholar]

- Zagoruyko, S.; Komodakis, N. Learning to compare image patches via convolutional neural networks. In Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; IEEE: New York, NY, USA, 2015; pp. 4353–4361. [Google Scholar]

- Liu, J.; Gong, M.; Zhao, J.; Li, H.; Jiao, L. Difference representation learning using stacked restricted Boltzmann machines for change detection in SAR images. Soft Comput. 2014, 20, 4645–4657. [Google Scholar] [CrossRef]

- Aghababaee, H.; Amini, J.; Tzeng, Y. Improving change detection methods of SAR images using fractals. Sci. Iran. 2013, 20, 15–22. [Google Scholar] [CrossRef] [Green Version]

- Gopal, S.; Woodcock, C. Remote sensing of forest change using artificial neural networks. IEEE Trans. Geosci. Remote Sens. 1996, 34, 398–404. [Google Scholar] [CrossRef]

- Xu, J.; Zhang, B.; Guo, H.; Lu, J.; Lin, Y. Combining iterative slow feature analysis and deep feature learning for change detection in high-resolution remote sensing images. J. Appl. Remote Sens. 2019, 13, 024506. [Google Scholar] [CrossRef]

- Touazi, A.; Bouchaffra, D. A k-nearest neighbor approach to improve change detection from remote sensing: Application to optical aerial images. In Proceedings of the 2015 15th International Conference on Intelligent Systems Design and Applications, Marrakech, Morocco, 14–16 December 2015; Abraham, A., Alimi, A.M., Haqiq, A., Barbosa, L.O., BenAmar, C., Berqia, A., BenHalima, M., Muda, A.M., Ma, K., Eds.; IEEE: New York, NY, USA, 2015; pp. 98–103. [Google Scholar]

- Gao, Y.; Gao, F.; Dong, J.; Wang, S. Change Detection from Synthetic Aperture Radar Images Based on Channel Weighting-Based Deep Cascade Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4517–4529. [Google Scholar] [CrossRef]

- Keshk, H.; Yin, X.-C. Change Detection in SAR Images Based on Deep Learning. Int. J. Aeronaut. Space Sci. 2019, 1–11. [Google Scholar] [CrossRef]

- Zhang, M.; Xu, G.; Chen, K.; Yan, M.; Sun, X. Triplet-Based Semantic Relation Learning for Aerial Remote Sensing Image Change Detection. IEEE Geosci. Remote Sens. Lett. 2018, 16, 266–270. [Google Scholar] [CrossRef]

- Hedjam, R.; Abdesselam, A.; Melgani, F. Change detection from unlabeled remote sensing images using siamese ANN. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1530–1533. [Google Scholar]

- Chu, Y.; Cao, G.; Hayat, H. Change detection of remote sensing image based on deep neural networks. In Proceedings of the 2016 2nd International Conference on Artificial Intelligence and Industrial Engineering, Beijing, China, 20–11 November 2016; Sehiemy, R.E., Reaz, M.B.I., Eds.; Atlantis Press: Paris, France, 2016; Volume 133, pp. 262–267. [Google Scholar]

- Wiratama, W.; Lee, J.; Park, S.-E.; Sim, D. Dual-Dense Convolution Network for Change Detection of High-Resolution Panchromatic Imagery. Appl. Sci. 2018, 8, 1785. [Google Scholar] [CrossRef] [Green Version]

- Nguyen, T.P.; Pham, C.C.; Ha, S.V.-U.; Jeon, J.W. Change Detection by Training a Triplet Network for Motion Feature Extraction. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 433–446. [Google Scholar] [CrossRef]

- Su, L.; Gong, M.; Zhang, P.; Zhang, M.; Liu, J.; Yang, H. Deep learning and mapping based ternary change detection for information unbalanced images. Pattern Recognit. 2017, 66, 213–228. [Google Scholar] [CrossRef]

- Ye, Q.; Lu, X.; Huo, H.; Wan, L.; Guo, Y.; Fang, T. AggregationNet: Identifying multiple changes based on convolutional neural network in bitemporal optical remote sensing images. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Macau, China, 14–17 April 2019; pp. 375–386. [Google Scholar]

- Du, B.; Ru, L.; Wu, C.; Zhang, L. Unsupervised Deep Slow Feature Analysis for Change Detection in Multi-Temporal Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9976–9992. [Google Scholar] [CrossRef] [Green Version]

- Wu, C.; Chen, H.; Do, B.; Zhang, L. Unsupervised change detection in multi-temporal VHR images based on deep kernel PCA convolutional mapping network. arXiv 2019, arXiv:1912.08628. [Google Scholar]

- Rahman, F.; Vasu, B.; Van Cor, J.; Kerekes, J.; Savakis, A. Siamese network with multi-level features for patch-based change detection in satellite imagery. In Proceedings of the 2018 IEEE Global Conference on Signal and Information Processing, Anaheim, CA, USA, 26–29 November 2018; IEEE: New York, NY, USA, 2018; pp. 958–962. [Google Scholar]

- Chen, H.; Wu, C.; Du, B.; Zhang, L. Deep siamese multi-scale convolutional network for change detection in multi-temporal VHR images. In Proceedings of the 2019 10th International Workshop on the Analysis of Multitemporal Remote Sensing Images (MultiTemp), Shanghai, China, 5–7 August 2019; pp. 1–4. [Google Scholar]

- Wang, M.; Tan, K.; Jia, X.; Wang, X.; Chen, Y. A Deep Siamese Network with Hybrid Convolutional Feature Extraction Module for Change Detection Based on Multi-sensor Remote Sensing Images. Remote Sens. 2020, 12, 205. [Google Scholar] [CrossRef] [Green Version]

- Lim, K.; Jin, D.; Kim, C.-S. Change detection in high resolution satellite images using an ensemble of convolutional neural networks. In Proceedings of the 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; pp. 509–515. [Google Scholar]

- El Amin, A.M.; Liu, Q.; Wang, Y. Zoom out CNNs Features for Optical Remote Sensing Change Detection. In Proceedings of the 2017 2nd International Conference on Image, Vision and Computing (ICIVC), Chengdu, China, 2–4 June 2017; pp. 812–817. [Google Scholar]

- Liu, J.; Chen, K.; Xu, G.; Sun, X.; Yan, M.; Diao, W.; Han, H. Convolutional Neural Network-Based Transfer Learning for Optical Aerial Images Change Detection. IEEE Geosci. Remote Sens. Lett. 2020, 17, 127–131. [Google Scholar] [CrossRef]

- Kerner, H.R.; Wagstaff, K.L.; Bue, B.D.; Gray, P.C.; Bell, J.F.; Ben Amor, H.; Iii, J.F.B. Toward Generalized Change Detection on Planetary Surfaces with Convolutional Autoencoders and Transfer Learning. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 3900–3918. [Google Scholar] [CrossRef]

- Gao, Y.; Gao, F.; Dong, J.; Wang, S. Transferred Deep Learning for Sea Ice Change Detection from Synthetic-Aperture Radar Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1655–1659. [Google Scholar] [CrossRef]

- Wang, Y.; Du, B.; Ru, L.; Wu, C.; Luo, H. Scene change detection via deep convolution canonical correlation analysis neural network. In Proceedings of the IGARSS 2019—2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 198–201. [Google Scholar]

- Hou, B.; Wang, Y.; Liu, Q. Change Detection Based on Deep Features and Low Rank. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2418–2422. [Google Scholar] [CrossRef]

- El Amin, A.M.; Liu, Q.; Wang, Y. Convolutional neural network features based change detection in satellite images. In Froceedings of the First International Workshop on Pattern Recognition, Tokyo, Japan, 11–13 May 2016; Jiang, X., Chen, G., Capi, G., Ishii, C., Eds.; Spie-Int Soc Optical Engineering: Bellingham, WA, USA, 2016; Volume 0011. [Google Scholar]

- Cao, C.; Dragićević, S.; Li, S. Land-Use Change Detection with Convolutional Neural Network Methods. Environments 2019, 6, 25. [Google Scholar] [CrossRef] [Green Version]

- Wu, C.; Zhang, L.; Du, B. Kernel Slow Feature Analysis for Scene Change Detection. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2367–2384. [Google Scholar] [CrossRef]

- Ghaffarian, S.; Kerle, N.; Pasolli, E.; Arsanjani, J.J. Post-Disaster Building Database Updating Using Automated Deep Learning: An Integration of Pre-Disaster OpenStreetMap and Multi-Temporal Satellite Data. Remote Sens. 2019, 11, 2427. [Google Scholar] [CrossRef] [Green Version]

- Gao, F.; Dong, J.; Li, B.; Xu, Q. Automatic Change Detection in Synthetic Aperture Radar Images Based on PCANet. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1–5. [Google Scholar] [CrossRef]

- Gao, F.; Liu, X.; Dong, J.; Zhong, G.; Jian, M. Change Detection in SAR Images Based on Deep Semi-NMF and SVD Networks. Remote Sens. 2017, 9, 435. [Google Scholar] [CrossRef] [Green Version]

- Li, M.; Lia, M.; Zhang, P.; Wu, Y.; Song, W.; An, L. SAR Image Change Detection Using PCANet Guided by Saliency Detection. IEEE Geosci. Remote Sens. Lett. 2018, 16, 402–406. [Google Scholar] [CrossRef]

- Liao, F.; Koshelev, E.; Milton, M.; Jin, Y.; Lu, E. Change detection by deep neural networks for synthetic aperture radar images. In Proceedings of the 2017 International Conference on Computing, Networking and Communications (ICNC), Santa Clara, CA, USA, 26–29 January 2017; pp. 947–951. [Google Scholar]

- Zhao, Q.N.; Gong, M.G.; Li, H.; Zhan, T.; Wang, Q. Three-class change detection in synthetic aperture radar images based on deep belief network. In Bio-Inspired Computing—Theories and Applications, Bic-Ta 2015; Gong, M., Pan, L., Song, T., Tang, K., Zhang, X., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; Volume 562, pp. 696–705. [Google Scholar]

- Samadi, F.; Akbarizadeh, G.; Kaabi, H. Change detection in SAR images using deep belief network: A new training approach based on morphological images. IET Image Process. 2019, 13, 2255–2264. [Google Scholar] [CrossRef]

- Zhao, W.; Wang, Z.; Gong, M.; Liu, J. Discriminative Feature Learning for Unsupervised Change Detection in Heterogeneous Images Based on a Coupled Neural Network. IEEE Trans. Geosci. Remote Sens. 2017, 55, 7066–7080. [Google Scholar] [CrossRef]

- Daudt, R.C.; Saux, B.L.; Boulch, A.; Gousseau, Y. Guided anisotropic diffusion and iterative learning for weakly supervised change detection. arXiv 2019, arXiv:1904.08208. [Google Scholar]

- Connors, C.; Vatsavai, R.R. Semi-supervised deep generative models for change detection in very high resolution imagery. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium, Fort Worth, TX, USA, 23–28 July 2017; pp. 1063–1066. [Google Scholar]

- Li, H.-C.; Yang, G.; Yang, W.; Du, Q.; Emery, W.J. Deep nonsmooth nonnegative matrix factorization network with semi-supervised learning for SAR image change detection. ISPRS J. Photogramm. Remote Sens. 2020, 160, 167–179. [Google Scholar] [CrossRef]

- Zhang, X.; Shi, W.; Lv, Z.; Peng, F. Land Cover Change Detection from High-Resolution Remote Sensing Imagery Using Multitemporal Deep Feature Collaborative Learning and a Semi-supervised Chan–Vese Model. Remote Sens. 2019, 11, 2787. [Google Scholar] [CrossRef] [Green Version]

- Liu, G.; Li, L.; Jiao, L.; Dong, Y.; Li, X. Stacked Fisher autoencoder for SAR change detection. Pattern Recognit. 2019, 96, 106971. [Google Scholar] [CrossRef]

- Sublime, J.; Kalinicheva, E. Automatic Post-Disaster Damage Mapping Using Deep-Learning Techniques for Change Detection: Case Study of the Tohoku Tsunami. Remote Sens. 2019, 11, 1123. [Google Scholar] [CrossRef] [Green Version]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016. [Google Scholar]

- Zhu, B.; Gao, H.; Wang, X.; Xu, M.; Zhu, X. Change Detection Based on the Combination of Improved SegNet Neural Network and Morphology. In Proceedings of the 2018 IEEE 3rd International Conference on Image, Vision and Computing (ICIVC), Chong Qing, China, 27–29 June 2018; pp. 55–59. [Google Scholar]

- Peng, D.; Zhang, Y.; Guan, H. Guan End-to-End Change Detection for High Resolution Satellite Images Using Improved UNet++. Remote Sens. 2019, 11, 1382. [Google Scholar] [CrossRef] [Green Version]

- Venugopal, N. Automatic Semantic Segmentation with DeepLab Dilated Learning Network for Change Detection in Remote Sensing Images. Neural Process. Lett. 2020, 1–23. [Google Scholar] [CrossRef]

- Venugopal, N. Sample Selection Based Change Detection with Dilated Network Learning in Remote Sensing Images. Sens. Imaging: Int. J. 2019, 20, 31. [Google Scholar] [CrossRef]

- Khan, S.; He, X.; Porikli, F.; Bennamoun, M. Forest Change Detection in Incomplete Satellite Images with Deep Neural Networks. IEEE Trans. Geosci. Remote Sens. 2017, 55, 5407–5423. [Google Scholar] [CrossRef]

- Wang, Q.; Zhang, X.; Chen, G.; Dai, F.; Gong, Y.; Zhu, K. Change detection based on Faster R-CNN for high-resolution remote sensing images. Remote Sens. Lett. 2018, 9, 923–932. [Google Scholar] [CrossRef]

- Dewan, N.; Kashyap, V.; Kushwaha, A.S. A review of pulse coupled neural network. Iioab J. 2019, 10, 61–65. [Google Scholar]

- Liu, R.; Jia, Z.; Qin, X.; Yang, J.; Kasabov, N.K. SAR Image Change Detection Method Based on Pulse-Coupled Neural Network. J. Indian Soc. Remote Sens. 2016, 44, 443–450. [Google Scholar] [CrossRef]

- Pratola, C.; Del Frate, F.; Schiavon, G.; Solimini, D. Toward Fully Automatic Detection of Changes in Suburban Areas from VHR SAR Images by Combining Multiple Neural-Network Models. IEEE Trans. Geosci. Remote Sens. 2013, 51, 2055–2066. [Google Scholar] [CrossRef]

- Zhong, Y.; Liu, W.; Zhao, J.; Zhang, L. Change Detection Based on Pulse-Coupled Neural Networks and the NMI Feature for High Spatial Resolution Remote Sensing Imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 537–541. [Google Scholar] [CrossRef]

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Advances in Neural Information Processing Systems 27; Ghahramani, Z., Welling, M., Cortes, C., Lawrence, N.D., Weinberger, K.Q., Eds.; NIPS: La Jolla, CA, USA, 2014; Volume 27. [Google Scholar]

- Gong, M.; Niu, X.; Zhang, P.; Li, Z. Generative Adversarial Networks for Change Detection in Multispectral Imagery. IEEE Geosci. Remote. Sens. Lett. 2017, 14, 2310–2314. [Google Scholar] [CrossRef]

- Hou, B.; Liu, Q.; Wang, H.; Wang, Y. From W-Net to CDGAN: Bitemporal Change Detection via Deep Learning Techniques. IEEE Trans. Geosci. Remote Sens. 2020, 58, 1790–1802. [Google Scholar] [CrossRef] [Green Version]

- Wang, Q.; Shi, W.; Atkinson, P.; Li, Z. Land Cover Change Detection at Subpixel Resolution with a Hopfield Neural Network. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 8, 1–14. [Google Scholar] [CrossRef]

- Chen, K.; Huo, C.; Zhou, Z.; Lu, H. Unsupervised Change Detection in High Spatial Resolution Optical Imagery Based on Modified Hopfield Neural Network; IEEE: New York, NY, USA, 2008; pp. 281–285. [Google Scholar] [CrossRef]

- Subudhi, B.N.; Ghosh, S.; Ghosh, A. Spatial constraint hopfield-type neural networks for detecting changes in remotely sensed multitemporal images. In Proceedings of the 2013 20th IEEE International Conference on Image Processing, Melbourne, VIC, Australia, 15–18 September 2013; pp. 3815–3819. [Google Scholar]

- Wu, K.; Du, Q.; Wang, Y.; Yang, Y. Supervised Sub-Pixel Mapping for Change Detection from Remotely Sensed Images with Different Resolutions. Remote Sens. 2017, 9, 284.

- Dalmiya, C.P.; Santhi, N.; Sathyabama, B. An enhanced back propagation method for change analysis of remote sensing images with adaptive preprocessing. Eur. J. Remote Sens. 2019, 1–12.

- Castellana, L.; D’Addabbo, A.; Pasquariello, G. A composed supervised/unsupervised approach to improve change detection from remote sensing. Pattern Recognit. Lett. 2007, 28, 405–413.

- Del Frate, F.; Pacifici, F.; Solimini, D. Monitoring Urban Land Cover in Rome, Italy, and Its Changes by Single-Polarization Multitemporal SAR Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2008, 1, 87–97.

- Mirici, M.E. Land use/cover change modelling in a Mediterranean rural landscape using multi-layer perceptron and Markov chain (MLP-MC). Appl. Ecol. Environ. Res. 2018, 16, 467–486.

- Patra, S.; Ghosh, S.; Ghosh, A. Change detection of remote sensing images with semi-supervised multilayer perceptron. Fundam. Inform. 2008, 84, 429–442.

- Tarantino, C.; Blonda, P.; Pasquariello, G. Remote sensed data for automatic detection of land-use changes due to human activity in support to landslide studies. Nat. Hazards 2006, 41, 245–267.

- Chen, J.; Zheng, G.; Fang, C.; Zhang, N.; Chen, J.; Wu, Z. Time-series processing of large scale remote sensing data with extreme learning machine. Neurocomputing 2014, 128, 199–206.

- Tang, S.H.; Li, T.; Cheng, X.H. A Novel Remote Sensing Image Change Detection Algorithm Based on Self-Organizing Feature Map Neural Network Model. In Proceedings of the 2016 International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 21–22 October 2016; IEEE: New York, NY, USA, 2016; pp. 1033–1038.

- Xiao, R.; Cui, R.; Lin, M.; Chen, L.; Ni, Y.; Lin, X.; Lin, X. SOMDNCD: Image Change Detection Based on Self-Organizing Maps and Deep Neural Networks. IEEE Access 2018, 6, 35915–35925.

- Chen, X.; Li, X.W.; Ma, J.W. Urban Change Detection Based on Self-Organizing Feature Map Neural Network. In Proceedings of the 2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004; pp. 3428–3431.

- Ghosh, S.; Roy, M.; Ghosh, A. Semi-supervised change detection using modified self-organizing feature map neural network. Appl. Soft Comput. 2014, 15, 1–20.

- Patra, S.; Ghosh, S.; Ghosh, A. Unsupervised Change Detection in Remote-Sensing Images using One-dimensional Self-Organizing Feature Map Neural Network. In Proceedings of the 9th International Conference on Information Technology (ICIT’06), Bhubaneswar, India, 18–21 December 2006; pp. 141–142.

- Song, Y.; Yuan, X.; Xu, H.; Yang, Y. A novel image change detection method based on enhanced growing self-organization feature map. Geoinformatics Remote Sens. Data Inf. 2006, 6419, 641915.

- Coppin, P.; Jonckheere, I.; Nackaerts, K.; Muys, B.; Lambin, E. Review Article: Digital change detection methods in ecosystem monitoring: A review. Int. J. Remote Sens. 2004, 25, 1565–1596.

- Karpatne, A.; Jiang, Z.; Vatsavai, R.R.; Shekhar, S.; Kumar, V. Monitoring Land-Cover Changes: A Machine-Learning Perspective. IEEE Geosci. Remote Sens. Mag. 2016, 4, 8–21.

- Tomoya, M.; Kanji, T. Change Detection under Global Viewpoint Uncertainty. arXiv 2017, arXiv:1703.00552.

- Yang, G.; Li, H.-C.; Wang, W.-Y.; Yang, W.; Emery, W.J. Unsupervised Change Detection Based on a Unified Framework for Weighted Collaborative Representation with RDDL and Fuzzy Clustering. IEEE Trans. Geosci. Remote Sens. 2019, 57, 8890–8903.

- Durmusoglu, Z.; Tanriover, A. Modelling land use/cover change in Lake Mogan and surroundings using CA-Markov Chain Analysis. J. Environ. Biol. 2017, 38, 981–989.

- Fan, F.; Wang, Y.; Wang, Z. Temporal and spatial change detecting (1998–2003) and predicting of land use and land cover in Core corridor of Pearl River Delta (China) by using TM and ETM+ images. Environ. Monit. Assess. 2007, 137, 127–147.

- Tong, X.; Zhang, X.; Liu, M. Detection of urban sprawl using a genetic algorithm-evolved artificial neural network classification in remote sensing: A case study in Jiading and Putuo districts of Shanghai, China. Int. J. Remote Sens. 2010, 31, 1485–1504.

- Iino, S.; Ito, R.; Doi, K.; Imaizumi, T.; Hikosaka, S. Generating high-accuracy urban distribution map for short-term change monitoring based on convolutional neural network by utilizing SAR imagery. In Earth Resources and Environmental Remote Sensing/GIS Applications VIII; Michel, U., Schulz, K., Nikolakopoulos, K.G., Civco, D., Eds.; SPIE-Int. Soc. Optical Engineering: Bellingham, WA, USA, 2017; Volume 10428.

- Rokni, K.; Ahmad, A.; Solaimani, K.; Hazini, S. A new approach for surface water change detection: Integration of pixel level image fusion and image classification techniques. Int. J. Appl. Earth Obs. Geoinformation 2015, 34, 226–234.

- Song, A.; Kim, Y.; Kim, Y. Change Detection of Surface Water in Remote Sensing Images Based on Fully Convolutional Network. J. Coast. Res. 2019, 91, 426–430.

- Lindquist, E.; D’Annunzio, R. Assessing Global Forest Land-Use Change by Object-Based Image Analysis. Remote Sens. 2016, 8, 678.

- Ding, A.; Zhang, Q.; Zhou, X.; Dai, B. Automatic Recognition of Landslide Based on CNN and Texture Change Detection. In Proceedings of the 2016 31st Youth Academic Annual Conference of Chinese-Association-of-Automation (YAC), Wuhan, China, 11–13 November 2016; pp. 444–448.

- Singh, A.; Singh, K.K.; Nigam, M.J.; Pal, K. Detection of tsunami-induced changes using generalized improved fuzzy radial basis function neural network. Nat. Hazards 2015, 77, 367–381.

- Peng, B.; Meng, Z.; Huang, Q.; Wang, C. Patch Similarity Convolutional Neural Network for Urban Flood Extent Mapping Using Bi-Temporal Satellite Multispectral Imagery. Remote Sens. 2019, 11, 2492.

- Sakurada, K.; Tetsuka, D.; Okatani, T. Temporal city modeling using street level imagery. Comput. Vis. Image Underst. 2017, 157, 55–71.

- Wang, L. Heterogeneous Data and Big Data Analytics. Autom. Control. Inf. Sci. 2017, 3, 8–15.

- Bengio, Y.; Courville, A.C.; Vincent, P. Unsupervised Feature Learning and Deep Learning: A Review and New Perspectives. arXiv 2012, arXiv:1206.5538.

- Guidotti, R.; Monreale, A.; Ruggieri, S.; Turini, F.; Giannotti, F.; Pedreschi, D. A Survey of Methods for Explaining Black Box Models. ACM Comput. Surv. 2019, 51, 1–42.

- Dietterich, T.G. Steps Toward Robust Artificial Intelligence. AI Mag. 2017, 38, 3–24.

- Mueller, S.T.; Hoffman, R.R.; Clancey, W.; Emrey, A.; Klein, G. Explanation in human-AI systems: A literature meta-review, synopsis of key ideas and publications, and bibliography for explainable AI. arXiv 2019, arXiv:1902.01876.

- Shi, W.; Hao, M. Analysis of spatial distribution pattern of change-detection error caused by misregistration. Int. J. Remote Sens. 2013, 34, 6883–6897.

- Zhang, P.; Shi, W.; Wong, M.-S.; Chen, J. A Reliability-Based Multi-Algorithm Fusion Technique in Detecting Changes in Land Cover. Remote Sens. 2013, 5, 1134–1151.

- Bruzzone, L.; Cossu, R.; Vernazza, G. Detection of land-cover transitions by combining multidate classifiers. Pattern Recognit. Lett. 2004, 25, 1491–1500.

- He, P.; Shi, W.; Miao, Z.; Zhang, H.; Cai, L. Advanced Markov random field model based on local uncertainty for unsupervised change detection. Remote Sens. Lett. 2015, 6, 667–676.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

- Wang, Z.; Acuna, D.; Ling, H.; Kar, A.; Fidler, S. Object instance annotation with deep extreme level set evolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 7500–7508.

| Type | Data set | Description |
|---|---|---|
| Optical RS | Hyperspectral change detection dataset [135] | 3 different hyperspectral scenes acquired by the AVIRIS or HYPERION sensor, with 224 or 242 spectral bands, labeled with 5 types of changes related to crop transitions at pixel level. |
| | River HSIs dataset [39] | 2 HSIs in Jiangsu province, China, with 198 bands, labeled as changed and unchanged at pixel level. |
| | HRSCD [136] | 291 co-registered pairs of RGB aerial images, with pixel-level change and land cover annotations, providing hierarchical level change labels, for example, level 1 labels include five classes: no information, artificial surfaces, agricultural areas, forests, wetlands, and water. |
| | WHU building dataset [88] | 2-period aerial images containing 12,796 buildings, provided along with building vector and raster maps. |
| | SZTAKI Air change benchmark [137,138] | 13 aerial image pairs with 1.5 m spatial resolution, labeled as changed and unchanged at pixel level. |
| | OSCD [139] | 24 pairs of multispectral images acquired by Sentinel-2, labeled as changed and unchanged at pixel level. |
| | Change detection dataset [140] | 4 pairs of multispectral images with different spatial resolutions, labeled as changed and unchanged at pixel level. |
| | MtS-WH [141] | 2 large-size VHR images acquired by IKONOS sensors, with 4 bands and 1 m spatial resolution, labeled with 5 types of changes (i.e., parking, sparse houses, residential region, and vegetation region) at scene level. |
| | ABCD [92] | 16,950 pairs of RGB aerial images for detecting buildings washed away by a tsunami, with damaged buildings labeled at scene level. |
| | xBD [142] | Pre- and post-disaster satellite imagery for building damage assessment, with over 850,000 building polygons from 6 disaster types, labeled at pixel level with 4 damage scales. |
| | AICD [143] | 1000 pairs of synthetic aerial images with artificial changes generated with a rendering engine, labeled as changed and unchanged at pixel level. |
| | Database of synthetic and real images [144] | 24,000 synthetic images and 16,000 fragments of real season-varying RS images obtained from Google Earth, labeled as changed and unchanged at pixel level. |
| Street view | VL-CMU-CD [145] | 1362 co-registered pairs of RGB and depth images, with ground truth changes (e.g., bin, sign, vehicle, refuse, construction, traffic cone, person/cycle, barrier) and sky masks labeled at pixel level. |
| | PCD 2015 [119] | 200 panoramic image pairs in the "TSUNAMI" and "GSV" subsets, with a size of 224 × 1024 pixels, labeled as changed and unchanged at pixel level. |
| | Change detection dataset [146] | Image sequences of city streets captured by a vehicle-mounted camera at two different time points, with a size of 5000 × 2500 pixels, with 3D scene structure changes labeled at pixel level. |

| Application area | Application | Data Types |
|---|---|---|
| Urban contexts | Urban expansion | a. Satellite images [52,228] b. SAR images [229] |
| | Public space management | Street view images [117] |
| | Building change detection | a. Aerial images [86,89,90] b. Satellite images [85,192] c. Satellite/Aerial images [88,94] d. Airborne laser scanning data and aerial images [91] e. SAR images [112] f. Satellite images and GIS map [177] |
| Resources & environment | Human-driven environmental changes | Satellite images [69] |
| | Hydro-environmental changes | Satellite images [56] |
| | Sea ice | SAR images [171] |
| | Surface water | Satellite images [230,231] |
| | Forest monitoring | Satellite images [45,63,150,196,232] |
| Natural disasters | Landslide mapping | a. Aerial images [20,93] b. Satellite images [129,214,233] |
| | Damage assessment | a. Satellite images (caused by tsunami [190,234], particular incident [156], flood [235], or earthquake [19]) b. Aerial images (caused by tsunami [92]) c. SAR images (caused by fires [104], or earthquake [110]) d. Street view images (caused by tsunami [119]) e. Street view images and GIS map (caused by tsunami [236]) |
| Astronomy | Planetary surfaces | Satellite images [170] |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Shi, W.; Zhang, M.; Zhang, R.; Chen, S.; Zhan, Z. Change Detection Based on Artificial Intelligence: State-of-the-Art and Challenges. Remote Sens. 2020, 12, 1688. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12101688
