A Deep Learning Approach for Burned Area Segmentation with Sentinel-2 Data

Abstract

1. Introduction

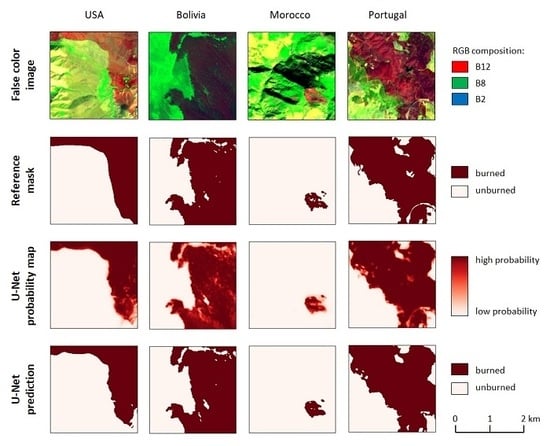

2. Materials and Methods

2.1. Data

2.2. Fully Automatic Processing Chain

2.3. Semantic Segmentation of Burned Areas with a U-Net CNN

2.4. Training of the U-Net CNN

2.5. Accuracy Assessment

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Band | Description | Central Wavelength [nm] | Bandwidth [nm] | Spatial Resolution [m] |
|---|---|---|---|---|
| B1 | Aerosol | 443 | 20 | 60 |
| B2 | Blue | 490 | 65 | 10 |
| B3 | Green | 560 | 35 | 10 |
| B4 | Red | 665 | 30 | 10 |
| B5 | Vegetation edge | 705 | 15 | 20 |
| B6 | Vegetation edge | 740 | 15 | 20 |
| B7 | Vegetation edge | 783 | 20 | 20 |
| B8 | NIR | 842 | 115 | 10 |
| B8a | Narrow NIR | 865 | 20 | 20 |
| B9 | Water vapor | 945 | 20 | 60 |
| B10 | Cirrus | 1380 | 30 | 60 |
| B11 | SWIR1 | 1610 | 90 | 20 |
| B12 | SWIR2 | 2190 | 180 | 20 |

| Test ID | Spectral Bands | OA | Kappa | Precision | Recall | Inference Time (s/megapixel) |
|---|---|---|---|---|---|---|
| BC1 | B1, B2, B3, B4, B5, B6, B7, B8, B8a, B9, B10, B11, B12 | 0.97 | 0.90 | 0.92 | 0.92 | 0.28 ± 0.06 |
| BC2 | B2, B3, B4, B5, B6, B7, B8, B8a, B11, B12 | 0.97 | 0.91 | 0.90 | 0.96 | 0.25 ± 0.04 |
| BC3 | B2, B3, B4, B8, B11, B12 | 0.96 | 0.90 | 0.92 | 0.92 | 0.23 ± 0.04 |
| BC4 | B2, B3, B4, B8 | 0.96 | 0.88 | 0.87 | 0.95 | 0.23 ± 0.04 |
| BC5 | B2, B3, B4 | 0.90 | 0.75 | 0.70 | 0.98 | 0.22 ± 0.04 |
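Each test ID in the table above corresponds to a subset of the 13 Sentinel-2 bands. The following is a minimal sketch of how such subsets could be mapped to channel indices, assuming the image channels are stacked in the order B1 to B12 as listed in the band table. That ordering and the names `BAND_ORDER`, `BAND_COMBINATIONS`, `channel_indices`, and `select_bands` are illustrative assumptions, not taken from the authors' processing chain.

```python
# Assumed channel order of the image stack (hypothetical; follows the band table).
BAND_ORDER = ["B1", "B2", "B3", "B4", "B5", "B6", "B7",
              "B8", "B8a", "B9", "B10", "B11", "B12"]

# Band subsets per test ID, copied from the band-combination table.
BAND_COMBINATIONS = {
    "BC1": list(BAND_ORDER),
    "BC2": ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8a", "B11", "B12"],
    "BC3": ["B2", "B3", "B4", "B8", "B11", "B12"],
    "BC4": ["B2", "B3", "B4", "B8"],
    "BC5": ["B2", "B3", "B4"],
}


def channel_indices(test_id):
    """Return the zero-based channel indices of one band combination."""
    return [BAND_ORDER.index(band) for band in BAND_COMBINATIONS[test_id]]


def select_bands(pixel, test_id):
    """Pick the values of one band combination from a 13-value pixel vector."""
    return [pixel[i] for i in channel_indices(test_id)]
```

For example, `channel_indices("BC3")` yields the positions of B2, B3, B4, B8, B11, and B12 in the assumed stack; the same index list could be used to slice the channel axis of an image array.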

| Model | OA | Kappa | Precision | Recall | F1 Score | Inference Time (s/megapixel) |
|---|---|---|---|---|---|---|
| U-Net | 0.98 | 0.94 | 0.95 | 0.95 | 0.95 | 2.20 ± 0.01 (0.26 ± 0.05) |
| RF | 0.96 | 0.87 | 0.93 | 0.87 | 0.90 | 3.80 ± 0.02 |
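The accuracy columns in the table above are standard measures for a binary (burned / unburned) classification. As a sketch only, they can be derived from the four confusion-matrix counts as shown below, using the textbook definitions of overall accuracy, Cohen's kappa, precision, recall, and F1 score. The function name `binary_metrics` and the example counts are hypothetical; the source does not report raw pixel counts, and the code assumes both classes occur in the reference and in the prediction.

```python
def binary_metrics(tp, fp, fn, tn):
    """Compute accuracy measures from binary confusion-matrix counts.

    tp: burned pixels predicted as burned
    fp: unburned pixels predicted as burned
    fn: burned pixels predicted as unburned
    tn: unburned pixels predicted as unburned
    """
    total = tp + fp + fn + tn
    overall_accuracy = (tp + tn) / total
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    # Agreement expected by chance, from the row and column marginals.
    expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / total ** 2
    kappa = (overall_accuracy - expected) / (1 - expected)
    return {
        "OA": overall_accuracy,
        "Kappa": kappa,
        "Precision": precision,
        "Recall": recall,
        "F1": f1,
    }


# Hypothetical counts, for illustration only.
metrics = binary_metrics(tp=40, fp=10, fn=10, tn=40)
```

Kappa corrects overall accuracy for chance agreement, so it is lower than OA when one class dominates the scene.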

| Site | OA | Kappa | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| A | 0.99 | 0.86 | 0.77 | 0.97 | 0.86 |
| B | 0.99 | 0.97 | 0.96 | 0.99 | 0.97 |
| C | 0.99 | 0.92 | 0.88 | 0.98 | 0.93 |
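The F1 scores in the table above can be cross-checked against the reported precision and recall, since F1 is their harmonic mean. The short sketch below recomputes it per site; the names `f1_score`, `SITES`, and `recomputed` are illustrative, and because the inputs are already rounded to two decimals the comparison is only meaningful at that precision.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per site, copied from the table above.
SITES = {
    "A": (0.77, 0.97, 0.86),
    "B": (0.96, 0.99, 0.97),
    "C": (0.88, 0.98, 0.93),
}

# Recompute F1 for each site, rounded to the table's two decimals.
recomputed = {site: round(f1_score(p, r), 2) for site, (p, r, _) in SITES.items()}

for site, (_, _, reported) in SITES.items():
    print(site, recomputed[site], reported)
```

For all three sites the recomputed value agrees with the reported F1 score at two decimals.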

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Knopp, L.; Wieland, M.; Rättich, M.; Martinis, S. A Deep Learning Approach for Burned Area Segmentation with Sentinel-2 Data. Remote Sens. 2020, 12, 2422. https://doi.org/10.3390/rs12152422
