An FPGA Accelerator for Real-Time Lossy Compression of Hyperspectral Images

Abstract

1. Introduction

2. The JYPEC Algorithm

2.1. Dimensionality Reduction

2.2. The JPEG2000 Algorithm

- A color transformation is applied to each pixel, converting the input color space (usually RGB) into a luminance (brightness) and chrominance (color) model. Because human vision is more sensitive to brightness than to color, the chrominance channels can be down-sampled with no perceivable loss in quality, which removes a large share of the data.

- Every channel is then subjected to a wavelet transform [42]. A wavelet transform consists of a low-pass and a high-pass filter [43], which are applied horizontally to every row and vertically to every column. This can be done in a reversible (lossless) or irreversible (lossy) way; in either case, the result is a channel partitioned into zones with different patterns, which can be compressed to a higher degree than the original data.

- After the wavelet transform, the resulting values are quantized to integers; some information is lost when the irreversible (lossy) wavelet transform is used.

- Finally, the values are encoded. The image is split into blocks of up to 4096 samples, and each block is encoded independently, exploiting local redundancies and the patterns left by the wavelet transform.
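The wavelet step described in the list above can be illustrated with the reversible LeGall 5/3 filter that JPEG2000 uses for lossless coding, computed with two integer lifting steps (predict, then update). The Python sketch below is a minimal software model, not the hardware discussed in this paper: it applies one decomposition level to the rows and then to the columns of a small integer image, uses whole-sample symmetric extension at the borders, and keeps low-pass and high-pass outputs interleaved at even and odd positions instead of reordering them into sub-bands. The function names are illustrative.

```python
def _mirror(i: int, n: int) -> int:
    """Whole-sample symmetric extension: index -1 maps to 1, index n maps to n - 2."""
    period = 2 * (n - 1)
    i %= period
    return i if i < n else period - i


def fwd53(x: list[int]) -> list[int]:
    """One level of the reversible 5/3 lifting transform (interleaved output)."""
    n = len(x)
    if n < 2:
        return list(x)
    y = list(x)
    # Predict step: odd samples become high-pass (detail) coefficients.
    for i in range(1, n, 2):
        y[i] = x[i] - (x[_mirror(i - 1, n)] + x[_mirror(i + 1, n)]) // 2
    # Update step: even samples become low-pass (approximation) coefficients.
    for i in range(0, n, 2):
        y[i] = x[i] + (y[_mirror(i - 1, n)] + y[_mirror(i + 1, n)] + 2) // 4
    return y


def inv53(y: list[int]) -> list[int]:
    """Exact inverse of fwd53: undo the update step, then the predict step."""
    n = len(y)
    if n < 2:
        return list(y)
    x = list(y)
    for i in range(0, n, 2):
        x[i] = y[i] - (y[_mirror(i - 1, n)] + y[_mirror(i + 1, n)] + 2) // 4
    for i in range(1, n, 2):
        x[i] = y[i] + (x[_mirror(i - 1, n)] + x[_mirror(i + 1, n)]) // 2
    return x


def _transpose(m: list[list[int]]) -> list[list[int]]:
    return [list(col) for col in zip(*m)]


def fwd53_2d(img: list[list[int]]) -> list[list[int]]:
    """Filter every row horizontally, then every column vertically."""
    rows = [fwd53(r) for r in img]
    return _transpose([fwd53(c) for c in _transpose(rows)])


def inv53_2d(coef: list[list[int]]) -> list[list[int]]:
    """Undo the column filtering first, then the row filtering."""
    cols = _transpose([inv53(c) for c in _transpose(coef)])
    return [inv53(r) for r in cols]
```

Because every lifting step only adds a floor-rounded function of the other samples, each step can be subtracted back exactly, which is what makes this variant lossless. A flat signal such as `[9] * 8` yields all-zero high-pass coefficients, the kind of pattern the later coding stage exploits. JPEG2000's irreversible 9/7 filter follows the same row/column scheme with real-valued lifting coefficients.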

2.2.1. Encoding

2.2.2. Tier 1 Coder

- A bit x which is the current prediction for the given context.

- A state (one of 47 possible states) indicating the probability p that the prediction x is correct.
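To make these two per-context fields concrete, the Python sketch below models the MQ-coder encoding procedures defined in the JPEG2000 standard (ITU-T T.800, Annex C): each context keeps its prediction (the more probable symbol, MPS) and an index into the 47-entry probability table, whose Qe values estimate the probability that the prediction is wrong. It is a sequential software model, not the pipelined hardware described later. It assumes every context starts in state 0 with prediction 0 (the standard initializes a few JPEG2000 contexts to other states), and the class and method names are illustrative.

```python
# MQ-coder probability table (47 states): Qe, next state after an MPS that triggers
# renormalization, next state after an LPS, and states where an LPS swaps the MPS.
QE = [0x5601, 0x3401, 0x1801, 0x0AC1, 0x0521, 0x0221, 0x5601, 0x5401, 0x4801, 0x3801,
      0x3001, 0x2401, 0x1C01, 0x1601, 0x5601, 0x5401, 0x5101, 0x4801, 0x3801, 0x3401,
      0x3001, 0x2801, 0x2401, 0x2201, 0x1C01, 0x1801, 0x1601, 0x1401, 0x1201, 0x1101,
      0x0AC1, 0x09C1, 0x08A1, 0x0521, 0x0441, 0x02A1, 0x0221, 0x0141, 0x0111, 0x0085,
      0x0049, 0x0025, 0x0015, 0x0009, 0x0005, 0x0001, 0x5601]
NMPS = [1, 2, 3, 4, 5, 38, 7, 8, 9, 10, 11, 12, 13, 29, 15, 16, 17, 18, 19, 20,
        21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40,
        41, 42, 43, 44, 45, 45, 46]
NLPS = [1, 6, 9, 12, 29, 33, 6, 14, 14, 14, 17, 18, 20, 21, 14, 14, 15, 16, 17, 18,
        19, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37,
        38, 39, 40, 41, 42, 43, 46]
SWITCH = {0, 6, 14}


class MQEncoder:
    """Software model of the MQ encoder; each context holds (MPS bit, state index)."""

    def __init__(self, n_contexts: int):
        self.state = [0] * n_contexts   # I(CX): probability state per context
        self.mps = [0] * n_contexts     # MPS(CX): predicted bit per context
        self.a, self.c, self.ct = 0x8000, 0, 12
        self.buf = bytearray([0])       # buf[0] is a placeholder before the stream

    def encode(self, cx: int, d: int) -> None:
        """Code one CxD pair: context cx, decision bit d."""
        i = self.state[cx]
        qe = QE[i]
        self.a -= qe
        if d == self.mps[cx]:                     # CODEMPS
            if self.a & 0x8000:                   # interval still normalized
                self.c += qe
                return
            if self.a < qe:                       # conditional exchange
                self.a = qe
            else:
                self.c += qe
            self.state[cx] = NMPS[i]
        else:                                     # CODELPS
            if self.a < qe:                       # conditional exchange
                self.c += qe
            else:
                self.a = qe
            if i in SWITCH:
                self.mps[cx] ^= 1                 # the prediction flips
            self.state[cx] = NLPS[i]
        self._renorm()

    def _renorm(self) -> None:
        while True:                               # RENORME
            self.a <<= 1
            self.c <<= 1
            self.ct -= 1
            if self.ct == 0:
                self._byteout()
            if self.a & 0x8000:
                return

    def _put(self, shift: int, mask: int, ct: int) -> None:
        self.buf.append((self.c >> shift) & 0xFF)
        self.c &= mask
        self.ct = ct

    def _byteout(self) -> None:
        if self.buf[-1] == 0xFF:                  # bit stuffing: only 7 bits follow 0xFF
            self._put(20, 0xFFFFF, 7)
        elif self.c < 0x8000000:                  # no carry pending
            self._put(19, 0x7FFFF, 8)
        else:
            self.buf[-1] += 1                     # propagate the carry
            if self.buf[-1] == 0xFF:
                self.c &= 0x7FFFFFF
                self._put(20, 0xFFFFF, 7)
            else:
                self._put(19, 0x7FFFF, 8)

    def flush(self) -> bytes:
        temp = self.c + self.a                    # SETBITS
        self.c |= 0xFFFF
        if self.c >= temp:
            self.c -= 0x8000
        for _ in range(2):
            self.c <<= self.ct
            self._byteout()
        out = self.buf[1:]
        return bytes(out[:-1] if out[-1] == 0xFF else out)  # drop a trailing 0xFF
```

For example, coding 4096 zeros in a single context drives its state from 0 toward state 45 (Qe = 0x0001), so the whole run costs only a few bytes; that per-context adaptation is what the 47 states provide.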

3. Existing Implementations

3.1. Bit Plane Coder

3.2. MQ-Coder

- Pipelining: as in many other designs, pipelining is key to improving performance. Previous implementations split the coder into distinct stages, mainly separating the update of the A and C registers from the output of the coded byte(s).

- Dual symbol processing: some bit plane coders can produce two CxD pairs in one cycle. This has motivated MQ-coders capable of processing two pairs at the same time. Since the second pair depends on the A and C registers updated by the first, the two pairs cannot be coded independently in parallel; these MQ-coders therefore incorporate two cascaded processing units.

4. Implementation

4.1. BPC

4.2. MQ-Coder

4.2.1. First and Second Stages

- If the same context is found in cycles n and , a write–read cycle is skipped and input data are directly multiplexed to the memory’s output.

- To avoid stalling in the case where the same context appears in cycles n and , a second memory in the second stage outputs state information. The next state is selected from the MPS and LPS transitions and used to read this second memory. In this case, the context memory is only updated in the next cycle, but its values are needed in the current one, so a multiplexer forwards them from the second memory, avoiding the stall.
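The hazard these mechanisms address can be reproduced with a small cycle-level model. The Python sketch below is a generic read-after-write forwarding example, not the exact two-memory circuit described above: stage 1 reads a context's state from memory, and stage 2 computes the next state and writes it back at the end of the cycle, so a context that appears in two consecutive cycles would otherwise read a stale value. The update function `next_state` is a hypothetical placeholder for the real MPS/LPS transitions, and all names are illustrative.

```python
def next_state(state: int, bit: int) -> int:
    """Hypothetical context-state update standing in for the MPS/LPS transitions."""
    return (state * 31 + bit + 1) % 47


def run_sequential(seq: list[tuple[int, int]], n_ctx: int) -> list[int]:
    """Reference model: every (context, bit) pair sees the latest state of its context."""
    mem = [0] * n_ctx
    out = []
    for ctx, bit in seq:
        mem[ctx] = next_state(mem[ctx], bit)
        out.append(mem[ctx])
    return out


def run_pipelined(seq: list[tuple[int, int]], n_ctx: int, bypass: bool = True) -> list[int]:
    """Two-stage model: stage 1 reads the context memory; stage 2 computes the new
    state and writes it back at the end of the same cycle."""
    mem = [0] * n_ctx
    out = []
    stage2 = None                                   # (ctx, state read, bit) latched last cycle
    for cycle in range(len(seq) + 1):
        write = None
        if stage2 is not None:                      # stage 2: compute and schedule write-back
            ctx, state, bit = stage2
            write = (ctx, next_state(state, bit))
            out.append(write[1])
        latched = None
        if cycle < len(seq):                        # stage 1: read sees the old memory contents
            ctx, bit = seq[cycle]
            state = mem[ctx]
            if bypass and write is not None and write[0] == ctx:
                state = write[1]                    # forward the value being written
            latched = (ctx, state, bit)
        if write is not None:                       # write-back at the end of the cycle
            mem[write[0]] = write[1]
        stage2 = latched
    return out
```

With the bypass enabled, the pipelined model matches the sequential reference for any input sequence. With `bypass=False`, two consecutive pairs in the same context diverge: the reference yields `[2, 17]` for `[(0, 1), (0, 1)]`, while the unforwarded pipeline yields `[2, 2]` because the second read misses the pending write.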

4.2.2. Third Stage

- If and , both updates can be merged by setting , , . This merges two consecutive shifts that are under the maximum shift length of 15.

- If and , then both updates can be merged with , , . This is because the addition of to C happens before the shift . Both probabilities can be added at once because they are below the limit of .
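The two merging rules can be checked with a small model. The Python sketch below assumes that each update adds a probability value p to the code register C and then shifts C left by s, that is, C ← (C + p) << s, which is consistent with the remark that the addition happens before the shift. Under that assumption, an update with p = 0 can be absorbed into the previous shift, and an update following a zero-shift update can have its probability added to the previous one. Only the maximum shift of 15 comes from the text; the names `Update`, `fuse` and `P_LIMIT` (and its value) are hypothetical, and the model ignores the finite width of the real C register.

```python
from typing import NamedTuple, Optional

MAX_SHIFT = 15          # maximum shift length stated in the text
P_LIMIT = 1 << 16       # hypothetical bound on a fused probability value


class Update(NamedTuple):
    """Assumed update model: C <- (C + p) << s."""
    p: int
    s: int


def apply(c: int, u: Update) -> int:
    return (c + u.p) << u.s


def fuse(u1: Update, u2: Update) -> Optional[Update]:
    """Return one update equivalent to u1 followed by u2, or None if they cannot merge."""
    if u2.p == 0 and u1.s + u2.s <= MAX_SHIFT:
        # ((C + p1) << s1) << s2 == (C + p1) << (s1 + s2)
        return Update(u1.p, u1.s + u2.s)
    if u1.s == 0 and u1.p + u2.p < P_LIMIT:
        # (C + p1 + p2) << s2: p2 is added before the shift s2
        return Update(u1.p + u2.p, u2.s)
    return None


def fuse_stream(updates: list[Update]) -> list[Update]:
    """Greedily merge each incoming update into the pending one when possible."""
    out: list[Update] = []
    for u in updates:
        merged = fuse(out[-1], u) if out else None
        if merged is None:
            out.append(u)
        else:
            out[-1] = merged
    return out
```

For the stream `(3, 2), (0, 4), (0, 9), (5, 0), (7, 1), (0, 0), (2, 3)`, the greedy fuser emits only `(3, 15), (12, 1), (2, 3)`, and applying either list to the same starting value of C gives the same result. A pair such as `(1, 10), (0, 6)` is not merged because the combined shift would exceed 15.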

4.2.3. Fourth Stage

4.3. The Full Tier 1 Coder

- When MQ-coder stage IV stalls (because it has to output more than one byte), the fuser queue holds incoming updates until a fused one is sent, effectively hiding the stall.

- When the BPC-core produces many CxD vectors, the vector queue prevents the BPC-core from stalling.

- Conversely, when the BPC-core does not produce vectors, the queue serves as a buffer to keep the next stages busy.
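The role of these queues can be illustrated with a generic cycle-level model (an illustration, not the design's actual queues). In the Python sketch below, a producer emits items in bursts, as the BPC-core does with CxD vectors, and stalls whenever the FIFO is full; a consumer removes one item per cycle unless it is itself stalled, as MQ-coder stage IV is when it outputs more than one byte. The burst patterns, depths and stall cycles are hypothetical parameters.

```python
from collections import deque


def simulate(bursts: list[int], depth: int,
             consumer_stalls: frozenset[int] = frozenset()) -> tuple[int, list[int]]:
    """Producer -> FIFO(depth) -> consumer, one loop iteration per clock cycle.

    bursts[k] is how many items the producer emits in its k-th step; if the FIFO
    fills up, the producer stays on that step (a stall). The consumer pops one item
    per cycle except in the cycles listed in consumer_stalls.
    Returns (cycles taken, items in the order they were consumed).
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    fifo: deque[int] = deque()
    step = pushed = next_item = cycle = 0
    out: list[int] = []
    while step < len(bursts) or fifo:
        if fifo and cycle not in consumer_stalls:      # consumer side
            out.append(fifo.popleft())
        if step < len(bursts):                         # producer side
            while pushed < bursts[step] and len(fifo) < depth:
                fifo.append(next_item)
                next_item += 1
                pushed += 1
            if pushed == bursts[step]:                 # step finished without stalling
                step += 1
                pushed = 0
        cycle += 1
    return cycle, out
```

With the burst pattern `[2, 0]` repeated four times (eight items at an average of one per cycle), a single-slot buffer forces the producer to stall on every burst and the transfer takes 12 cycles, whereas a 4-slot FIFO absorbs each burst and finishes in 9 cycles. Items always leave in the order they entered, regardless of depth or consumer stalls.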

5. Results

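- The BPC-core processes a full block of 65,536 bits in 44,032 cycles (about 1.49 bits per cycle). At its maximum frequency of 247.8 MHz this amounts to 368.8 Mb/s, while the complete Tier 1 coder, clocked at 255.3 MHz, reaches 380 Mb/s.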

- The BPC-serial can produce up to 390 MCxD pairs per second.

- The MQ-I/II stages process 322 MCxD pairs per second, generating up to 322 M updates per second.

- The third stage is considerably faster, being able to merge 535 M updates per second.

- The last MQ stage processes up to 331 M updates per second.

- The intermediate FIFO queues easily keep up with the required throughput, since they can run at up to 927 MHz.

- The minimum number of updates for a block occurs when the block is all zero, so that run-length coding succeeds throughout. In this case, a total of 15,360 updates are generated, that is, 0.234 updates per bit.

- Conversely, an upper bound on the number of updates is given by a cleanup pass with run-length interruptions at every position, followed by 14 refinement passes. In this case, 67,584 updates are generated, exactly 1.03125 updates per bit.
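The throughput and update figures above follow from simple arithmetic, reproduced in the Python sketch below. The 16-bit sample depth is implied by 65,536 bits per 4096-sample block. The split into 1024 stripe columns of four samples and 15 magnitude bit planes, and the per-column breakdown of the worst-case cleanup pass, are assumptions chosen because they reproduce both stated totals, not derivations taken from the paper.

```python
BLOCK_SAMPLES = 4096                         # samples per code block
BITS_PER_SAMPLE = 16
BLOCK_BITS = BLOCK_SAMPLES * BITS_PER_SAMPLE  # 65,536 bits
CYCLES_PER_BLOCK = 44_032                    # BPC-core cycles per block (from the text)

# Assumed structure: stripe columns of 4 samples and 15 magnitude bit planes.
COLUMNS = BLOCK_SAMPLES // 4                 # 1024 columns per bit plane
PLANES = 15


def throughput_mbps(freq_mhz: float) -> float:
    """Bits processed per cycle times the clock frequency, in Mb/s."""
    return BLOCK_BITS / CYCLES_PER_BLOCK * freq_mhz


def min_updates() -> int:
    """All-zero block: one run-length update per column in every cleanup pass."""
    return COLUMNS * PLANES


def max_updates() -> int:
    """One worst-case cleanup pass plus 14 refinement passes (assumed breakdown)."""
    # Per column: run-length symbol (1) + two uniform position bits (2) + sign (1)
    # + zero coding and sign for the remaining three samples (3 * 2) = 10 updates.
    cleanup = COLUMNS * (1 + 2 + 1 + 3 * 2)
    refinement = (PLANES - 1) * BLOCK_SAMPLES  # one update per sample and pass
    return cleanup + refinement
```

For instance, `throughput_mbps(247.8)` evaluates to about 368.8 and `throughput_mbps(255.3)` to about 380.0, matching the BPC and Tier 1 rows of the comparison table. Dividing the bounds by the block size gives 15,360 / 65,536 = 0.234375 and 67,584 / 65,536 = 1.03125 updates per bit.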

5.1. Comparison

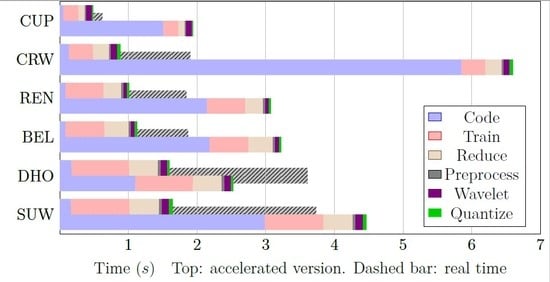

5.2. Acceleration of JYPEC

6. Conclusions

- First, the bit plane coder concurrently processes bits in groups of four, greatly accelerating execution. A system of FIFOs and buffers ensures that a constant stream of CxD pairs reaches the MQ-coder.

- Second, the coder itself is highly optimized in a pipelined fashion. Pipeline stalls, a problem in previous designs, are avoided by fusing multiple updates together when possible.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- DataBase, I. A Database for Remote Sensing Indices. Available online: https://www.indexdatabase.de/db/s.php (accessed on 24 February 2020).

- eoPortal. Airborne Sensors. Available online: https://directory.eoportal.org/web/eoportal/airborne-sensors (accessed on 14 May 2020).

- Briottet, X.; Boucher, Y.; Dimmeler, A.; Malaplate, A.; Cini, A.; Diani, M.; Bekman, H.; Schwering, P.; Skauli, T.; Kasen, I.; et al. Military applications of hyperspectral imagery. Targets Backgrounds XII Charact. Represent. 2006, 6239, 62390B.

- Tiwari, K.C.; Arora, M.K.; Singh, D. An assessment of independent component analysis for detection of military targets from hyperspectral images. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 730–740.

- Slocum, K.; Surdu, J.; Sullivan, J.; Rudak, M.; Colvin, N.; Gates, C. Trafficability Analysis Engine. Cross Talk J. Def. Softw. Eng. 2003, 28–30.

- Murphy, R.J.; Monteiro, S.T.; Schneider, S. Evaluating classification techniques for mapping vertical geology using field-based hyperspectral sensors. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3066–3080.

- Kurz, T.H.; Buckley, S.J.; Howell, J.A.; Schneider, D. Geological outcrop modelling and interpretation using ground based hyperspectral and laser scanning data fusion. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2008, 37, 1229–1234.

- van der Meer, F.D.; van der Werff, H.M.; van Ruitenbeek, F.J.; Hecker, C.A.; Bakker, W.H.; Noomen, M.F.; van der Meijde, M.; Carranza, E.J.M.; de Smeth, J.B.; Woldai, T. Multi- and hyperspectral geologic remote sensing: A review. Int. J. Appl. Earth Obs. Geoinf. 2012, 14, 112–128.

- Thenkabail, P.; Lyon, J.; Huete, A. (Eds.) Hyperspectral Indices and Image Classifications for Agriculture and Vegetation. 2018. Available online: https://www.taylorfrancis.com/books/9781315159331/chapters/10.1201/9781315159331-1 (accessed on 16 July 2020).

- Lu, J.; Yang, T.; Su, X.; Qi, H.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.; Tian, Y. Monitoring leaf potassium content using hyperspectral vegetation indices in rice leaves. Precis. Agric. 2019, 21, 324–348.

- eoPortal. EnMAP (Environmental Monitoring and Analysis Program). Available online: https://directory.eoportal.org/web/eoportal/satellite-missions/e/enmap (accessed on 5 August 2020).

- CCSDS. Low-Complexity Lossless and Near-Lossless Multispectral and Hyperspectral Image Compression. Available online: https://public.ccsds.org/Pubs/123x0b2c1.pdf (accessed on 8 August 2020).

- Motta, G.; Rizzo, F.; Storer, J.A. Hyperspectral Data Compression; Springer Science & Business Media: Berlin, Germany, 2006; p. 417.

- Ryan, M.J.; Arnold, J.F. The lossless compression of aviris images by vector quantization. IEEE Trans. Geosci. Remote Sens. 1997, 35, 546–550.

- Abrardo, A.; Barni, M.; Magli, E. Low-complexity predictive lossy compression of hyperspectral and ultraspectral images. In Proceedings of the 2011 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Prague, Czech Republic, 22–27 May 2011; pp. 797–800.

- Fowler, J.; Rucker, J. 3D wavelet-based compression of hyperspectral imagery. In Hyperspectral Data Exploitation: Theory and Applications; John Wiley & Sons: Hoboken, NJ, USA, 2007; pp. 379–407.

- Christophe, E.; Mailhes, C.; Duhamel, P. Hyperspectral image compression: Adapting SPIHT and EZW to anisotropic 3-D wavelet coding. IEEE Trans. Image Process. 2008, 17, 2334–2346.

- Huang, B. Satellite Data Compression; Springer Science & Business Media: Berlin, Germany, 2011.

- Du, Q.; Fowler, J.E. Hyperspectral image compression using JPEG2000 and principal component analysis. IEEE Geosci. Remote Sens. Lett. 2007, 4, 201–205.

- Báscones, D.; González, C.; Mozos, D. Hyperspectral Image Compression Using Vector Quantization, PCA and JPEG2000. Remote Sens. 2018, 10, 907.

- Taubman, D.; Marcellin, M. JPEG2000 Image Compression Fundamentals, Standards and Practice; Springer Science & Business Media: Berlin, Germany, 2012; Volume 642, p. 773.

- Skodras, A.; Christopoulos, C.; Ebrahimi, T. The JPEG 2000 Still Image Compression Standard. IEEE Signal Process. Mag. 2001, 18, 36–58.

- Wallace, G.K. The JPEG still picture compression standard. IEEE Trans. Consum. Electron. 1992.

- Gangadhar, M.; Bhatia, D. FPGA based EBCOT architecture for JPEG 2000. Microprocess. Microsyst. 2003, 29, 363–373.

- Lian, C.J.; Chen, K.F.; Chen, H.H.; Chen, L.G. Analysis and architecture design of block-coding engine for EBCOT in JPEG 2000. IEEE Trans. Circuits Syst. Video Technol. 2003, 13, 219–230.

- Dyer, M.; Taubman, D.; Nooshabadi, S.; Gupta, A.K. Concurrency techniques for arithmetic coding in JPEG2000. IEEE Trans. Circuits Syst. I Regul. Pap. 2006, 53, 1203–1213.

- Gupta, A.K.; Taubman, D.S.; Nooshabadi, S. High speed VLSI architecture for bit plane encoder of JPEG 2000. IEEE Midwest Sympos. Circuits Syst. 2004, 2, 233–236.

- Kai, L.; Chengke, W.; Yunsong, L. A high-performance VLSI arquitecture of EBCOT block coding in JPEG2000. J. Electron. 2006, 23, 1–5.

- Sarawadekar, K.; Banerjee, S. Low-cost, high-performance VLSI design of an MQ coder for JPEG 2000. In Proceedings of the IEEE 10th International Conference on Signal Processing Proceedings, Beijing, China, 24–28 October 2010; Number D. pp. 397–400.

- Saidani, T.; Atri, M.; Tourki, R. Implementation of JPEG 2000 MQ-coder. In Proceedings of the 2008 3rd International Conference on Design and Technology of Integrated Systems in Nanoscale Era, Tozeur, Tunisia, 25–27 March 2008; pp. 1–4.

- Sarawadekar, K.; Banerjee, S. VLSI design of memory-efficient, high-speed baseline MQ coder for JPEG 2000. Integr. VLSI J. 2012, 45, 1–8.

- Liu, K.; Zhou, Y.; Li, Y.S.; Ma, J.F. A high performance MQ encoder architecture in JPEG2000. Integr. VLSI J. 2010, 43, 305–317.

- Sulaiman, N.; Obaid, Z.A.; Marhaban, M.H.; Hamidon, M.N. Design and Implementation of FPGA-Based Systems—A Review. Aust. J. Basic Appl. Sci. 2009, 3, 3575–3596.

- Trimberger, S.M. Three ages of FPGAs: A retrospective on the first thirty years of FPGA technology. Proc. IEEE 2015, 103, 318–331.

- Xilinx. Space-Grade Virtex-5QV FPGA. Available online: www.xilinx.com/products/silicon-devices/fpga/virtex-5qv.html (accessed on 8 August 2020).

- Báscones, D.; González, C.; Mozos, D. Parallel Implementation of the CCSDS 1.2.3 Standard for Hyperspectral Lossless Compression. Remote Sens. 2017, 9, 973.

- Báscones, D.; Gonzalez, C.; Mozos, D. FPGA Implementation of the CCSDS 1.2.3 Standard for Real-Time Hyperspectral Lossless Compression. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2017, 11, 1158–1165.

- Bascones, D.; Gonzalez, C.; Mozos, D. An Extremely Pipelined FPGA Implementation of a Lossy Hyperspectral Image Compression Algorithm. IEEE Trans. Geosci. Remote Sens. 2020, 1–13.

- Báscones, D. Implementación Sobre FPGA de un Algoritmo de Compresión de Imágenes Hiperespectrales Basado en JPEG2000. Ph.D. Thesis, Universidad Complutense de Madrid, Madrid, Spain, 2018.

- Higgins, G.; Faul, S.; McEvoy, R.P.; McGinley, B.; Glavin, M.; Marnane, W.P.; Jones, E. EEG compression using JPEG2000 how much loss is too much? In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology Society, EMBC’10, Buenos Aires, Argentina, 31 August–4 September 2010; pp. 614–617.

- Marpe, D.; George, V.; Cycon, H.L.; Barthel, K.U. Performance evaluation of Motion-JPEG2000 in comparison with H.264/AVC operated in pure intra coding mode. SPIE Proc. 2003, 5266, 129–137.

- Van Fleet, P.J. Discrete Wavelet Transformations: An Elementary Approach with Applications; John Wiley & Sons: Hoboken, NJ, USA, 2011.

- Makandar, A.; Halalli, B. Image Enhancement Techniques using Highpass and Lowpass Filters. Int. J. Comput. Appl. 2015, 109, 12–15.

- Taubman, D. High performance scalable image compression with EBCOT. IEEE Trans. Image Process. 2000, 9, 1158–1170.

- Andra, K.; Acharya, T.; Chakrabarti, C. Efficient VLSI implementation of bit plane coder of JPEG2000. Appl. Digit. Image Process. Xxiv 2001, 4472, 246–257.

- Li, Y.; Bayoumi, M.A. A three level parallel high speed low power architecture for EBCOT of JPEG 2000. IEEE Trans. Circuits Syst. Video Technol. 2006, 16, 1153–1163.

- Jayavathi, S.D.; Shenbagavalli, A. FPGA Implementation of MQ Coder in JPEG 2000 Standard—A Review. Int. J. Innov. Sci. Res. 2016, 28, 76–83.

- Rhu, M.; Park, I.C. Optimization of arithmetic coding for JPEG2000. IEEE Trans. Circuits Syst. Video Technol. 2010, 20, 446–451.

- Ahmadvand, M.; Ezhdehakosh, A. A New Pipelined Architecture for JPEG2000. World Congress Eng. Comput. Sci. 2012, 2, 24–26.

- Mei, K.; Zheng, N.; Huang, C.; Liu, Y.; Zeng, Q. VLSI design of a high-speed and area-efficient JPEG2000 encoder. IEEE Trans. Circuits Syst. Video Technol. 2007, 17, 1065–1078.

- Chang, Y.W.; Fang, H.C.; Chen, L.G. High Performance Two-Symbol Arithmetic Encoder in JPEG 2000. 2000, pp. 4–7. Available online: https://video.ee.ntu.edu.tw/publication/paper/[C][2004][ICCE][Yu-Wei.Chang][1].pdf (accessed on 8 August 2020).

- Kumar, N.R.; Xiang, W.; Wang, Y. An FPGA-based fast two-symbol processing architecture for JPEG 2000 arithmetic coding. In Proceedings of the 2010 IEEE International Conference on Acoustics, Speech and Signal Processing, Dallas, TX, USA, 14–19 March 2010; pp. 1282–1285.

- Sarawadekar, K.; Banerjee, S. An Efficient Pass-Parallel Architecture for Embedded Block Coder in JPEG 2000. IEEE Trans. Circuits Syst. 2011, 21, 825–836.

- Kumar, N.R.; Xiang, W.; Wang, Y. Two-symbol FPGA architecture for fast arithmetic encoding in JPEG 2000. J. Signal Process. Syst. 2012, 69, 213–224.

- Spectir. Free Data Samples. Available online: https://www.spectir.com/free-data-samples/ (accessed on 5 August 2020).

- CCSDS. Collaborative Work Environment. Available online: https://cwe.ccsds.org/sls/default.aspx (accessed on 5 August 2020).

- Báscones, D. Jypec. Available online: github.com/Daniel-BG/Jypec (accessed on 17 January 2018).

- Báscones, D. Vypec. Available online: github.com/Daniel-BG/Vypec (accessed on 25 January 2018).

| Module | Frequency (MHz) | Slices | BRAM |
|---|---|---|---|
| Tier 1 coder | 255 | 2708 | 4 |
| BPC-core | 248 | 731 | 2 |
| BPC-serial | 390 | 142 | 0 |
| MQ | 321 | 1778 | 0 |
| MQ-I/II | 322 | 1326 | 0 |
| MQ-III | 535 | 47 | 0 |
| MQ-IV | 331 | 231 | 0 |
| FIFOs | 927 | 57 | 2 |

| Coder | Ref. | Technology | Frequency | Speed | Slices | BRAM/b |
|---|---|---|---|---|---|---|
| BPC | [27] | APEX20KE FPGA | 51.7 MHz | 73.44 Mb/s | 956 | n/a ** |
| | [28] | XCV600e-6BG432 | 52.0 MHz | 94.4 Mb/s | n/a | n/a ** |
| | [50] | Altera EP20K600EFC672–3 | 100.0 MHz | 40.5 Mb/s | 1850 | 0 ** |
| | This work | Virtex-7 FPGA | 247.8 MHz | 368.8 Mb/s | 731 | 2 |
| MQ | [51] | 0.35 µm | 90.0 MHz | 180.0 MCxD/s | n/a | n/a |
| | [46] | 0.35 µm | 150.0 MHz | 300.0 MCxD/s | n/a | n/a |
| | [26] | Stratix | 48.8 MHz | 97.7 MCxD/s | 1596 | 8192 b |
| | [26] | 0.18 µm | 211.8 MHz | 423.7 MCxD/s | n/a | n/a |
| | [50] | Altera EP20K600EFC672–3 | 26.3 MHz | 52.6 MCxD/s | 1811 | n/a |
| | [29] | Stratix FPGA | 153.0 MHz | 137.7 MCxD/s | 279 | 1344 b |
| | [48] | 0.18 µm | 413.0 MHz | 413.0 MCxD/s | n/a | n/a |
| | [52] | Stratix FPGA | 106.2 MHz | 212.4 MCxD/s | 1267 | 0 |
| | [32] | XC4VLX80 FPGA | 48.3 MHz | 96.6 MCxD/s | 6974 | 1509 b |
| | [32] | 0.18 µm | 220.0 MHz | 440.0 MCxD/s | n/a | n/a |
| | [53] | Stratix EP1S10B672C6 | 136.9 MHz | 136.9 MCxD/s | 695 | 3301 b |
| | [31] | Stratix FPGA | 146.0 MHz | 146.0 MCxD/s | 824 | 428 b |
| | [49] | 0.18 µm | 208.0 MHz | 192.8 MCxD/s | n/a | n/a |
| | [54] | Stratix II FPGA | 106.2 MHz | 212.4 MCxD/s | 1267 | 1321 b |
| | This work | Virtex-7 FPGA | 321.5 MHz | 321.5 MCxD/s | 1778 | 0 |
| Tier 1 | [25] | 0.35 µm | 50.0 MHz | 36.5 Mb/s | n/a | n/a |
| | [24] | Virtex II XC2V1000 | 50.0 MHz | 91.2 Mb/s | 4420 | 3120 b ** |
| | [30] | Virtex II Pro FG 456 | 112.0 MHz | 181.6 Mb/s * | 2504 | 28 |
| | This work | Virtex-7 FPGA | 255.3 MHz | 380.0 Mb/s | 2708 | 4 |

| Image | Width | Height | Bands | Bit Depth | Description |
|---|---|---|---|---|---|
| CUP [56] | 350 | 350 | 188 | 16 | Cuprite valley in Nevada |
| SUW [55] | 320 | 1200 | 360 | 16 | Lower Suwannee natural reserve |
| DHO [55] | 320 | 1260 | 360 | 16 | Deepwater Horizon oil spill |
| BEL [55] | 320 | 600 | 360 | 16 | Crop fields in Beltsville |
| REN [55] | 320 | 600 | 356 | 16 | Urban and rural mixed area |
| CRW [56] | 614 | 512 | 224 | 16 | Cuprite valley full image |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Báscones, D.; González, C.; Mozos, D. An FPGA Accelerator for Real-Time Lossy Compression of Hyperspectral Images. Remote Sens. 2020, 12(16), 2563. https://doi.org/10.3390/rs12162563