Novel Pole Photogrammetric System for Low-Cost Documentation of Archaeological Sites: The Case Study of “Cueva Pintada”

Abstract

1. Introduction

2. Materials and Methods

2.1. Pole Photogrammetric System

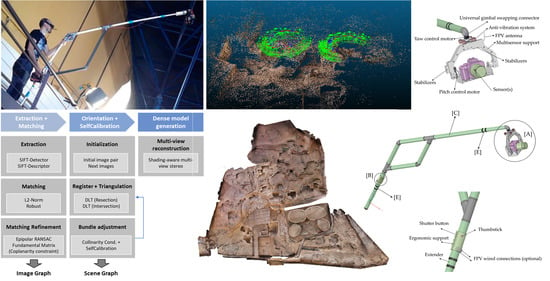

- [A] Self-stabilizing platform (Figure 1b): It includes a universal gimbal swap connector that allows different platforms to be exchanged, each adapted to the sensor used in each case, from visible and infrared to multispectral or hyperspectral cameras. The sensors are powered through cables with interchangeable pins that run inside the hollow structure to the batteries. The platform has 2 degrees of freedom (yaw and pitch) to orient the sensors, driven by two rotation motors, while two servo-stabilizers hold the position. To reduce the vibrations that the motors could transmit to the sensors, the platform incorporates an anti-vibration system. To ease handling of the equipment and signal transmission, the FPV antenna for the remote vision glasses [D] is mounted on the sensor support, which improves signal emission and keeps it out of the operator's way.

- [B] Control System (Figure 2a): This unit is centralized in an ergonomic grip from which the platform motors are actuated with a thumbstick whose potentiometers control both the yaw and pitch angles. The unit is fixed, but it can be adapted to any operator thanks to the telescopic extenders of the structure. The shutter button is also located on the control unit, to ease handling while using the SAMBA system, and it is wired to the sensor. Depending on the type of sensor, it captures individual images or starts/stops video recordings. Finally, two optional wired connections are provided for the remote vision system in case the antenna signal reception is insufficient.

- [C] Structure (Figure 1a): The entire system is joined by a light hollow structure made of PVC and aluminum, through which power is supplied to all the devices involved: sensors, platform orientation, active stabilizers, remote vision system and shutter control. The end of the structure carries an exchangeable ferrule, the part in contact with the ground. It can be a non-slip rubber ferrule for indoor or delicate scenarios, or a spiked tip for outdoor use.

- [D] Remote vision system (Figure 1a): This system consists of remote vision glasses, which allow the operator (i) to view the sensor's point of view and set its optimal orientation with the thumbstick, and (ii) to keep both hands free to handle the structure. Yaw rotation is limited to prevent damage to the sensor wiring. The video link is wireless, or wired when there is signal interference. It supports a conventional analog AV video signal in the standard NTSC or PAL format. Practically all cameras provide a video output in one format or another, either analog or HDMI; in the latter case, an HDMI-to-analog signal converter is required.

- [E] Telescopic extenders (Figure 1a): The main structure can be retracted for transport and adapted to the geometric characteristics of each scenario. The lower part extends up to 2.5 m, while the upper part can reach 4–4.5 m, in order to overcome obstacles and increase the vertical sensor-object distance. Thanks to the angle designed between the two main sections, the camera can be raised to a maximum height of 3.5 m when the system rests on the ground.

- Batteries (Figure 2b): LiPo batteries are located at the bottom of the structure to stabilize it by lowering its center of gravity. They power all the electronic elements of the system as well as the different sensors that can be mounted. A charging connection is provided so that the batteries do not have to be removed for each recharge cycle. The wiring runs inside the hollow structure with enough slack to avoid tension when the extenders are fully deployed.

- To mount sensors of different types, such as infrared, thermal, multispectral or hyperspectral cameras, whose data can enrich and hybridize the 3D model and provide relevant information for investigating the remains.

- To serve as a close-range photogrammetric platform, an alternative to drones in places where their use is forbidden, discouraged or problematic because of collision risk or because many vertical structures, columns, etc. create hidden areas.

- To overcome obstacles and reach areas of limited access thanks to its extendable structure and the possibility of pivoting and tilting it.

- To be easy to handle thanks to its light and ergonomic design, while the operator views the camera's point of view in real time and chooses the most convenient framing in each case.

- To ensure sharp images thanks to the dynamic counterbalance effect of its structure and the IMU and snap-on system on the sensor platform.
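The telescopic extenders mainly change the sensor-object distance H, which, together with the pixel size p and the focal length f of the camera, sets the ground sample distance (GSD) of a nadir image through the standard pinhole relation GSD = H·p/f. The sketch below is a minimal illustration of that relation; the function names and the camera values in the usage line are hypothetical, not the specifications of the sensors used with SAMBA.

```python
def gsd_mm(distance_m: float, pixel_size_um: float, focal_length_mm: float) -> float:
    """Ground sample distance in mm for a nadir image: GSD = H * p / f."""
    distance_mm = distance_m * 1000.0      # H: sensor-object distance
    pixel_mm = pixel_size_um / 1000.0      # p: physical pixel size on the sensor
    return distance_mm * pixel_mm / focal_length_mm


def max_distance_m(target_gsd_mm: float, pixel_size_um: float, focal_length_mm: float) -> float:
    """Largest sensor-object distance (m) that still meets a target GSD."""
    pixel_mm = pixel_size_um / 1000.0
    return target_gsd_mm * focal_length_mm / pixel_mm / 1000.0


if __name__ == "__main__":
    # Hypothetical camera: 4.0 um pixels behind a 20 mm lens, raised to 3.5 m
    print(f"GSD at 3.5 m: {gsd_mm(3.5, 4.0, 20.0):.2f} mm")
```

Because GSD grows linearly with H, doubling the extension roughly halves the ground resolution for the same camera, which is the trade-off the operator balances when deploying the extenders.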

2.2. Total Station

2.3. Network Design and Data Acquisition

2.4. Data Processing

2.4.1. Extraction and Robust Matching of Features

- First, a robust matching approach based on a brute-force scheme and the L2-norm distance searches, for each feature, the most similar features in the rest of the images. In particular, for each extracted point, the ratio between the distances of its two best candidates in the other image is compared with a threshold: a high distance ratio indicates that the match could be ambiguous or incorrect. According to the probability distribution function of this ratio, a threshold of 0.8 [33] provides a good separation between correct and incorrect matches, so candidates with a higher ratio are discarded. The remaining candidate pairs are then filtered by a second threshold on the discrepancy between descriptors, expressed as a fraction K in the range [0,1]: the cut-off is the maximum descriptor distance over all matched pairs multiplied by K, and pairs whose distance exceeds it are rejected. This is equivalent to sorting all the matches in ascending order of distance, so that the best matches (lowest distances) come first, and discarding those that fall in the upper (1 − K) fraction of the distance range. A factor K = 1 means that no refinement is done (all matches are kept).

- Second, the matches were refined with geometric constraints (fundamental matrix and epipolar geometry) combined with the RANSAC robust estimator [34]. The idea is to complement the first matching strategy, which uses only radiometric criteria, with geometric constraints that verify whether the matched points correspond to the same object point. To this end, an approximate fundamental matrix was computed from the matches of the first step, giving a first estimate of the epipolar geometry of each image pair and thus an extra geometric constraint to refine the matches. The fundamental matrix was solved for 8 parameters from at least n ≥ 8 correspondences, with the term f33 constrained to unity. Given the ambiguity of the matching process, we define a matching outlier as an incorrect correspondence that does not coincide with the true homologous point. Since the matches are often contaminated by outliers, robust estimation techniques such as RANSAC are required. Specifically, RANSAC was implemented as a search engine following a voting process based on how closely a matched pair of points satisfies the epipolar geometry. Matched points whose orthogonal distance to the epipolar line exceeded a threshold were rejected.
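The radiometric filtering of the first matching step can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the function name `match_features` is hypothetical, descriptors are assumed to be floating-point arrays (e.g., SIFT-like vectors), and the ratio threshold (0.8) and the factor K are exposed as parameters.

```python
import numpy as np


def match_features(desc_a, desc_b, ratio=0.8, k=1.0):
    """Brute-force L2 matching of desc_a (N, D) against desc_b (M, D), M >= 2.

    Returns (i, j, distance) tuples sorted by ascending descriptor distance.
    """
    # Pairwise Euclidean (L2) distances between all descriptors, shape (N, M)
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    candidates = []
    for i, row in enumerate(dists):
        best, second = np.argsort(row)[:2]
        # Ratio test: a high best/second distance ratio flags an ambiguous match
        if row[second] > 0 and row[best] / row[second] <= ratio:
            candidates.append((i, int(best), float(row[best])))
    if not candidates:
        return []
    # K-factor filter: reject pairs farther than K times the largest surviving distance
    cutoff = k * max(d for _, _, d in candidates)
    return sorted((c for c in candidates if c[2] <= cutoff), key=lambda c: c[2])
```

With `k=1.0` the second filter keeps every pair that passed the ratio test; lowering `k` trims the pairs with the largest descriptor distances.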

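The geometric refinement of the second matching step can be sketched in the same way, assuming matched points in image coordinates and a true geometry with f33 ≠ 0. F is estimated linearly with f33 fixed to 1 (8 unknowns), and each RANSAC hypothesis collects votes from the pairs whose orthogonal distance to the epipolar line is below a threshold. Function names, the threshold and the iteration count are hypothetical; coordinate normalization and rank-2 enforcement, which production code usually adds, are omitted for brevity.

```python
import numpy as np


def fundamental_f33(x1, x2):
    """Linear estimate of F (x2^T F x1 = 0) with f33 fixed to 1.

    x1, x2: (n, 2) arrays of matched image points, n >= 8.
    """
    u1, v1 = x1[:, 0], x1[:, 1]
    u2, v2 = x2[:, 0], x2[:, 1]
    # One row per match: coefficients of f11..f32; the f33 term moves to the RHS
    A = np.column_stack([u2 * u1, u2 * v1, u2, v2 * u1, v2 * v1, v2, u1, v1])
    f, *_ = np.linalg.lstsq(A, -np.ones(len(x1)), rcond=None)
    return np.append(f, 1.0).reshape(3, 3)


def epipolar_distance(F, x1, x2):
    """Orthogonal distance of each x2 to the epipolar line F @ x1 in image 2."""
    h1 = np.column_stack([x1, np.ones(len(x1))])
    h2 = np.column_stack([x2, np.ones(len(x2))])
    lines = h1 @ F.T                      # row k is the line (a, b, c) = F @ x1_k
    return np.abs(np.sum(lines * h2, axis=1)) / np.hypot(lines[:, 0], lines[:, 1])


def ransac_fundamental(x1, x2, threshold=1.0, iterations=500, seed=0):
    """RANSAC voting on minimal 8-point samples; returns (F, inlier mask)."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(x1), dtype=bool)
    for _ in range(iterations):
        sample = rng.choice(len(x1), size=8, replace=False)
        F = fundamental_f33(x1[sample], x2[sample])
        votes = epipolar_distance(F, x1, x2) < threshold
        if votes.sum() > best.sum():
            best = votes
    # Final least-squares estimate from all consensus pairs
    return fundamental_f33(x1[best], x2[best]), best
```

The threshold is in the same units as the image coordinates (pixels if raw pixel coordinates are passed).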
2.4.2. Orientation and Self-Calibration

- First, the reconstruction was initialized with the best image pair, selected according to a threefold criterion: the pair should (i) guarantee a good ray intersection; (ii) contain a considerable number of matching points; and (iii) present a good distribution of matching points across the image format. Note that initializing with a good image pair usually results in a more reliable and accurate reconstruction.

- Second, once the image pair was initialized, the matching points were triangulated through the direct linear transformation (DLT) [35], solving for the 3 object coordinates (X, Y, Z) of each point from the matching points and the camera poses provided by the fundamental matrix. Afterwards, taking this initial image pair as the reference, new images were first registered and then triangulated, again using the DLT. The DLT estimates first the camera pose and then the object coordinates of the matching points in a direct way, that is, without initial approximations.

- Third, although all the images were registered and triangulated with the DLT, this method has limited accuracy and reliability, and its solution can quickly drift to a non-convergent state. To cope with this problem, a bundle adjustment based on the collinearity condition [36] was applied with a threefold purpose: (i) compute registration and triangulation together, in a global way; (ii) estimate the inner parameters of the camera (self-calibration); and (iii) improve the accuracy and precision of the image orientation and self-calibration through a non-linear iterative procedure, based on the collinearity condition, that minimizes the reprojection error.
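The first two orientation steps can be sketched as below. The pair-scoring rule is purely illustrative: the three criteria come from the text, but the way they are combined here (a minimum median intersection angle, then the number of matches multiplied by a grid-coverage ratio) and all names and default values are hypothetical. The triangulation function is the standard linear DLT solution for one object point from two or more known 3×4 projection matrices, taken as the null vector of the stacked equations via SVD.

```python
import numpy as np


def coverage(points, width, height, grid=4):
    """Fraction of the cells of a grid x grid partition of the image that hold matches."""
    cols = np.clip((points[:, 0] / width * grid).astype(int), 0, grid - 1)
    rows = np.clip((points[:, 1] / height * grid).astype(int), 0, grid - 1)
    return len(set(zip(rows.tolist(), cols.tolist()))) / grid ** 2


def select_initial_pair(pairs, width, height, min_angle_deg=5.0):
    """pairs: {(i, j): ((n, 2) matched points in image i, median ray angle in degrees)}."""
    best, best_score = None, -1.0
    for key, (pts, angle) in pairs.items():
        if angle < min_angle_deg:                          # (i) weak ray intersection
            continue
        score = len(pts) * coverage(pts, width, height)    # (ii) count x (iii) spread
        if score > best_score:
            best, best_score = key, score
    return best


def triangulate_dlt(projections, image_points):
    """Linear DLT triangulation of one object point (X, Y, Z) from n >= 2 views.

    projections: list of 3x4 camera matrices; image_points: list of (u, v).
    """
    rows = []
    for P, (u, v) in zip(projections, image_points):
        # u * (p3 . X) - (p1 . X) = 0 and v * (p3 . X) - (p2 . X) = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    X = vt[-1]                                             # null vector = homogeneous point
    return X[:3] / X[3]
```

Being linear, this triangulation needs no initial values, which matches its role here as a starting point for the subsequent bundle adjustment.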

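For reference, one common way of writing the collinearity condition and the bundle adjustment objective is given below. The notation is an assumption, not taken from the paper: $(X_{0j}, Y_{0j}, Z_{0j})$ is the projection centre of image $j$, $r_{kl}$ are the elements of its object-to-image rotation matrix $\mathbf{R}_j$, $c$ is the principal distance, $(x_0, y_0)$ the principal point, $(\Delta x, \Delta y)$ the lens distortion corrections estimated in the self-calibration, and $(\tilde{x}_{ij}, \tilde{y}_{ij})$ the measured image coordinates of object point $i$ in image $j$.

```latex
\begin{aligned}
x_{ij} &= x_0 - c\,\frac{r_{11}(X_i - X_{0j}) + r_{12}(Y_i - Y_{0j}) + r_{13}(Z_i - Z_{0j})}
                      {r_{31}(X_i - X_{0j}) + r_{32}(Y_i - Y_{0j}) + r_{33}(Z_i - Z_{0j})} + \Delta x,\\[4pt]
y_{ij} &= y_0 - c\,\frac{r_{21}(X_i - X_{0j}) + r_{22}(Y_i - Y_{0j}) + r_{23}(Z_i - Z_{0j})}
                      {r_{31}(X_i - X_{0j}) + r_{32}(Y_i - Y_{0j}) + r_{33}(Z_i - Z_{0j})} + \Delta y,\\[4pt]
&\min_{\{X_i, Y_i, Z_i\},\ \{X_{0j}, Y_{0j}, Z_{0j}, \mathbf{R}_j\},\ \{c, x_0, y_0, \Delta x, \Delta y\}}
\ \sum_{i,j} \left[(\tilde{x}_{ij} - x_{ij})^2 + (\tilde{y}_{ij} - y_{ij})^2\right]
\end{aligned}
```

The sum runs over all observed image points. Because the model is non-linear in the unknowns, it is linearized and solved iteratively (e.g., with Gauss–Newton or Levenberg–Marquardt), using the DLT solution as the initial approximation.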
2.4.3. Dense Model and Orthoimage Generation

2.5. Accuracy Assessment

3. Results

3.1. The Archaeological Site of “Cueva Pintada”

3.2. Data Acquisition and 3D Model Generation

3.3. Photorealistic 3D Surface Model

3.4. Orthoimages and Other Products

4. Conclusions

- Point of view: Acquisition of close-range nadir and oblique images at heights of up to 5 m.

- Stability: Sharp images even in poor lighting conditions (long exposure times).

- Flexibility: Adjustment to different heights thanks to its telescopic structure, even clearing height differences while avoiding blur problems.

- Control: Ability to orient the on-board sensor towards the desired point of view.

- Versatility: Ability to mount any type of sensor on the system, mainly digital and multispectral cameras, and to be used both indoors and outdoors.

- Portability: Its light and ergonomic structure makes it possible to carry out large topographic surveys.

- Robustness: Acquisition, control, display and power units are integrated in the same device.

- Safety: It replicates a nadir point of view without the use of drones, thereby avoiding possible impacts due to failures or to exceeding the flight autonomy, as well as the lifting of loose particles from the ground.

- Low-cost alternative to drones.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Bitelli, G.; Dubbini, M.; Zanutta, A. Terrestrial laser scanning and digital photogrammetry techniques to monitor landslide bodies. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 246–251.

- Pollefeys, M.; Van Gool, L.; Vergauwen, M.; Verbiest, F.; Cornelis, K.; Tops, J.; Koch, R. Visual modeling with a hand-held camera. Int. J. Comput. Vis. 2004, 59, 207–232.

- Stumpf, A.; Malet, J.P.; Allemand, P.; Pierrot-Deseilligny, M.; Skupinski, G. Ground-based multi-view photogrammetry for the monitoring of landslide deformation and erosion. Geomorphology 2015, 231, 130–145.

- Tuttas, S.; Braun, A.; Borrmann, A.; Stilla, U. Evaluation of acquisition strategies for image-based construction site monitoring. ISPRS-Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 733–740.

- Drewello, R.; Wetter, N.; Beckett, B.; Beckett, N. A New Crane System for Remote Inspection and NDT. In Nondestructive Testing of Materials and Structures; Springer: Dordrecht, The Netherlands, 2013; pp. 1253–1257.

- Jo, Y.H.; Hong, S. Three-dimensional digital documentation of cultural heritage site based on the convergence of terrestrial laser scanning and unmanned aerial vehicle photogrammetry. ISPRS Int. J. Geo-Inf. 2019, 8, 53.

- Verhoeven, G.J. Providing an archaeological bird’s-eye view–an overall picture of ground-based means to execute low-altitude aerial photography (LAAP) in Archaeology. Archaeol. Prospect. 2009, 16, 233–249.

- Altan, M.O.; Celikoyan, T.M.; Kemper, G.; Toz, G. Balloon photogrammetry for cultural heritage. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2004, 35, 964–968.

- Bitelli, G.; Tini, M.A.; Vittuari, L. Low-height aerial photogrammetry for archaeological orthoimaging production. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2003, 34, 55–59.

- Cefalu, A.; Abdel-Wahab, M.; Peter, M.; Wenzel, K.; Fritsch, D. Image based 3D Reconstruction in Cultural Heritage Preservation. In ICINCO; SciTePress: Setúbal Municipality, Portugal, 2013; Volume 1, pp. 201–205.

- Dominici, D.; Alicandro, M.; Massimi, V. UAV photogrammetry in the post-earthquake scenario: Case studies in L’Aquila. Geomat. Nat. Hazards Risk 2017, 8, 87–103.

- Jaud, M.; Letortu, P.; Théry, C.; Grandjean, P.; Costa, S.; Maquaire, O.; Le Dantec, N. UAV survey of a coastal cliff face–Selection of the best imaging angle. Measurement 2019, 139, 10–20.

- Šedina, J.; Pavelka, K.; Raeva, P. UAV remote sensing capability for precision agriculture, forestry and small natural reservation monitoring. In Hyperspectral Imaging Sensors: Innovative Applications and Sensor Standards; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10213, p. 102130L.

- Luhmann, T.; Chizhova, M.; Gorkovchuk, D.; Hastedt, H.; Chachava, N.; Lekveishvili, N. Combination of terrestrial laserscanning, UAV and close-range photogrammetry for 3D reconstruction of complex churches in Georgia. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W11, 753–761.

- Dhonju, H.K.; Xiao, W.; Mills, J.P.; Sarhosis, V. Share Our Cultural Heritage (SOCH): Worldwide 3D heritage reconstruction and visualization via web and mobile GIS. ISPRS Int. J. Geo-Inf. 2018, 7, 360.

- Pham, H.Q.; Camey, M.; Pham, K.D.; Pham, K.V.; Rilett, L.R. Review of Unmanned Aerial Vehicles (UAVs) Operation and Data Collection for Driving Behavior Analysis. In CIGOS 2019, Innovation for Sustainable Infrastructure; Springer: Singapore, 2020; pp. 1111–1116.

- Xie, T.; Zhu, J.; Jiang, C.; Jiang, Y.; Guo, W.; Wang, C.; Liu, R. Situation and prospect of light and miniature UAV-borne LiDAR. In XIV International Conference on Pulsed Lasers and Laser Applications; International Society for Optics and Photonics: Bellingham, WA, USA, 2019; Volume 11322, p. 1132210.

- Singh, V.; Bagavathiannan, M.; Chauhan, B.S.; Singh, S. Evaluation of current policies on the use of unmanned aerial vehicles in Indian agriculture. Curr. Sci. 2019, 117, 25.

- Szabó, G.; Bertalan, L.; Barkóczi, N.; Kovács, Z.; Burai, P.; Lénárt, C. Zooming on aerial survey. In Small Flying Drones; Springer: Cham, Switzerland, 2018; pp. 91–126.

- Tonkin, T.N.; Midgley, N.G. Ground-control networks for image based surface reconstruction: An investigation of optimum survey designs using UAV derived imagery and structure-from-motion photogrammetry. Remote Sens. 2016, 8, 786.

- Dallas, R.W.A. Architectural and archaeological photogrammetry. In Close Range Photogrammetry and Machine Vision; Atkinson, K.B., Ed.; Whittles Publishing: Scotland, UK, 1996; pp. 283–303.

- Rodríguez-Gonzálvez, P.; Holgado-Barco, A.; González-Aguilera, D.; Guerrero-Sevilla, D.; Hernández-López, D. Sistema de Adquisición de Imágenes Nadirales y Oblicuas. ES Patent 2644168-B1, 19 September 2018.

- Khalili, A. Pull Rod Type Digital Camera. WO Patent 2015192207-A1, January 2015. Available online: https://patents.google.com/patent/US9843708B2/en (accessed on 13 August 2020).

- Staudinger, R.J.; Chevere-Santos, M.; Zhou, R. Portable Remote Camera Control Device. US Patent 7706673-B1, April 2010.

- Winners Sun Plastic and Electronic Shenzhen CO LTD. WO Patent 2016050011-A1, 27 April 2016.

- Anari, F.A., III; Vosburg, R.P.; VanZile, R., III. Camera Pole. US Patent 2015108777-A1, 23 April 2015.

- Gonçalves, J.A.; Moutinho, O.F.; Rodrigues, A.C. Pole photogrammetry with an action camera for fast and accurate surface mapping. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2016, 41, 571–575.

- Del Pozo, S.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Onrubia-Pintado, J.; González-Aguilera, D. Sensor fusion for 3D archaeological documentation and reconstruction: Case study of “Cueva Pintada” in Galdar, Gran Canaria. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W15, 373–379.

- Sánchez-Aparicio, L.J.; Herrero-Huerta, M.; Esposito, R.; Roel Schipper, H.; González-Aguilera, D. Photogrammetric solution for analysis of out-of-plane movements of a masonry structure in a large-scale laboratory experiment. Remote Sens. 2019, 11, 1871.

- González-Aguilera, D.; López-Fernández, L.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Guerrero, D.; Remondino, F.; Menna, F.; Nocerino, E.; Toschi, I.; Ballabeni, A.; et al. GRAPHOS–open-source software for photogrammetric applications. Photogramm. Rec. 2018, 33, 11–29.

- Schönberger, J.L.; Frahm, J. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Gesto-Diaz, M.; Tombari, F.; Gonzalez-Aguilera, D.; Lopez-Fernandez, L.; Rodriguez-Gonzalvez, P. Feature matching evaluation for multimodal correspondence. ISPRS J. Photogramm. Remote Sens. 2017, 129, 179–188.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

- Abdel-Aziz, Y.I.; Karara, H.M.; Hauck, M. Direct linear transformation from comparator coordinates into object space coordinates in close-range photogrammetry. Photogramm. Eng. Remote Sens. 2015, 81, 103–107.

- Triggs, B.; McLauchlan, P.F.; Hartley, R.I.; Fitzgibbon, A.W. Bundle Adjustment-A Modern Synthesis. Vis. Algorithms Theory Pract. 2000, 34099, 298–372.

- Langguth, F.; Sunkavalli, K.; Hadap, S.; Goesele, M. Shading-aware multi-view stereo. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2016; pp. 469–485.

- Semerjian, B. A new variational framework for multiview surface reconstruction. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 719–734.

- Kraus, K. Photogrammetry-Fundamentals and Standard Process. Dummler/Bonn 1993, 1, 397.

- Amhar, F.; Jansa, J.; Ries, C. The generation of true orthophotos using a 3D building model in conjunction with a conventional DTM. Int. Arch. Photogramm. Remote Sens. 1998, 32, 16–22.

- Rodríguez-Gonzálvez, P.; Garcia-Gago, J.; Gomez-Lahoz, J.; González-Aguilera, D. Confronting passive and active sensors with non-Gaussian statistics. Sensors 2014, 14, 13759–13777.

- American Society for Photogrammetry and Remote Sensing (ASPRS). ASPRS positional accuracy standards for digital geospatial data. Photogramm. Eng. Remote Sens. 2015, 81, 1–26.

- Nocerino, E.; Menna, F.; Remondino, F.; Toschi, I.; Rodríguez-Gonzálvez, P. Investigation of indoor and outdoor performance of two portable mobile mapping systems. In Proceedings of the Videometrics, Range Imaging, and Applications XIV, Munich, Germany, 26 June 2017; International Society for Optics and Photonics: Bellingham, WA, USA, 2017; Volume 10332, p. 103320I.

- Höhle, J.; Höhle, M. Accuracy assessment of digital elevation models by means of robust statistical methods. ISPRS J. Photogramm. Remote Sens. 2009, 64, 398–406.

- Rodríguez-Gonzálvez, P.; González-Aguilera, D.; Hernández-López, D.; González-Jorge, H. Accuracy assessment of airborne laser scanner dataset by means of parametric and non-parametric statistical methods. IET Sci. Meas. Technol. 2015, 9, 505–513.

- Caselles, J.O.; Clapés, J.; Sáenz Sagasti, J.I.; Pérez Gracia, V.; Rodríguez Santana, C.G. Integrated GPR and Laser Vibration Surveys to Preserve Prehistorical Painted Caves: Cueva Pintada Case Study. Int. J. Archit. Herit. 2019, 1–9.

- De Guzmán, C.M.; Pintado, J.O.; Sagasti, J.S. Trabajos en el Parque Arqueológico de la Cueva Pintada de Gáldar, Gran Canaria. Avances de las intervenciones realizadas en 1993. Anuario de Estudios Atlánticos 1996, 42, 17–76.

- Sanchez-Moral, S.; Garcia-Guinea, J.; Sanz-Rubio, E.; Canaveras, J.C.; Onrubia-Pintado, J. Mortars, pigments and saline efflorescence from Canarian pre-Hispanic constructions (Galdar, Grand Canary Island). Constr. Build. Mater. 2002, 16, 241–250.

- Kazhdan, M.; Bolitho, M.; Hoppe, H. Poisson surface reconstruction. In Proceedings of the Fourth Eurographics Symposium on Geometry Processing, Sardinia, Italy, 26–28 June 2006; Volume 7.

- Rodríguez-Gonzálvez, P.; Nocerino, E.; Menna, F.; Minto, S.; Remondino, F. 3D surveying and modeling of underground passages in WWI fortifications. Int. Arch. Photogramm. Remote Sens. 2015, 40, 17–24.

| Products-Parameters | Value |
|---|---|
| Point cloud (MP, millions of points) | 71.77 |
| Mesh (MT, millions of triangles) | 3.69 |
| Size of the texturized model (pixels) | 16,384 × 16,384 |
| GSD (mm) | 1.47 |

| Parameters | | Value | Accuracy |
|---|---|---|---|
| Translation (m) | X-Coordinate | 435,679.943 | ±0.0011 |
| | Y-Coordinate | 3,113,299.081 | ±0.0007 |
| | Z-Coordinate | 112.165 | ±0.0016 |
| Rotation (rad) | X-Axis (ω) | −0.00000176 | ±0.00004035 |
| | Y-Axis (φ) | +0.00017037 | ±0.00005154 |
| | Z-Axis (κ) | +0.42133813 | ±0.00002850 |
| Scale | | 1 | - |
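The translation, rotation (ω, φ, κ) and scale parameters in the table above describe a three-dimensional similarity transformation, here rigid because the scale is 1. A sketch of applying such a transformation is given below, assuming the common sequence R = Rz(κ)·Ry(φ)·Rx(ω) and the model X′ = T + s·R·X. The rotation order and sign conventions actually used by the authors are not stated here, so this is illustrative only, and the function names are hypothetical.

```python
import numpy as np


def rotation_omega_phi_kappa(omega, phi, kappa):
    """R = Rz(kappa) @ Ry(phi) @ Rx(omega), angles in radians (assumed convention)."""
    co, so = np.cos(omega), np.sin(omega)
    cp, sp = np.cos(phi), np.sin(phi)
    ck, sk = np.cos(kappa), np.sin(kappa)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, co, -so], [0.0, so, co]])
    ry = np.array([[cp, 0.0, sp], [0.0, 1.0, 0.0], [-sp, 0.0, cp]])
    rz = np.array([[ck, -sk, 0.0], [sk, ck, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def helmert(points, translation, omega, phi, kappa, scale=1.0):
    """Apply X' = T + s * R @ X to an (n, 3) array of points."""
    R = rotation_omega_phi_kappa(omega, phi, kappa)
    return np.asarray(translation) + scale * np.asarray(points) @ R.T


# Usage with the tabulated values; local_xyz is a hypothetical (n, 3) array in the model frame:
# utm = helmert(local_xyz, [435679.943, 3113299.081, 112.165],
#               -0.00000176, 0.00017037, 0.42133813)
```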

| Points | Statistic | X-Coordinate | Y-Coordinate | Z-Coordinate | 3D Module |
|---|---|---|---|---|---|
| Control | Median | 0.0002 | 0.0000 | 0.0003 | 0.0017 |
| | NMAD | ±0.0017 | ±0.0007 | ±0.0004 | ±0.0006 |
| | RMSE | ±0.0017 | ±0.0006 | ±0.0009 | ±0.0005 |
| Check | Median | 0.0008 | −0.0040 | 0.0007 | 0.0042 |
| | NMAD | ±0.0006 | ±0.0006 | ±0.0004 | ±0.0004 |
| | RMSE | ±0.0005 | ±0.0006 | ±0.0004 | ±0.0004 |
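The statistics in the residual table above (median, NMAD and RMSE for control and check points) can be computed from the per-point discrepancies as sketched below. NMAD is taken with its usual definition, 1.4826 times the median absolute deviation from the median, which makes it comparable to a standard deviation for normally distributed errors. The RMSE here is the plain root mean square of the residuals, which is an assumption about the definition used; the function name and input layout are hypothetical.

```python
import numpy as np


def accuracy_stats(residuals):
    """Median, NMAD and RMSE per column of an (n, 3) array of X, Y, Z residuals.

    A fourth column is appended with the 3D module sqrt(dx^2 + dy^2 + dz^2).
    """
    r = np.asarray(residuals, dtype=float)
    r = np.column_stack([r, np.linalg.norm(r, axis=1)])
    median = np.median(r, axis=0)
    # Robust spread: scaled median absolute deviation from the median
    nmad = 1.4826 * np.median(np.abs(r - median), axis=0)
    rmse = np.sqrt(np.mean(r ** 2, axis=0))
    return {"median": median, "nmad": nmad, "rmse": rmse}
```

The median and NMAD are insensitive to a few gross errors, whereas the RMSE is not, which is why reporting both helps to detect non-normal residual distributions.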

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Del Pozo, S.; Rodríguez-Gonzálvez, P.; Hernández-López, D.; Onrubia-Pintado, J.; Guerrero-Sevilla, D.; González-Aguilera, D. Novel Pole Photogrammetric System for Low-Cost Documentation of Archaeological Sites: The Case Study of “Cueva Pintada”. Remote Sens. 2020, 12, 2644. https://doi.org/10.3390/rs12162644
