A Novel Deep Forest-Based Active Transfer Learning Method for PolSAR Images

Abstract

1. Introduction

2. Materials and Methods

2.1. Active Learning

2.2. Deep Forest Model

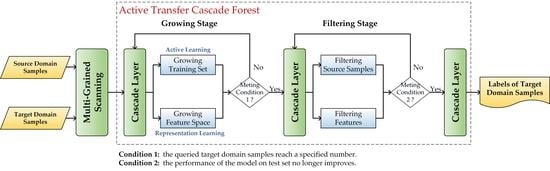

2.3. The Proposed Active Transfer Learning Method

2.3.1. Growing Stage

- The growth of the training set

- The growth of the feature space
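In the deep forest of Zhou and Feng, every forest in a cascade layer outputs a class-probability vector for each sample, and these vectors are appended to the features passed on to the next layer. The minimal sketch below shows that concatenation (step 5 of Algorithm 1). It assumes each forest is a hypothetical callable that maps a feature vector to class probabilities. It illustrates the general deep forest mechanism and is not the authors' code.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for a trained forest: feature vector -> class probabilities.
Forest = Callable[[Sequence[float]], List[float]]


def augment(multi_grained: List[List[float]], layer_input: List[List[float]],
            forests: List[Forest]) -> List[List[float]]:
    """Append every forest's class vector to each sample's multi-grained features."""
    out = []
    for base, x in zip(multi_grained, layer_input):
        row = list(base)              # copy so the caller's features stay unchanged
        for forest in forests:
            row.extend(forest(x))     # one class vector per forest, computed on the layer input
        out.append(row)
    return out
```

With F forests per layer and C classes, each augmented vector is F × C entries longer than the multi-grained features it starts from.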

2.3.2. Filtering Stage

- Feature subspace filtering

- Source domain sample filtering

| Algorithm 1: Proposed active transfer learning method. |
|---|
| Input: source domain samples, target domain samples. |
| Output: class labels of the target domain samples. |
| Require: active learning strategy, filtering criterion of source domain samples, filtering criterion of feature subspaces. |
| Begin: |
| 1: train the multi-grained scanning forest based on the source domain samples. |
| 2: transform the source and target domain samples from raw features into multi-grained features. |
| 3: initialize the set of labeled target domain samples. |
| Repeat until the number of labeled target domain samples meets the requirement |
| 4: train a cascade layer based on the current training set. |
| 5: generate augmented features for all the samples and concatenate them with the multi-grained features. |
| 6: use the active learning strategy to select N samples from the unlabeled target domain samples for manual annotation, and then add them to the labeled target domain samples. |
| End repeat |
| Repeat until the performance of the model no longer improves |
| 7: train a cascade layer based on the current training set. |
| 8: generate augmented features for all the samples and concatenate them with the multi-grained features. |
| 9: use the feature subspace filtering criterion to remove domain-sensitive feature subspaces. |
| 10: use the source domain sample filtering criterion to remove samples from the source domain. |
| 11: evaluate the performance of the model based on the classification accuracy of the labeled target domain samples. |
| End repeat |
| 12: obtain the class labels of the target domain samples from the final cascade layer. |
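The control flow of Algorithm 1 can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. Every callable (`train_layer`, `select`, `annotate`, `filter_subspaces`, `filter_source`, `accuracy`) is a hypothetical hook that stands in for a specific component: cascade-layer training with feature augmentation, the active learning strategy, manual labeling, the two filtering criteria, and the accuracy check. The labeling budget, the empty initial labeled set, and the `max_rounds` safety cap are likewise illustrative assumptions.

```python
from typing import Callable, List, Tuple, TypeVar

S = TypeVar("S")  # one sample; its representation is left to the hooks


def active_transfer(
    source: List[S],
    pool: List[S],
    features: List[int],
    budget: int,
    n_per_round: int,
    train_layer: Callable[[List[S], List[int]], object],
    select: Callable[[object, List[S], int], List[int]],
    annotate: Callable[[S], S],
    filter_subspaces: Callable[[object, List[int]], List[int]],
    filter_source: Callable[[object, List[S]], List[S]],
    accuracy: Callable[[object], float],
    max_rounds: int = 50,
) -> Tuple[object, List[S]]:
    """Two-stage control flow of Algorithm 1; every callable is a hypothetical hook."""
    labeled: List[S] = []  # step 3 (assumed to start empty)
    pool = list(pool)      # unlabeled target samples

    # Growing stage (steps 4-6): query target samples until the budget is met.
    while len(labeled) < budget and pool:
        layer = train_layer(source + labeled, features)   # steps 4-5
        k = min(n_per_round, budget - len(labeled))
        picked = set(select(layer, pool, k))              # step 6
        if not picked:
            break  # the strategy returned nothing; avoid looping forever
        labeled += [annotate(pool[i]) for i in sorted(picked)]
        pool = [s for i, s in enumerate(pool) if i not in picked]

    # Filtering stage (steps 7-11): prune subspaces and source samples
    # for as long as the accuracy keeps improving.
    best = train_layer(source + labeled, features)
    best_acc = accuracy(best)
    for _ in range(max_rounds):
        features = filter_subspaces(best, features)       # step 9
        source = filter_source(best, source)              # step 10
        layer = train_layer(source + labeled, features)   # steps 7-8, next round
        acc = accuracy(layer)                             # step 11
        if acc <= best_acc:
            break  # no improvement: keep the previous layer
        best, best_acc = layer, acc
    return best, labeled  # step 12 uses `best` to label the target samples
```

Keeping the criteria as hooks leaves their concrete definitions open. The loop only encodes the two-stage structure: grow the training set first, then filter until accuracy stops improving.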

3. Experiments

3.1. Experimental Data and Settings

3.2. Comparison with Existing Methods

3.3. Effectiveness Evaluation

3.3.1. Effect of the Feature Space Adjustment Strategy

3.3.2. Influence of the Model Parameters

3.3.3. Effects of the Proposed Active Learning Strategy

- The proposed active learning strategy (AL-Proposed),

- Active learning using only uncertainty (AL-U),

- Active learning using uncertainty and the cosine angle distance (AL-U-CAD), and

- Random sampling (RS).
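AL-U and AL-U-CAD are described here only by name. The sketch below illustrates the general idea, borrowed from batch-mode active learning (Demir et al.). It ranks unlabeled samples by the best-versus-second-best probability margin. It then greedily picks a diverse batch by maximizing the minimum cosine angle distance to the samples already chosen. The margin measure, the candidate-set size `m`, and the greedy max-min rule are assumptions for illustration, and this is not the AL-Proposed strategy.

```python
import math
from typing import List, Sequence


def margin(probs: Sequence[float]) -> float:
    """Best-versus-second-best gap; a small gap means high uncertainty."""
    top = sorted(probs, reverse=True)
    return top[0] - (top[1] if len(top) > 1 else 0.0)


def cosine_angle(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in radians between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    cos = max(-1.0, min(1.0, dot / (na * nb)))  # clamp floating-point rounding
    return math.acos(cos)


def select_u_cad(probs: List[Sequence[float]], feats: List[Sequence[float]],
                 n: int, m: int) -> List[int]:
    """Pick n indices: keep the m most uncertain, then add by max-min angle."""
    if not probs or n <= 0:
        return []
    ranked = sorted(range(len(probs)), key=lambda i: margin(probs[i]))
    candidates = ranked[:max(m, n)]
    chosen = [candidates[0]]  # start from the single most uncertain sample
    while len(chosen) < min(n, len(candidates)):
        rest = [i for i in candidates if i not in chosen]
        best = max(rest, key=lambda i: min(cosine_angle(feats[i], feats[j]) for j in chosen))
        chosen.append(best)
    return chosen
```

Setting `m` equal to `n` reduces this to pure uncertainty sampling in the spirit of AL-U. A larger `m` lets the diversity term reject near-duplicate uncertain samples.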

4. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Kamishima, T.; Hamasaki, M.; Akaho, S. TrBagg: A Simple Transfer Learning Method and its Application to Personalization in Collaborative Tagging. In Proceedings of the 2009 Ninth IEEE International Conference on Data Mining, Miami, FL, USA, 6–9 December 2009; pp. 219–228.

- Lin, D.; An, X.; Zhang, J. Double-bootstrapping source data selection for instance-based transfer learning. Pattern Recognit. Lett. 2013, 34, 1279–1285.

- Donahue, J.; Hoffman, J.; Rodner, E.; Saenko, K.; Darrell, T. Semi-supervised Domain Adaptation with Instance Constraints. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 668–675.

- Liu, X.; Wang, G.; Cai, Z.; Zhang, H. Bagging based ensemble transfer learning. J. Ambient Intell. Humaniz. Comput. 2016, 7, 29–36.

- Liu, B.; Xiao, Y.; Hao, Z. A Selective Multiple Instance Transfer Learning Method for Text Categorization Problems. Knowl. Based Syst. 2018, 141, 178–187.

- Pereira, L.A.; da Silva Torres, R. Semi-supervised transfer subspace for domain adaptation. Pattern Recognit. 2018, 75, 235–249.

- Zhang, L.; Guo, L.; Gao, H.; Dong, D.; Fu, G.; Hong, X. Instance-based ensemble deep transfer learning network: A new intelligent degradation recognition method and its application on ball screw. Mech. Syst. Signal Process. 2020, 140, 106681.

- Pan, S.J.; Tsang, I.W.; Kwok, J.T.; Yang, Q. Domain Adaptation via Transfer Component Analysis. IEEE Trans. Neural Netw. 2011, 22, 199–210.

- Duan, L.; Xu, D.; Tsang, I.W. Domain Adaptation From Multiple Sources: A Domain-Dependent Regularization Approach. IEEE Trans. Neural Netw. Learn. Syst. 2012, 23, 504–518.

- Othman, E.; Bazi, Y.; Melgani, F.; Alhichri, H.; Alajlan, N.; Zuair, M. Domain Adaptation Network for Cross-Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 4441–4456.

- Theckel Joy, T.; Rana, S.; Gupta, S.; Venkatesh, S. A Flexible Transfer Learning Framework for Bayesian optimization with Convergence Guarantee. Expert Syst. Appl. 2018, 115, 656–672.

- Yan, K.; Kou, L.; Zhang, D. Learning Domain-Invariant Subspace Using Domain Features and Independence Maximization. IEEE Trans. Cybern. 2018, 48, 288–299.

- Wang, Y.; Zhai, J.; Li, Y.; Chen, K.; Xue, H. Transfer learning with partial related “instance-feature” knowledge. Neurocomputing 2018, 310, 115–124.

- Qin, X.; Yang, J.; Li, P.; Sun, W.; Liu, W. A Novel Relational-Based Transductive Transfer Learning Method for PolSAR Images via Time-Series Clustering. Remote Sens. 2019, 11, 1358.

- Wang, Z.; Song, Y.; Zhang, C. Transferred Dimensionality Reduction. In Machine Learning and Knowledge Discovery in Databases; Daelemans, W., Goethals, B., Morik, K., Eds.; Springer: Berlin/Heidelberg, Germany, 2008; pp. 550–565.

- Chang, H.; Han, J.; Zhong, C.; Snijders, A.M.; Mao, J. Unsupervised Transfer Learning via Multi-Scale Convolutional Sparse Coding for Biomedical Applications. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1182–1194.

- Siddhant, A.; Goyal, A.; Metallinou, A. Unsupervised Transfer Learning for Spoken Language Understanding in Intelligent Agents. arXiv 2018, arXiv:cs.CL/1811.05370.

- Rochette, A.; Yaghoobzadeh, Y.; Hazen, T.J. Unsupervised Domain Adaptation of Contextual Embeddings for Low-Resource Duplicate Question Detection. arXiv 2019, arXiv:cs.CL/1911.02645.

- Passalis, N.; Tefas, A. Unsupervised Knowledge Transfer Using Similarity Embeddings. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 946–950.

- Liu, Y.; Ding, L.; Chen, C.; Liu, Y. Similarity-Based Unsupervised Deep Transfer Learning for Remote Sensing Image Retrieval. IEEE Trans. Geosci. Remote Sens. 2020, 1–18.

- Deng, C.; Xue, Y.; Liu, X.; Li, C.; Tao, D. Active Transfer Learning Network: A Unified Deep Joint Spectral-Spatial Feature Learning Model for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2019, 57, 1741–1754.

- Wu, D. Active semi-supervised transfer learning (ASTL) for offline BCI calibration. In Proceedings of the 2017 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Banff, AB, Canada, 5–8 October 2017; pp. 246–251.

- Yan, Y.; Subramanian, R.; Lanz, O.; Sebe, N. Active transfer learning for multi-view head-pose classification. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1168–1171.

- Tang, X.; Du, B.; Huang, J.; Wang, Z.; Zhang, L. On combining active and transfer learning for medical data classification. IET Comput. Vis. 2019, 13, 194–205.

- Wang, N.; Li, T.; Zhang, Z.; Cui, L. TLTL: An Active Transfer Learning Method for Internet of Things Applications. In Proceedings of the ICC 2019-2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6.

- Zhou, Z.; Feng, J. Deep forest: Towards an alternative to deep neural networks. arXiv 2017, arXiv:1702.08835.

- Settles, B. Active Learning Literature Survey; University of Wisconsin-Madison: Madison, WI, USA, 2010; Volume 52.

- Schein, A.I.; Ungar, L.H. Active learning for logistic regression: An evaluation. Mach. Learn. 2007, 68, 235–265.

- Demir, B.; Persello, C.; Bruzzone, L. Batch-Mode Active-Learning Methods for the Interactive Classification of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 1014–1031.

- Brinker, K. Incorporating Diversity in Active Learning with Support Vector Machines. In Proceedings of the Twentieth International Conference (ICML 2003), Washington, DC, USA, 21–24 August 2003; pp. 59–66.

- Persello, C.; Bruzzone, L. Active Learning for Domain Adaptation in the Supervised Classification of Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2012, 50, 4468–4483.

- Yang, J.; Li, S.; Xu, W. Active Learning for Visual Image Classification Method Based on Transfer Learning. IEEE Access 2018, 6, 187–198.

- Cloude, S.R.; Pottier, E. An entropy based classification scheme for land applications of polarimetric SAR. IEEE Trans. Geosci. Remote Sens. 1997, 35, 68–78.

- Deng, W.; Lendasse, A.; Ong, Y.; Tsang, I.W.; Chen, L.; Zheng, Q. Domain Adaption via Feature Selection on Explicit Feature Map. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 1180–1190.

- Fernando, B.; Habrard, A.; Sebban, M.; Tuytelaars, T. Unsupervised Visual Domain Adaptation Using Subspace Alignment. In Proceedings of the 2013 IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 2960–2967.

- Borgwardt, K.M.; Gretton, A.; Rasch, M.J.; Kriegel, H.P.; Schölkopf, B.; Smola, A.J. Integrating Structured Biological Data by Kernel Maximum Mean Discrepancy. Bioinformatics 2006, 22, 49–57.

| Data Set | Number of labeled target samples | Baseline | BETL | TrBagg | SMIDA | SSTCA | Proposed |
|---|---|---|---|---|---|---|---|
| Wuhan 2011 to Wuhan 2017 | 5 | 80.50 | 73.57 ± 5.02 | 74.72 ± 7.56 | 79.42 ± 0.47 | 74.18 ± 5.29 | 81.70 ± 0.58 |
| | 50 | 80.50 | 73.32 ± 5.29 | 81.69 ± 1.07 | 80.90 ± 0.96 | 79.18 ± 1.92 | 83.74 ± 0.42 |
| | 100 | 80.50 | 78.90 ± 2.31 | 81.53 ± 0.78 | 81.47 ± 0.73 | 78.52 ± 1.47 | 84.76 ± 0.45 |
| | 150 | 80.50 | 79.20 ± 1.86 | 82.17 ± 1.05 | 82.63 ± 0.52 | 78.26 ± 1.66 | 85.96 ± 0.67 |
| | 200 | 80.50 | 80.61 ± 1.94 | 83.19 ± 0.66 | 81.90 ± 2.62 | 79.59 ± 2.13 | 86.71 ± 0.21 |
| Wuhan 2016 to Wuhan 2017 | 5 | 81.90 | 72.02 ± 5.88 | 63.57 ± 22.17 | 79.49 ± 0.90 | 76.91 ± 1.18 | 83.38 ± 0.45 |
| | 50 | 81.90 | 74.59 ± 6.72 | 81.30 ± 0.81 | 81.63 ± 0.91 | 80.53 ± 0.59 | 85.14 ± 0.29 |
| | 100 | 81.90 | 78.30 ± 3.00 | 82.20 ± 1.58 | 82.18 ± 0.95 | 81.94 ± 0.78 | 86.36 ± 0.28 |
| | 150 | 81.90 | 81.14 ± 1.94 | 82.44 ± 0.75 | 83.01 ± 1.06 | 82.35 ± 0.90 | 87.13 ± 0.27 |
| | 200 | 81.90 | 81.10 ± 2.12 | 83.34 ± 0.69 | 83.42 ± 0.79 | 82.45 ± 1.04 | 87.87 ± 0.32 |
| Suzhou 2008 to Suzhou 2016 | 5 | 58.40 | 51.94 ± 4.02 | 46.18 ± 14.92 | 77.02 ± 1.22 | 59.83 ± 5.41 | 58.34 ± 6.78 |
| | 50 | 58.40 | 65.73 ± 9.07 | 70.98 ± 9.72 | 80.20 ± 1.12 | 77.60 ± 2.97 | 80.95 ± 2.24 |
| | 100 | 58.40 | 69.45 ± 8.80 | 77.11 ± 5.50 | 81.84 ± 1.09 | 82.26 ± 1.01 | 83.91 ± 1.25 |
| | 150 | 58.40 | 73.53 ± 7.88 | 76.40 ± 4.22 | 82.14 ± 0.73 | 82.12 ± 1.26 | 86.73 ± 0.70 |
| | 200 | 58.40 | 79.51 ± 3.00 | 80.57 ± 3.11 | 83.55 ± 0.96 | 81.30 ± 2.83 | 88.76 ± 0.41 |
| Average computational time (s) | | 3.6 | 19.6 | 4.1 | 9.3 | 23.5 | 130 |

| Data Number | MMD (Before) | MMD (After) | DBD (Before) | DBD (After) |
|---|---|---|---|---|
| 1 | 1.9101 | 1.5093 | 97.91% | 97.57% |
| 2 | 0.9459 | 0.7086 | 95.83% | 94.55% |
| 3 | 1.2600 | 0.9229 | 98.22% | 97.24% |
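The MMD columns above measure the discrepancy between the source and target feature distributions before and after filtering. As a hedged illustration, the snippet below computes the biased empirical estimate of squared MMD with a Gaussian (RBF) kernel, following the kernel MMD of Borgwardt et al. Several details are assumptions: the kernel, its bandwidth `sigma`, and whether the paper reports MMD or its square. The values it produces are therefore not comparable to the table.

```python
import math
from typing import List, Sequence


def rbf(a: Sequence[float], b: Sequence[float], sigma: float) -> float:
    """Gaussian kernel exp(-||a - b||^2 / (2 sigma^2))."""
    sq = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-sq / (2.0 * sigma ** 2))


def mmd2(xs: List[Sequence[float]], ys: List[Sequence[float]], sigma: float = 1.0) -> float:
    """Biased estimate: mean k(x, x') + mean k(y, y') - 2 mean k(x, y)."""
    def mean_k(us: List[Sequence[float]], vs: List[Sequence[float]]) -> float:
        return sum(rbf(u, v, sigma) for u in us for v in vs) / (len(us) * len(vs))
    return mean_k(xs, xs) + mean_k(ys, ys) - 2.0 * mean_k(xs, ys)
```

In the table above, MMD decreases after filtering for all three data numbers. With this estimator, a smaller value likewise means that the two sample sets are harder to tell apart under the chosen kernel.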

| Parameters | Parameter Setting | Wuhan 2011 to Wuhan 2017 | Wuhan 2016 to Wuhan 2017 | Suzhou 2008 to Suzhou 2016 | Average Computational Time (s) |
|---|---|---|---|---|---|
| W | | 86.27 ± 0.92 | 87.30 ± 0.62 | 87.04 ± 0.68 | 128.3 |
| | | 86.34 ± 0.49 | 87.85 ± 0.61 | 87.63 ± 0.60 | 173.5 |
| | | 86.71 ± 0.21 | 87.87 ± 0.32 | 88.76 ± 0.41 | 190.1 |
| | | 87.39 ± 0.16 | 87.80 ± 0.43 | 88.43 ± 0.38 | 257.0 |
| N | | 86.77 ± 0.38 | 87.62 ± 0.63 | 88.67 ± 0.40 | 529.2 |
| | | 86.71 ± 0.21 | 87.87 ± 0.32 | 88.76 ± 0.41 | 190.1 |
| | | 86.76 ± 0.56 | 87.98 ± 0.53 | 87.96 ± 0.33 | 136.9 |
| | | 86.87 ± 0.41 | 87.82 ± 0.24 | 87.95 ± 0.52 | 113.7 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Qin, X.; Yang, J.; Zhao, L.; Li, P.; Sun, K. A Novel Deep Forest-Based Active Transfer Learning Method for PolSAR Images. Remote Sens. 2020, 12, 2755. https://doi.org/10.3390/rs12172755