A Feature Space Constraint-Based Method for Change Detection in Heterogeneous Images

Abstract

1. Introduction

2. Background

2.1. Homogeneous Transformation

2.2. Style Transfer

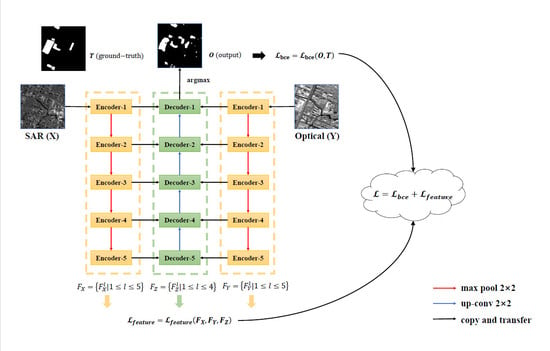

3. Proposed Method

3.1. Feature Space Representation

3.2. Feature Space Loss

3.3. Combined Loss Function

3.4. Detailed Change Detection Scheme

4. Experiments

4.1. Dataset Description

4.1.1. Dataset-1

4.1.2. Dataset-4

4.2. Implementation Details

4.2.1. Data Augmentation

4.2.2. Parameter Setting

4.2.3. Evaluation Criteria

4.3. Results and Evaluation

4.3.1. Experimental Design

4.3.2. Comparison with Other Methods

4.3.3. Experiments on Different Modules of the Proposed Method

4.3.4. Experiments on Different Hyperparameters of the Proposed Method

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BCELoss | binary cross entropy loss |
| BDNN | bipartite differential neural network |
| CD | change detection |
| CDMs | change disguise maps |
| CDL | coupled dictionary learning |
| cGAN | conditional generative adversarial network |
| CM | change map |
| CMS-HCC | cooperative multitemporal segmentation and hierarchical compound classification |
| CNN | convolutional neural network |
| DEM | digital elevation model |
| DHFF | deep homogeneous feature fusion |
| ENVI | Environment for Visualizing Images |
| FSL | feature space loss |
| GCN | graph convolutional network |
| GISs | geographic information systems |
| MDS | multidimensional scaling |
| NSW | non-shared weight |
| OA | overall accuracy |
| O-PCC | object-based PCC |
| P-PCC | pixel-based PCC |
| PCC | post-classification comparison |
| Pr | precision |
| Re | recall |
| SAR | synthetic aperture radar |
| SDAE | stacked denoising auto-encoder |
| SGD | stochastic gradient descent |


| Part | Block | Output Size | Kernel |
|---|---|---|---|
| Encoder | Encoder-1 | 112 × 112 | 3 × 3 × 1 × 16; 3 × 3 × 16 × 16 |
| Encoder | Encoder-2 | 56 × 56 | 3 × 3 × 16 × 32; 3 × 3 × 32 × 32 |
| Encoder | Encoder-3 | 28 × 28 | 3 × 3 × 32 × 64; 3 × 3 × 64 × 64 |
| Encoder | Encoder-4 | 14 × 14 | 3 × 3 × 64 × 128; 3 × 3 × 128 × 128 |
| Decoder | Decoder-1 | 112 × 112 | 3 × 3 × 32 × 16; 3 × 3 × 16 × 2 |
| Decoder | Decoder-2 | 56 × 56 | 3 × 3 × 64 × 32; 3 × 3 × 32 × 16 |
| Decoder | Decoder-3 | 28 × 28 | 3 × 3 × 128 × 64; 3 × 3 × 64 × 32 |
| Decoder | Decoder-4 | 14 × 14 | 3 × 3 × 256 × 128; 3 × 3 × 128 × 64 |
| Decoder | Decoder-5 | 7 × 7 | 3 × 3 × 128 × 128 |
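
One way to read the output sizes and kernel shapes listed for the network is sketched below. It assumes, without confirmation from the text, that each stage halves the spatial size of a 112 × 112 input and that a kernel entry such as 3 × 3 × 64 × 128 means height × width × input channels × output channels. The function names are hypothetical and only illustrate the arithmetic.

```python
def stage_sizes(input_size: int, stages: int) -> list[int]:
    """Spatial size at each stage, assuming every stage after the first halves it."""
    sizes = [input_size]
    for _ in range(stages - 1):
        sizes.append(sizes[-1] // 2)
    return sizes


def conv_weight_count(height: int, width: int, c_in: int, c_out: int) -> int:
    """Number of weights (biases excluded) in one convolution kernel."""
    return height * width * c_in * c_out


if __name__ == "__main__":
    # Five resolution levels starting from a 112 x 112 input.
    print(stage_sizes(112, 5))
    # Weights in a 3 x 3 kernel mapping 256 channels to 128 channels.
    print(conv_weight_count(3, 3, 256, 128))
```

Under these assumptions the five resolution levels are 112, 56, 28, 14 and 7, and a decoder input of 256 channels would correspond to 128 upsampled channels concatenated with 128 skip-connection channels, as in a U-Net-style design.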

| Dataset | Training Set: Size | Training Set: Number of Samples | Training Set: Unchanged Samples | Test Set: Size |
|---|---|---|---|---|
| Dataset-1 | 112 × 112 | 320 | 161 (50.3%) | 560 × 560 |
| Dataset-4 | 112 × 112 | 376 | 266 (70.7%) | 560 × 560 |

| Dataset | Method | OA | Kappa | Pr | Re | F1 |
|---|---|---|---|---|---|---|
| Dataset-1 | P-PCC | 61.7 | 14.1 | 16.0 | 79.2 | 26.6 |
| Dataset-1 | O-PCC | 78.5 | 34.4 | 28.1 | 93.3 | 43.2 |
| Dataset-1 | CMS-HCC (L1) | 94.8 | 68.4 | 68.7 | 73.9 | 71.2 |
| Dataset-1 | CMS-HCC (L2) | 95.1 | 71.0 | 69.2 | 78.9 | 73.7 |
| Dataset-1 | CMS-HCC (L3) | 93.9 | 66.4 | 61.2 | 81.1 | 67.8 |
| Dataset-1 | Proposed | 95.7 | 74.3 | 73.5 | 80.2 | 76.6 |
| Dataset-4 | P-PCC | 69.3 | 20.1 | 20.0 | 77.2 | 31.8 |
| Dataset-4 | O-PCC | 79.3 | 34.9 | 29.3 | 87.1 | 43.9 |
| Dataset-4 | CMS-HCC (L1) | 93.9 | 69.2 | 62.0 | 87.3 | 72.6 |
| Dataset-4 | CMS-HCC (L2) | 93.1 | 67.9 | 58.1 | 93.2 | 71.6 |
| Dataset-4 | CMS-HCC (L3) | 92.1 | 64.9 | 54.2 | 94.8 | 69.0 |
| Dataset-4 | Proposed | 95.2 | 71.0 | 75.5 | 72.1 | 73.6 |
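
The columns OA, Kappa, Pr, Re and F1 can all be derived from a binary confusion matrix. The sketch below uses the standard textbook definitions (with Cohen's kappa for the Kappa column), which is an assumption because the exact formulas of Section 4.2.3 are not reproduced here. The function name and argument names are hypothetical, and the "changed" class is treated as the positive class.

```python
def change_detection_metrics(tp: int, fp: int, fn: int, tn: int) -> dict[str, float]:
    """Standard binary metrics from pixel counts.

    tp: changed pixels detected as changed
    fp: unchanged pixels detected as changed
    fn: changed pixels detected as unchanged
    tn: unchanged pixels detected as unchanged
    Assumes every denominator is non-zero (no degenerate maps).
    """
    n = tp + fp + fn + tn
    oa = (tp + tn) / n                       # overall accuracy
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    # Agreement expected by chance, from the row and column marginals.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (oa - pe) / (1 - pe)
    return {"OA": oa, "Kappa": kappa, "Pr": precision, "Re": recall, "F1": f1}


if __name__ == "__main__":
    # Toy counts, not taken from the paper.
    for name, value in change_detection_metrics(tp=40, fp=10, fn=20, tn=30).items():
        print(f"{name}: {100 * value:.1f}")
```

Because F1 is the harmonic mean of Pr and Re, a method with very high recall but low precision, such as Pr = 16.0 and Re = 79.2, still ends up with a low F1 of about 26.6.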

| Dataset | NSW | FSL | OA | Kappa | Pr | Re | F1 |
|---|---|---|---|---|---|---|---|
| Dataset-1 | | | 84.7 | 40.1 | 34.0 | 78.8 | 47.5 |
| Dataset-1 | √ | | 95.4 | 72.0 | 72.1 | 77.3 | 74.5 |
| Dataset-1 | √ | √ | 95.7 | 74.3 | 73.5 | 80.2 | 76.6 |
| Dataset-4 | | | 89.8 | 47.7 | 46.5 | 62.2 | 53.2 |
| Dataset-4 | √ | | 94.7 | 69.2 | 71.1 | 73.4 | 72.1 |
| Dataset-4 | √ | √ | 95.2 | 71.0 | 75.5 | 72.1 | 73.6 |

| Hyperparameter value | OA | Kappa | Pr | Re | F1 |
|---|---|---|---|---|---|
| 1/55 | 94.9 | 70.5 | 71.3 | 75.9 | 73.3 |
| 1/45 | 94.8 | 69.8 | 71.6 | 74.1 | 72.7 |
| 1/35 | 95.2 | 71.0 | 75.5 | 72.1 | 73.6 |
| 1/25 | 95.1 | 69.8 | 77.9 | 70.3 | 72.7 |
| 1/15 | 95.2 | 69.4 | 79.1 | 66.5 | 71.9 |

| Hyperparameter value | OA | Kappa | Pr | Re | F1 |
|---|---|---|---|---|---|
| 0 | 94.7 | 69.2 | 71.1 | 73.4 | 72.1 |
| | 94.7 | 70.2 | 69.1 | 76.9 | 72.8 |
| | 95.2 | 71.0 | 75.5 | 72.1 | 73.6 |
| | 95.1 | 70.1 | 75.4 | 70.4 | 75.4 |

| Hyperparameter value | OA | Kappa | Pr | Re | F1 |
|---|---|---|---|---|---|
| 4.0 | 95.0 | 68.7 | 75.7 | 67.8 | 71.4 |
| 6.0 | 95.1 | 70.3 | 74.3 | 72.1 | 73.0 |
| 8.0 | 95.2 | 71.0 | 75.5 | 72.1 | 73.6 |
| 10.0 | 95.0 | 70.4 | 73.3 | 73.2 | 73.1 |
| 12.0 | 94.7 | 70.3 | 69.7 | 77.2 | 73.2 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Shi, N.; Chen, K.; Zhou, G.; Sun, X. A Feature Space Constraint-Based Method for Change Detection in Heterogeneous Images. Remote Sens. 2020, 12, 3057. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12183057