Development of Land Cover Classification Model Using AI Based FusionNet Network

Abstract

1. Introduction

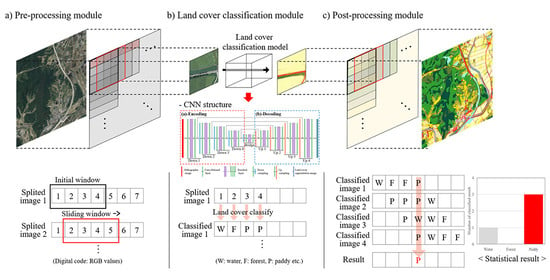

2. Development of Land Cover Classification Model

2.1. CNN Based Land Cover Classification Model Framework

2.2. Pre-Processing Module

2.3. Architecture of CNN Based Land Cover Classification Module

2.4. Post-Processing Module

3. Data Set and Methods for Verification of Land Cover Classification Model

3.1. Study Procedure

3.2. Study Area

3.2.1. Training Area

3.2.2. Verification Area

3.3. Data Acquisition

3.3.1. Orthographic Image

3.3.2. Land Cover Map

3.4. Training Land Cover Classification Model

3.5. Verification Method for Land Cover Classification Model

4. Verification Result of Land Cover Classification Model

4.1. Performance of Land Cover Classification at the Child Subcategory

4.2. Classification Accuracy of the Aggregated Land Cover to Main Category

4.3. Land Cover Classification of the Agricultural Fields

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Main Category (7 Items) | Parent Subcategory (22 Items) | Child Subcategory (41 Items) | Color Code R | Color Code G | Color Code B | Remark |
|---|---|---|---|---|---|---|
| Urbanized area | Residential area | Single housings | 254 | 230 | 194 |  |
|  |  | Apartment housings | 223 | 193 | 111 |  |
|  | Industrial area | Industrial facilities | 192 | 132 | 132 |  |
|  | Commercial area | Commercial and office buildings | 237 | 131 | 184 |  |
|  |  | Mixed use areas | 223 | 176 | 164 |  |
|  | Culture and sports recreation area | Culture and sports recreation facilities | 246 | 113 | 138 |  |
|  | Transportation area | Airports | 229 | 38 | 254 |  |
|  |  | Ports | 197 | 50 | 81 |  |
|  |  | Railroads | 252 | 4 | 78 |  |
|  |  | Roads | 247 | 65 | 42 |  |
|  |  | Other transportation and communication facilities | 115 | 0 | 0 |  |
|  | Public facilities area | Basic environmental facilities | 246 | 177 | 18 |  |
|  |  | Educational and administrative facilities | 255 | 122 | 0 |  |
|  |  | Other public facilities | 199 | 88 | 27 |  |
| Agricultural area | Paddy | Consolidated paddy field | 255 | 255 | 191 |  |
|  |  | Paddy field without consolidation | 244 | 230 | 168 |  |
|  | Upland | Consolidated upland | 247 | 249 | 102 |  |
|  |  | Upland without consolidation | 245 | 228 | 10 |  |
|  | Greenhouse | Green houses | 223 | 220 | 115 |  |
|  | Orchard | Orchards | 184 | 177 | 44 |  |
|  | Other cultivation lands | Ranches and fish farms | 184 | 145 | 18 |  |
|  |  | Other cultivation plots | 170 | 100 | 0 |  |
| Forest | Deciduous forest | Deciduous forests | 51 | 160 | 44 |  |
|  | Coniferous forest | Coniferous forests | 10 | 79 | 64 |  |
|  | Mixed forests | Mixed forests | 51 | 102 | 51 |  |
| Grassland | Natural grassland | Natural grasslands | 161 | 213 | 148 |  |
|  | Artificial grassland | Golf courses | 128 | 228 | 90 |  |
|  |  | Cemeteries | 113 | 176 | 90 |  |
|  |  | Other grasslands | 96 | 126 | 51 |  |
| Wetland | Inland wetland | Inland wetlands | 180 | 167 | 208 |  |
|  | Coastal wetland | Tidal flats | 153 | 116 | 153 |  |
|  |  | Salterns | 124 | 30 | 162 |  |
| Barren lands | Natural barren | Beaches | 193 | 219 | 236 |  |
|  |  | Riversides | 171 | 197 | 202 |  |
|  |  | Rocks and boulders | 171 | 182 | 165 |  |
|  | Artificial barrens | Mining sites | 88 | 90 | 138 |  |
|  |  | Sports fields | 123 | 181 | 172 |  |
|  |  | Other artificial barrens | 159 | 242 | 255 |  |
| Water | Inland water | Rivers | 62 | 167 | 255 |  |
|  |  | Lakes | 93 | 109 | 255 |  |
|  | Marine water | Marine water | 23 | 57 | 255 |  |
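
The color codes in the table above can be used to decode a color-rendered land cover map back into class labels and to aggregate child subcategories into their main categories. The following is a minimal sketch, not the authors' implementation: the names `COLOR_CODES`, `decode_pixel`, and `main_category_counts` are hypothetical, only a handful of the 41 rows are included, and the `"Unknown"` fallback for unlisted colors is an assumption.

```python
# (R, G, B) -> (main category, child subcategory).
# Only a subset of the table rows is listed here, for illustration.
COLOR_CODES = {
    (254, 230, 194): ("Urbanized area", "Single housings"),
    (247, 65, 42): ("Urbanized area", "Roads"),
    (247, 249, 102): ("Agricultural area", "Consolidated upland"),
    (184, 177, 44): ("Agricultural area", "Orchards"),
    (51, 160, 44): ("Forest", "Deciduous forests"),
    (62, 167, 255): ("Water", "Rivers"),
}


def decode_pixel(rgb):
    """Return (main category, child subcategory) for an RGB triple, or None if unlisted."""
    return COLOR_CODES.get(tuple(rgb))


def main_category_counts(pixels):
    """Count pixels per main category, aggregating over child subcategories."""
    counts = {}
    for rgb in pixels:
        decoded = decode_pixel(rgb)
        # Assumption: colors missing from the lookup are grouped as "Unknown".
        main = decoded[0] if decoded else "Unknown"
        counts[main] = counts.get(main, 0) + 1
    return counts


sample = [(247, 65, 42), (254, 230, 194), (51, 160, 44), (0, 0, 0)]
print(main_category_counts(sample))
```

An exact-match dictionary lookup only works when the map is stored losslessly; a map that has passed through lossy compression would likely need nearest-color matching instead.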

| Reference Land Cover (rows) vs. Classified Land Cover (columns) | Urbanized Area | Agricultural Area | Forest | Grassland | Wetland | Barren Lands | Water | Total | Producer’s Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|
| Urbanized area | 18,658 | 2834 | 19 | 1850 | 41 | 193 | 24 | 23,619 | 79.00 |
| Agricultural area | 4439 | 114,426 | 61 | 5848 | 177 | 1122 | 114 | 126,187 | 90.68 |
| Forest | 83 | 329 | 29,198 | 9619 | 4 | 24 | 3 | 39,261 | 74.37 |
| Grassland | 2148 | 4870 | 1414 | 23,920 | 424 | 853 | 43 | 33,672 | 71.04 |
| Wetland | 138 | 309 | 22 | 3840 | 1595 | 1205 | 352 | 7461 | 21.38 |
| Barren lands | 1369 | 802 | 13 | 879 | 13 | 385 | 9 | 3469 | 11.10 |
| Water | 29 | 22 | 1 | 153 | 688 | 187 | 5055 | 6133 | 82.41 |
| Total | 26,864 | 123,593 | 30,728 | 46,108 | 2943 | 3970 | 5600 | 239,804 | - |
| User’s Accuracy (%) | 69.45 | 92.58 | 95.02 | 51.88 | 54.20 | 9.70 | 90.27 | - | - |
| Overall accuracy | 0.81 |  |  |  |  |  |  |  |  |
| Kappa Value | 0.71 |  |  |  |  |  |  |  |  |
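
The accuracy figures in the table above follow from the confusion matrix counts. The sketch below recomputes them using the standard definitions: producer's accuracy is the diagonal count divided by the reference (row) total, user's accuracy is the diagonal count divided by the classified (column) total, and Cohen's kappa is computed from observed and chance agreement. The function name `accuracy_metrics` is hypothetical and this is not the authors' code. Because the marginals are recomputed from the cell counts, per-class values may differ in the last decimal from the printed ones.

```python
def accuracy_metrics(matrix):
    """Return producer's/user's accuracy (%), overall accuracy, and Cohen's kappa.

    matrix[i][j] is the count with reference class i that was classified as class j.
    """
    n_classes = len(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(row[j] for row in matrix) for j in range(n_classes)]
    total = sum(row_totals)
    diagonal = [matrix[i][i] for i in range(n_classes)]

    producers = [100.0 * d / r if r else float("nan") for d, r in zip(diagonal, row_totals)]
    users = [100.0 * d / c if c else float("nan") for d, c in zip(diagonal, col_totals)]
    overall = sum(diagonal) / total
    # Chance agreement expected from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    kappa = (overall - expected) / (1.0 - expected)
    return producers, users, overall, kappa


# Counts copied from the seven-class table above (rows: reference, columns: classified).
CONFUSION = [
    [18658, 2834, 19, 1850, 41, 193, 24],
    [4439, 114426, 61, 5848, 177, 1122, 114],
    [83, 329, 29198, 9619, 4, 24, 3],
    [2148, 4870, 1414, 23920, 424, 853, 43],
    [138, 309, 22, 3840, 1595, 1205, 352],
    [1369, 802, 13, 879, 13, 385, 9],
    [29, 22, 1, 153, 688, 187, 5055],
]

producers, users, overall, kappa = accuracy_metrics(CONFUSION)
print(f"overall accuracy = {overall:.2f}, kappa = {kappa:.2f}")
```

Run on these counts, the sketch reproduces the overall accuracy of 0.81 and the kappa value of 0.71 reported in the table.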

| Reference Land Cover (rows) vs. Classified Land Cover (columns) | Urbanized Area | Agricultural Area | Forest | Grassland | Wetland | Barren Lands | Water | Total | Producer’s Accuracy (%) |
|---|---|---|---|---|---|---|---|---|---|
| Urbanized area | 44,614 | 4451 | 25 | 4026 | 203 | 334 | 68 | 53,721 | 83.05 |
| Agricultural area | 6759 | 124,749 | 254 | 6677 | 334 | 737 | 402 | 139,912 | 89.16 |
| Forest | 214 | 469 | 14,582 | 18,109 | 1184 | 213 | 198 | 34,970 | 41.70 |
| Grassland | 3951 | 7333 | 775 | 39,064 | 815 | 788 | 344 | 53,069 | 73.61 |
| Wetland | 558 | 2327 | 5 | 3985 | 759 | 790 | 77 | 8501 | 8.92 |
| Barren lands | 3956 | 3483 | 14 | 2702 | 131 | 2280 | 38 | 12,604 | 18.09 |
| Water | 73 | 321 | 2 | 378 | 280 | 249 | 2012 | 3315 | 60.69 |
| Total | 60,125 | 143,132 | 15,657 | 74,941 | 3706 | 5392 | 3139 | 306,093 | - |
| User’s Accuracy (%) | 74.20 | 87.16 | 93.13 | 52.13 | 20.47 | 42.29 | 64.10 | - | - |
| Overall accuracy | 0.75 |  |  |  |  |  |  |  |  |
| Kappa Value | 0.64 |  |  |  |  |  |  |  |  |

| Reference Land Cover (rows) vs. Classified Land Cover (columns) | Paddy | Upland | Green House | Orchard | Other Cultivation Lands | Other Land Cover | Total | Producer’s Accuracy (%) |
|---|---|---|---|---|---|---|---|---|
| Paddy | 56,560 | 7523 | 1073 | 130 | 27 | 2262 | 67,575 | 83.70 |
| Upland | 2340 | 36,944 | 589 | 1743 | 286 | 5129 | 47,031 | 78.55 |
| Green house | 557 | 359 | 9908 | 491 | 1575 | 3828 | 16,718 | 59.27 |
| Orchard | 9 | 158 | 6 | 1356 | 84 | 624 | 2236 | 60.66 |
| Other cultivation lands | 99 | 417 | 261 | 875 | 1378 | 3321 | 6352 | 21.70 |
| Other land cover | 4302 | 6556 | 1398 | 3156 | 2971 | 147,798 | 166,181 | 88.94 |
| Total | 63,868 | 51,956 | 13,236 | 7751 | 6322 | 162,961 | 306,093 | - |
| User’s Accuracy (%) | 88.56 | 71.11 | 74.86 | 17.50 | 21.80 | 90.70 | - | - |
| Overall accuracy | 0.83 |  |  |  |  |  |  |  |
| Kappa Value | 0.73 |  |  |  |  |  |  |  |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Park, J.; Jang, S.; Hong, R.; Suh, K.; Song, I. Development of Land Cover Classification Model Using AI Based FusionNet Network. Remote Sens. 2020, 12, 3171. https://doi.org/10.3390/rs12193171
