Deep Neural Network Cloud-Type Classification (DeepCTC) Model and Its Application in Evaluating PERSIANN-CCS

Abstract

1. Introduction

- (1)

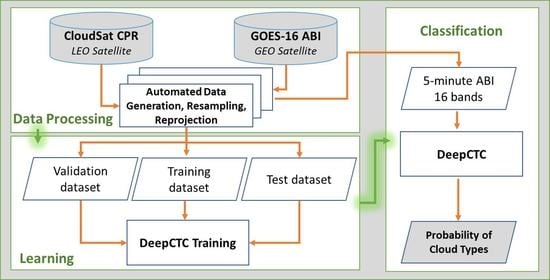

- Introducing an automatic data generation pipeline to meet the critical demand for an accurately labeled cloud-type dataset. The integration of CloudSat space-borne radar satellite data with GOES-16 ABI observations also addresses the spatial and temporal discontinuity in cloud-type information from narrow satellite swaths.

- (2)

- Using the Deep Neural Network Cloud-Type Classification (DeepCTC) system to perform a rapid and global cloud-type classification for the new generation of geostationary satellite observations (e.g., GOES-16 ABI).

- (3)

- Evaluating the performance of PERSIANN-CCS based on DeepCTC to diagnose the weakness and strength of precipitation estimates over different cloud types. This assessment is performed over a period of one month (July 2018) for half-hourly precipitation estimates.
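Item (1) relies on pairing CloudSat cloud-type labels, which exist only along the narrow radar swath, with GOES-16 ABI pixels. The matching rule is not described in this section, so the sketch below assumes a simple brute-force nearest-neighbour match in space and time. The record types (`CloudSatProfile`, `AbiPixel`), the function names and the 2 km / 300 s tolerances are hypothetical choices for illustration, not the paper's documented settings.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_KM = 6371.0


@dataclass
class CloudSatProfile:
    """Hypothetical record for one CloudSat profile along the swath."""
    lat: float
    lon: float
    time_s: float      # seconds since an arbitrary common epoch (assumption)
    cloud_type: int    # CloudSat cloud-type label


@dataclass
class AbiPixel:
    """Hypothetical record for one GOES-16 ABI pixel."""
    row: int
    col: int
    lat: float
    lon: float
    scan_time_s: float  # same epoch as CloudSatProfile.time_s (assumption)


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in km between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def collocate(profiles, pixels, max_dist_km=2.0, max_dt_s=300.0):
    """Pair each profile with the nearest ABI pixel that lies within
    max_dist_km and was scanned within max_dt_s of the profile.
    Profiles with no qualifying pixel are skipped (no label is produced)."""
    samples = []
    for prof in profiles:
        best, best_d = None, max_dist_km
        for px in pixels:
            if abs(px.scan_time_s - prof.time_s) > max_dt_s:
                continue  # outside the assumed time window
            d = haversine_km(prof.lat, prof.lon, px.lat, px.lon)
            if d <= best_d:
                best, best_d = px, d
        if best is not None:
            samples.append(((best.row, best.col), prof.cloud_type))
    return samples


# Toy usage: the first profile matches pixel (0, 1); the second is too far away.
pixels = [AbiPixel(0, 0, 30.00, -100.00, 0.0), AbiPixel(0, 1, 30.00, -99.98, 0.0)]
profiles = [CloudSatProfile(30.00, -99.981, 60.0, 8), CloudSatProfile(45.0, -80.0, 60.0, 1)]
print(collocate(profiles, pixels))
```

A real pipeline would likely replace the double loop with a spatial index over the ABI fixed grid; the brute-force version is kept here only because it makes the matching criterion explicit.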

2. Data Sources

2.1. CloudSat

2.2. Geostationary Satellite Observations

2.3. Precipitation Datasets

3. Methodology

3.1. The Architecture and Configuration of DeepCTC

3.2. Automated Data Generation Pipeline

4. Results and Discussion

4.1. Visualization of Cloud-Type Information over the CONUS

4.2. Assessment of DeepCTC Through Precipitation

4.3. Evaluation of Half-Hourly PERSIANN-CCS According to Cloud-Type Information

5. Conclusions and Future Direction

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A


| Index | Cloud Type | Vertical Dimension | Cloud Base Level | Precipitation | Horizontal Dimension |
|---|---|---|---|---|---|
| 1 | High clouds (Hi) | Moderate | High | None | Continuous |
| 2 | Altostratus (As) | Moderate | Middle | None | Continuous |
| 3 | Altocumulus (Ac) | Shallow/Moderate | Middle | None/Drizzle | Discontinuous |
| 4 | Stratus (St) | Shallow/Moderate | Low | Possible | Continuous |
| 5 | Stratocumulus (Sc) | Shallow | Low | Possible | Discontinuous |
| 6 | Cumulus (Cu) | Moderate/Deep | Low | Possible | Isolated |
| 7 | Nimbostratus (Ns) | Deep | Low/Middle | Yes | Continuous |
| 8 | Deep Convective (DC) | Deep | Low | Yes | Continuous |
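The cloud-type table lists eight types, while the evaluation tables that follow merge St and Sc into a single "St, Sc" class and add a "No Cloud" class. A small lookup like the one below could express that relabeling. It is a sketch only: the convention that index 0 means a cloud-free profile and the names `to_eval_class` and `to_eval_id` are assumptions for illustration.

```python
# Cloud-type index (1-8) to abbreviation, as listed in the cloud-type table.
CLOUD_TYPE_NAMES = {
    1: "Hi", 2: "As", 3: "Ac", 4: "St", 5: "Sc", 6: "Cu", 7: "Ns", 8: "DC",
}

# Class order used by the confusion matrix and the metrics table.
EVAL_CLASSES = ["Hi", "As", "Ac", "St, Sc", "Cu", "Ns", "DC", "No Cloud"]


def to_eval_class(index: int) -> str:
    """Map a cloud-type index to its evaluation class label.
    ASSUMPTION: index 0 denotes a cloud-free profile."""
    if index == 0:
        return "No Cloud"
    name = CLOUD_TYPE_NAMES[index]  # raises KeyError for unknown indices
    return "St, Sc" if name in ("St", "Sc") else name


def to_eval_id(index: int) -> int:
    """Integer class id (0-7) following the column order of the evaluation tables."""
    return EVAL_CLASSES.index(to_eval_class(index))


print([to_eval_class(i) for i in range(9)])
```

Merging two source types into one class means any St/Sc confusion inside the CloudSat labels cannot show up as a classification error, which is worth keeping in mind when reading the "St, Sc" column.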

| ABI Band | Descriptive Name | Central Wavelength (μm) | Primary Purpose |
|---|---|---|---|
| 1 | Blue | 0.47 | Aerosol monitoring |
| 2 | Red | 0.64 | Detection of smoke, dust, fog and low-level clouds |
| 3 | Vegetation | 0.86 | Aerosol and cloud monitoring; land characterization (NDVI) |
| 4 | Cirrus | 1.37 | Daytime detection of thin/high cirrus clouds |
| 5 | Snow/Ice | 1.61 | Snow and ice discrimination; cloud phase detection |
| 6 | Cloud particle size | 2.24 | Cloud particle size and cloud phase discrimination |
| 7 | Shortwave window | 3.9 | Fog, low-level and stratus cloud identification |
| 8 | Upper-level water vapor | 6.19 | Tropospheric water vapor monitoring |
| 9 | Middle-level water vapor | 6.93 | Tropospheric water vapor monitoring |
| 10 | Lower-level water vapor | 7.37 | Tropospheric water vapor monitoring |
| 11 | Cloud top phase | 8.44 | Determining cloud phase |
| 12 | Ozone | 9.61 | Dynamics of the atmosphere near the tropopause |
| 13 | Clean long-wave window | 10.33 | Cloud boundary detection |
| 14 | Long-wave window | 11.21 | Cloud boundary detection |
| 15 | Dirty long-wave window | 12.29 | Distinguishing dust and volcanic ash from clouds |
| 16 | CO2 | 13.28 | Cloud top height |

| Reference (CloudSat) / Predicted (DeepCTC) | Hi | As | Ac | St, Sc | Cu | Ns | DC | No Cloud |
|---|---|---|---|---|---|---|---|---|
| Hi | 1239 | 46 | 33 | 8 | 2 | 1 | 10 | 161 |
| As | 53 | 1317 | 40 | 13 | 9 | 1 | 55 | 9 |
| Ac | 39 | 44 | 1182 | 83 | 22 | 4 | 27 | 99 |
| St, Sc | 51 | 7 | 33 | 968 | 16 | 0 | 2 | 417 |
| Cu | 43 | 44 | 71 | 218 | 998 | 6 | 14 | 112 |
| Ns | 35 | 70 | 38 | 2 | 9 | 1312 | 34 | 0 |
| DC | 23 | 76 | 23 | 13 | 10 | 22 | 1329 | 4 |
| No Cloud | 39 | 2 | 4 | 47 | 0 | 0 | 0 | 1408 |

| Metric | Hi | As | Ac | St, Sc | Cu | Ns | DC | No Cloud |
|---|---|---|---|---|---|---|---|---|
| PPV (precision) | 0.81 | 0.82 | 0.83 | 0.71 | 0.93 | 0.97 | 0.90 | 0.63 |
| TPR (recall) | 0.82 | 0.87 | 0.78 | 0.64 | 0.66 | 0.87 | 0.88 | 0.93 |
| TNR (specificity) | 0.97 | 0.97 | 0.97 | 0.96 | 0.99 | 0.99 | 0.98 | 0.92 |
| NPV (negative predictive value) | 0.97 | 0.98 | 0.96 | 0.95 | 0.95 | 0.98 | 0.98 | 0.99 |
| FPR (false positive rate) | 0.02 | 0.02 | 0.02 | 0.03 | 0.00 | 0.00 | 0.01 | 0.07 |
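The per-class scores in the metrics table follow from the confusion matrix by treating each class in turn as positive and all other classes as negative (one-vs-rest). The sketch below recomputes them from the matrix counts; the function name `one_vs_rest_metrics` is hypothetical. Recomputing in this way suggests the published values were truncated rather than rounded to two decimals (for example, Hi TPR = 1239/1500 = 0.826 appears as 0.82), which would also explain why TNR and FPR for a class (0.97 and 0.02 for Hi) do not sum to exactly 1.

```python
import math

CLASSES = ["Hi", "As", "Ac", "St, Sc", "Cu", "Ns", "DC", "No Cloud"]

# Rows: reference (CloudSat); columns: predicted (DeepCTC); counts from the confusion matrix.
CONFUSION = [
    [1239,   46,   33,    8,    2,    1,   10,  161],
    [  53, 1317,   40,   13,    9,    1,   55,    9],
    [  39,   44, 1182,   83,   22,    4,   27,   99],
    [  51,    7,   33,  968,   16,    0,    2,  417],
    [  43,   44,   71,  218,  998,    6,   14,  112],
    [  35,   70,   38,    2,    9, 1312,   34,    0],
    [  23,   76,   23,   13,   10,   22, 1329,    4],
    [  39,    2,    4,   47,    0,    0,    0, 1408],
]


def one_vs_rest_metrics(cm):
    """Per-class PPV, TPR, TNR, NPV and FPR from a square confusion matrix
    whose rows are reference classes and columns are predicted classes."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # reference k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, reference is another class
        tn = total - tp - fn - fp
        results.append({
            "PPV": tp / (tp + fp),
            "TPR": tp / (tp + fn),
            "TNR": tn / (tn + fp),
            "NPV": tn / (tn + fn),
            "FPR": fp / (fp + tn),
        })
    return results


metrics = one_vs_rest_metrics(CONFUSION)
for name, m in zip(CLASSES, metrics):
    # Truncate (not round) to two decimals for comparison with the table.
    print(name, {k: math.floor(v * 100) / 100 for k, v in m.items()})
```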

| Metric | Hi | As | Ac | St, Sc | Cu | Ns | DC |
|---|---|---|---|---|---|---|---|
| | 0.69 | 1.19 | 0.32 | 0.70 | 0.39 | 0.82 | 1.56 |
| | 2.14 | 2.63 | 1.28 | 1.44 | 2.03 | 3.08 | 5.61 |
| | 0.26 | 0.27 | 0.17 | 0.36 | 0.22 | 0.17 | 0.26 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Afzali Gorooh, V.; Kalia, S.; Nguyen, P.; Hsu, K.-l.; Sorooshian, S.; Ganguly, S.; Nemani, R.R. Deep Neural Network Cloud-Type Classification (DeepCTC) Model and Its Application in Evaluating PERSIANN-CCS. Remote Sens. 2020, 12, 316. https://doi.org/10.3390/rs12020316
