2.3. Imaging Approach

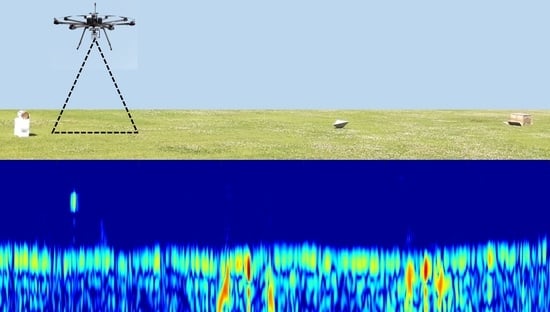

The block diagram of the applied data processing strategy is shown in Figure 3. The strategy takes as input the raw radargram, which represents the radar signals collected at each measurement position (during the slow time and along the flight path) versus the fast time. The final output is a focused and easily interpretable image, referred to as the tomographic image, which reconstructs the targets in the vertical slice defined by the flight trajectory (along-track direction), assumed to be a straight line, and the nadir direction.

Initially, the raw data are processed by the background removal step [34]. Background removal is a filtering procedure adopted here to mitigate the undesired signal due to the electromagnetic coupling between the transmitting and receiving radar antennas. Since this undesired signal is typically spatially and temporally invariant, the background removal step replaces each radar trace of the radargram with the difference between the trace and the mean of all the traces collected along the flight trajectory.
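The mean-trace subtraction described above can be sketched in a few lines. This is a minimal illustration, assuming the radargram is stored as a 2-D array with fast time along the rows and traces along the columns; the function name `remove_background` is hypothetical.

```python
import numpy as np

def remove_background(radargram):
    """Subtract the mean trace (averaged along the flight path) from every trace.

    radargram: 2-D array of shape (n_fast_time_samples, n_traces) (assumed layout).
    """
    radargram = np.asarray(radargram, dtype=float)
    # Average over all traces: one value per fast-time sample.
    mean_trace = radargram.mean(axis=1, keepdims=True)
    return radargram - mean_trace
```

A contribution that is identical in every trace, such as the antenna coupling, is cancelled exactly, while a localized target response is only slightly attenuated.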

Next, the motion compensation (MoCo) stage is performed. The MoCo is a key element of the proposed signal processing strategy, and its main steps are depicted in Figure 3. The MoCo takes as input the UAV positions estimated by GPS or CDGPS (the “estimated” trajectory), generates a straight flight trajectory (i.e., the along-track direction), and modifies the radar signals by means of the range alignment and along-track interpolation procedures.

The range alignment compensates for the altitude variations that occurred during the flight by realigning each radar signal, along the nadir direction, with respect to a constant flight altitude. The latter is obtained by averaging the UAV altitudes estimated by GPS or CDGPS, and it is taken as the altitude of the radar system in the following processing steps.
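One possible way to realize the range alignment is a per-trace shift in fast time. The sketch below assumes free-space propagation between the radar and the ground, so that an altitude offset Δh maps to a two-way delay 2Δh/c, and it uses linear interpolation for the sub-sample shift; the function name `range_align` and the array layout are illustrative assumptions, not the implementation used in this work.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in free space [m/s]

def range_align(radargram, altitudes, dt):
    """Realign every trace to the mean flight altitude.

    radargram: (n_samples, n_traces) array, fast time along rows (assumed layout).
    altitudes: (n_traces,) UAV altitudes estimated by GPS/CDGPS [m].
    dt:        fast-time sampling step [s].
    Returns the aligned radargram and the reference (mean) altitude.
    """
    radargram = np.asarray(radargram, dtype=float)
    altitudes = np.asarray(altitudes, dtype=float)
    n_samples, n_traces = radargram.shape
    t = np.arange(n_samples) * dt
    h_ref = altitudes.mean()
    aligned = np.empty_like(radargram)
    for m in range(n_traces):
        # A trace taken above h_ref sees every echo later by this two-way delay.
        delay = 2.0 * (altitudes[m] - h_ref) / C0
        # Read the trace at t + delay, so echoes move back to where they would
        # appear at altitude h_ref; samples outside the record are set to zero.
        aligned[:, m] = np.interp(t + delay, t, radargram[:, m], left=0.0, right=0.0)
    return aligned, h_ref
```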

The along-track interpolation accounts for the deviations in the north–east plane between the estimated flight trajectory and a straight one. In detail, a straight trajectory approximating the GPS- or CDGPS-estimated UAV flight trajectory in the north–east plane is computed by means of a fitting procedure. This straight trajectory is taken as the along-track direction and is considered as the measurement line in the following processing steps. Once the along-track direction is computed, the range-aligned radar signals are interpolated and resampled in order to obtain evenly spaced radar data along the along-track direction. Attitude variations are not considered in the MoCo. Indeed, the limited distance between the radar antennas and the UAV center of mass, together with the wide antenna radiation pattern, implies that UAV attitude variations have a negligible effect on the data accuracy in terms of two-way travel time.
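The two operations above (line fitting and resampling) can be illustrated as follows. The fitting procedure is not specified in the text; this sketch assumes a total-least-squares fit obtained from the principal direction of the north–east positions, followed by linear interpolation on a uniform grid. The names `fit_track` and `resample_along_track` and the `spacing` parameter are hypothetical.

```python
import numpy as np

def fit_track(east, north):
    """Fit a straight line to the north-east positions (assumed: total least squares).

    Returns the along-track coordinate of each measurement point (its projection
    on the fitted line), the line centroid and the unit direction vector.
    """
    pts = np.column_stack([east, north]).astype(float)
    centroid = pts.mean(axis=0)
    # The first right singular vector is the direction of largest spread.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    direction = vt[0]
    s = (pts - centroid) @ direction
    if s[-1] < s[0]:  # orient the axis along the flight direction
        s, direction = -s, -direction
    return s, centroid, direction

def resample_along_track(radargram, s, spacing):
    """Interpolate the traces on an evenly spaced along-track grid.

    radargram: (n_samples, n_traces) array; s: (n_traces,) along-track coordinates.
    """
    order = np.argsort(s)  # np.interp needs increasing abscissas
    s_sorted = s[order]
    data = np.asarray(radargram)[:, order]
    s_uniform = np.arange(s_sorted[0], s_sorted[-1] + 1e-12, spacing)
    # Interpolate each fast-time sample (row) across the traces.
    out = np.vstack([np.interp(s_uniform, s_sorted, row) for row in data])
    return out, s_uniform
```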

Figure 4a shows a schematic representation of the MoCo. As indicated in Figure 4a,b, the flight trajectory Γ originally has an arbitrary shape, and the measurement points form an unevenly spaced vector of positions along it. By applying the MoCo, the actual flight trajectory (and, accordingly, the collected data) is first modified by the range alignment operation, as in Figure 4c; then, by performing the along-track interpolation, the measurement points become evenly spaced, as shown in Figure 4d.

Note that the imaging plane, i.e., the plane in which the targets are supposed to be located, is the vertical plane defined by the along-track and the nadir directions, from now on indicated as the $x$ and $z$ coordinates, respectively.

After the MoCo, the radar data pre-processing step is performed (see Figure 3). At this step, time-domain radar pre-processing procedures such as dewow and time gating are carried out. The dewow step aims at mitigating the bias effect induced by the internal electronic radar components by removing the average value of each radar trace [34]. The time gating procedure selects the interval (along the fast time) of the radargram where the signals scattered from the targets of interest occur. This allows a reduction of environmental clutter and noise effects [35]. Herein, we define a suitable time window around the time at which the reflection from the air-soil interface occurs.
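The two pre-processing operations can be sketched as below. The dewow follows the description given here (removal of the mean of each trace). For the time gating, the sketch assumes that fast time starts at the transmission instant and that the air-soil reflection arrives at 2h/c for a flight altitude h; the window extents `t_before` and `t_after` are hypothetical parameters, since the actual window is not specified.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in free space [m/s]

def dewow(radargram):
    """Remove the average value of each trace (traces are the columns)."""
    radargram = np.asarray(radargram, dtype=float)
    return radargram - radargram.mean(axis=0, keepdims=True)

def time_gate(radargram, dt, altitude, t_before, t_after):
    """Keep only the fast-time window around the air-soil reflection.

    altitude: constant flight altitude after range alignment [m].
    t_before, t_after: window extent before/after the surface echo [s] (assumed).
    Returns the gated radargram and the retained fast-time axis.
    """
    radargram = np.asarray(radargram)
    t = np.arange(radargram.shape[0]) * dt
    t_surface = 2.0 * altitude / C0  # two-way travel time to the ground
    keep = (t >= t_surface - t_before) & (t <= t_surface + t_after)
    return radargram[keep, :], t[keep]
```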

The last processing stage is the focusing. In this stage, a focused image of the scene under test, as it appears in the vertical imaging domain, is obtained by solving an inverse scattering problem formulated in the frequency domain. Each trace of the radargram is transformed into the frequency domain by means of the Discrete Fourier Transform (DFT), so as to provide the input data to the inversion approach. The latter treats the imaging as an inverse scattering problem and adopts an electromagnetic scattering model based on the following assumptions:

- The antennas have a broad radiation pattern;
- The targets are in the far-field region with respect to the radar antennas;
- A linear model of the scattering phenomenon is assumed, hence the mutual interactions between the targets can be neglected (the Born approximation [36]);
- The time dependence $e^{j\omega t}$ is assumed and, for notational simplicity, it is dropped.

Accordingly, at each angular frequency $\omega$ belonging to $\Omega = [\omega_{\min}, \omega_{\max}]$, which is the angular frequency range of the collected signals, the backscattered signal at each measurement point $\mathbf{r}_m \in \Gamma$ is expressed by the following formula [37]:

$$E_s(\mathbf{r}_m, \omega) = S(\omega) \int_D e^{-j 2 k_0 R(\mathbf{r}_m, \mathbf{r})} \, \chi(\mathbf{r}) \, d\mathbf{r} \qquad (1)$$

Equation (1) is a linear integral equation, where $S(\omega)$ is the spectrum of the transmitted pulse, $E_s$ is the measured scattered field, $\chi$ is the unknown contrast function at a point $\mathbf{r}$ in the imaging domain $D$, $k_0 = \omega / c_0$ is the propagation constant in free space ($c_0$ is the speed of light), and $R(\mathbf{r}_m, \mathbf{r}) = |\mathbf{r}_m - \mathbf{r}|$ is the distance between the measurement point $\mathbf{r}_m$ and the generic point $\mathbf{r}$ in $D$. The contrast function accounts for the relative difference between the electromagnetic properties (dielectric permittivity, electrical conductivity) of the targets and those of free space. The spectrum $S(\omega)$ is assumed unitary within the frequency range $\Omega$ and is omitted for notational simplicity. The kernel of Equation (1) depends on the distance between $\mathbf{r}_m$ and $\mathbf{r}$; hence, an accurate knowledge of this relative distance is needed to achieve satisfactory imaging capabilities.
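The frequency-domain data entering the model can be obtained trace by trace with a real-input FFT. The sketch below assumes an $e^{j\omega t}$ time convention, which is the one implied by NumPy's forward transform, so that an echo delayed by τ acquires the phase $e^{-j\omega\tau}$; the function name `to_frequency_domain` and the band limits `f_min`, `f_max` are illustrative assumptions.

```python
import numpy as np

def to_frequency_domain(radargram, dt, f_min, f_max):
    """DFT of every trace, restricted to the working frequency band.

    radargram: (n_samples, n_traces) real array, fast time along rows.
    dt:        fast-time sampling step [s].
    Returns the spectra of shape (n_freqs_in_band, n_traces) and the frequencies [Hz].
    """
    radargram = np.asarray(radargram, dtype=float)
    freqs = np.fft.rfftfreq(radargram.shape[0], d=dt)
    spectra = np.fft.rfft(radargram, axis=0)  # forward DFT along fast time
    band = (freqs >= f_min) & (freqs <= f_max)
    return spectra[band, :], freqs[band]
```

If the fast-time axis does not start at zero (for instance after time gating), the corresponding linear phase term has to be restored before the data are compared with a propagation model.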

The discretized formulation of the imaging problem described in Equation (1) is obtained by exploiting the Method of Moments [38]:

$$\mathbf{E}_s = \mathbf{L} \boldsymbol{\chi} \qquad (2)$$

where $\mathbf{E}_s$ is the $K = M \times N$ dimensional data vector, $M$ being the total number of radar scans and $N$ the number of operating angular frequencies $\omega_n$, $n = 1, \ldots, N$. The domain $D$ is discretized into $P$ pixels, $\boldsymbol{\chi}$ is the $P$ dimensional unknown vector, and $\mathbf{L}$ is the $K \times P$ scattering matrix related to the linear operator that maps the space of the unknown vector $\boldsymbol{\chi}$ into the space of the data (measured scattered field) $\mathbf{E}_s$.
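As an illustration of the discretization, the scattering matrix can be assembled by sampling a two-way free-space phase kernel at every (measurement point, frequency, pixel) triple. The sketch below assumes the kernel exp(−j2k₀R) with unit amplitude, measurement points on a straight line at z = 0, and pixels at positive distance z below that line; the pixel-area factor is omitted, and the function name and row ordering are hypothetical choices.

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in free space [m/s]

def scattering_matrix(x_meas, freqs, x_pix, z_pix):
    """Assemble the (M*N) x P matrix of a linear (Born) scattering model.

    x_meas: (M,) along-track positions of the measurement points [m].
    freqs:  (N,) working frequencies [Hz].
    x_pix, z_pix: pixel coordinates along track and along nadir [m];
                  z is measured downward from the measurement line (assumed).
    Row index = m * N + n (measurement-major); column index = ix * len(z_pix) + iz.
    """
    x_meas = np.asarray(x_meas, dtype=float)
    k0 = 2.0 * np.pi * np.asarray(freqs, dtype=float) / C0
    xx, zz = np.meshgrid(x_pix, z_pix, indexing="ij")
    px, pz = xx.ravel(), zz.ravel()
    # Distance between every measurement point and every pixel: shape (M, P).
    R = np.hypot(x_meas[:, None] - px[None, :], pz[None, :])
    # Two-way phase for every frequency: shape (M, N, P).
    L = np.exp(-2j * k0[None, :, None] * R[:, None, :])
    return L.reshape(x_meas.size * k0.size, px.size)
```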

The inverse problem defined by Equation (2) is ill posed; thus, a regularization scheme is needed in order to obtain a solution that is stable and robust with respect to noise on the data. Here, the Truncated Singular Value Decomposition (TSVD) scheme is adopted [39]:

$$\tilde{\boldsymbol{\chi}} = \sum_{i=1}^{H} \frac{\langle \mathbf{E}_s, \mathbf{u}_i \rangle}{\sigma_i} \mathbf{v}_i \qquad (3)$$

where $\langle \cdot, \cdot \rangle$ denotes the scalar product in the data space, $H$ is the truncation threshold, $\{\sigma_i\}$ is the set of singular values of the matrix $\mathbf{L}$ sorted in decreasing order, and $\{\mathbf{u}_i\}$ and $\{\mathbf{v}_i\}$ are the sets of the left and right singular vectors. The threshold $H$ defines the “degree of regularization” of the solution and is chosen as a trade-off between accuracy and resolution requirements on one side (which push to increase $H$) and solution stability on the other side (which pushes to limit $H$) [40]. The radar image is then obtained by evaluating the contrast function according to Equation (3).
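A truncated-SVD inversion of a linear system can be written compactly with NumPy. The sketch below is a generic illustration of the technique, not the code used in this work; the function name `tsvd_invert` and the argument `n_keep` (the number of retained singular values) are hypothetical.

```python
import numpy as np

def tsvd_invert(L, data, n_keep):
    """Regularized solution of L @ chi = data by truncated SVD.

    L:      (K, P) complex scattering matrix.
    data:   (K,) complex data vector.
    n_keep: number of singular values retained (truncation threshold).
    """
    # numpy returns the singular values already sorted in decreasing order.
    U, s, Vh = np.linalg.svd(L, full_matrices=False)
    H = min(n_keep, s.size)
    # Projections of the data on the first H left singular vectors,
    # each divided by the corresponding singular value.
    coeffs = (U[:, :H].conj().T @ data) / s[:H]
    # Combine the first H right singular vectors.
    return Vh[:H].conj().T @ coeffs
```

Retaining all the singular values gives the least-squares solution, which is unstable for an ill-conditioned matrix; lowering `n_keep` discards the components that amplify noise the most, at the price of resolution.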

Since the TSVD inversion algorithm is computationally intensive when large (in terms of the probing wavelength) domains are investigated, the Shift and Zoom concept [24] has been implemented in order to reduce the computational time. The Shift and Zoom approach consists of processing the data on partially overlapping intervals and combining the resulting images so as to obtain an overall focused image. It is schematically represented in Figure 5, and its main steps are as follows:

1. The measurement acquisition line and the survey area are divided into $V$ partially overlapping subdomains $\Gamma_v$ and $D_v$, with $v = 1, \ldots, V$;
2. For each subdomain $D_v$, the tomographic reconstruction is obtained by the TSVD inversion scheme indicated in Equation (3);
3. The tomographic image of the overall surveyed area is obtained by combining the reconstructions achieved for each subdomain $D_v$.

A detailed description of the Shift and Zoom implementation is in [27].

Thanks to the MoCo, the relative distances between the measurement points and the pixels of each subdomain are the same for all subdomains. Hence, the SVD of the scattering matrix $\mathbf{L}$ has to be computed only once, for the first subdomain, and the inversion for each subdomain mainly involves matrix-vector multiplications with the data vector. In this way, the computational time of the overall reconstruction process decreases drastically. In fact, the computational cost of the SVD of a dense matrix of size $K \times P$ grows as $O(K P \min(K, P))$. Conversely, with the Shift and Zoom approach and the MoCo procedure, the SVD is computed for a matrix of size $(K/\alpha) \times (P/\beta)$, where $\alpha$ and $\beta$ are the scaling factors related to the reduced size of the measurement line $\Gamma_v$ and of the subdomain $D_v$, respectively. Therefore, a substantial reduction of the computational cost of the TSVD inversion scheme is obtained.
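The reuse of a single SVD across subdomains can be illustrated with the following sketch. It assumes evenly spaced measurement points (as produced by the MoCo), a kernel exp(−j2k₀R), pixels placed below the measurement points of each window, and a plain average of the overlapping reconstructions; the combination rule, the function names and all parameters (`win`, `hop`, `n_keep`) are assumptions made for illustration, and the actual implementation is the one described in [27].

```python
import numpy as np

C0 = 299_792_458.0  # speed of light in free space [m/s]

def tsvd_operator(L, n_keep):
    """Truncated-SVD inverse of L as an explicit (P, K) matrix."""
    U, s, Vh = np.linalg.svd(L, full_matrices=False)
    H = min(n_keep, s.size)
    return (Vh[:H].conj().T / s[:H]) @ U[:, :H].conj().T

def shift_and_zoom(spectra, freqs, dx, z_pix, win, hop, n_keep):
    """Image a long track by inverting overlapping windows with one shared SVD.

    spectra: (N, M) complex data, frequency x measurement position (spacing dx).
    z_pix:   pixel distances below the measurement line [m].
    win/hop: window length and shift, in measurement points.
    Returns an (M, len(z_pix)) complex image; trailing positions not covered
    by any window are left at zero.
    """
    n_freq, n_meas = spectra.shape
    n_z = len(z_pix)
    # Local geometry: identical for every window because the spacing is uniform.
    x_local = (np.arange(win) - (win - 1) / 2.0) * dx
    xx, zz = np.meshgrid(x_local, z_pix, indexing="ij")
    R = np.hypot(x_local[:, None] - xx.ravel()[None, :], zz.ravel()[None, :])
    k0 = 2.0 * np.pi * np.asarray(freqs, dtype=float) / C0
    L = np.exp(-2j * k0[None, :, None] * R[:, None, :]).reshape(win * n_freq, -1)
    inv_op = tsvd_operator(L, n_keep)  # the only SVD of the whole procedure

    image = np.zeros((n_meas, n_z), dtype=complex)
    weight = np.zeros((n_meas, 1))
    for start in range(0, n_meas - win + 1, hop):
        # Data of this window, ordered measurement-major like the rows of L.
        d = spectra[:, start:start + win].T.reshape(-1)
        chi = (inv_op @ d).reshape(win, n_z)  # one matrix-vector product
        image[start:start + win] += chi
        weight[start:start + win] += 1.0
    return image / np.maximum(weight, 1.0)  # average where windows overlap
```

Each window costs one matrix-vector product, so the processing time grows linearly with the number of windows once the shared operator is available.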