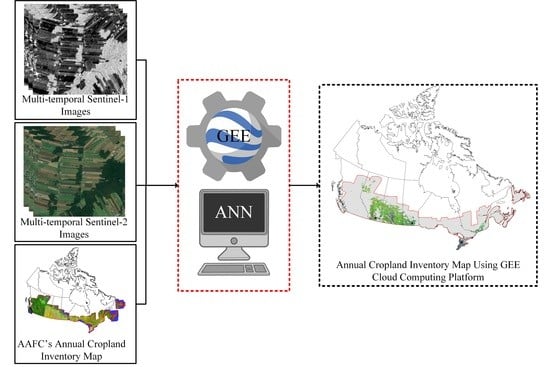

Application of Google Earth Engine Cloud Computing Platform, Sentinel Imagery, and Neural Networks for Crop Mapping in Canada

Abstract

1. Introduction

2. Study Area and Datasets

2.1. Study Area

2.2. Field Data

2.3. Satellite Data

3. Methodology

3.1. Satellite Data Pre-Processing

3.2. Feature Extraction

3.3. Segmentation

3.4. Classification

3.5. Accuracy Assessment

4. Results

5. Discussion

5.1. Field Data

5.2. Spectral Similarity of Croplands

5.3. Discrimination of Cropland and Non-Cropland Areas

5.4. Including More Satellite Data

5.5. Canada-Wide Cropland Inventory Map

5.6. ACI Maps at Different Years and Change Analysis

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Class | NL | PE | NS | NB | QC | ON | BC | Sum |
|---|---|---|---|---|---|---|---|---|
| Barley/Oats/Spring Wheat | 11 | 424 | 167 | 711 | 32,772 | 1210 | 79 | 35,374 |
| Rye | 0 | 16 | 21 | 6 | 829 | 285 | 4 | 1161 |
| Winter Wheat | 1 | 38 | 128 | 3 | 2587 | 2269 | 17 | 5043 |
| Corn | 28 | 143 | 852 | 507 | 61,562 | 11,065 | 574 | 74,731 |
| Tobacco | 0 | 0 | 0 | 0 | 0 | 221 | 0 | 221 |
| Ginseng | 0 | 0 | 0 | 0 | 0 | 190 | 3 | 193 |
| Canola/Rapeseed | 0 | 22 | 4 | 48 | 1789 | 263 | 6 | 2132 |
| Soybeans | 0 | 247 | 316 | 262 | 54,586 | 12,099 | 15 | 67,525 |
| Peas | 5 | 58 | 0 | 48 | 593 | 58 | 8 | 770 |
| Beans | 0 | 0 | 0 | 1 | 1044 | 239 | 7 | 1291 |
| Tomatoes | 0 | 0 | 0 | 1 | 39 | 110 | 2 | 152 |
| Potatoes | 34 | 532 | 39 | 1008 | 1802 | 231 | 98 | 3744 |
| Blueberry | 4 | 66 | 265 | 155 | 486 | 7 | 516 | 1499 |
| Cranberry | 12 | 4 | 13 | 28 | 484 | 0 | 93 | 634 |
| Orchards | 0 | 4 | 186 | 55 | 246 | 462 | 728 | 1681 |
| Vineyards | 0 | 4 | 63 | 9 | 19 | 346 | 361 | 802 |
| Buckwheat | 0 | 12 | 1 | 1 | 658 | 9 | 0 | 681 |
| Sum | 91 | 1570 | 2055 | 2848 | 159,496 | 29,064 | 2511 | 197,634 |

NL: Newfoundland and Labrador, PE: Prince Edward Island, NB: New Brunswick, NS: Nova Scotia, QC: Quebec, ON: Ontario, BC: British Columbia.

| Months | Sentinel-1 Features | Sentinel-1 # Images | Sentinel-2 Features | Sentinel-2 # Images |
|---|---|---|---|---|
| January–February | VV-1, VH-1 | 537 | NDVI-1, NDWI-1 | 9552 |
| March–April | VV-2, VH-2 | 545 | | |
| May–June | VV-3, VH-3 | 620 | NDVI-2, NDWI-2 | 14,532 |
| July–August | VV-4, VH-4 | 705 | | |
| September–October | VV-5, VH-5 | 660 | NDVI-3, NDWI-3 | 11,861 |
| November–December | VV-6, VH-6 | 761 | | |
| Total | 6 VV + 6 VH | 3828 | 3 NDVI + 3 NDWI | 35,945 |

| ID | Class | Area (hectares) |
|---|---|---|
| 1 | Barley/Oats/Spring Wheat | 34,773,308 |
| 2 | Rye | 3,859,182 |
| 3 | Winter Wheat | 4,607,312 |
| 4 | Corn | 2,007,945 |
| 5 | Tobacco | 124,157 |
| 6 | Ginseng | 129,507 |
| 7 | Canola/Rapeseed | 12,561,075 |
| 8 | Soybeans | 2,132,346 |
| 9 | Peas | 4,887,516 |
| 10 | Beans | 1,841,635 |
| 11 | Tomatoes | 118,822 |
| 12 | Potatoes | 4,053,364 |
| 13 | Blueberry | 4,932,649 |
| 14 | Cranberry | 1,028,732 |
| 15 | Orchards | 1,583,179 |
| 16 | Vineyards | 425,621 |
| 17 | Buckwheat | 2,091,076 |
| - | Total | 81,157,426 |

| Classified Data (ID) ↓ / Reference Data (ID) → | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | Total |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 2230420 | 75114 | 69312 | 41847 | 324 | 427 | 12583 | 20015 | 31924 | 16198 | 261 | 13174 | 6758 | 1004 | 5279 | 1613 | 44910 | 2571163 |
| 2 | 154119 | 422211 | 65392 | 13932 | 2830 | 3382 | 1807 | 7130 | 10396 | 6057 | 222 | 5067 | 3769 | 266 | 5334 | 2229 | 2336 | 706479 |
| 3 | 179766 | 63932 | 672192 | 16167 | 168 | 537 | 958 | 9048 | 8590 | 5072 | 276 | 1527 | 3565 | 508 | 7783 | 3805 | 3651 | 977545 |
| 4 | 48904 | 11934 | 5394 | 428800 | 840 | 702 | 1131 | 36133 | 21025 | 14373 | 1340 | 8291 | 1409 | 201 | 3737 | 3146 | 18614 | 605974 |
| 5 | 111 | 3230 | 214 | 1290 | 138560 | 4295 | 26 | 3167 | 505 | 4206 | 578 | 1378 | 92 | 7 | 456 | 505 | 9 | 158629 |
| 6 | 198 | 3518 | 925 | 1021 | 5108 | 69627 | 7 | 2732 | 186 | 2973 | 80 | 901 | 211 | 54 | 769 | 427 | 1 | 88738 |
| 7 | 24834 | 3583 | 389 | 2955 | 90 | 4 | 405153 | 3560 | 7219 | 2350 | 452 | 9876 | 478 | 20 | 309 | 324 | 15018 | 476614 |
| 8 | 16752 | 5932 | 2758 | 37286 | 4866 | 2061 | 2966 | 417017 | 13700 | 82097 | 4027 | 38583 | 1217 | 342 | 3526 | 2155 | 9161 | 644446 |
| 9 | 55878 | 8840 | 3878 | 28620 | 544 | 335 | 8286 | 14923 | 558750 | 36085 | 116 | 9680 | 1952 | 50 | 769 | 162 | 6531 | 735399 |
| 10 | 37563 | 8436 | 5856 | 21258 | 4020 | 1279 | 4823 | 77461 | 42492 | 812496 | 261 | 23708 | 810 | 165 | 2367 | 703 | 10640 | 1054338 |
| 11 | 582 | 1240 | 249 | 5986 | 3189 | 1041 | 40 | 6341 | 772 | 1427 | 58848 | 1203 | 17 | 1 | 286 | 137 | 356 | 81715 |
| 12 | 26778 | 7867 | 902 | 9490 | 4449 | 811 | 8587 | 49022 | 10973 | 39950 | 1905 | 423930 | 1355 | 81 | 958 | 630 | 19407 | 607095 |
| 13 | 11913 | 1982 | 415 | 1069 | 56 | 146 | 343 | 651 | 686 | 811 | 2 | 302 | 264369 | 2301 | 5829 | 1614 | 1242 | 293731 |
| 14 | 896 | 602 | 532 | 12 | 0 | 36 | 0 | 7 | 301 | 132 | 0 | 21 | 4474 | 146766 | 239 | 558 | 31 | 154607 |
| 15 | 7169 | 2818 | 4520 | 2306 | 336 | 307 | 15 | 2936 | 581 | 2621 | 140 | 358 | 5521 | 545 | 222276 | 21522 | 247 | 274218 |
| 16 | 1806 | 1431 | 4346 | 1428 | 56 | 525 | 3 | 1592 | 86 | 605 | 47 | 43 | 4148 | 243 | 24791 | 138215 | 146 | 179511 |
| 17 | 65946 | 5049 | 2627 | 18185 | 0 | 16 | 10297 | 6909 | 6087 | 5852 | 72 | 10213 | 2509 | 171 | 300 | 297 | 384835 | 519365 |
| Total | 2863635 | 627719 | 839901 | 631652 | 165436 | 85531 | 457025 | 658644 | 714273 | 1033305 | 68627 | 548255 | 302654 | 152725 | 285008 | 178042 | 517135 | 10129567 |
| PA (%) | 77.89 | 67.26 | 80.03 | 67.89 | 83.75 | 81.41 | 88.65 | 63.31 | 78.23 | 78.63 | 85.75 | 77.32 | 87.35 | 96.10 | 77.99 | 77.63 | 74.42 | OA = 76.95 % |
| UA (%) | 86.75 | 59.76 | 68.76 | 70.76 | 87.35 | 78.46 | 85.01 | 64.71 | 75.98 | 77.06 | 72.02 | 69.83 | 90.00 | 94.93 | 81.06 | 77.00 | 74.10 | |
| OE (%) | 22.11 | 32.74 | 19.97 | 32.11 | 16.25 | 18.59 | 11.35 | 36.69 | 21.77 | 21.37 | 14.25 | 22.68 | 12.65 | 3.90 | 22.01 | 22.37 | 25.58 | KC = 0.738 |
| CE (%) | 13.25 | 40.24 | 31.24 | 29.24 | 12.65 | 21.54 | 14.99 | 35.29 | 24.02 | 22.94 | 27.98 | 30.17 | 10.00 | 5.07 | 18.94 | 23.00 | 25.90 | |

OA: Overall Accuracy, KC: Kappa Coefficient, PA: Producer Accuracy, UA: User Accuracy, CE: Commission Error (100 − UA), OE: Omission Error (100 − PA). Class IDs: 1: Barley/Oats/Spring Wheat, 2: Rye, 3: Winter Wheat, 4: Corn, 5: Tobacco, 6: Ginseng, 7: Canola/Rapeseed, 8: Soybeans, 9: Peas, 10: Beans, 11: Tomatoes, 12: Potatoes, 13: Blueberry, 14: Cranberry, 15: Orchards, 16: Vineyards, 17: Buckwheat.
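
The accuracy figures in the confusion matrix above follow from its row and column totals. The sketch below is a minimal illustration in plain Python, not the authors' implementation: it assumes rows hold classified labels and columns hold reference labels (as in the table), and it uses the standard definitions of OA, PA, UA, and Cohen's kappa. The function name `accuracy_metrics` and the 3-class matrix `toy` are hypothetical; the class 1 and diagonal values in the spot check are copied from the table.

```python
from typing import Dict, List, Sequence


def accuracy_metrics(cm: Sequence[Sequence[float]]) -> Dict[str, object]:
    """Compute OA, kappa, and per-class PA/UA from a square confusion matrix.

    Assumption: rows are classified (map) labels, columns are reference labels.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    row_totals = [sum(cm[i]) for i in range(n)]  # classified totals
    col_totals = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # reference totals
    diagonal = [cm[i][i] for i in range(n)]  # correctly classified samples

    oa = sum(diagonal) / total
    pa: List[float] = [diagonal[j] / col_totals[j] for j in range(n)]
    ua: List[float] = [diagonal[i] / row_totals[i] for i in range(n)]
    # Chance agreement expected from the marginal totals (Cohen's kappa).
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    kappa = (oa - expected) / (1 - expected)
    return {"OA": oa, "KC": kappa, "PA": pa, "UA": ua}


# Hypothetical 3-class matrix, used only to exercise the function.
toy = [[50, 5, 5], [10, 40, 10], [0, 5, 75]]
metrics = accuracy_metrics(toy)
print({k: metrics[k] for k in ("OA", "KC")})

# Spot check against the table: class 1 (Barley/Oats/Spring Wheat).
pa_class1 = 100 * 2230420 / 2863635  # diagonal / reference (column) total
ua_class1 = 100 * 2230420 / 2571163  # diagonal / classified (row) total

# Overall accuracy from the 17 diagonal entries and the grand total.
table_diagonal = [
    2230420, 422211, 672192, 428800, 138560, 69627, 405153, 417017, 558750,
    812496, 58848, 423930, 264369, 146766, 222276, 138215, 384835,
]
oa_table = 100 * sum(table_diagonal) / 10129567
print(round(pa_class1, 2), round(ua_class1, 2), round(oa_table, 2))
```

Under these definitions the class 1 values and the overall accuracy agree with the reported 77.89%, 86.75%, and 76.95% to within rounding. Reproducing KC = 0.738 would require passing the full 17 × 17 matrix to the function.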

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Amani, M.; Kakooei, M.; Moghimi, A.; Ghorbanian, A.; Ranjgar, B.; Mahdavi, S.; Davidson, A.; Fisette, T.; Rollin, P.; Brisco, B.; et al. Application of Google Earth Engine Cloud Computing Platform, Sentinel Imagery, and Neural Networks for Crop Mapping in Canada. Remote Sens. 2020, 12, 3561. https://doi.org/10.3390/rs12213561
