Quality Assessment of Photogrammetric Methods—A Workflow for Reproducible UAS Orthomosaics

Abstract

1. Introduction

2. Materials and Methods

2.1. Multi-Temporal Flights

2.2. Image Georeferencing

2.3. Photogrammetric Processing

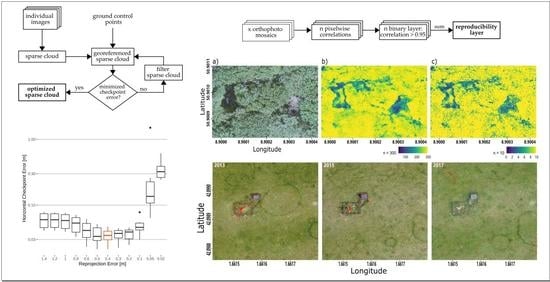

2.4. Optimizing the Georeferencing

2.5. Orthomosaic Reproducibility

2.6. Assessing the Orthorectification Surface

2.7. Time Series Accuracy

3. Results

3.1. Optimized Georeferencing Accuracy

3.2. Orthomosaic Reproducibility

3.3. Comparison of Mesh and DSM Surface-Based Orthomosaics

3.4. Forest Time Series Reproducibility

3.5. Grassland Time Series Reproducibility

3.6. Time Series Positional Accuracy

4. Discussion

4.1. Optimized Georeferencing Accuracy

4.2. Pixel-Wise Reproducibility of Orthomosaics

4.3. Time Series

4.4. Improved UAS Workflow

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Camera Model | Sony NEX-5N | Sony NEX-7 | Sony ILCE-7RM2 | GoPro Hero 7 |
|---|---|---|---|---|
| Image Width | 4912 pix | 6000 pix | 7952 pix | 4000 pix |
| Image Height | 3264 pix | 4000 pix | 5304 pix | 3000 pix |
| Sensor Width | 23.5 mm | 23.5 mm | 35.9 mm | 6.17 mm |
| Sensor Height | 15.6 mm | 15.6 mm | 24 mm | 4.63 mm |
| Focal Length | 16 mm | 18 mm | 15 mm | 17 mm |
| Resolution | 16.7 megapixels | 24.3 megapixels | 43.6 megapixels | 12 megapixels |
| ISO | 100–125 | 400 | 1000–1600 | 400 |
| Shutter | 1/640 | 1/1000 | 1/1000 | Auto |

| Mission | Date and Time | Sun Angle (°) | Conditions | Camera | Area (ha) | GSD (cm/px) | Alt. (m) | Overlap, F/S (%) | Images |
|---|---|---|---|---|---|---|---|---|---|
| Forest 01 | 2020/07/07 11:20 | 38.1 | cloud free | GoPro Hero 7 | 7 | 2.58 | 50 | >90/75 | 630 |
| Forest 02 | 2020/07/07 11:42 | 44.33 | cloud free | GoPro Hero 7 | 7 | 2.58 | 50 | >90/75 | 630 |
| Forest 03 | 2020/07/07 12:11 | 50.33 | partially cloudy | GoPro Hero 7 | 7 | 2.58 | 50 | >90/75 | 630 |
| Forest 04 | 2020/07/07 12:40 | 56.13 | partially cloudy | GoPro Hero 7 | 7 | 2.58 | 50 | >90/75 | 630 |
| Forest 05 | 2020/07/07 13:10 | 61.76 | cloudy | GoPro Hero 7 | 7 | 2.58 | 50 | >90/75 | 630 |
| Forest 06 | 2020/07/07 13:43 | 67.28 | cloudy | GoPro Hero 7 | 7 | 2.58 | 50 | >90/75 | 630 |
| Grassland 2013 | 2013/06/01 11:17 | 41.34 | partially cloudy | Sony NEX-5N | 7.68 | 3.32 | 111 | 70/75 | 27 |
| Grassland 2015 | 2015/05/22 09:26 | 19.99 | cloud free | Sony NEX-7 | 14.2 | 3.68 | 169 | 75/75 | 57 |
| Grassland 2017 | 2017/05/18 08:53 | 13.58 | partially cloudy | Sony ILCE-7RM2 | 32.4 | 3.97 | 132 | 75/75 | 57 |
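The GSD column of the flight table can be related to the camera parameters listed in Appendix B. The following is a minimal sketch, assuming a nadir-looking pinhole camera over flat terrain, for which GSD equals pixel pitch times flying altitude divided by focal length. The source does not state how the GSD values were derived, and the function name `ground_sampling_distance_cm` is hypothetical. Under this assumption the three grassland flights reproduce the tabulated values after rounding to two decimals.

```python
def ground_sampling_distance_cm(sensor_width_mm, image_width_px,
                                focal_length_mm, altitude_m):
    """Approximate GSD in cm/px (assumed pinhole model, nadir view, flat terrain)."""
    # Physical width of one pixel on the sensor.
    pixel_pitch_mm = sensor_width_mm / image_width_px
    # Similar triangles: the millimetres cancel, leaving metres per pixel.
    gsd_m = pixel_pitch_mm * altitude_m / focal_length_mm
    return gsd_m * 100.0  # metres -> centimetres


# (sensor width mm, image width px, focal length mm, altitude m),
# copied from the camera and flight tables.
flights = {
    "Grassland 2013": (23.5, 4912, 16.0, 111.0),
    "Grassland 2015": (23.5, 6000, 18.0, 169.0),
    "Grassland 2017": (35.9, 7952, 15.0, 132.0),
}

gsd = {name: ground_sampling_distance_cm(*params)
       for name, params in flights.items()}

for name, value in gsd.items():
    print(f"{name}: {value:.2f} cm/px")
```

The forest flights are left out of the sketch on purpose: with the tabulated 17 mm focal length, this relation gives roughly 0.45 cm/px for the GoPro Hero 7 at 50 m rather than the reported 2.58 cm/px. One possible explanation, which is an assumption and not something the source confirms, is that the 17 mm figure is a 35 mm-equivalent focal length rather than the physical one.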

| Flight | Control Point XY Mean (m) | Control Point XY SD (m) | Control Point Z Mean (m) | Control Point Z SD (m) | Checkpoint XY Mean (m) | Checkpoint XY SD (m) | Checkpoint Z Mean (m) | Checkpoint Z SD (m) |
|---|---|---|---|---|---|---|---|---|
| Forest 01 | 0.0149 | 0.0003 | 0.0207 | 0.0003 | 0.0220 | 0.0002 | 0.0591 | 0.0010 |
| Forest 02 | 0.0082 | 0.0008 | 0.0217 | 0.0006 | 0.0377 | 0.0008 | 0.1989 | 0.0019 |
| Forest 03 | 0.0140 | 0.0001 | 0.0264 | 0.0012 | 0.0565 | 0.0003 | 0.1765 | 0.0030 |
| Forest 04 | 0.0112 | 0.0004 | 0.0122 | 0.0006 | 0.0529 | 0.0034 | 0.0861 | 0.0090 |
| Forest 05 | 0.0176 | 0.0005 | 0.0215 | 0.0005 | 0.0362 | 0.001 | 0.1344 | 0.0022 |
| Forest 06 | 0.0120 | 0.0002 | 0.0173 | 0.0003 | 0.0595 | 0.0053 | 0.1845 | 0.0223 |
| Grassland 2013 | 0.0001 | <0.0001 | 0.0001 | <0.0001 | 0.2917 | 0.0012 | 2.0691 | 0.0041 |
| Grassland 2015 | 0.0009 | <0.0001 | 0.0001 | <0.0001 | 0.1837 | 0.0006 | 1.3638 | 0.0095 |
| Grassland 2017 | 0.0040 | <0.0001 | 0.0001 | <0.0001 | 0.0700 | <0.0001 | 0.0007 | <0.0001 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ludwig, M.; Runge, C.M.; Friess, N.; Koch, T.L.; Richter, S.; Seyfried, S.; Wraase, L.; Lobo, A.; Sebastià, M.-T.; Reudenbach, C.; et al. Quality Assessment of Photogrammetric Methods—A Workflow for Reproducible UAS Orthomosaics. Remote Sens. 2020, 12, 3831. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12223831