A Simple Spatio–Temporal Data Fusion Method Based on Linear Regression Coefficient Compensation

Abstract

1. Introduction

2. Materials and Methods

2.1. Data

2.2. Methods

2.2.1. Data Pre-Processing and Environment

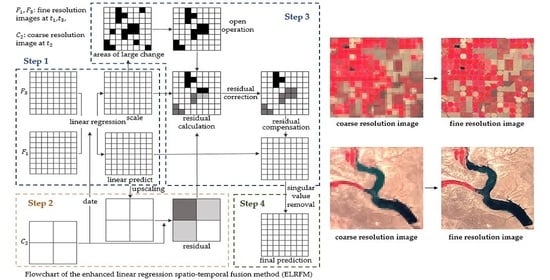

2.2.2. Enhanced Linear Regression Spatio-Temporal Fusion Method

Step 1. Linear Regression

Step 2. Residual Calculation

Step 3. Distribution of the Residual

Step 4. Singular Value Correction

2.2.3. Comparison and Evaluation

3. Results

3.1. Comparison

3.2. Accuracy Assessment

3.3. Further Verification

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Methods | Band | RMSE | AAD | AD | |
|---|---|---|---|---|---|
| STARFM | Green | 0.0123 | 0.0084 | −0.0003 | 0.9405 |
| STARFM | Red | 0.0217 | 0.0147 | −0.0021 | 0.9054 |
| STARFM | NIR | 0.0344 | 0.0176 | 0.0081 | 0.8886 |
| FSDAF | Green | 0.0128 | 0.0091 | −0.0005 | 0.9357 |
| FSDAF | Red | 0.0224 | 0.0157 | −0.0022 | 0.8985 |
| FSDAF | NIR | 0.0312 | 0.0177 | 0.0056 | 0.9136 |
| LR | Green | 0.0127 | 0.0107 | −0.0074 | 0.9589 |
| LR | Red | 0.0221 | 0.0181 | −0.0131 | 0.9372 |
| LR | NIR | 0.0299 | 0.0145 | 0.0033 | 0.9159 |
| ELRFM | Green | 0.0112 | 0.0084 | −0.0039 | 0.9605 |
| ELRFM | Red | 0.0123 | 0.0091 | −0.0021 | 0.9738 |
| ELRFM | NIR | 0.0244 | 0.0131 | 0.0013 | 0.9453 |
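The first three error columns in the table can be illustrated with a minimal sketch. It assumes that AAD stands for average absolute difference and AD for average difference, as these abbreviations are commonly used in the spatio-temporal fusion literature, and that differences are taken as predicted minus observed; neither the expansions nor the sign convention is stated in the text here. The function name `fusion_errors` is hypothetical, and the unlabeled sixth column is not reproduced.

```python
import math


def fusion_errors(predicted, observed):
    """Return (rmse, aad, ad) for two equal-length reflectance sequences.

    Assumption: differences are computed as predicted minus observed.
    """
    if len(predicted) != len(observed) or not predicted:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [p - o for p, o in zip(predicted, observed)]
    n = len(diffs)
    rmse = math.sqrt(sum(d * d for d in diffs) / n)  # root mean square error
    aad = sum(abs(d) for d in diffs) / n             # assumed: average absolute difference
    ad = sum(diffs) / n                              # assumed: average (signed) difference
    return rmse, aad, ad


# Illustrative values only, not data from the study.
rmse, aad, ad = fusion_errors([0.10, 0.20, 0.30], [0.12, 0.18, 0.30])
```

Under these definitions AD keeps the sign of the bias, so it can sit near zero while RMSE and AAD remain large, which is why the three columns are read together.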

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Bai, B.; Tan, Y.; Donchyts, G.; Haag, A.; Weerts, A. A Simple Spatio–Temporal Data Fusion Method Based on Linear Regression Coefficient Compensation. Remote Sens. 2020, 12, 3900. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12233900
