Design and Development of a Smart Variable Rate Sprayer Using Deep Learning

Abstract

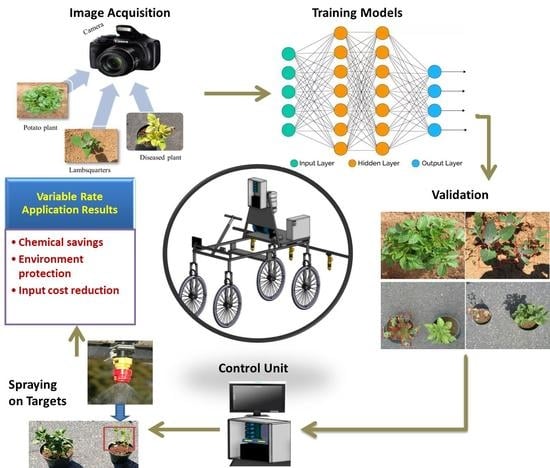

1. Introduction

2. Materials and Methods

2.1. Hardware Development

2.2. Calibration of SVRS Components

2.3. Image Acquisition

2.4. Training of Models

2.5. Evaluation Indicators

2.6. Development of Models

2.7. Design of the Laboratory Experiment

2.8. Measurement of Spraying Patterns and Percent Area Coverage

2.9. Statistical Analysis

3. Results

3.1. Training and Detection of Deep Learning Models

3.2. Performance Evaluation of SVRS

3.3. Spraying Patterns and Percent Area Coverage

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| No | Equipment | Specification | No | Equipment | Specification |
|---|---|---|---|---|---|
| 1 | ZOTAC Mini PC | GeForce GTX 1050 | 11 | Bypass valve | 12.7 mm Bypass Relief Valve; 0–1724 kPa |
| 2 | Relay module | 8 channels | 12 | Pressure gauge | Liquid Filled Pressure Gauge 1103 kPa |
| 3 | Arduino Mega | Elegoo MEGA 2560 R3 | 13 | Pressure control valve | Water Pressure Regulator Valve |
| 4 | LCD screen | 10 Inch IPS LCD | 14 | Three nozzles | TeeJet XR Extended Range Spray Nozzle |
| 5 | Speedometer | Analog | 15 | Filter | Hypro 3350–0079 Nylon Line Strainer |
| 6 | Three solenoid valves | Brass Solenoid Valve DC 12 V | 16 | Pump | 12 V Diaphragm Pump – 15 lpm |
| 7 | Three cameras | Logitech C920 Webcam HD Pro | 17 | Shut off valve | On/Off |
| 8 | Power supply-1 | 42,000 mAh 155 Wh Power Station | 18 | Flow meter | Water Flow Control Meter LCD Display Controller |
| 9 | Power supply-2 | 230 Wh 62,400 mAh Power Station | 19 | Supply tank | 15 l |
| 10 | Power supply-3 | 12 V 18 Ah SLA Battery | 20 | Gasoline engine | 7.5 l |
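The component list pairs three cameras with three solenoid valves and three nozzles, alongside an 8-channel relay module, an Arduino Mega and a ZOTAC mini PC with a GPU for running the detector. The sketch below shows one way the PC side could turn per-camera detections into valve commands. It is an illustration under stated assumptions, not the authors' control software: the index mapping (camera i controls valve i), the confidence threshold and the one-line ASCII message format are all hypothetical.

```python
from typing import List, Sequence

# Hypothetical layout: camera i looks at the ground under nozzle section i,
# so its detections decide whether solenoid valve i opens.
NUM_SECTIONS = 3


def valve_states(detections: Sequence[Sequence[float]],
                 conf_threshold: float = 0.5) -> List[bool]:
    """Return one open/closed flag per valve.

    `detections[i]` holds the confidence scores of the boxes found by camera i.
    A valve opens if its camera reports at least one box at or above the
    (assumed) confidence threshold.
    """
    if len(detections) != NUM_SECTIONS:
        raise ValueError(f"expected {NUM_SECTIONS} cameras, got {len(detections)}")
    return [any(score >= conf_threshold for score in scores) for scores in detections]


def encode_command(states: Sequence[bool]) -> bytes:
    """Pack valve flags into a newline-terminated ASCII line, e.g. b"101\\n".

    This message format is hypothetical; the microcontroller code would need
    to parse the same format and switch the matching relay channels.
    """
    return ("".join("1" if is_open else "0" for is_open in states) + "\n").encode("ascii")


if __name__ == "__main__":
    # Camera 0 sees a confident target, camera 1 sees nothing,
    # camera 2 has one weak and one confident box.
    frame_detections = [[0.91], [], [0.30, 0.62]]
    print(encode_command(valve_states(frame_detections)))  # prints b'101\n'
```

On the PC, the resulting bytes could be written to the microcontroller with pySerial, e.g. `serial.Serial("/dev/ttyACM0", 9600).write(cmd)`; the port name and baud rate are placeholders.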

| Experiment | Test | Replication | Target | Weather Condition | Temperature Range (°C) | Light Intensity (Lux) × 100 |
|---|---|---|---|---|---|---|
| Weed detection | 1 | 6 | Weed plant | Cloudy | 9.50–13.0 | 100–360 |
| | 2 | 6 | | Partly cloudy | 13.5–19.0 | 400–545 |
| | 3 | 6 | | Sunny | 16.8–25.0 | 550–1000 |
| Simulated diseased plant detection | 4 | 6 | Simulated diseased plant | Cloudy | 10.0–12.5 | 100–350 |
| | 5 | 6 | | Partly cloudy | 14.0–17.5 | 430–468 |
| | 6 | 6 | | Sunny | 16.0–24.5 | 500–1000 |
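Each weather condition in this design was run with six replications, and the descriptive statistics table reports a VA and a UA treatment with N = 6 per condition, i.e. a balanced 2 × 3 factorial with 6 replicates per cell. The degrees of freedom expected for a two-way ANOVA with interaction on that layout can be checked with a small helper; the function name is illustrative.

```python
from typing import Dict


def two_way_anova_df(a: int, b: int, n: int) -> Dict[str, int]:
    """Degrees of freedom for a balanced two-way ANOVA with interaction.

    a: levels of the first factor (treatment)
    b: levels of the second factor (weather condition)
    n: replicates per treatment x condition cell
    """
    return {
        "treatment": a - 1,
        "condition": b - 1,
        "treatment x condition": (a - 1) * (b - 1),
        "error": a * b * (n - 1),
        "total": a * b * n - 1,
    }


if __name__ == "__main__":
    print(two_way_anova_df(a=2, b=3, n=6))
    # prints {'treatment': 1, 'condition': 2, 'treatment x condition': 2, 'error': 30, 'total': 35}
```

These values match the DF column of the ANOVA table (1, 2, 2, 30 and 35), and the four component terms sum to the total.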

| Datasets | Model | Precision | Recall | F1 Score | mAP (%) | FPS |
|---|---|---|---|---|---|---|
| Weed plants | Tiny-YOLOv3 | 0.86 | 0.79 | 0.78 | 78.2 | 30.0 |
| Simulated diseased plants | Tiny-YOLOv3 | 0.78 | 0.8 | 0.75 | 76.4 | 30.5 |
| Weed plants | YOLOv3 | 0.92 | 0.87 | 0.85 | 93.2 | 14.6 |
| Simulated diseased plants | YOLOv3 | 0.84 | 0.82 | 0.83 | 91.4 | 15.6 |
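The text defining the evaluation indicators is not reproduced here; the sketch below assumes the usual object-detection definitions, precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 as their harmonic mean. Applying that F1 formula to the rounded precision and recall in the table gives 0.82, 0.79, 0.89 and 0.83 for the four rows, so only the last row reproduces the reported F1; the reported values may have been aggregated differently (for example, per image), which the table does not state.

```python
from typing import Tuple


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall and F1 from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from_pr(precision, recall)


if __name__ == "__main__":
    # (precision, recall) pairs copied from the detection table above.
    reported = {
        ("Weed plants", "Tiny-YOLOv3"): (0.86, 0.79),
        ("Simulated diseased plants", "Tiny-YOLOv3"): (0.78, 0.80),
        ("Weed plants", "YOLOv3"): (0.92, 0.87),
        ("Simulated diseased plants", "YOLOv3"): (0.84, 0.82),
    }
    for (dataset, model), (p, r) in reported.items():
        print(f"{dataset} / {model}: F1 from P and R = {f1_from_pr(p, r):.2f}")
```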

| Experiment | Response Variable | Source | DF | Mean Square | F-Value | p-Value |
|---|---|---|---|---|---|---|
| Weed detection | Volume consumption (l) | Treatment | 1 | 7.5826 | 2108.4 | <0.05 |
| | | Condition | 2 | 0.0041 | 1.15 | 0.329 |
| | | Treatment × condition | 2 | 0.0009 | 0.26 | 0.773 |
| | | Error | 30 | 0.0036 | | |
| | | Total | 35 | | | |
| Simulated diseased plant detection | Volume consumption (l) | Treatment | 1 | 9.7906 | 2517.4 | <0.05 |
| | | Condition | 2 | 0.0076 | 1.97 | 0.156 |
| | | Treatment × condition | 2 | 0.00222 | 0.57 | 0.571 |
| | | Error | 30 | 0.0038 | | |
| | | Total | 35 | | | |
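In the ANOVA table each F-value is the term's mean square divided by the error mean square (for example, 0.0041 / 0.0036 ≈ 1.14 for weather condition in the weed experiment, close to the reported 1.15, which was presumably computed from unrounded mean squares). For terms with 2 numerator degrees of freedom the upper tail of the F distribution has the closed form P(F > f) = (1 + 2f/d2)^(-d2/2), where d2 is the error DF, so the condition and interaction p-values can be checked with the standard library alone. This is a verification sketch, not the authors' analysis software.

```python
def f_upper_tail_df1_2(f_value: float, df_error: int) -> float:
    """P(F > f_value) for an F(2, df_error) distribution.

    Closed form valid only when the numerator has 2 degrees of freedom:
    the F CDF is the regularized incomplete beta I_z(1, d2/2) with
    z = 2f / (2f + d2), and I_z(1, b) = 1 - (1 - z)**b.
    """
    if f_value < 0 or df_error <= 0:
        raise ValueError("f_value must be >= 0 and df_error > 0")
    return (1.0 + 2.0 * f_value / df_error) ** (-df_error / 2.0)


if __name__ == "__main__":
    # F-values with 2 numerator DF and 30 error DF, from the ANOVA table above.
    terms = {
        "Weed detection: condition": 1.15,
        "Weed detection: treatment x condition": 0.26,
        "Simulated diseased plants: condition": 1.97,
        "Simulated diseased plants: treatment x condition": 0.57,
    }
    for name, f_value in terms.items():
        print(f"{name}: p = {f_upper_tail_df1_2(f_value, 30):.3f}")
```

The computed values agree with the tabulated 0.329, 0.773, 0.156 and 0.571 to within about 0.002, the residual coming from the rounding of the F-values. The treatment term has 1 numerator DF, so this shortcut does not apply to it; with F above 2000 on (1, 30) DF its p-value is far below 0.05 in any case.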

| Response Variable | Treatment | Condition | N | Mean | SD | Minimum | Maximum | % Saving |
|---|---|---|---|---|---|---|---|---|
| Weed detection experiment | | | | | | | | |
| Volume consumption (l) | VA | Cloudy | 6 | 1.2180 | 0.08 | 1.085 | 1.324 | 41.76 |
| | | Partly cloudy | 6 | 1.2112 | 0.06 | 1.11 | 1.324 | 42.71 |
| | | Sunny | 6 | 1.1887 | 0.03 | 1.122 | 1.2 | 43.29 |
| | UA | Cloudy | 6 | 2.0917 | 0.01 | 2.076 | 2.108 | NA |
| | | Partly cloudy | 6 | 2.1145 | 0.07 | 2 | 2.2 | |
| | | Sunny | 6 | 2.0962 | 0.06 | 2.004 | 2.17 | |
| Simulated diseased plant detection experiment | | | | | | | | |
| Volume consumption (l) | VA | Cloudy | 6 | 1.1118 | 0.07 | 1.007 | 1.2 | 47.79 |
| | | Partly cloudy | 6 | 1.1303 | 0.05 | 1.06 | 1.21 | 48.67 |
| | | Sunny | 6 | 1.1047 | 0.05 | 1.026 | 1.18 | 48.47 |
| | UA | Cloudy | 6 | 2.1297 | 0.02 | 2.1 | 2.153 | NA |
| | | Partly cloudy | 6 | 2.2022 | 0.10 | 2.07 | 2.32 | |
| | | Sunny | 6 | 2.144 | 0.03 | 2.1 | 2.19 | |
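The % Saving column appears to be the reduction in mean volume consumption of VA relative to UA under the same weather condition, i.e. 100 × (1 - mean_VA / mean_UA). Recomputing it from the rounded means in the table reproduces every tabulated saving to within about 0.01 percentage points. The dictionary in the sketch simply restates the table's means; the function name is illustrative.

```python
def percent_saving(va_mean: float, ua_mean: float) -> float:
    """Percentage reduction of the VA mean volume relative to the UA mean volume."""
    if ua_mean <= 0:
        raise ValueError("ua_mean must be positive")
    return (1.0 - va_mean / ua_mean) * 100.0


# Mean volume consumption (l) from the table above: (VA mean, UA mean).
MEANS = {
    ("Weed detection", "Cloudy"): (1.2180, 2.0917),
    ("Weed detection", "Partly cloudy"): (1.2112, 2.1145),
    ("Weed detection", "Sunny"): (1.1887, 2.0962),
    ("Simulated diseased plant detection", "Cloudy"): (1.1118, 2.1297),
    ("Simulated diseased plant detection", "Partly cloudy"): (1.1303, 2.2022),
    ("Simulated diseased plant detection", "Sunny"): (1.1047, 2.1440),
}

if __name__ == "__main__":
    for (experiment, condition), (va, ua) in MEANS.items():
        print(f"{experiment}, {condition}: {percent_saving(va, ua):.2f} % saving")
```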

| Experiment | Treatment | N | Mean | SD | SE Mean | p-Value |
|---|---|---|---|---|---|---|
| Weed plants detection | UA | 15 | 46.6 | 12.3 | 3.2 | 0.83 |
| | VA | 15 | 47.42 | 9.87 | 2.5 | |
| Simulated diseased plants detection | UA | 15 | 45.98 | 10.91 | 2.7 | 0.85 |
| | VA | 15 | 48.62 | 10.01 | 2.2 | |
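The SE Mean column is conventionally the standard error of the mean, SD / √N. With N = 15 this reproduces the weed-detection entries (3.2 and 2.5); for the simulated-diseased-plant rows it gives about 2.8 and 2.6 rather than the reported 2.7 and 2.2, a difference the formula alone does not explain. The sketch restates the table's SD values; the function name is illustrative.

```python
import math


def standard_error(sd: float, n: int) -> float:
    """Standard error of the mean: sample standard deviation divided by sqrt(n)."""
    if n <= 0:
        raise ValueError("n must be positive")
    return sd / math.sqrt(n)


if __name__ == "__main__":
    # (experiment, treatment, SD) from the table above; N = 15 in every row.
    rows = [
        ("Weed plants detection", "UA", 12.3),
        ("Weed plants detection", "VA", 9.87),
        ("Simulated diseased plants detection", "UA", 10.91),
        ("Simulated diseased plants detection", "VA", 10.01),
    ]
    for experiment, treatment, sd in rows:
        print(f"{experiment} {treatment}: SE = {standard_error(sd, 15):.1f}")
```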

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Hussain, N.; Farooque, A.A.; Schumann, A.W.; McKenzie-Gopsill, A.; Esau, T.; Abbas, F.; Acharya, B.; Zaman, Q. Design and Development of a Smart Variable Rate Sprayer Using Deep Learning. Remote Sens. 2020, 12, 4091. https://doi.org/10.3390/rs12244091
