Image Similarity Metrics Suitable for Infrared Video Stabilization during Active Wildfire Monitoring: A Comparative Analysis

Abstract

1. Introduction

2. Background: Image Similarity Metrics

2.1. Intensity 2D Correlation

2.2. Intensity Mean Squared Difference (IMSD)

2.3. Mutual Information

2.4. Normalized Mutual Information (NMI)

3. Methodology

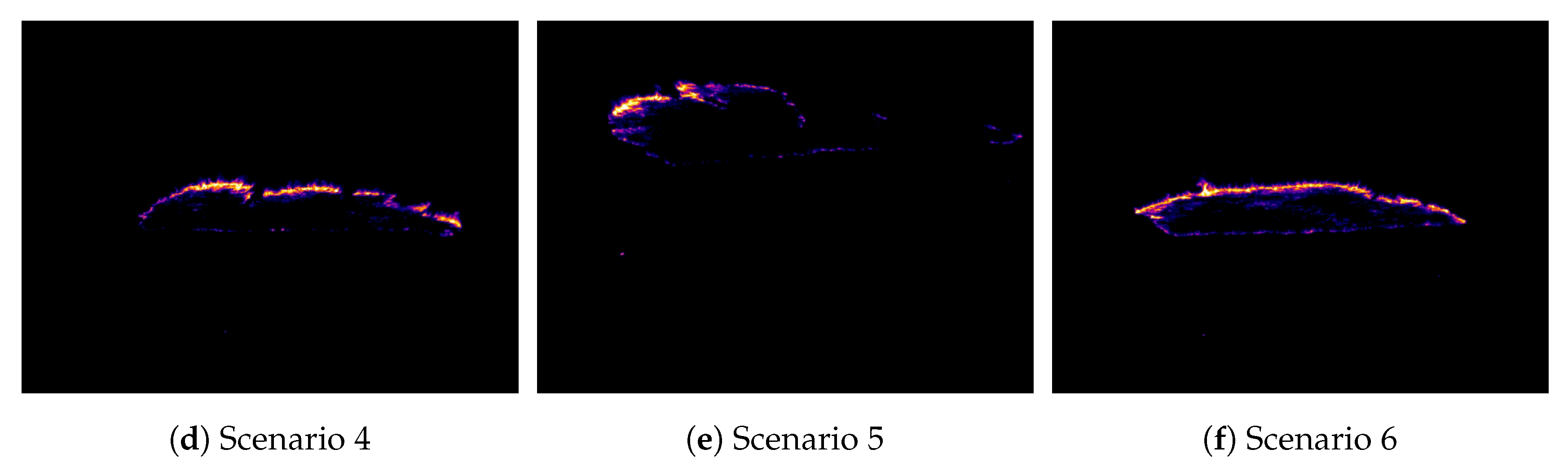

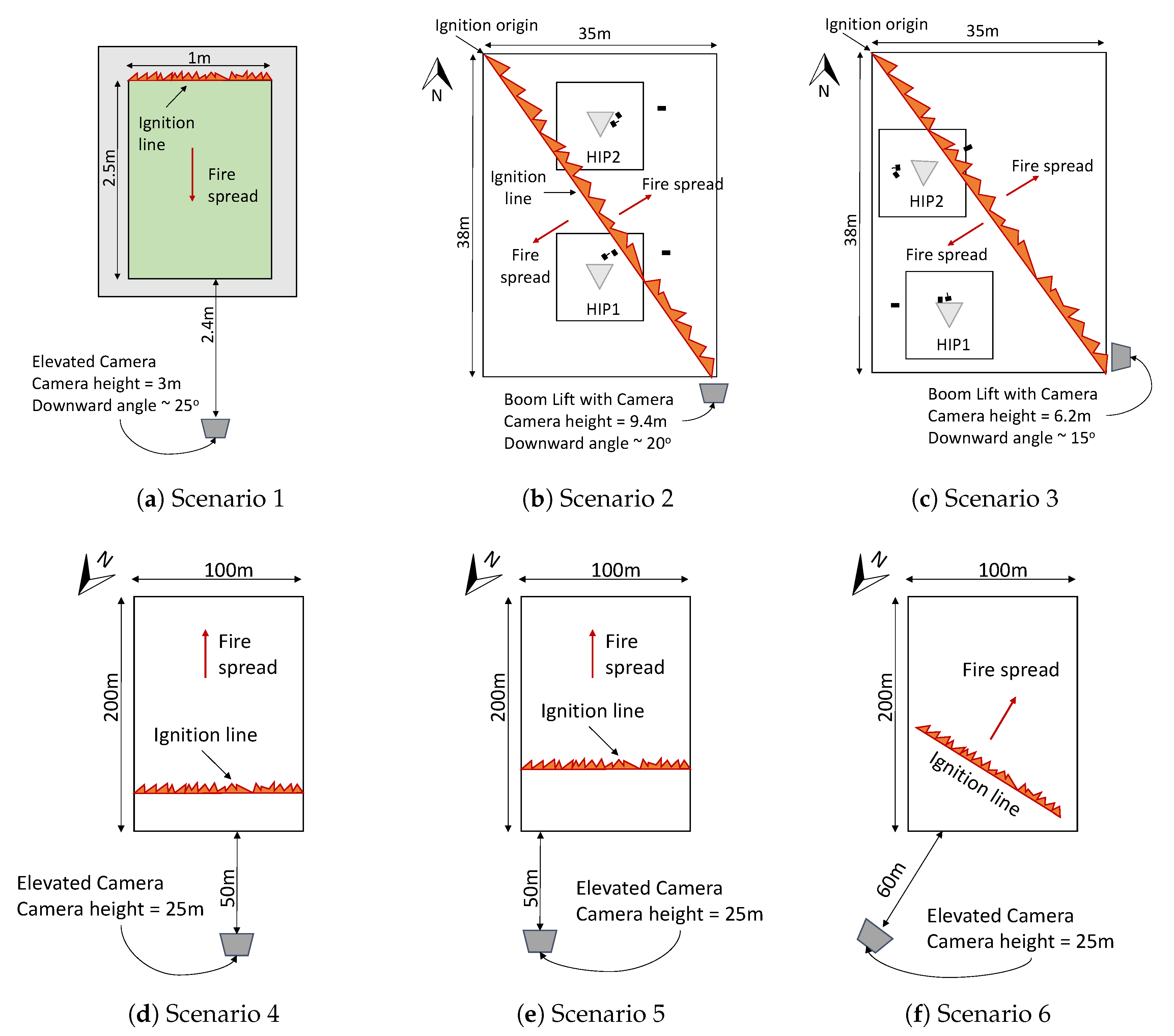

3.1. Test Data

3.2. Approach Overview

3.3. Global Sensitivity Analysis

- Generate a sample of the model input space of size $2N$. This can be done through random sampling or with sequences of quasi-random numbers. The latter approach significantly reduces the sample size needed for the estimated statistics to converge.

- Split the input sample into two groups. The result is two matrices of size $N \times M$, where $M$ is the number of model inputs. We call these matrices $A$ and $B$.

- Create a third matrix $C$ by combining columns from $A$ and $B$. Specifically, $C$ is a vertical concatenation of $M$ submatrices $C_i$, where each $C_i$ is composed of all columns of $B$ except the $i$th column, which is taken from $A$.

- Run the model for each sample in matrices $A$, $B$ and $C$, thus obtaining output vectors $Y_A$, $Y_B$ and $Y_C$.
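The sampling steps listed above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation. It assumes a total sample of 2N points, so that A and B each have N rows, and inputs drawn uniformly from the unit hypercube by plain random sampling. The function `build_sample_matrices` and the stand-in `toy_model` are hypothetical names introduced only for this example.

```python
import numpy as np


def build_sample_matrices(n, m, rng):
    """Return A, B (each n x m) and C (m*n x m) for a variance-based GSA."""
    # Step 1: draw 2n points in the unit hypercube (assumed: plain random sampling).
    sample = rng.random((2 * n, m))
    # Step 2: split the sample into two groups of n rows each.
    a, b = sample[:n], sample[n:]
    # Step 3: build C_i as a copy of B whose i-th column is taken from A,
    # then stack the m submatrices vertically.
    blocks = []
    for i in range(m):
        c_i = b.copy()
        c_i[:, i] = a[:, i]
        blocks.append(c_i)
    c = np.vstack(blocks)
    return a, b, c


def toy_model(x):
    # Hypothetical stand-in for the real model: one scalar output per input row.
    return x[:, 0] + 2.0 * x[:, 1] ** 2


rng = np.random.default_rng(0)
a, b, c = build_sample_matrices(n=4, m=3, rng=rng)

# Step 4: run the model on every row of A, B and C.
y_a, y_b, y_c = toy_model(a), toy_model(b), toy_model(c)
print(a.shape, b.shape, c.shape, y_c.shape)
```

In an actual analysis the unit-hypercube columns would be rescaled to the range of each model input, and a quasi-random sequence could replace `rng.random` to reach convergence with a smaller sample.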

3.4. Local Sensitivity Analysis

4. Results

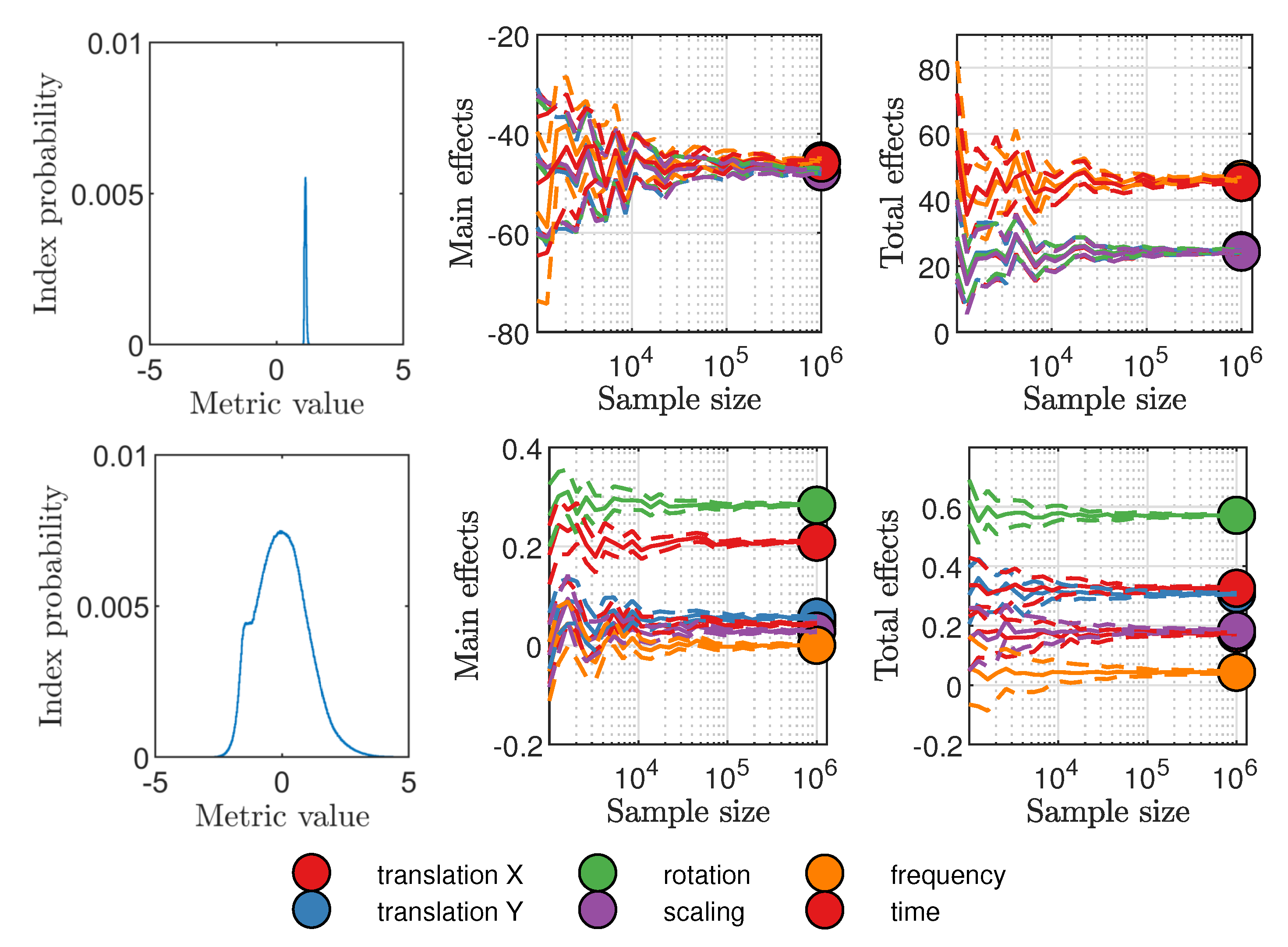

4.1. GSA Convergence Considerations

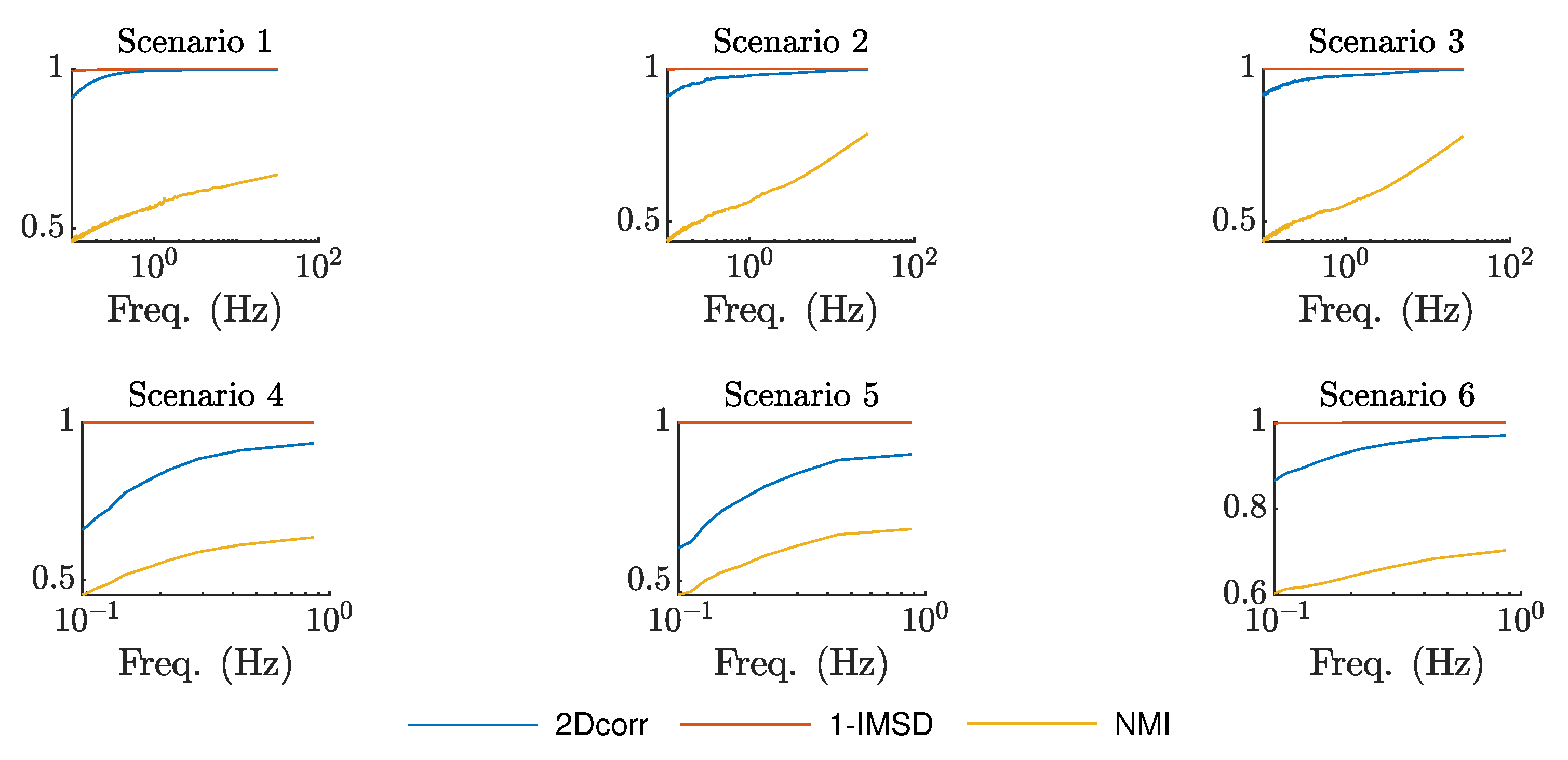

4.2. GSA Results

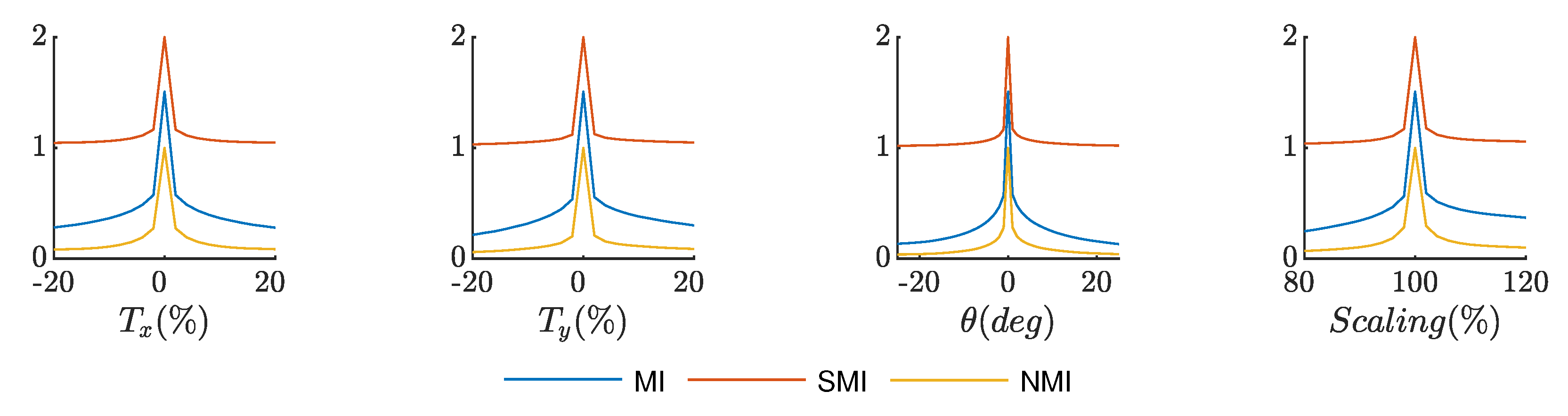

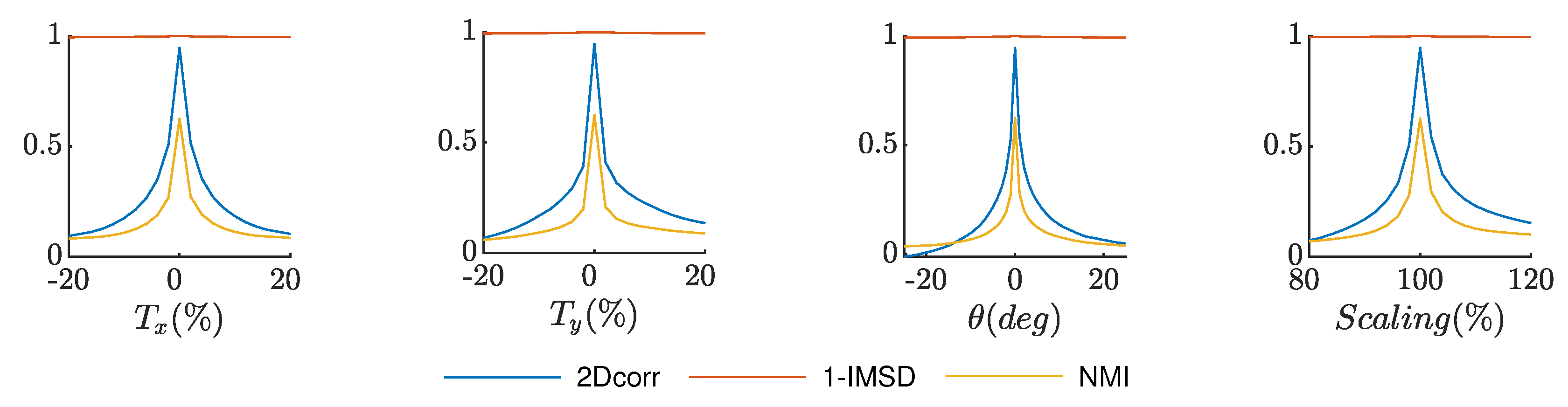

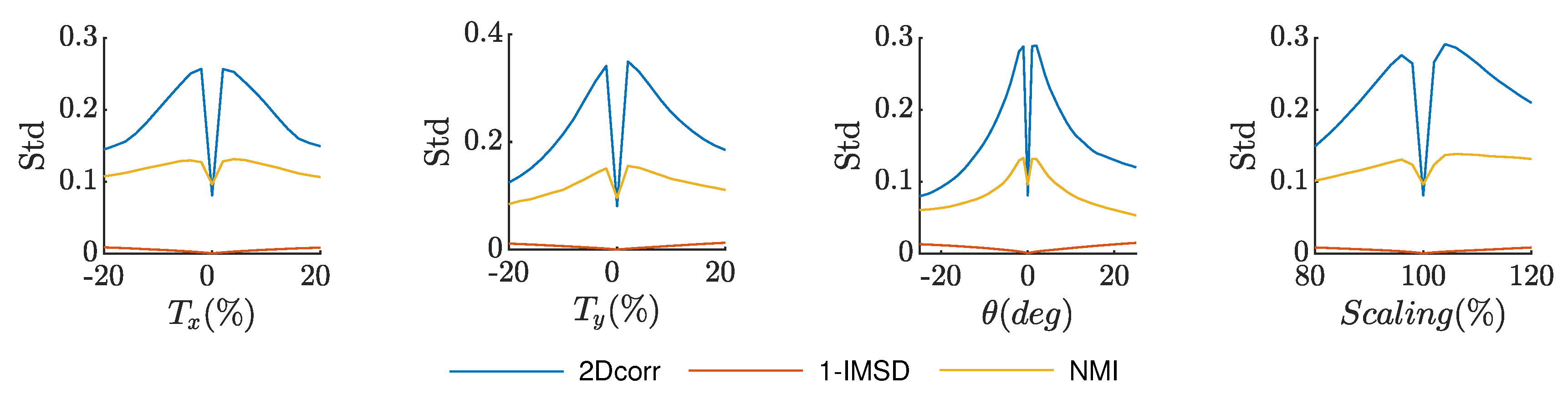

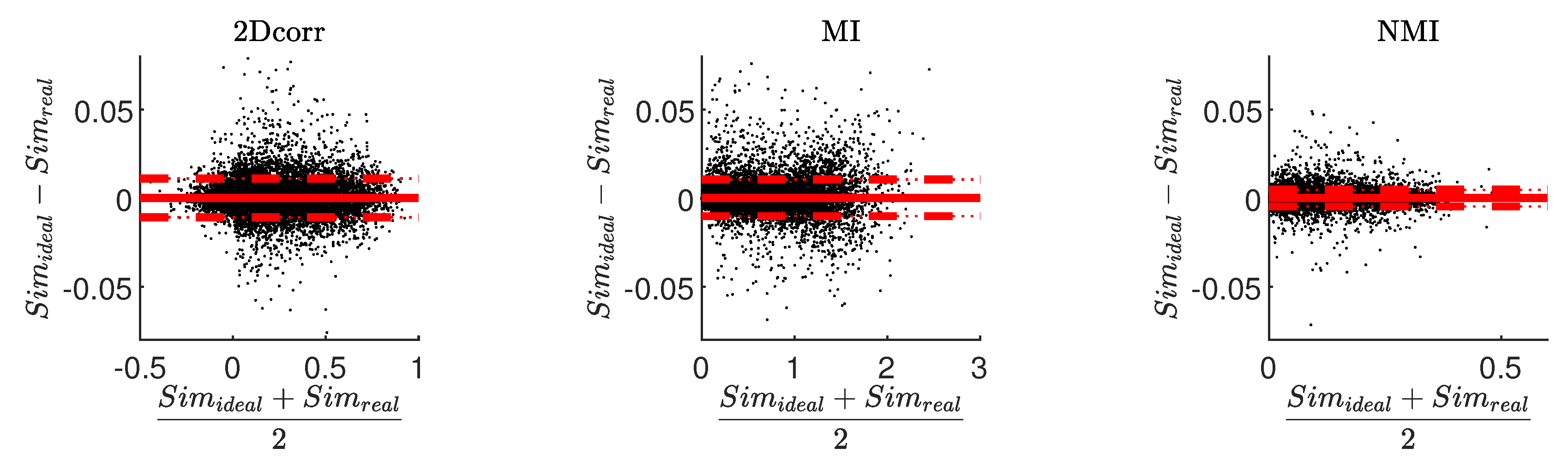

4.3. LSA Results

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Scenario | Camera Commercial Name | Spectral Range (µm) | Brightness Temperature Range (°C) | Image Resolution (Pixels) | Field of View (°) | Thermal Sensitivity (mK) | Recording Frequency (Hz) |
|---|---|---|---|---|---|---|---|
| 1 | Optris PI 640 | [7.5, 13] | [20, 900] | 640 × 480 | 60 × 45 | 75 | 32 |
| 2 | Optris PI 400 | [7.5, 13] | [200, 1500] | 382 × 288 | 60 × 45 | 75 | 27 |
| 3 | Optris PI 400 | [7.5, 13] | [200, 1500] | 382 × 288 | 60 × 45 | 75 | 27 |
| 4 | FLIR SC660 | [7.5, 13] | [300, 1500] | 640 × 480 | 45 × 30 | 30 | 1 |
| 5 | FLIR SC660 | [7.5, 13] | [300, 1500] | 640 × 480 | 45 × 30 | 30 | 1 |
| 6 | FLIR SC660 | [7.5, 13] | [300, 1500] | 640 × 480 | 45 × 30 | 30 | 1 |

| Video Sequence | Translation Range (% of Width/Height) | Rotation Range (°) | Scaling Range | Frequency Range (Hz) | Time Range (s) |
|---|---|---|---|---|---|
| 1 | [−20, 20] | [−25, 25] | [0.8, 1.2] | [0.1, 32] | [60, 240] |
| 2 | [−20, 20] | [−25, 25] | [0.8, 1.2] | [0.1, 27] | [8, 660] |
| 3 | [−20, 20] | [−25, 25] | [0.8, 1.2] | [0.1, 27] | [23, 700] |
| 4 | [−20, 20] | [−25, 25] | [0.8, 1.2] | [0.1, 0.86] | [90, 1560] |
| 5 | [−20, 20] | [−25, 25] | [0.8, 1.2] | [0.1, 0.88] | [45, 700] |
| 6 | [−20, 20] | [−25, 25] | [0.8, 1.2] | [0.1, 0.87] | [90, 770] |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Valero, M.M.; Verstockt, S.; Mata, C.; Jimenez, D.; Queen, L.; Rios, O.; Pastor, E.; Planas, E. Image Similarity Metrics Suitable for Infrared Video Stabilization during Active Wildfire Monitoring: A Comparative Analysis. Remote Sens. 2020, 12, 540. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12030540
