Lossy and Lossless Video Frame Compression: A Novel Approach for High-Temporal Video Data Analytics

Abstract

1. Introduction

- A novel technique based on the mean predictive block value is proposed to manage computational complexity in lossless and lossy block matching algorithms (BMAs).

- The proposed method is shown to produce high-resolution predicted frames for low, medium, and high motion activity videos.

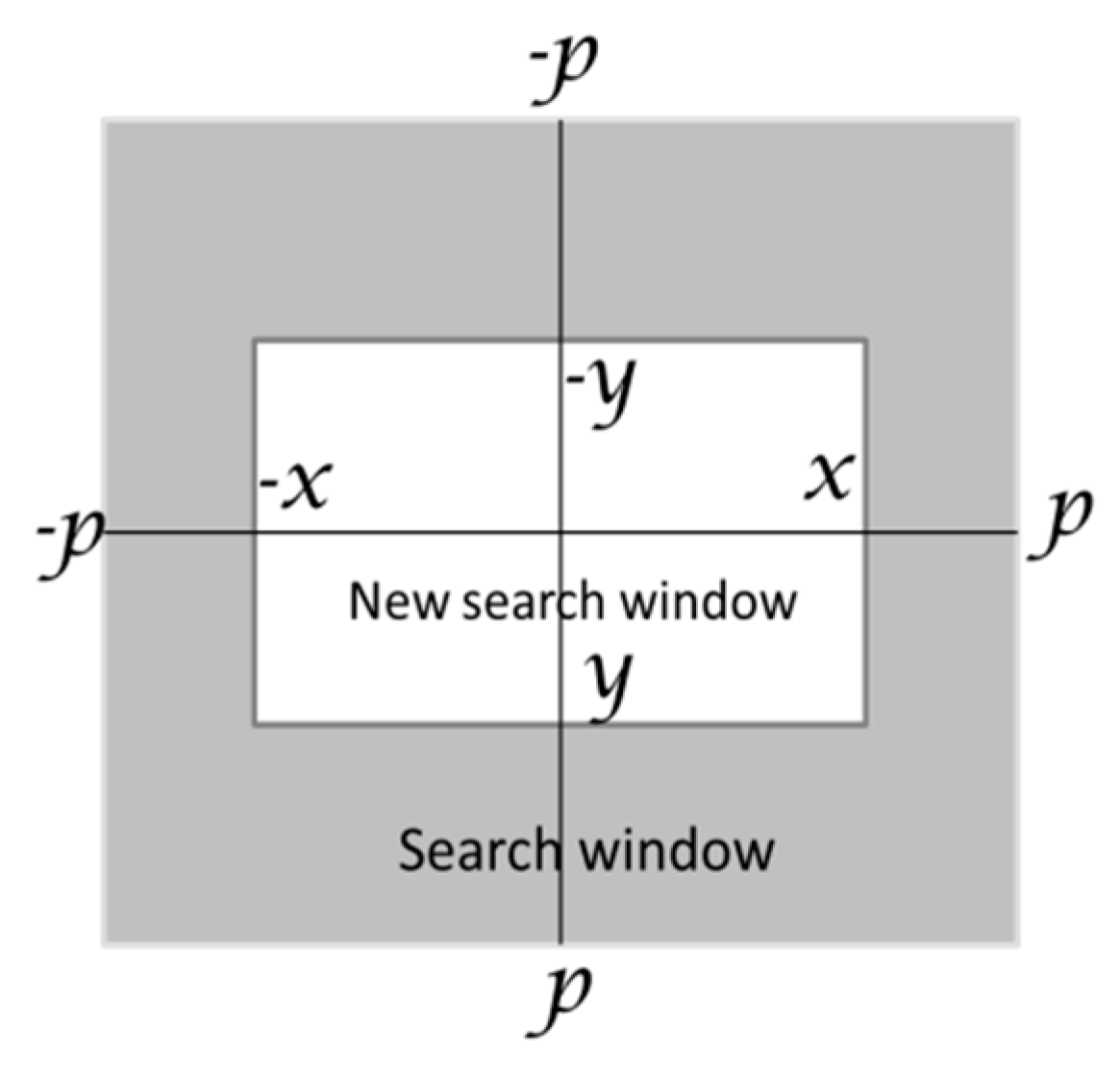

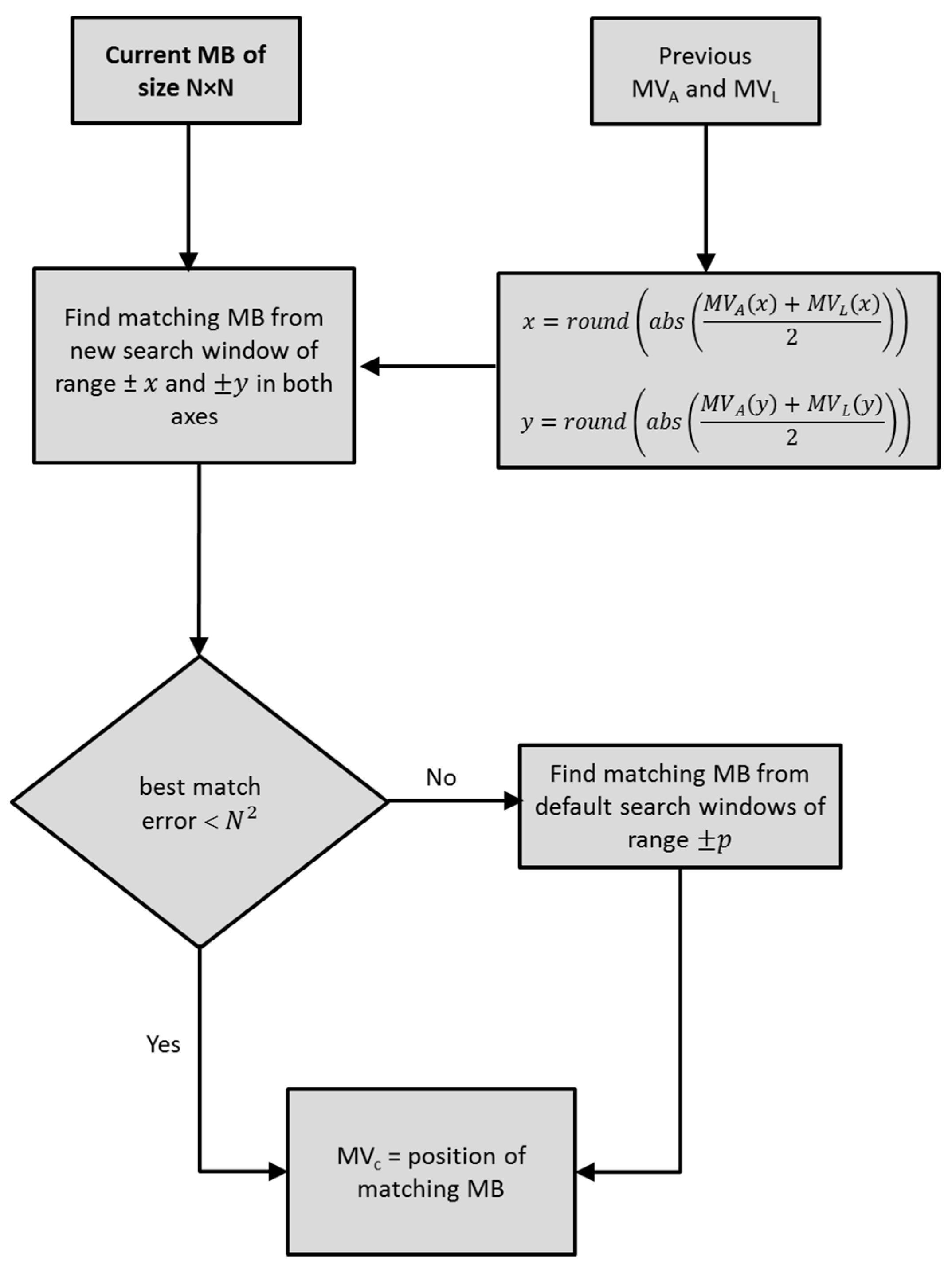

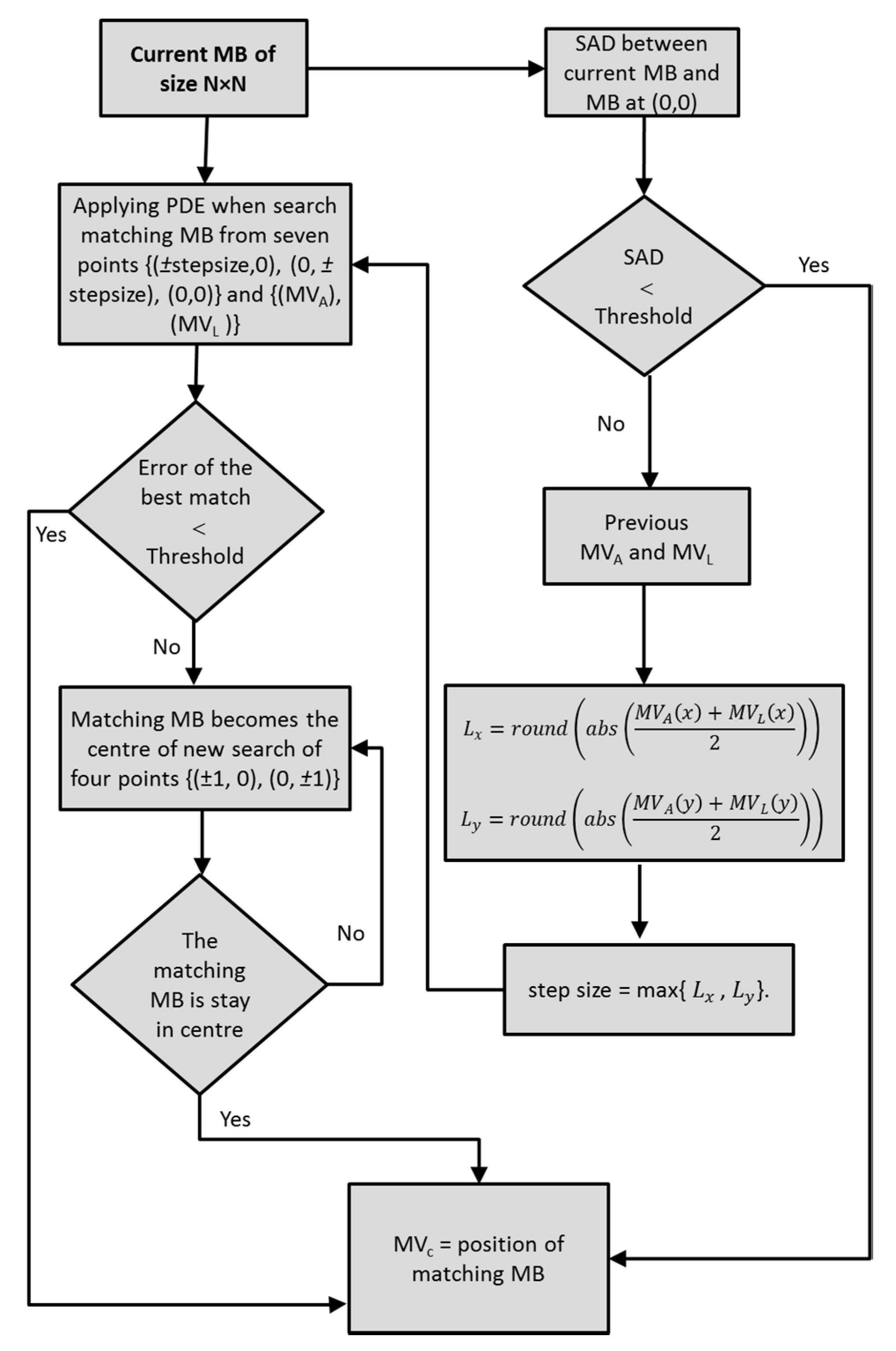

- For lossless prediction, the proposed algorithm speeds up the search process and reduces the computational complexity. The new search window is determined from the mean of the two motion vectors of the previously coded above and left neighboring macroblocks, so there is a high probability that it contains the global minimum. The partial distortion elimination (PDE) algorithm is then applied within this window.

- For lossy block matching, previously coded spatially neighboring macroblocks are used to determine the step size of the initial search pattern. Seven positions are examined in the first step and five in later steps. The PDE algorithm is applied to speed up the search.
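One way to read the seven first-step positions is as five pattern points on the step-size boundary plus the motion vectors of the above and left neighbors. The sketch below is illustrative only: the function and parameter names are hypothetical, and the step size is taken as an input rather than derived from the neighbors.

```python
def first_step_candidates(mv_above, mv_left, step_size):
    """Return the first-step search positions as (dx, dy) offsets.

    Five pattern points on the step-size boundary plus the two neighboring
    motion vectors give seven positions when none of them coincide.
    """
    s = step_size
    pattern = [(0, 0), (s, 0), (-s, 0), (0, s), (0, -s)]
    candidates = []
    for point in pattern + [tuple(mv_above), tuple(mv_left)]:
        if point not in candidates:  # skip duplicates, keep first-seen order
            candidates.append(point)
    return candidates


# Hypothetical neighbor vectors, chosen only to show the seven-point case.
print(first_step_candidates(mv_above=(2, 1), mv_left=(3, -1), step_size=3))
```

When a neighboring vector coincides with a pattern point, fewer than seven distinct positions remain, which is why duplicates are dropped before matching.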

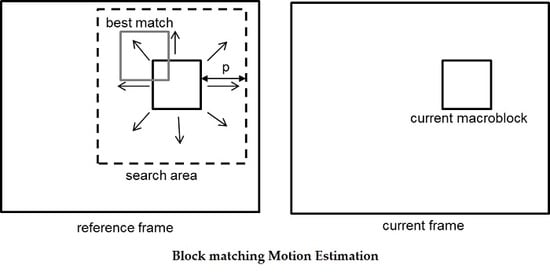

2. Fast Block Matching Algorithms

2.1. Fixed Set of Search Patterns

2.2. Predictive Search

2.3. Partial Distortion Elimination Algorithm

3. Mean Predictive Block Matching Algorithms for Lossless (MPBMLS) and Lossy (MPBMLY) Compression

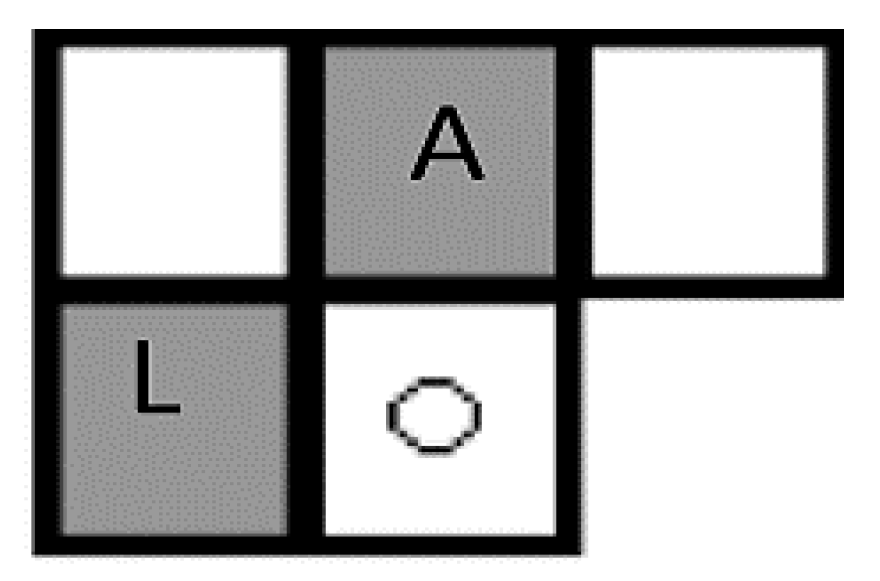

Algorithm 1. Neighboring macroblocks

1: Let MB represent the current macroblock, where MBSet = {S | S ∈ video frames}
2: ForEach MB in MBSet
3:   Find A and L, where
4:   - A and L ∈ MBSet
5:   - A is the top motion vector (MVA)
6:   - L is the left motion vector (MVL)
7:   Compute SI, where
8:   - SI is the size of MB = N × N (number of pixels)
9: End Loop

Algorithm 2. Finding the new search window

1: Let MB represent the current macroblock in MBSet
2: ForEach MB in MBSet:
3:   Find x, y as:
4:
5:
6:   Such that x, y ≤ , where is the search window size for the Full Search algorithm
7:   Find NW representing the set of all points at the corners of the new search window rectangle as:
8:
9: End Loop
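The expressions for x, y, and NW do not appear in the listing above. Purely as an illustration, the sketch below assumes that x and y are the rounded means of the absolute components of MVA and MVL, capped at a full-search range `p`, and that NW holds the four corners (±x, ±y). These formulas, the default `p = 7`, and all function names are assumptions, not the authors' definitions.

```python
def new_search_window(mv_above, mv_left, p=7):
    """Return (x, y) half-widths and the corner points NW of the reduced window.

    ASSUMPTION: x and y are the rounded means of the absolute components of
    the above (MVA) and left (MVL) motion vectors, capped at the full-search
    range p. The source listing does not show the actual formulas.
    """
    x = min(p, (abs(mv_above[0]) + abs(mv_left[0]) + 1) // 2)  # round half up
    y = min(p, (abs(mv_above[1]) + abs(mv_left[1]) + 1) // 2)
    corners = [(-x, -y), (x, -y), (-x, y), (x, y)]
    return (x, y), corners


def window_offsets(x, y):
    """Enumerate every (dx, dy) offset inside the rectangle spanned by the corners."""
    return [(dx, dy) for dy in range(-y, y + 1) for dx in range(-x, x + 1)]


# Hypothetical neighbor vectors for demonstration.
(x, y), nw = new_search_window(mv_above=(4, 2), mv_left=(2, 0))
print(x, y, nw)                   # half-widths and corner points
print(len(window_offsets(x, y)))  # candidates searched instead of (2p + 1)**2
```

Under these assumptions, small neighboring motion vectors shrink the window well below the full-search size, while large ones fall back to the full range.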

| Algorithm 3 Proposed MPBM technique | |

| Step 1 | 1: Let s = ∑SADcenter (i.e., midpoint of the current search) and Th a pre-defined threshold value. 2: IF s < Th: 3: No motion can be found 4: Process is completed. 5: END IF |

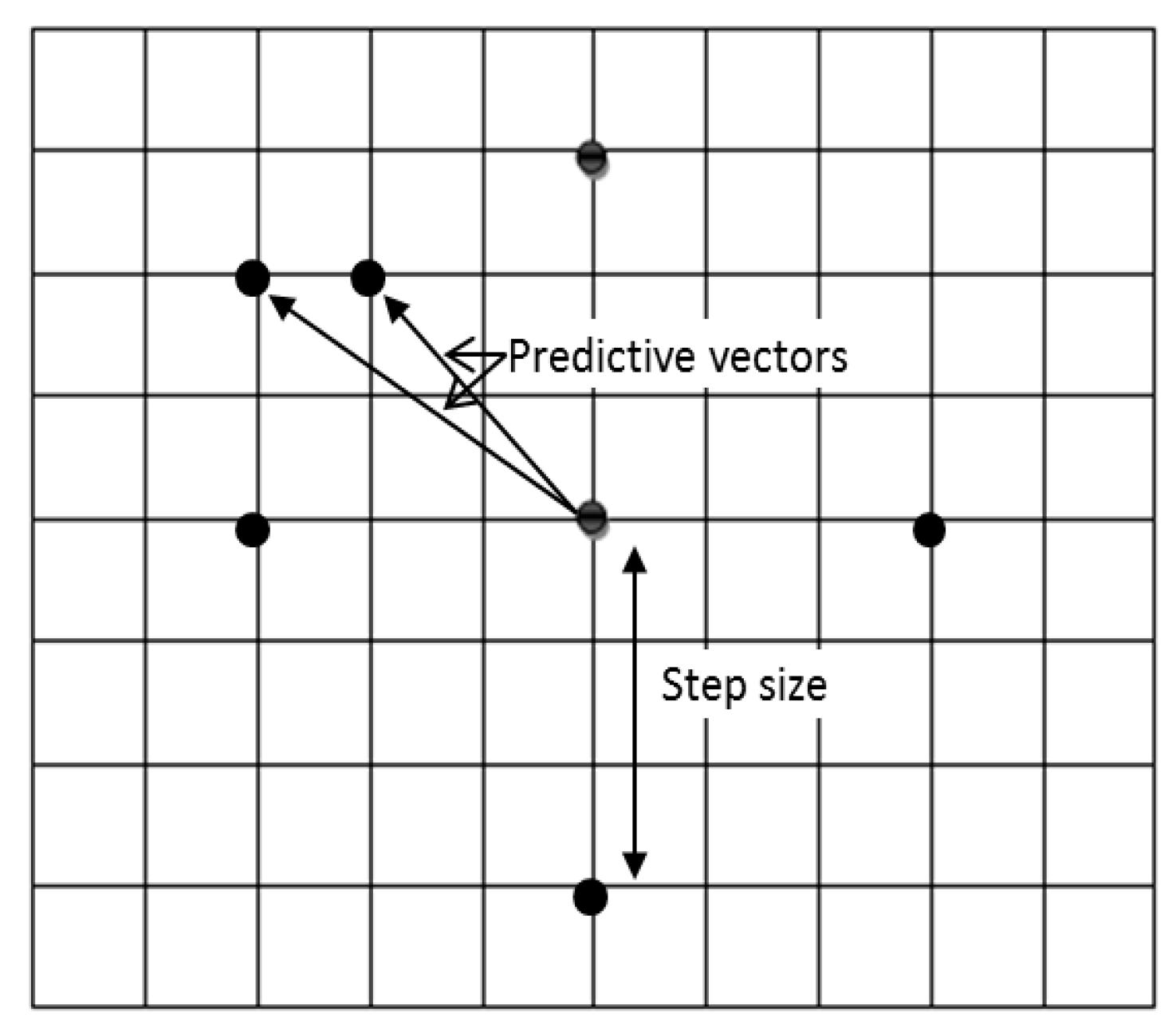

| Step 2 | 1: IF MB is in top left corner: 2: Search 5 LPS points 3: ELSE: 4: - MVA and MVL will be added to the search 5: - Use MVA and MVL for predicting the step size as: where step size = max{ Lx, Ly}. 6: - Matching MB is explored within the LSP search values on the boundary of the step size {(±step size, 0), (0, ±step size), (0,0)} 7: Set vectors {(MVA), (MVL)}, as illustrated in Figure 2. 8: END IFELSE |

| Step 3 | Matching MB is then explored within the LSP search values on the boundary of the step size {(±step size, 0), (0, ±step size), (0,0)} and the set vectors {(MVA), (MVL)}, as illustrated in Figure 2 The PDE algorithm is used to stop the partial sum matching distortion calculation between the current macroblock and candidate macroblock as soon as the matching distortion exceeds the current minimum distortion, resulting in the remaining computations to be avoided, hence, speeding up the search. |

| Step 4 | Let Er represent the error of the matching MB in step 3. IF Er < Th: The process is terminated and the matching MB provides the motion vector. ELSE - Location of the matching MB in Step 3 is used as the center of the search window - SSP defined from the four points, i.e., {(±1, 0), (0, ±1)}, will be examined. End IFELSE IF matching MB stays in the center of the search window -Computation is completed ELSE - Go to Step 1 - The matching center provides the parameters of the motion vector. |
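The early-termination idea behind PDE in Step 3 can be sketched as follows. This is a minimal illustration, assuming a row-by-row sum of absolute differences (SAD) as the matching distortion and NumPy arrays for the frames; the function names and the row-wise check granularity are choices made here, not taken from the paper.

```python
import numpy as np


def sad_with_pde(current, candidate, best_so_far):
    """Row-wise SAD that stops once the partial sum cannot beat best_so_far.

    Returns the full SAD if it is below best_so_far, otherwise None.
    """
    partial = 0
    rows = zip(current.astype(np.int64), candidate.astype(np.int64))
    for cur_row, cand_row in rows:
        partial += int(np.abs(cur_row - cand_row).sum())
        if partial >= best_so_far:  # candidate can no longer improve the minimum
            return None
    return partial


def best_match(current, reference, top, left, offsets):
    """Test each (dx, dy) offset and return the best motion vector and its SAD."""
    n = current.shape[0]
    best_sad, best_mv = float("inf"), None
    for dx, dy in offsets:
        r, c = top + dy, left + dx
        if r < 0 or c < 0 or r + n > reference.shape[0] or c + n > reference.shape[1]:
            continue  # candidate block falls outside the reference frame
        sad = sad_with_pde(current, reference[r:r + n, c:c + n], best_sad)
        if sad is not None:
            best_sad, best_mv = sad, (dx, dy)
    return best_mv, best_sad


# Synthetic example: the current block is the reference block shifted by (1, 2).
rng = np.random.default_rng(0)
reference = rng.integers(0, 256, size=(32, 32))
current = reference[10:18, 13:21].copy()
offsets = [(dx, dy) for dy in range(-3, 4) for dx in range(-3, 4)]
print(best_match(current, reference, top=8, left=12, offsets=offsets))
```

Because PDE only skips work on candidates that are already worse than the current minimum, the selected vector is the same as with a full SAD computation; only the number of additions changes.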

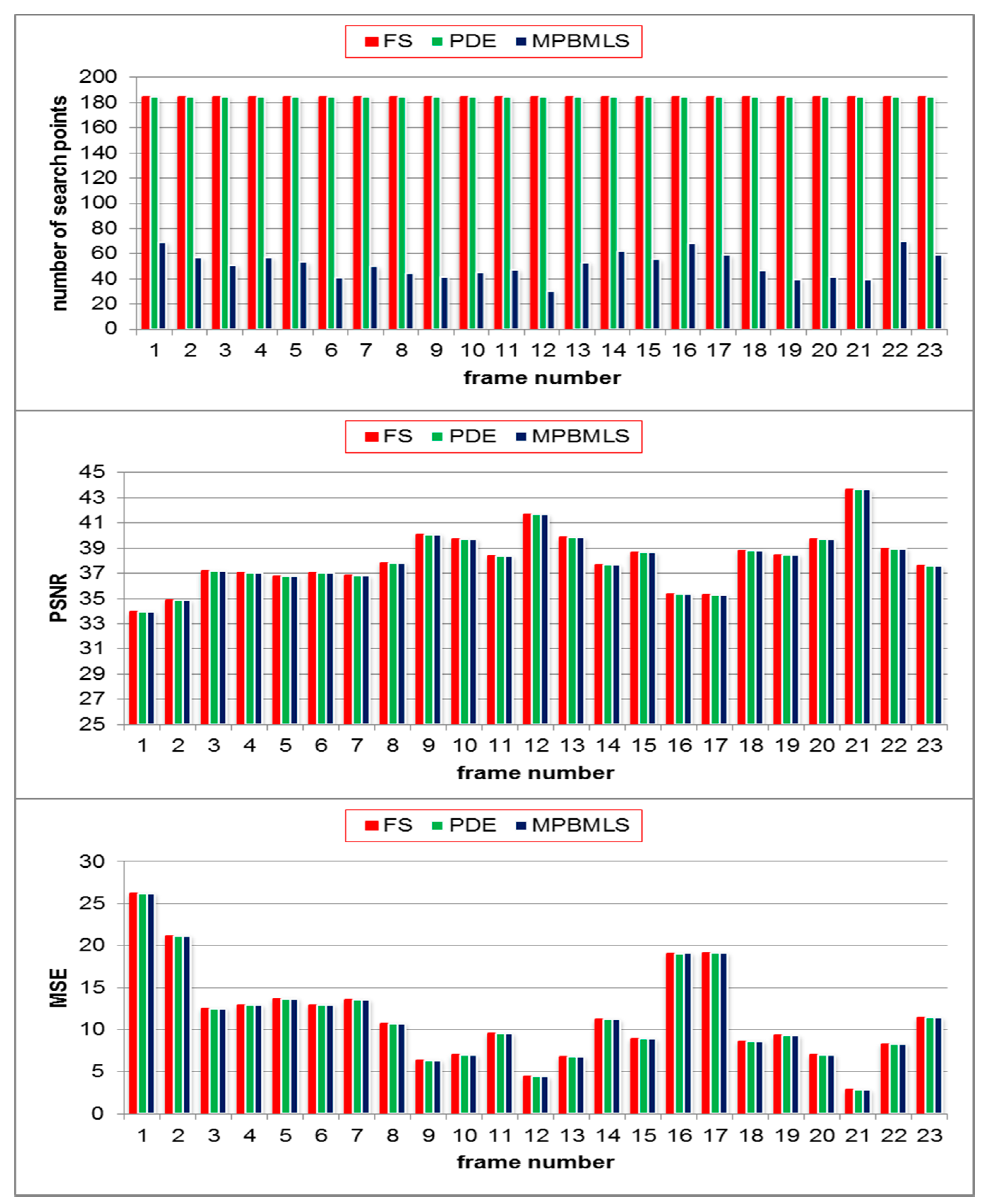

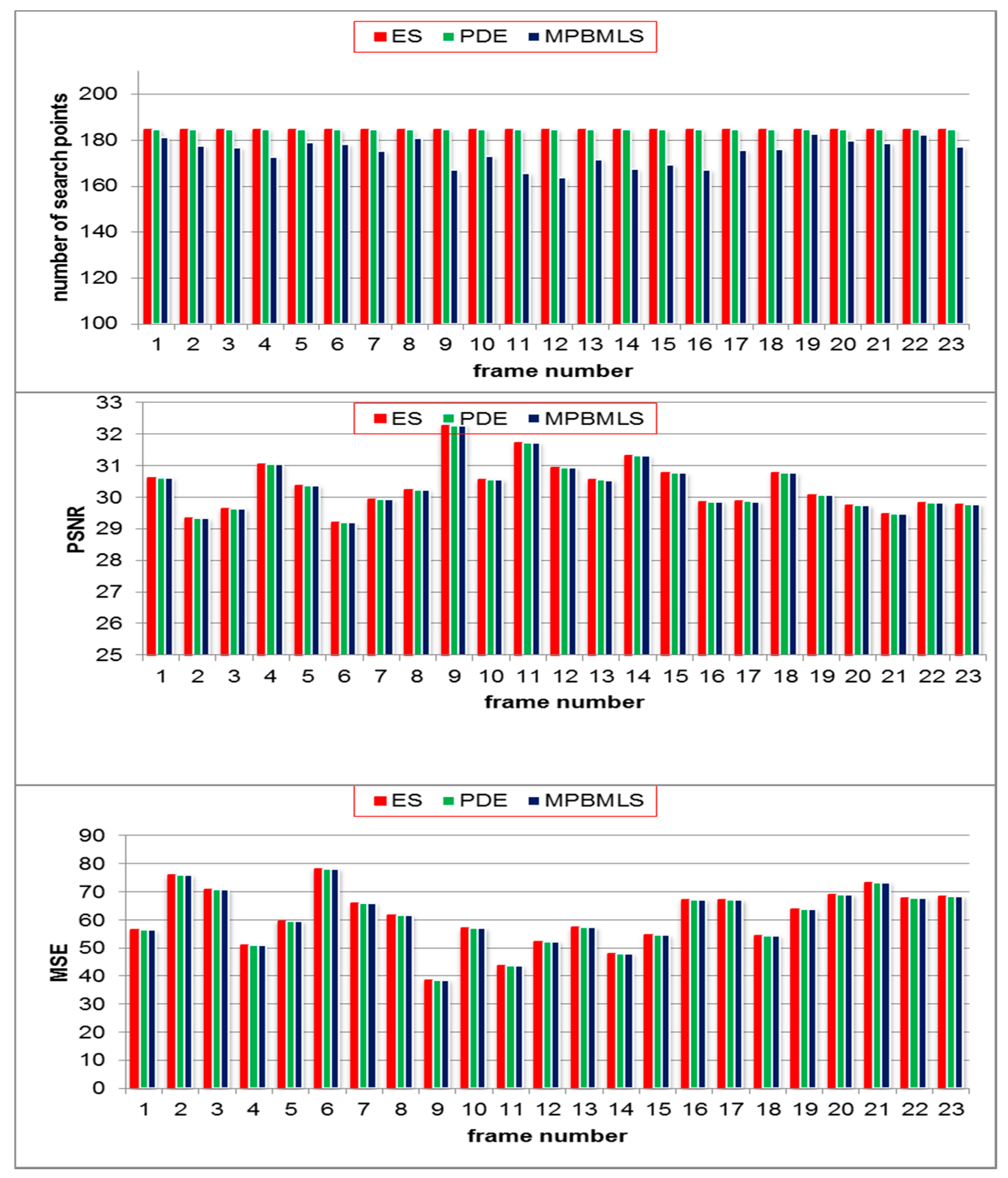

4. Simulation Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Sequence | Format | FS | PDE | MPBMLS |
|---|---|---|---|---|
| Claire | QCIF | 184.56 | 184.6 | 48.98 |
| Akiyo | QCIF | 184.56 | 184.6 | 46.2 |
| Carphone | QCIF | 184.56 | 184.6 | 170.2 |
| News | CIF | 204.28 | 204.3 | 121.6 |
| Stefan | CIF | 204.28 | 204.3 | 204.3 |
| Coastguard | CIF | 204.28 | 204.3 | 204.3 |

| Sequence | Format | FS | PDE | MPBMLS |
|---|---|---|---|---|
| Claire | QCIF | 0.351 | 0.18 | 0.06 |
| Akiyo | QCIF | 0.334 | 0.11 | 0.01 |
| Carphone | QCIF | 0.336 | 0.18 | 0.15 |
| News | CIF | 1.492 | 0.65 | 0.38 |
| Stefan | CIF | 1.464 | 1.09 | 0.88 |
| Coastguard | CIF | 1.485 | 1.19 | 1.03 |

| Sequence | Format | FS | PDE | MPBMLS |
|---|---|---|---|---|
| Claire | QCIF | 9.287 | 9.287 | 9.29 |
| Akiyo | QCIF | 9.399 | 9.399 | 9.399 |
| Carphone | QCIF | 56.44 | 56.44 | 56.44 |
| News | CIF | 30.33 | 30.33 | 30.33 |
| Stefan | CIF | 556.1 | 556.1 | 556.1 |
| Coastguard | CIF | 158.3 | 158.3 | 158.3 |

| Sequence | Format | FS | PDE | MPBMLS |
|---|---|---|---|---|
| Claire | QCIF | 38.94 | 38.94 | 38.94 |
| Akiyo | QCIF | 39.61 | 39.61 | 39.61 |
| Carphone | QCIF | 30.82 | 30.82 | 30.81 |
| News | CIF | 33.48 | 33.48 | 33.47 |
| Stefan | CIF | 22.16 | 22.16 | 22.16 |
| Coastguard | CIF | 26.19 | 26.19 | 26.19 |

| Sequence | FS | DS | NTSS | 4SS | SESTSS | ARPS | MPBMLY |
|---|---|---|---|---|---|---|---|
| Claire | 184.6 | 11.63 | 15.09 | 14.77 | 16.13 | 5.191 | 2.128 |
| Akiyo | 184.6 | 11.46 | 14.76 | 14.67 | 16.2 | 4.958 | 1.938 |
| Carphone | 184.6 | 13.76 | 17.71 | 16.12 | 15.73 | 7.74 | 7.06 |
| News | 204.3 | 13.1 | 17.07 | 16.38 | 16.92 | 6.058 | 3.889 |
| Stefan | 204.3 | 17.69 | 22.56 | 19.05 | 16.11 | 9.641 | 9.619 |
| Coastguard | 204.3 | 19.08 | 27.26 | 19.91 | 16.52 | 9.474 | 8.952 |

| Sequence | FS | DS | NTSS | 4SS | SESTSS | ARPS | MPBMLY |
|---|---|---|---|---|---|---|---|
| Claire | 0.351 | 0.037 | 0.031 | 0.031 | 0.037 | 0.025 | 0.015 |
| Akiyo | 0.354 | 0.036 | 0.031 | 0.031 | 0.037 | 0.023 | 0.006 |
| Carphone | 0.338 | 0.039 | 0.036 | 0.032 | 0.035 | 0.031 | 0.033 |
| News | 1.539 | 0.161 | 0.142 | 0.136 | 0.151 | 0.112 | 0.079 |
| Stefan | 1.537 | 0.267 | 0.232 | 0.174 | 0.15 | 0.158 | 0.139 |
| Coastguard | 1.551 | 0.263 | 0.235 | 0.178 | 0.15 | 0.152 | 0.14 |

| Sequence | FS | DS | NTSS | 4SS | SESTSS | ARPS | MPBMLY |
|---|---|---|---|---|---|---|---|
| Claire | 9.287 | 9.287 | 9.287 | 9.355 | 9.458 | 9.289 | 9.292 |
| Akiyo | 9.399 | 9.399 | 9.399 | 9.399 | 9.408 | 9.399 | 9.399 |
| Carphone | 56.44 | 58.16 | 57.56 | 62.12 | 69.62 | 60.02 | 59.08 |
| News | 27.29 | 29.41 | 28.2 | 29.6 | 31.22 | 29.81 | 28.64 |
| Stefan | 556.1 | 661.4 | 607.2 | 651.5 | 714.5 | 608 | 594 |
| Coastguard | 158.3 | 167.4 | 164.3 | 166 | 182.5 | 164.1 | 161.6 |

| Sequence | FS | DS | NTSS | 4SS | SESTSS | ARPS | MPBMLY |
|---|---|---|---|---|---|---|---|
| Claire | 38.94 | 38.94 | 38.94 | 38.92 | 38.89 | 38.94 | 38.94 |
| Akiyo | 39.61 | 39.61 | 39.61 | 39.61 | 39.61 | 39.61 | 39.61 |
| Carphone | 30.82 | 30.69 | 30.7 | 30.4 | 30.1 | 30.58 | 30.6 |
| News | 33.77 | 33.45 | 33.63 | 33.42 | 33.19 | 33.39 | 33.56 |
| Stefan | 22.16 | 21.49 | 21.81 | 21.51 | 21.04 | 21.82 | 21.93 |
| Coastguard | 26.19 | 25.98 | 26.05 | 26.02 | 25.6 | 26.05 | 26.11 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ahmed, Z.; Hussain, A.J.; Khan, W.; Baker, T.; Al-Askar, H.; Lunn, J.; Al-Shabandar, R.; Al-Jumeily, D.; Liatsis, P. Lossy and Lossless Video Frame Compression: A Novel Approach for High-Temporal Video Data Analytics. Remote Sens. 2020, 12, 1004. https://0-doi-org.brum.beds.ac.uk/10.3390/rs12061004
