1. Introduction

Holding ~91% of the global ice mass, the Antarctic Ice Sheet (AIS) is the largest potential contributor to global sea-level rise [1]. With accelerating global climate change [2], it is essential to understand the response of the AIS to further increasing ocean or surface air temperatures and to evaluate its potential contribution to future sea-level rise. Increased surface air temperatures may directly impact the AIS through enhanced surface melting, resulting in the formation of supraglacial lakes in local surface depressions above an impermeable snow/ice layer of the ice sheet [3]. In turn, the accumulation of supraglacial meltwater may affect Antarctic ice dynamics and mass balance through three main processes (see processes P1–P3 in Figure 1) [4]. First, increased surface melting and runoff (P1, Figure 1) may lead to enhanced ice thinning (I1, Figure 1), which directly contributes to a potentially negative Antarctic Surface Mass Balance (SMB) [4]. Second, the temporary injection of meltwater to the bed of a grounded glacier (P2, Figure 1) may enhance basal sliding and cause transient ice flow accelerations and increased ice discharge (I2, Figure 1). This has been observed over the Greenland Ice Sheet (GrIS) [5,6,7,8,9] and, only recently, along the Antarctic Peninsula (API), where drainage events triggered rapid ice flow accelerations with velocities up to 100% greater than the annual mean [10]. The third mechanism involves a process called hydrofracturing, i.e., meltwater-induced ice shelf collapse (P3, Figure 1). Here, the rapid drainage of surface lakes into fractures and crevasses of an ice shelf, formed for example through repeated filling and draining of lakes, initiates their downward propagation and the consequent calving of large icebergs or removal of entire ice shelves [4,11]. With the loss of the buttressing force of an ice shelf, the inland ice accelerates and ice discharge increases (I2, Figure 1), as observed after the collapses of several API ice shelves such as Larsen B [12,13,14,15,16,17]. In addition, the presence of supraglacial meltwater lowers the surface albedo and thereby increases the absorption of incoming solar radiation, initiating a positive feedback that further enhances surface melting [18,19]. As surface melting increases, the area of exposed rock also grows, which further accelerates ice melting through a decreasing albedo [20,21].

Figure 1 shows supraglacial lakes on an Antarctic ocean-terminating outlet glacier and schematically illustrates the impact of supraglacial meltwater on ice dynamics.

In order to investigate the impact of supraglacial meltwater accumulation on Antarctic ice dynamics and mass balance in more detail, a comprehensive mapping of Antarctic supraglacial lakes is required. While ground-based surveys of the AIS are time-consuming and limited in spatial extent, spaceborne remote sensing provides a means of mapping supraglacial lakes with unprecedented spatial coverage and detail. To date, most knowledge about supraglacial lakes comes from studies on the GrIS, where ice mass loss is dominated by surface melting [4,22]. By contrast, only a few studies have employed remote sensing data to investigate the characteristics and distribution of supraglacial lakes in Antarctica. Remote-sensing-based approaches developed for supraglacial lake detection on the GrIS include several semiautomated techniques (e.g., [23,24,25,26]) as well as partly automated approaches using optical Moderate-Resolution Imaging Spectroradiometer (MODIS) images at low spatial resolution [27,28,29,30,31], although these have been found to be far less accurate than manual delineation techniques [32]. Antarctic studies of surface melt accumulation using remote sensing data, on the other hand, mostly rely on manual to semiautomated mapping techniques, including the purely visual identification of melt features (e.g., [33,34]) or Normalized Difference Water Index (NDWI)-based thresholding techniques (e.g., [20,35]), which usually require manual postprocessing or an adaptation of thresholds when dealing with large-scale analyses and image time-series [20]. Regarding the geospatial distribution of supraglacial lake studies in Antarctica, most research has focused on regions along the API [10,15,36,37] and on selected glacier basins in East Antarctica [33,34,35,38,39], complemented by larger-scale investigations, two of which focused on East Antarctica [20,40] and one on selected basins across the AIS [21]. Starting with studies focusing on the API, Tuckett et al. [10] used Landsat 4, 5, 7 and 8 as well as Sentinel-2 imagery to manually identify melt and to link it to accelerated ice flow on several API glaciers. Munneke et al. [36] used Sentinel-1 images to manually identify surface melt near the grounding line of Larsen C Ice Shelf, and Glasser and Scambos [15] and Leeson et al. [37] used optical and radar imagery to manually map surface ponds on the Larsen B Ice Shelf prior to its collapse. Studies focusing on glacier basins in East Antarctica include the analysis of Langley et al. [33], who manually digitized supraglacial lakes from Advanced Spaceborne Thermal Emission and Reflection Radiometer (ASTER) and Landsat 7 images at Langhovde Glacier. Moreover, Fricker et al. [38] manually identified supraglacial lakes in ICESat (Ice, Cloud and Land Elevation Satellite) elevation tracks over Amery Ice Shelf, and Bell et al. [35] detected supraglacial lakes in Landsat 8 imagery covering Nansen Ice Shelf by applying NDWI thresholding. Supraglacial lakes have also been observed in Landsat images along Wilkes Land [39] and in MODIS and Landsat 7 images across Nivlisen Ice Shelf [34]. Larger-scale investigations were conducted after it was revealed that Antarctic surface melting is more widespread than previously assumed. A recent study identified, for the first time, ~700 surface drainage systems in Landsat, ASTER and WorldView satellite images across Antarctica [21]. Another study used a semiautomatic NDWI thresholding method on Sentinel-2 and Landsat 8 data to map the 2017 distribution of supraglacial lakes in East Antarctica [20]. Finally, Moussavi et al. [40] proposed a threshold-based method using Sentinel-2 and Landsat 8 data, intended to be implemented for Antarctic-wide supraglacial lake detection in the future. Their study focused on sections of the Roi Baudouin, Nivlisen, Riiser-Larsen and Amery Ice Shelves in East Antarctica, where lake extents and volumes were tracked over several time steps. Even though Moussavi et al. [40] were the first to propose an automated lake detection method for Antarctica, their method still has to be implemented and tested on a larger scale beyond the test regions analyzed in East Antarctica.
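The NDWI-based thresholding used in several of the studies above can be illustrated with a minimal sketch. It assumes the McFeeters form of the index, NDWI = (Green - NIR) / (Green + NIR), which for Sentinel-2 would use bands B3 (green) and B8 (near-infrared); studies of lakes on ice often use a blue/red variant instead, so the band choice, the threshold of 0.25 and the reflectance values below are hypothetical and not taken from the cited works. Because one fixed threshold is applied to every pixel, scene-dependent differences in illumination or surface conditions would require re-tuning it, which is the limitation noted above for large-scale analyses and image time-series.

```python
def ndwi(green: float, nir: float) -> float:
    """Normalized Difference Water Index, McFeeters form: (G - NIR) / (G + NIR)."""
    total = green + nir
    if total == 0:
        return 0.0  # avoid division by zero on empty or no-data pixels
    return (green - nir) / total


def threshold_water(green_band, nir_band, threshold=0.25):
    """Return a binary mask (1 = water, 0 = other) for two equally shaped 2-D lists.

    The default threshold is a hypothetical value chosen for illustration only.
    """
    return [
        [1 if ndwi(g, n) > threshold else 0 for g, n in zip(g_row, n_row)]
        for g_row, n_row in zip(green_band, nir_band)
    ]


if __name__ == "__main__":
    # Hypothetical reflectances for a 2 x 2 scene:
    # upper row = meltwater-like pixels, lower row = snow/ice-like pixels.
    green = [[0.30, 0.28], [0.90, 0.85]]
    nir = [[0.05, 0.06], [0.80, 0.70]]
    print(threshold_water(green, nir))  # [[1, 1], [0, 0]]
```

Water absorbs strongly in the near-infrared, so it yields high NDWI values, whereas bright snow and ice reflect both bands similarly and stay near zero.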

While Earth Observation has already shown its potential for detecting and mapping supraglacial lakes on the AIS, data from the Sentinel-2 mission offer new opportunities for automated mapping of supraglacial lakes at unprecedented spatial resolution (10 m) and coverage. The Sentinel-2 constellation consists of two optical satellites, Sentinel-2A and Sentinel-2B, enabling the monitoring of polar regions with revisit times as short as one day. Both satellites carry a passive Multispectral Instrument (MSI) that records sunlight reflected from the Earth’s surface in 13 spectral bands. To date, Sentinel-2 data have been underexploited, and no time-efficient method has been implemented for the systematic and automated mapping of Antarctic supraglacial lakes. In fact, a circum-Antarctic record of supraglacial lakes with full ice sheet coverage is entirely missing. Such a record is required not only to evaluate the spatial distribution of meltwater features but also to quantify their water volume and temporal dynamics and to provide an input dataset for SMB as well as overall mass balance calculations [41,42]. In addition, it is of fundamental importance to better understand the role of supraglacial meltwater in ice shelf stability, as ~75% of Antarctica’s coastline is fringed by floating ice shelves [43] that provide important buttressing to the grounded inland ice [44,45]. Given that Antarctic surface melting is projected to double by 2050 [46], supraglacial lakes will become even more prevalent in the future and most likely spread farther inland, which further highlights the need for an automated mapping method using high spatial resolution satellite data. Moreover, the Antarctic surface and basal hydrological systems could become increasingly connected, and surface melting could become a major contributor to accelerated ice mass loss from the AIS [4].

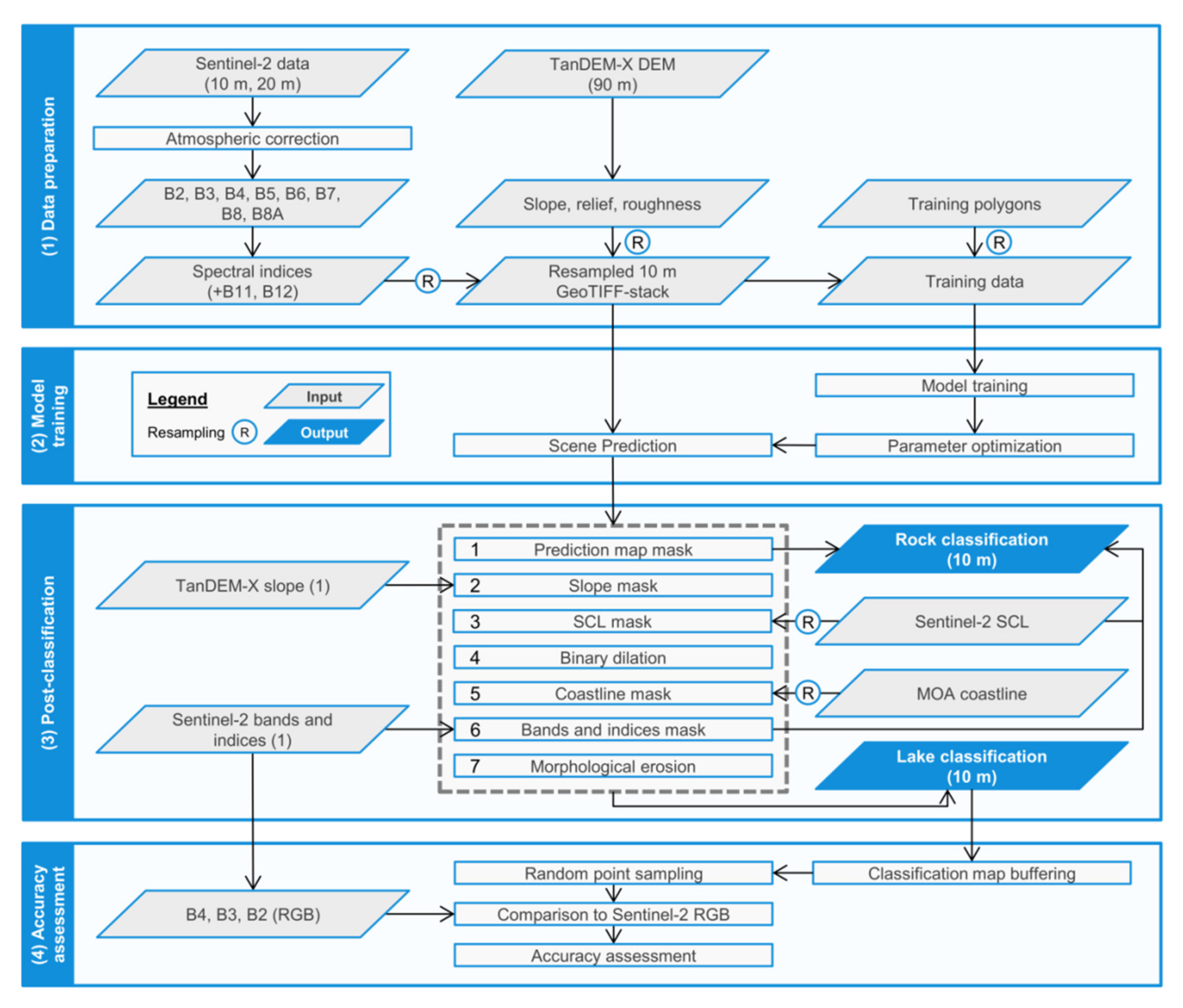

In this context, the objective of this study was to develop an automated method for Antarctic supraglacial lake mapping using state-of-the-art image processing techniques. More precisely, we employed a supervised Machine Learning (ML) algorithm, namely Random Forest (RF), trained on optical Sentinel-2 and auxiliary TanDEM-X topographic data. The main focus during method development was to ensure its spatio-temporal transferability. In the following, we first present the study sites and datasets selected for model training and testing as well as all necessary preprocessing steps (Section 2.1 and Section 2.2). Section 2.3 and Section 2.4 then present our methodology, including RF model training and parameter optimization, postclassification and model validation. In Section 3, we present the lake extent mapping results and the outcome of the accuracy assessment, and Section 4 discusses the classification results and accuracies as well as the remaining limitations of our supraglacial lake detection algorithm. Finally, Section 5 summarizes the findings of this paper. Note that the automated mapping results of this study will serve as input for the development of a Sentinel-1-based supraglacial lake detection method, to be presented in a subsequent study.
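To illustrate how a Random Forest assigns a land cover class to each pixel, the following minimal sketch trains a toy forest on per-pixel feature vectors. It is a simplified stand-in, not the classifier configuration used in this study: each tree is a single-split decision stump, every tree is fitted on a bootstrap sample with a random subset of features, and predictions are combined by majority vote. The three spectral features (blue, red and NIR reflectance), the two hypothetical classes (the study itself uses four land cover classes) and all numbers are assumptions; auxiliary topographic variables such as TanDEM-X elevation would simply be appended as further feature columns.

```python
import random
from collections import Counter


def gini(labels):
    """Gini impurity of a list of class labels."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def fit_stump(X, y, feature_ids):
    """Fit a depth-1 tree: the single threshold split with the lowest weighted Gini."""
    majority = Counter(y).most_common(1)[0][0]
    best = None
    for f in feature_ids:
        for t in sorted({row[f] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[f] <= t]
            right = [lab for row, lab in zip(X, y) if row[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t, left, right)
    _, f, t, left, right = best
    left_label = Counter(left).most_common(1)[0][0] if left else majority
    right_label = Counter(right).most_common(1)[0][0] if right else majority
    return f, t, left_label, right_label


def fit_forest(X, y, n_trees=25, n_features=2, seed=0):
    """Train n_trees stumps, each on a bootstrap sample and a random feature subset."""
    rng = random.Random(seed)
    n_total = len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # sample with replacement
        features = rng.sample(range(n_total), min(n_features, n_total))
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], features))
    return forest


def predict(forest, x):
    """Majority vote over all stumps for a single feature vector x."""
    votes = Counter(left if x[f] <= t else right for f, t, left, right in forest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical training pixels: [blue, red, NIR] reflectance.
    X = [
        [0.36, 0.16, 0.06], [0.38, 0.18, 0.07], [0.40, 0.20, 0.08], [0.37, 0.17, 0.05],
        [0.80, 0.75, 0.60], [0.82, 0.78, 0.65], [0.85, 0.80, 0.68], [0.83, 0.76, 0.62],
    ]
    y = ["water"] * 4 + ["ice"] * 4
    forest = fit_forest(X, y)
    print(predict(forest, [0.30, 0.10, 0.03]))  # water
    print(predict(forest, [0.90, 0.85, 0.75]))  # ice
```

Full Random Forest implementations grow deep trees and tune hyperparameters such as the number of trees and features per split; stumps are used here only to keep the example short while preserving the two ingredients that define the method, bagging and random feature selection.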

5. Conclusions

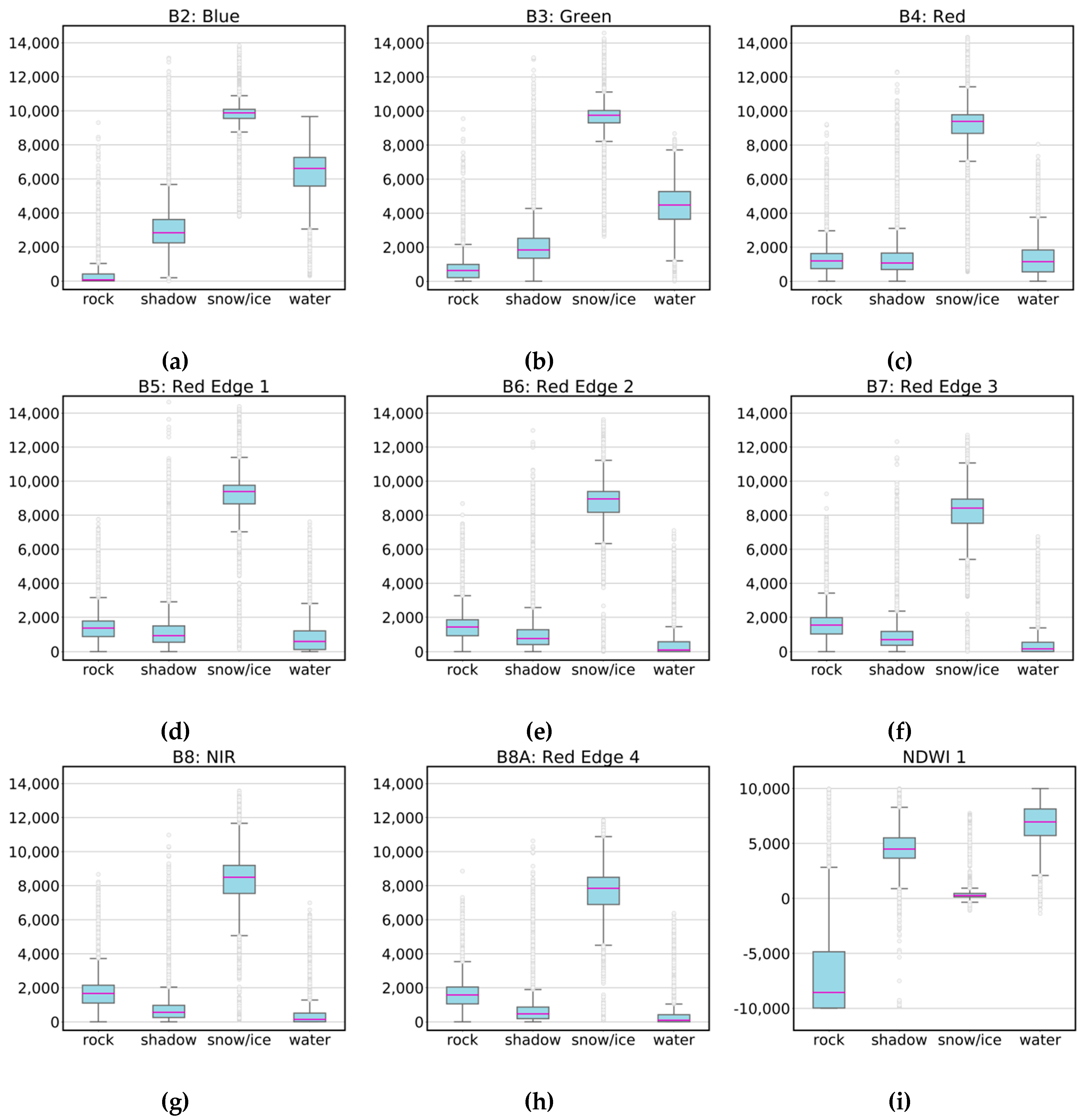

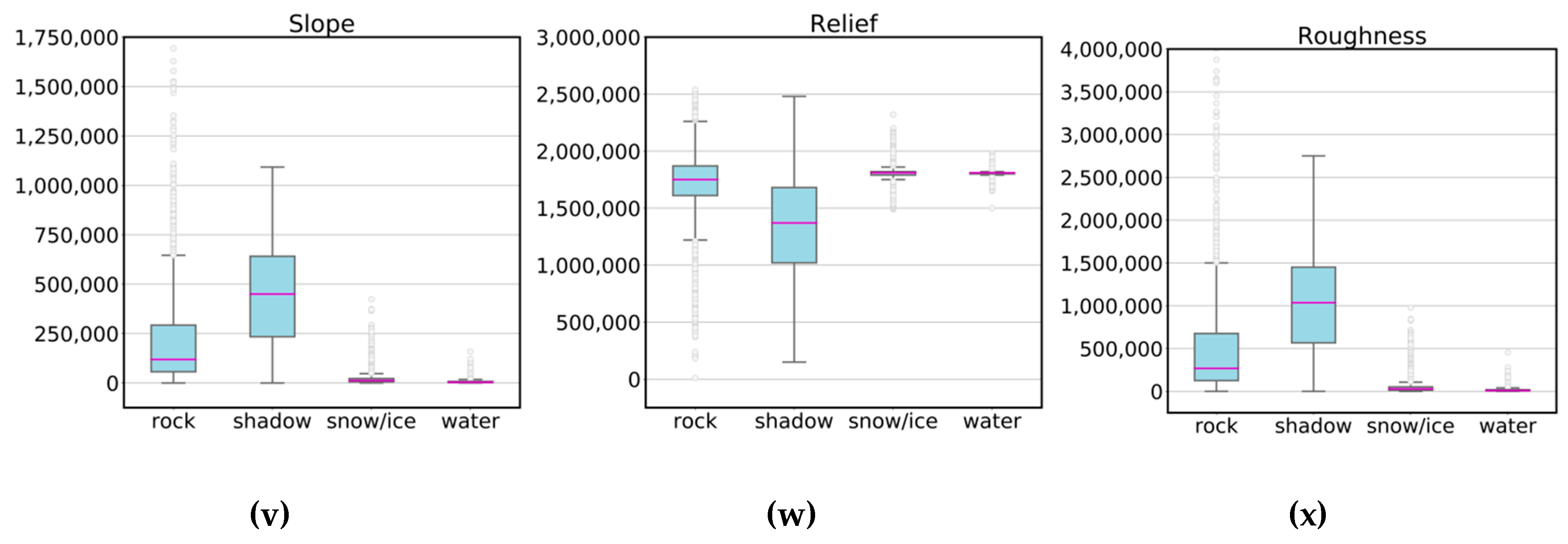

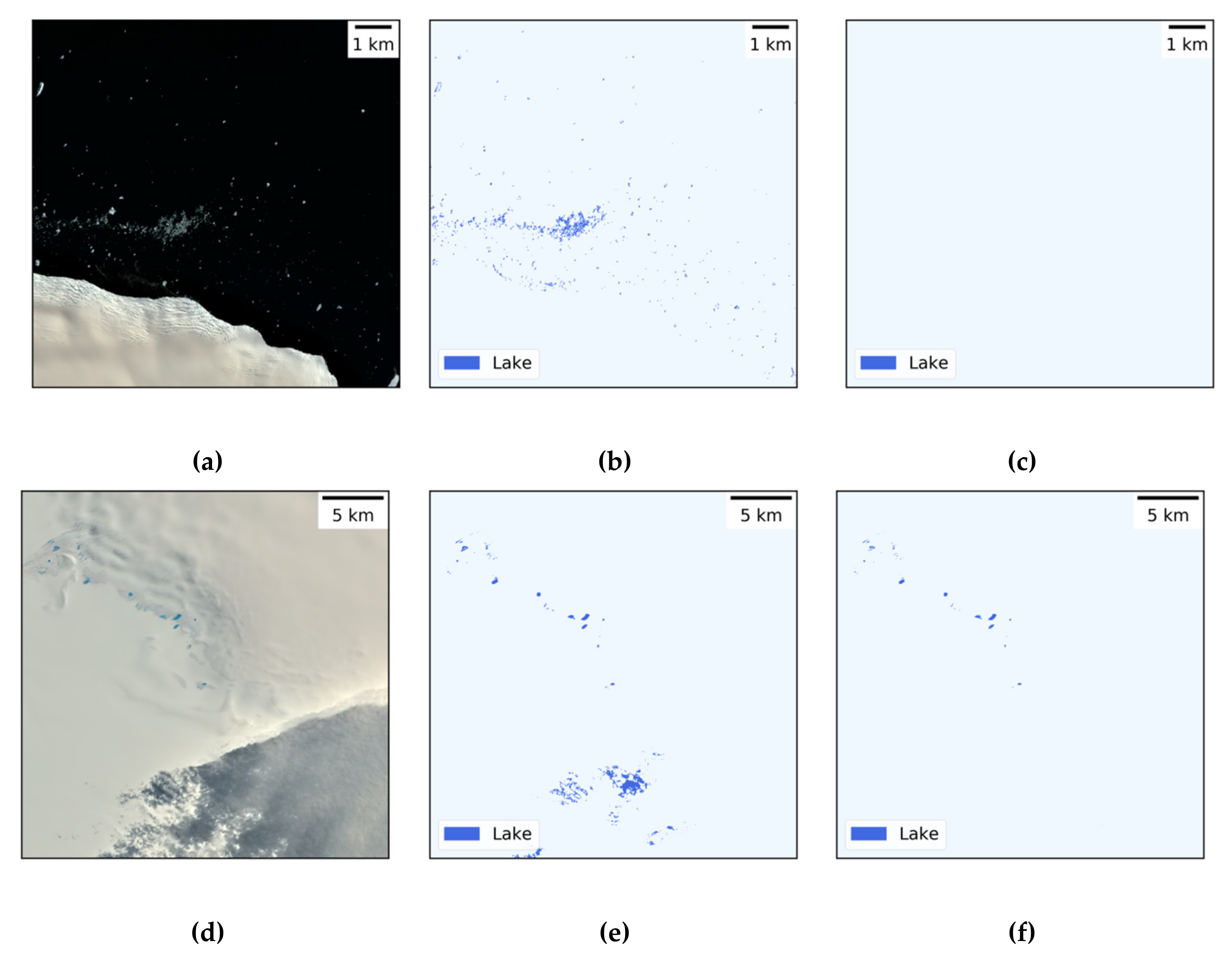

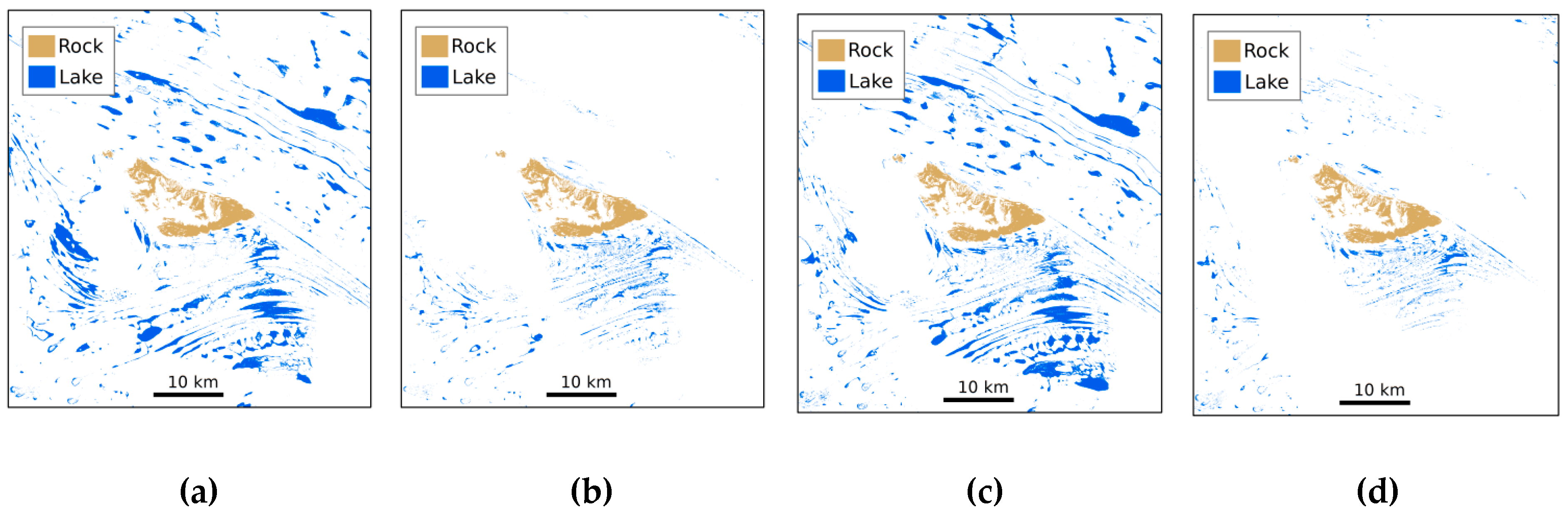

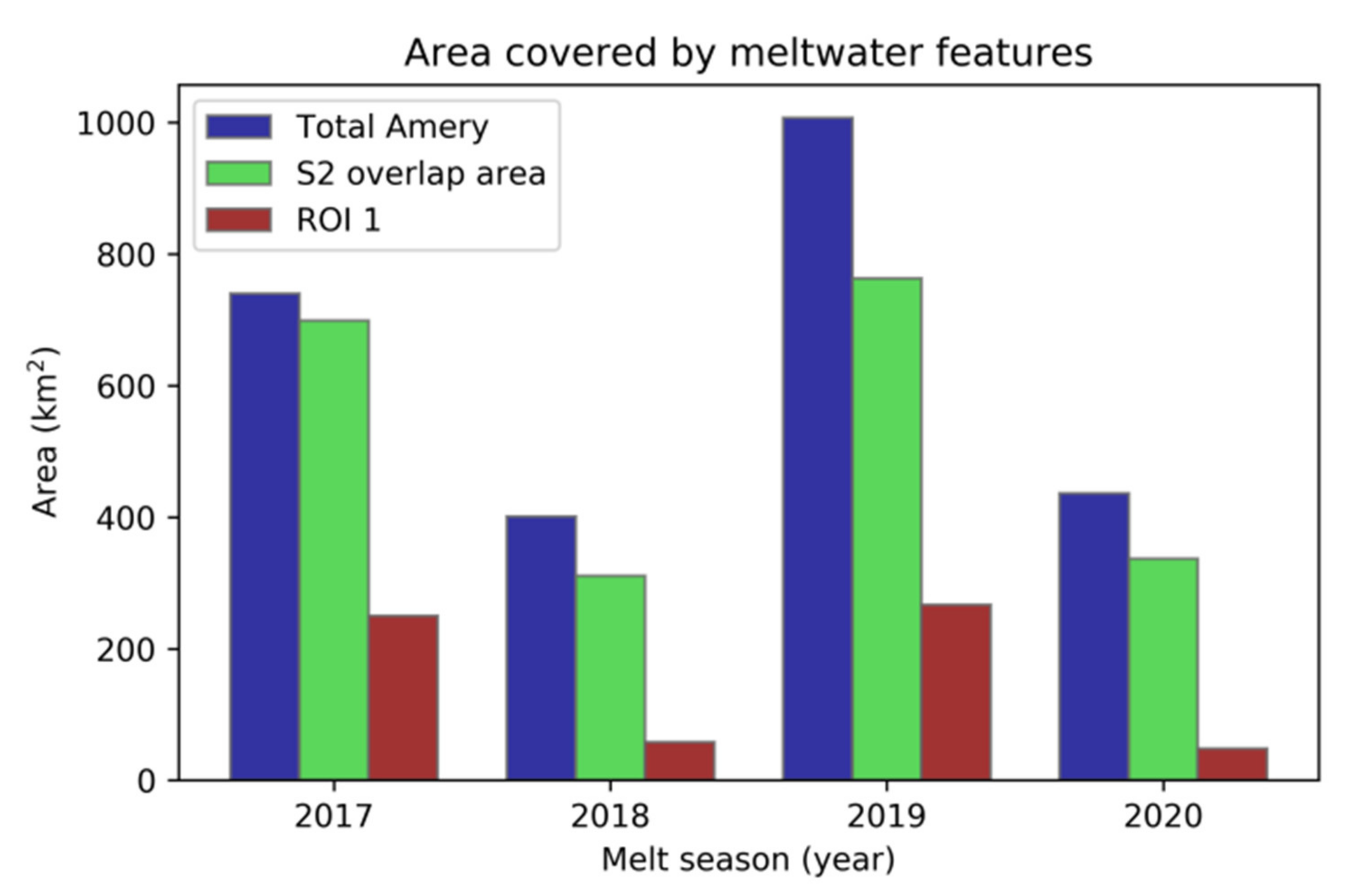

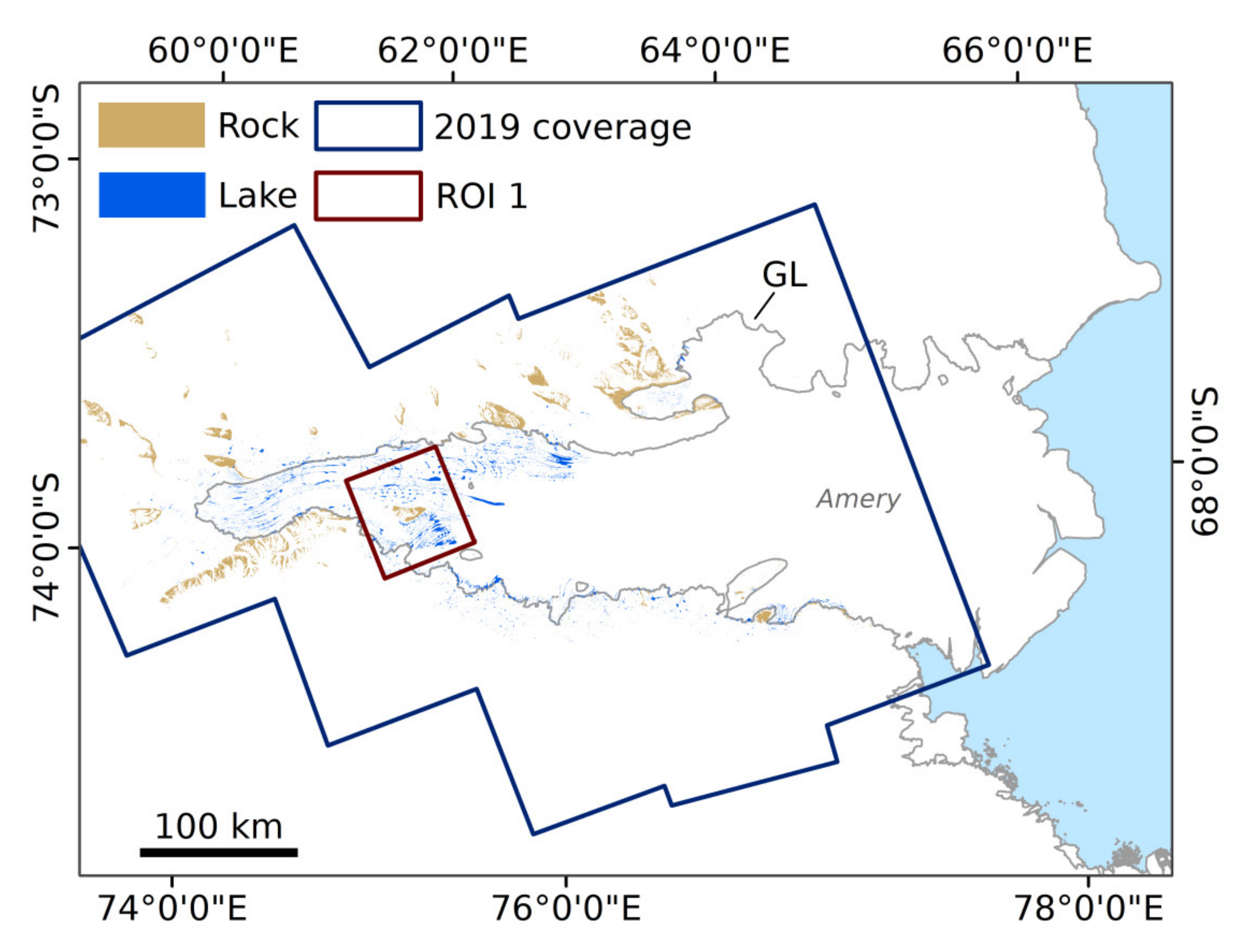

This study provides a new framework for the automated mapping of Antarctic supraglacial lakes using optical Sentinel-2 imagery. More specifically, we focused on developing a method that is transferable in space and time and demonstrated its suitability both for spatially distributed test regions and for large-scale analysis of supraglacial lake dynamics at full ice shelf coverage. For this purpose, the Random Forest classifier was trained on Sentinel-2 and TanDEM-X data covering 14 training regions with four land cover classes and evaluated on eight spatially independent test regions distributed across Antarctica as well as on the full Amery Ice Shelf. Before the lake classification maps were retrieved, postclassification was performed to remove remaining misclassifications over open ocean, cloud shadow on ice or shadow in crevasses, making our workflow particularly robust to outliers. In addition, the automated extraction of rock classification maps as a by-product proved particularly useful for geoscientific analyses, e.g., of increased meltwater production in relation to the spatial distribution of exposed rock.
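The postclassification rules are not detailed in this section, so the sketch below only illustrates one plausible rule set under stated assumptions. It assumes a hypothetical binary ice mask (1 = ice, 0 = open ocean, e.g. derived from coastline data) to discard water pixels over the ocean, and a hypothetical minimum cluster size (`min_pixels`) to discard small isolated water patches such as those that shadow in crevasses might produce. Neither rule nor the parameter value is taken from the study.

```python
from collections import deque


def postclassify(water, ice_mask, min_pixels=3):
    """Clean a binary water map (2-D lists of 0/1) with two illustrative rules.

    1. Water outside the (hypothetical) ice mask is treated as open ocean and removed.
    2. 4-connected water clusters smaller than min_pixels are removed.
    The input lists are not modified; a cleaned copy is returned.
    """
    rows, cols = len(water), len(water[0])
    cleaned = [
        [water[r][c] if ice_mask[r][c] else 0 for c in range(cols)] for r in range(rows)
    ]
    seen = [[False] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            if cleaned[r][c] and not seen[r][c]:
                # Breadth-first search collects one connected water cluster.
                cluster, queue = [], deque([(r, c)])
                seen[r][c] = True
                while queue:
                    y, x = queue.popleft()
                    cluster.append((y, x))
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and cleaned[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
                if len(cluster) < min_pixels:
                    for y, x in cluster:
                        cleaned[y][x] = 0
    return cleaned


if __name__ == "__main__":
    water = [[1, 1, 0, 0, 1],
             [1, 1, 0, 0, 1],
             [0, 0, 0, 1, 0]]
    ice_mask = [[1, 1, 1, 1, 0],
                [1, 1, 1, 1, 0],
                [1, 1, 1, 1, 1]]
    # The ocean "water" in the last column and the isolated pixel are removed.
    print(postclassify(water, ice_mask))  # [[1, 1, 0, 0, 0], [1, 1, 0, 0, 0], [0, 0, 0, 0, 0]]
```

A size filter of this kind trades sensitivity for robustness: it also removes genuinely small lakes, so any real threshold would have to be chosen against validation data.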

The automated mapping results of this study show reliable lake extent delineations for all test data not seen by the model during training and indicate that our workflow performs well on spatially and temporally distributed data. The average F1 score for the classification of water across all test sites was ~86%, with the highest F1 (~98%) obtained for the test scene covering George VI Ice Shelf. Similarly, Cohen’s Kappa averaged 0.857 across all test data. Our results are consistent with other reference studies, and the main remaining limitations of our workflow were found to be associated with (1) the lack of up-to-date topographic and coastline data, (2) difficulties in classifying pixels at lake edges and (3) shadow on ice below particularly thick clouds in Sentinel-2 imagery. Overall, the Random Forest classifier has proven its applicability for supraglacial lake detection in Antarctica and enabled the development of the first automated mapping method applied to Sentinel-2 data distributed across all three Antarctic regions. In addition, our lake extent mapping results document, for the first time, supraglacial lake occurrence on Hull, Cosgrove and Abbott Ice Shelves in West Antarctica as well as interannual supraglacial lake dynamics at full ice shelf coverage over Amery Ice Shelf.
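For reference, the two accuracy measures reported above can be computed from a confusion matrix as sketched below. F1 is the harmonic mean of precision and recall for the water class, and Cohen’s Kappa compares the observed agreement p_o with the agreement p_e expected by chance, κ = (p_o - p_e) / (1 - p_e). The pixel counts in the example are hypothetical and do not correspond to any test site of this study.

```python
def f1_score(confusion, cls):
    """F1 for class index cls; confusion[i][j] = pixels of reference class i predicted as j."""
    tp = confusion[cls][cls]
    fp = sum(confusion[i][cls] for i in range(len(confusion))) - tp  # predicted cls, but wrong
    fn = sum(confusion[cls]) - tp  # reference cls, but missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def cohens_kappa(confusion):
    """Cohen's Kappa (p_o - p_e) / (1 - p_e) for a square confusion matrix."""
    k = len(confusion)
    n = sum(sum(row) for row in confusion)
    p_o = sum(confusion[i][i] for i in range(k)) / n  # observed agreement
    row_totals = [sum(confusion[i]) for i in range(k)]
    col_totals = [sum(confusion[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(row_totals[i] * col_totals[i] for i in range(k)) / n ** 2  # chance agreement
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    # Hypothetical counts; rows = reference, columns = prediction; index 0 = water.
    cm = [[80, 10],
          [10, 900]]
    print(round(f1_score(cm, 0), 3))   # 0.889
    print(round(cohens_kappa(cm), 3))  # 0.878
```

Overall accuracy in this example would be 98%, yet Kappa is lower because most pixels belong to the dominant non-water class and would agree largely by chance; this is why Kappa and the class-specific F1 are more informative than overall accuracy for sparse features such as lakes.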

Future work will involve improving the Random Forest model with more training data, e.g., on cloud shadow on ice or on shallow supraglacial lakes, and applying our workflow to supraglacial lake locations across the whole Antarctic continent, resulting in yearly maximum lake extent mapping products. These will be crucial for assessing the impact of Antarctic supraglacial lakes on overall mass balance and thus for evaluating Antarctica’s contribution to global sea-level rise. In addition, the results of this study will be used for further methodological developments using Sentinel-1. This is of particular importance to capture both surface and subsurface meltwater accumulation and to evaluate intra-annual supraglacial lake dynamics throughout the year. In this context, the analysis of subannual lake records will provide important insight into the impact of lakes on Antarctic ice dynamics, in particular into whether they refreeze at the onset of the Antarctic winter or drain into the ice sheet.