High-Speed Lightweight Ship Detection Algorithm Based on YOLO-V4 for Three-Channels RGB SAR Image

Abstract

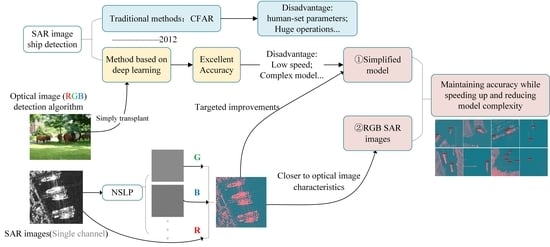

1. Introduction

- The proposed image preprocessing method expands the single-channel SAR image, which would otherwise be fed directly to the network, into a three-channel image that carries ship target contour information while reducing the impact of speckle noise. This makes fuller use of the network's feature-extraction capability and increases the interpretability of the network.

- Combined with this preprocessing method, a lighter network model is proposed as an alternative to existing advanced but complex detection algorithms. Compared with existing methods, it trains in less time, detects faster, maintains high accuracy, and has lower hardware requirements.
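The three-channel construction can be illustrated with a minimal NumPy sketch. It is not the authors' exact preprocessing: the decomposition they use is not described in this section (the reference list includes the nonsubsampled contourlet transform [20]). The sketch assumes a hypothetical channel layout in which channel 1 holds the normalized original image, channel 2 a 3 × 3 mean-filtered (speckle-suppressed) version, and channel 3 a gradient-magnitude contour map. The names `to_three_channel`, `box_filter`, and `gradient_magnitude` are illustrative only.

```python
import numpy as np


def normalize(x: np.ndarray) -> np.ndarray:
    """Linearly rescale an array to [0, 1]; a constant array maps to zeros."""
    x = x.astype(np.float64)
    lo, hi = x.min(), x.max()
    if hi == lo:
        return np.zeros_like(x)
    return (x - lo) / (hi - lo)


def box_filter(img: np.ndarray, k: int = 3) -> np.ndarray:
    """k x k mean filter with reflected borders (a simple stand-in despeckler)."""
    pad = k // 2
    padded = np.pad(img, pad, mode="reflect")
    out = np.zeros(img.shape, dtype=np.float64)
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)


def gradient_magnitude(img: np.ndarray) -> np.ndarray:
    """Central-difference gradient magnitude, used here as a contour map."""
    gy, gx = np.gradient(img)  # derivatives along rows, then columns
    return np.hypot(gx, gy)


def to_three_channel(sar: np.ndarray) -> np.ndarray:
    """Stack original, smoothed, and contour channels into an H x W x 3 uint8 image."""
    base = normalize(sar)
    smooth = box_filter(base, k=3)
    contour = gradient_magnitude(smooth)
    rgb = np.stack([base, normalize(smooth), normalize(contour)], axis=-1)
    return np.round(rgb * 255).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sar = rng.gamma(1.0, 1.0, size=(64, 64))  # toy single-look, speckle-like intensity
    rgb = to_three_channel(sar)
    print(rgb.shape, rgb.dtype)
```

The result can be fed to any detector that expects ordinary RGB input; smoothing before taking the gradient keeps the contour channel from simply amplifying speckle.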

2. Methods

2.1. YOLO-V4 Algorithm Description

2.2. Algorithm Design and Improvement

2.2.1. Construction of Three-Channel RGB SAR Image

2.2.2. Light-Weighting Model

- (1) The amount of space the model takes up on the device: a single Faster R-CNN model may add hundreds of megabytes to the download size.

- (2) The amount of memory used at runtime: when the model runs out of free memory, the system may terminate the algorithm.

- (3) How fast the model runs, which matters especially in emergency situations where real-time performance is essential [21].
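All three criteria can be estimated layer by layer before a model is trained. The sketch below uses a hypothetical helper, `conv2d_cost`, and assumes float32 activations with no framework overhead; it counts the parameters, multiply-accumulate operations (MACs), and output-activation memory of a single 2-D convolution. Summing such counts over all layers is the basic idea behind complexity figures such as parameter, memory, and operation totals. Tools differ in whether they report MACs or count each multiply-add as two operations.

```python
from dataclasses import dataclass


@dataclass
class ConvCost:
    params: int     # learnable weights + biases
    macs: int       # multiply-accumulate operations for one forward pass
    out_bytes: int  # size of the output feature map stored as float32


def conv2d_cost(c_in: int, c_out: int, k: int, h_out: int, w_out: int,
                bias: bool = True, groups: int = 1) -> ConvCost:
    """Cost of a k x k Conv2d that produces a c_out x h_out x w_out output."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    weights = k * k * (c_in // groups) * c_out
    params = weights + (c_out if bias else 0)
    macs = weights * h_out * w_out          # every weight is used once per output position
    out_bytes = c_out * h_out * w_out * 4   # float32 = 4 bytes per element
    return ConvCost(params, macs, out_bytes)


if __name__ == "__main__":
    # A 3 x 3 convolution from 256 to 512 channels on a 13 x 13 feature map.
    print(conv2d_cost(256, 512, 3, 13, 13))
```

For this example the layer has 1,180,160 parameters, needs 199,360,512 MACs, and writes a 346,112-byte output map, which shows how quickly wide layers at moderate resolution dominate size, memory, and speed.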

3. Experiments and Results

3.1. Dataset

3.2. Experiment 1

3.3. Experiment 2

- (1) Significant reduction in training time: about 12% of the training time of Faster R-CNN and 25% of that of YOLO-V4.

- (2) Significant reduction in model size: about 21% of the model size of Faster R-CNN and 9.2% of that of YOLO-V4.

- (3) Significant reduction in single-frame detection time: about 9.7% of the detection time of Faster R-CNN and 27.7% of that of YOLO-V4.
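The percentages in this list can be reproduced from the training-results table if each one is read as the lightweight model's value expressed as a fraction of the baseline's value (for example, 12.25 ms / 126 ms ≈ 9.7%), rather than as the size of the reduction. The sketch below assumes that reading and uses the V4-tiny, Faster R-CNN, and YOLO-V4 rows. Under this reading the YOLO-V4 training-time figure comes out at about 25.8%. `percent_of` is a hypothetical helper.

```python
# Values copied from the training-results table:
# (train time in min, model file size in MB, single-frame detection time in ms)
ROWS = {
    "Faster R-CNN": (812, 108.0, 126.0),
    "YOLO-V4": (380, 244.3, 44.21),
    "V4-tiny": (98, 22.5, 12.25),
}
METRICS = ("train_time", "model_size", "frame_time")


def percent_of(model: str, baseline: str) -> dict:
    """Express each metric of `model` as a percentage of the same metric of `baseline`."""
    return {
        name: round(100.0 * m / b, 1)
        for name, m, b in zip(METRICS, ROWS[model], ROWS[baseline])
    }


if __name__ == "__main__":
    for base in ("Faster R-CNN", "YOLO-V4"):
        print(base, percent_of("V4-tiny", base))
```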

4. Results

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Leng, X.; Ji, K.; Yang, K.; Zou, H. A Bilateral CFAR Algorithm for Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1536–1540.

- Liao, M.; Wang, C.; Wang, Y.; Jiang, L. Using SAR Images to Detect Ships from Sea Clutter. IEEE Geosci. Remote Sens. Lett. 2008, 5, 194–198.

- Krizhevsky, A.; Sutskever, I.; Hinton, G. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; ACM: New York, NY, USA, 2012; pp. 1097–1105.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 13–16 December 2015; pp. 1440–1448.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems; IEEE: New York, NY, USA, 2015; pp. 91–99.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.E.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 21–37.

- Chen, Z.; Gao, X. An Improved Algorithm for Ship Target Detection in SAR Images Based on Faster R-CNN. In Proceedings of the 2018 Ninth International Conference on Intelligent Control and Information Processing (ICICIP), Wanzhou, China, 9–11 November 2018; pp. 39–43.

- Wang, R.; Xu, F.; Pei, J.; Wang, C.; Huang, Y.; Yang, J.; Wu, J. An Improved Faster R-CNN Based on MSER Decision Criterion for SAR Image Ship Detection in Harbor. In Proceedings of the IGARSS 2019 - 2019 IEEE International Geoscience and Remote Sensing Symposium, Yokohama, Japan, 28 July–2 August 2019; pp. 1322–1325.

- Zhang, T.; Zhang, X. High-Speed Ship Detection in SAR Images Based on a Grid Convolutional Neural Network. Remote Sens. 2019, 11, 1206.

- Chang, Y.-L.; Anagaw, A.; Chang, L.; Wang, Y.C.; Hsiao, C.-Y.; Lee, W.-H. Ship Detection Based on YOLOv2 for SAR Imagery. Remote Sens. 2019, 11, 786.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. Available online: https://arxiv.org/abs/2004.10934 (accessed on 12 May 2021).

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. Available online: https://arxiv.org/abs/1804.02767 (accessed on 12 May 2021).

- Everingham, M.; van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The Pascal Visual Object Classes (VOC) Challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

- Lin, T.Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. In Computer Vision ECCV 2014. ECCV 2014; Lecture Notes in Computer Science; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer: Cham, Switzerland, 2014; Volume 8693.

- Wang, C.-Y.; Liao, H.-Y.M.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W.; Yeh, I.-H. CSPNet: A New Backbone that can Enhance Learning Capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

- Qin, R.; Fu, X.; Lang, P. PolSAR Image Classification Based on Low-Frequency and Contour Subbands-Driven Polarimetric SENet. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 4760–4773.

- Da Cunha, A.L.; Zhou, J.; Do, M.N. The Nonsubsampled Contourlet Transform: Theory, Design, and Applications. IEEE Trans. Image Process. 2006, 15, 3089–3101.

- How Fast Is My Model? Available online: https://machinethink.net/blog/how-fast-is-my-model/ (accessed on 28 March 2021).

- Molchanov, P.; Tyree, S.; Karras, T.; Aila, T.; Kautz, J. Pruning Convolutional Neural Networks for Resource Efficient Inference. arXiv 2016, arXiv:1611.06440. Available online: https://arxiv.org/abs/1611.06440 (accessed on 12 May 2021).

- Darknet. Available online: https://github.com/AlexeyAB/darknet (accessed on 28 March 2021).

- Zhang, T.; Zhang, X.; Shi, J.; Wei, S. Depthwise Separable Convolution Neural Network for High-Speed SAR Ship Detection. Remote Sens. 2019, 11, 2483.

- Li, J.; Qu, C.; Shao, J. Ship Detection in SAR Images Based on an Improved Faster R-CNN. In Proceedings of the 2017 SAR in Big Data Era: Models, Methods and Applications (BIGSARDATA), Beijing, China, 13–14 November 2017; pp. 1–6.

| Networks | Params | Memory (MB) | MemR+W (GB) | G-MAdd | GFLOPs |
|---|---|---|---|---|---|
| Faster R-CNN | 136,689,024 | 377.91 | 361.4 | 109.39 | 109.39 |
| YOLO-V4 | 64,002,306 | 606.26 | 1.12 | 59.8 | 29.92 |
| V4-light | 6,563,542 | 74.53 | 0.177 | 7.06 | 3.53 |
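Parameter counts translate directly into storage. Assuming every parameter is stored as a 32-bit float (4 bytes) and ignoring file headers and any extra stored tensors, the hypothetical helper below estimates weight storage from the Params column. For YOLO-V4 this gives about 244.1 MiB, close to the 244.3 MB model file size listed in the training-results table. For other rows the estimate need not match reported file sizes, since storage format and numeric precision can differ.

```python
PARAMS = {
    "Faster R-CNN": 136_689_024,
    "YOLO-V4": 64_002_306,
    "V4-light": 6_563_542,
}

BYTES_PER_FLOAT32 = 4


def float32_size_mib(num_params: int) -> float:
    """Weight storage in MiB (2**20 bytes) if every parameter is a float32."""
    return num_params * BYTES_PER_FLOAT32 / 2**20


if __name__ == "__main__":
    for name, n in PARAMS.items():
        print(f"{name}: {float32_size_mib(n):.1f} MiB")
```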

| Datasets | Number of Samples |
|---|---|
| Training Set | 812 |
| Validation Set | 116 |
| Testing Set | 232 |
| Total | 1160 |
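The sample counts correspond to a 7:1:2 split (812 / 1160 = 70%, 116 / 1160 = 10%, 232 / 1160 = 20%). How the images were assigned to each subset is not stated here. The sketch below assumes a seeded random shuffle (the helper `split_712` is hypothetical) and uses integer arithmetic so that the subset sizes come out exactly.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_712(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T], List[T]]:
    """Shuffle deterministically, then split 70% / 10% / 20% into train / val / test."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_train = n * 7 // 10  # integer arithmetic avoids float rounding surprises
    n_val = n // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


if __name__ == "__main__":
    train, val, test = split_712(range(1160))
    print(len(train), len(val), len(test))
```

Fixing the seed makes the split reproducible, so different networks can be compared on exactly the same test images.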

| Networks | AP | Train Time (min) | Model File Size (MB) | Time (ms) |
|---|---|---|---|---|
| Faster R-CNN | 87.13% | 812 | 108 | 126 |
| YOLO-V4 | 96.32% | 380 | 244.3 | 44.21 |
| V4-tiny | 88.08% | 98 | 22.5 | 12.25 |
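AP summarizes the precision-recall curve of the ship detections. The exact AP protocol behind these tables (IoU threshold, interpolation scheme) is not given in this section. The sketch below assumes the all-point interpolated AP used in the PASCAL VOC evaluation code from 2010 onward (the VOC challenge is described in [15]). It also assumes that each detection has already been matched to ground truth, for example at IoU ≥ 0.5, and flagged as a true or false positive. `average_precision` is a hypothetical helper.

```python
import numpy as np


def average_precision(is_tp, scores, num_gt):
    """All-point interpolated AP from per-detection TP/FP flags and confidences."""
    # Rank detections by descending confidence (stable for ties).
    order = np.argsort(-np.asarray(scores, dtype=np.float64), kind="stable")
    tp = np.asarray(is_tp, dtype=np.float64)[order]
    tp_cum = np.cumsum(tp)
    fp_cum = np.cumsum(1.0 - tp)
    recall = tp_cum / num_gt
    precision = tp_cum / (tp_cum + fp_cum)

    # Sentinels so the curve starts at recall 0 and ends at recall 1.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    idx = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[idx + 1] - mrec[idx]) * mpre[idx + 1]))


if __name__ == "__main__":
    # Four ships in the ground truth; the third-ranked detection is a false positive.
    print(average_precision([1, 1, 0, 1], [0.9, 0.8, 0.7, 0.6], num_gt=4))
```

In the toy example one ship is never detected, so recall stops at 0.75 and the AP is 0.6875.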

| Networks | AP | Precision | Recall | F1 |
|---|---|---|---|---|
| Faster R-CNN | 87.13% | 52.85% | 92.42% | 0.67 |
| YOLO-V4 | 96.32% | 96.98% | 95.96% | 0.96 |
| V4-tiny | 88.08% | 92.00% | 81.99% | 0.87 |
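The F1 column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). The short check below recomputes it from the Precision and Recall columns. Rounded to two decimals, it reproduces the reported 0.67, 0.96, and 0.87. It also shows why Faster R-CNN's F1 is low despite its high recall: a precision of 52.85% pulls the harmonic mean down. `f1_score` here is a plain helper, not a library call.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall (as fractions) from the table above.
TABLE = {
    "Faster R-CNN": (0.5285, 0.9242),
    "YOLO-V4": (0.9698, 0.9596),
    "V4-tiny": (0.9200, 0.8199),
}

if __name__ == "__main__":
    for name, (p, r) in TABLE.items():
        print(f"{name}: F1 = {f1_score(p, r):.2f}")
```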

| Networks | Dataset | AP | Train Time (min) | Model Size (MB) | Time (ms) |
|---|---|---|---|---|---|
| V4-tiny | SSDD | 88.08% | 98 | 22.5 | 12.25 |
| V4-tiny | RGB-SSDD | 89.64% | 99 | 22.5 | 12.15 |
| V4-light | RGB-SSDD | 90.37% | 110 | 30 | 13.42 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jiang, J.; Fu, X.; Qin, R.; Wang, X.; Ma, Z. High-Speed Lightweight Ship Detection Algorithm Based on YOLO-V4 for Three-Channels RGB SAR Image. Remote Sens. 2021, 13, 1909. https://doi.org/10.3390/rs13101909
