Automatic Sub-Pixel Co-Registration of Remote Sensing Images Using Phase Correlation and Harris Detector

Abstract

1. Introduction

2. Brief Overview of Related Work

2.1. Hybrid Registration Approach

2.2. Fine Registration Using Phase Correlation

3. Materials and Methods

3.1. Harris Corners Extraction

3.2. Point Correspondence Using Fourier Phase Matching

3.3. Sub-Pixel Translation Estimation

3.3.1. Nelder–Mead (NM) Optimization

3.3.2. The Two-Point Step Size (TPSS) Gradient

3.4. Detection of Outliers

Algorithm 1: RANSAC Algorithm

3.5. Transformation Model

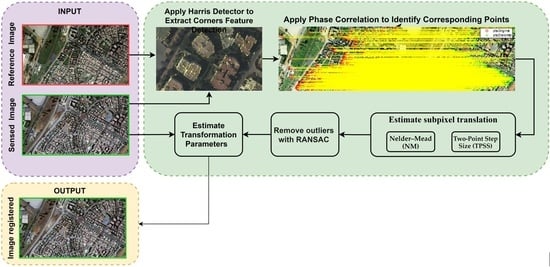

3.6. Workflow of the Proposed Approach

- Extract Harris corners from the sensed and reference images for each of the nine sub-regions.

- For each point PCi in the reference image, weight the reference template image and the candidate template image of each of its k nearest neighbors in the sensed image with a Blackman window.

- Compute the discrete Fourier transform of each filtered image.

- Compute the normalized cross-correlation and the phase-only correlation (POC) function between the two transforms.

- Take the candidate point with the maximum POC magnitude as the exact corresponding point.

- Eliminate the pairs whose score is below 0.3; among many-to-one matches, also eliminate the match with the minimum score.

- Deal with outliers using the RANSAC algorithm.

- Compute the displacement of each corresponding point pair using phase correlation.

- Using these displacements as initial approximations, apply the two optimization algorithms (NM and TPSS) to find the sub-pixel displacements that maximize the POC function.

- Compute the parameters of the model transformation using least squares minimization.

- Apply model transformations to align the sensed image with the reference image.
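The windowing, DFT, and POC steps of this workflow can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `phase_only_correlation` and `integer_shift`, the stabilizing constant `eps`, and the separable Blackman window built with `np.outer` are choices made for the example, and it recovers only a whole-pixel translation between two equally sized templates.

```python
import numpy as np


def phase_only_correlation(ref, cand, eps=1e-12):
    """Return the POC surface of two equally sized 2-D templates.

    Assumption: both templates are weighted by the same separable
    Blackman window before the DFT; `eps` only guards against 0/0.
    """
    rows, cols = ref.shape
    window = np.outer(np.blackman(rows), np.blackman(cols))
    F = np.fft.fft2(ref * window)
    G = np.fft.fft2(cand * window)
    # Normalized cross-power spectrum: keep the phase, drop the magnitude.
    cross = G * np.conj(F)
    cross = cross / (np.abs(cross) + eps)
    # The inverse DFT concentrates the energy at the translation.
    return np.real(np.fft.ifft2(cross))


def integer_shift(ref, cand):
    """Return ((dy, dx), peak) such that cand is roughly ref moved by (dy, dx)."""
    poc = phase_only_correlation(ref, cand)
    peak_idx = np.unravel_index(np.argmax(poc), poc.shape)
    # Indices past the midpoint correspond to negative displacements.
    shift = tuple(
        int(p) if p <= n // 2 else int(p) - n
        for p, n in zip(peak_idx, poc.shape)
    )
    return shift, float(poc[peak_idx])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    reference = rng.standard_normal((64, 64))
    # Synthetic candidate: the reference moved 3 rows down and 5 columns left.
    candidate = np.roll(reference, (3, -5), axis=(0, 1))
    print(integer_shift(reference, candidate))
```

The peak value returned here could serve as the matching score that the list compares against the 0.3 threshold, and the integer displacement is the kind of initial approximation that the NM or TPSS refinement would start from.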

3.7. Evaluation Criteria

4. Results and Discussion

4.1. Descriptions of Experimental Data

4.2. Large-Scale Displacements Estimation with Pixel-Level Accuracy

4.2.1. Effect of Two Window Functions on the Correlation Measures

4.2.2. Performance of Phase Matching

4.3. Enhanced Sub-Pixel Displacement Estimation

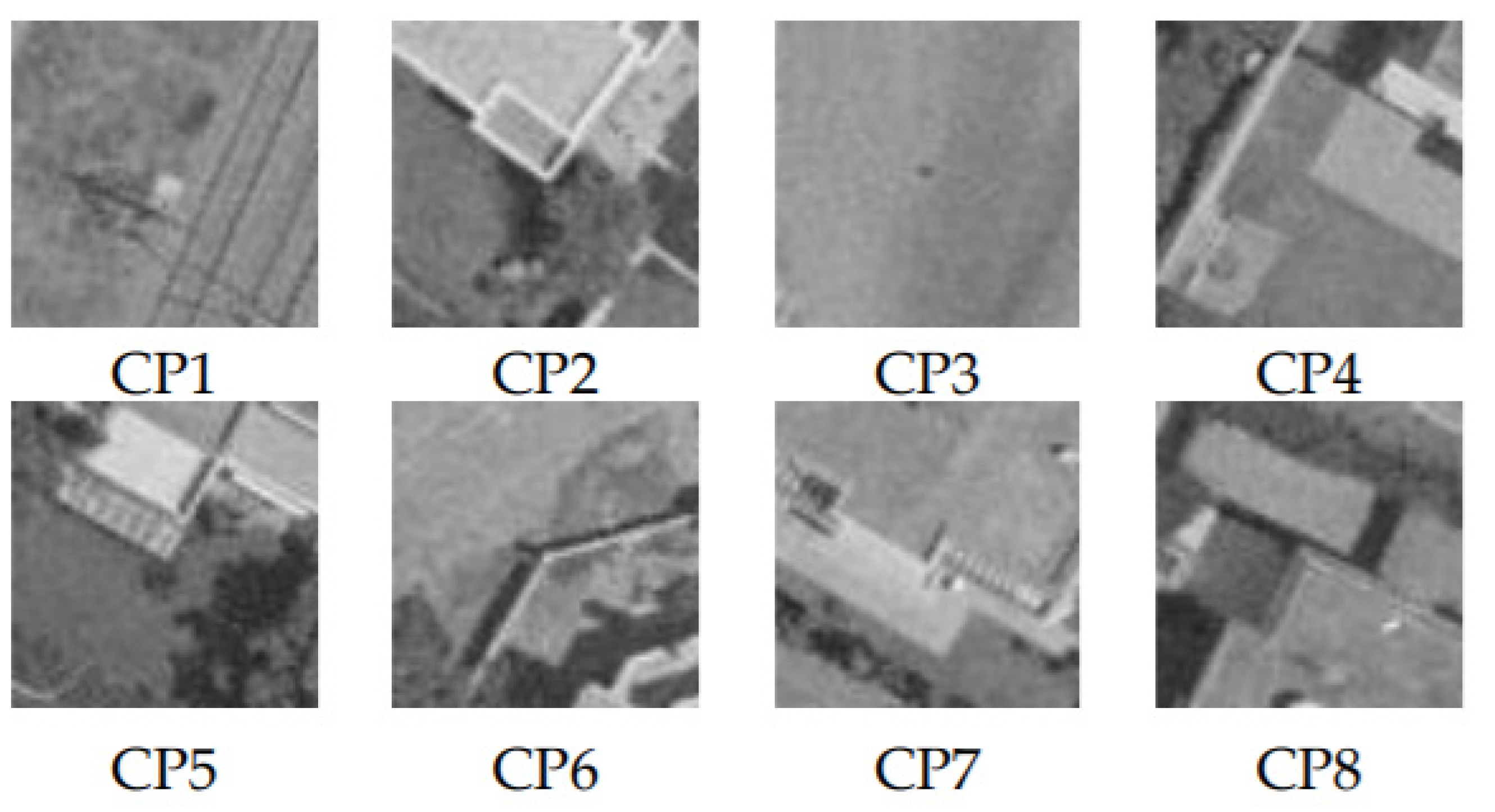

4.4. Validation of the Proposed Registration Method

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Data Source | Image Size (pixels) | Year | Resolution (m) | Incidence Angle (°) | Solar Elevation Angle (°) | Solar Azimuth (°) |
|---|---|---|---|---|---|---|
| Pleiades sensed image | 25,855 × 38,808 | 2014 | 0.5 | -17 | 30 | 160 |
| Pleiades reference image | 37,430 × 42,068 | 2013 | 0.5 | 18 | 31 | 161 |

| Data Source | Image Size (pixels) | Date | Resolution (m) | Scale | Camera |
|---|---|---|---|---|---|
| Aerial images | 5121 × 2897 | 2009 | - | 1/7500 | RMK TOP 15 |
| Aerial images | 10,000 × 10,000 | 2014 | 0.20 | - | ADS80 |
| Aerial images | 11,271 × 10,727 | 2016 | 0.20 | - | ADS80 |

| Window Function | RMSEx | RMSEy | Number of Wrong Matches | Number of Correct Matches | True Matching Rate |
|---|---|---|---|---|---|
| Estimates BW | 0.486 | 0.599 | 22 | 88 | 0.800 |
| Estimates HW | 0.418 | 0.586 | 29 | 81 | 0.734 |
| Estimates BW and HW | 0.405 | 0.596 | 29 | 81 | 0.736 |
| Estimates HW and BW | 0.430 | 0.594 | 28 | 82 | 0.745 |

| Window Function | PM Only: RMSEx | PM Only: RMSEy | PM Using Harris Corners: RMSEx | PM Using Harris Corners: RMSEy |
|---|---|---|---|---|
| Estimates-BW | 2.752 | 4.301 | 0.486 | 0.599 |
| Estimates-HW | 2.439 | 4.180 | 0.418 | 0.586 |
| Estimates-BW-HW | 2.626 | 4.270 | 0.405 | 0.596 |
| Estimates-HW-BW | 2.486 | 4.278 | 0.430 | 0.594 |

| Methods | Found Corner Pairs (1) | Found Corner Pairs (2) | Filter Score < 0.3 and Identical Pairs (1) | Filter Score < 0.3 and Identical Pairs (2) | Eliminate Outliers (RANSAC) (1) | Eliminate Outliers (RANSAC) (2) | True Matching Rate (1) | True Matching Rate (2) | Average Running Time (min) (1) | Average Running Time (min) (2) |
|---|---|---|---|---|---|---|---|---|---|---|
| SURF based matching | 640 | 19,957 | - | - | 13 | 3029 | 0.020 | 0.152 | 0.7 | 11.2 |
| Harris corners with SURF descriptor | 574 | 8760 | - | - | 11 | 813 | 0.019 | 0.093 | 0.4 | 10.8 |
| Our Approach (MinQuality = 0.01) | 30,068 | 806,806 | 6042 | 307,301 | 619 | 33,565 | 0.102 | 0.109 | 1.7 | 237.5 |
| Our Approach (MinQuality = 0.005) | 35,274 | 1,412,805 | 9115 | 567,096 | 549 | 69,678 | 0.060 | 0.123 | 3.6 | 507.0 |

| Image Pair | Metric | Estimates Harris | Estimates TPSS | Estimates NM |
|---|---|---|---|---|
| Aerial 2014–2009 | RMSEx | 0.557 | 0.577 | 0.557 |
| Aerial 2014–2009 | RMSEy | 0.821 | 0.852 | 0.821 |
| Aerial 2014–2016 | RMSEx | 0.003 | 0.047 | 0.003 |
| Aerial 2014–2016 | RMSEy | 0.007 | 0.192 | 0.007 |
| Satellite 2013–2014 | RMSEx | 0.676 | 0.686 | 0.676 |
| Satellite 2013–2014 | RMSEy | 0.717 | 0.686 | 0.717 |

| Image | RMSEx (1) | RMSEx (2) | RMSEx (3) | RMSEy (1) | RMSEy (2) | RMSEy (3) |
|---|---|---|---|---|---|---|
| Sensed image | 3.946 | 1.006 | 0.968 | 6.301 | 1.724 | 1.968 |
| Registered image with TPS | 0.252 | 0.062 | 0.428 | 0.373 | 0.084 | 0.402 |
| Registered image with first-order polynomial transformation | 0.144 | 0.066 | 0.307 | 0.244 | 0.069 | 0.311 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rasmy, L.; Sebari, I.; Ettarid, M. Automatic Sub-Pixel Co-Registration of Remote Sensing Images Using Phase Correlation and Harris Detector. Remote Sens. 2021, 13, 2314. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13122314
