Application of RGB Images Obtained by UAV in Coffee Farming

Abstract

1. Introduction

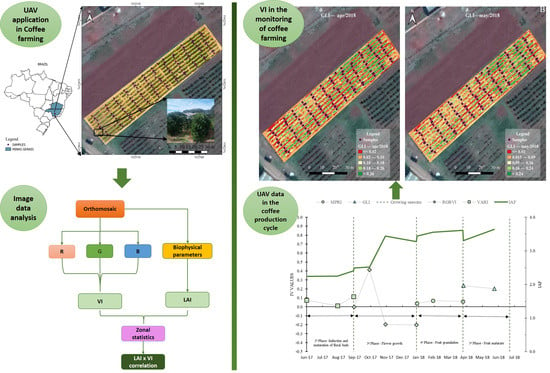

2. Materials and Methods

2.1. Study Area

2.2. UAV and Camera System

2.3. Image Processing

2.4. Obtaining Field Data

2.5. Estimated Leaf Area Index

2.6. Statistical Analysis

2.7. Vegetation Indices

3. Results and Discussion

3.1. Plant Height and Crown Diameter

3.2. Leaf Area Index (LAI)

3.3. Vegetation Indices (VIs) × LAI

4. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. CONAB (Companhia Nacional de Abastecimento). Acompanhamento da Safra Brasileira de café: (2020). Monitoramento Agrícola (Monitoring of the Brazilian Coffee Crop: Agricultural Monitoring) 6–Safra (1), First Survey; CONAB, Companhia Nacional de Abastecimento: Brasília, Brazil, 2020.

2. Jiménez-Brenes, F.M.; López-Granados, F.; Torres-Sánchez, J.; Peña, J.M.; Ramírez, P.; Castillejo-González, I.L.; De Castro, A.I. Automatic UAV-based detection of Cynodon dactylon for site-specific vineyard management. PLoS ONE 2019, 14, e0218132.

3. Holman, F.; Riche, A.; Michalski, A.; Castle, M.; Wooster, M.; Hawkesford, M. High throughput field phenotyping of wheat plant height and growth rate in field plot trials using UAV based remote sensing. Remote Sens. 2016, 8, 1031.

4. Berni, J.A.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

5. Gago, J.; Douthe, C.; Coopman, R.; Gallego, P.; Ribas-Carbo, M.; Flexas, J.; Escalona, J.; Medrano, H. UAVs challenge to assess water stress for sustainable agriculture. Agric. Water Manag. 2015, 153, 9–19.

6. Hatfield, J.L.; Prueger, J.H. Value of using different vegetative indices to quantify agricultural crop characteristics at different growth stages under varying management practices. Remote Sens. 2010, 2, 562–578.

7. Martins, R.N.; Pinto, F.D.A.D.C.; de Queiroz, D.M.; Valente, D.S.M.; Rosas, J.T.F. A Novel Vegetation Index for Coffee Ripeness Monitoring Using Aerial Imagery. Remote Sens. 2021, 13, 263.

8. Gaadi, A.-; Patil, V.; Tola, E.; Madugundu, R.; Marey, S. In-season assessment of wheat crop health using vegetation indices based on ground measured hyper spectral data. Am. J. Agric. Biol. Sci. 2014, 9, 138–146.

9. Jorge, J.; Vallbé, M.; Soler, J.A. Detection of irrigation inhomogeneities in an olive grove using the NDRE vegetation index obtained from UAV images. Eur. J. Remote Sens. 2019, 52, 169–177.

10. Ampatzidis, Y.; Partel, V. UAV-based high throughput phenotyping in citrus utilizing multispectral imaging and artificial intelligence. Remote Sens. 2019, 11, 410.

11. De Castro, A.I.; Torres-Sánchez, J.; Peña, J.M.; Jiménez-Brenes, F.M.; Csillik, O.; López-Granados, F. An Automatic Random Forest-OBIA Algorithm for Early Weed Mapping between and within Crop Rows Using UAV Imagery. Remote Sens. 2018, 10, 285.

12. Kerkech, M.; Hafiane, A.; Canals, R. Deep leaning approach with colorimetric spaces and vegetation indices for vine diseases detection in UAV images. Comput. Electron. Agric. 2018, 155, 237–243.

13. Fernandez-Gallego, J.A.; Kefauver, S.C.; Vatter, T.; Gutiérrez, N.A.; Nieto-Taladriz, M.T.; Araus, J.L. Low-cost assessment of grain yield in durum wheat using RGB images. Eur. J. Agron. 2019, 105, 146–156.

14. Barbedo, J.G.A. A review on the main challenges in automatic plant disease identification based on visible range images. Biosyst. Eng. 2016, 144, 52–60.

15. Barbedo, J.G.A.; Koenigkan, L.V.; Santos, T.T. Identifying multiple plant diseases using digital image processing. Biosyst. Eng. 2016, 147, 104–116.

16. Mengistu, A.D.; Mengistu, S.G.; Alemayehu, D.M. Image Analysis for Ethiopian Coffee Plant Diseases Identification. IJBB 2016, 10, 1.

17. Katsuhama, N.; Imai, M.; Naruse, N.; Takahashi, Y. Discrimination of areas infected with coffee leaf rust using a vegetation index. Remote Sens. Lett. 2018, 9, 1186–1194.

18. Chemura, A.; Mutanga, O.; Sibanda, M.; Chidoko, P. Machine learning prediction of coffee rust severity on leaves using spectroradiometer data. Trop. Plant Pathol. 2017, 43, 117–127.

19. Marin, D.B.; Alves, M.D.C.; Pozza, E.A.; Belan, L.L.; Freitas, M.L.D.O. Multispectral radiometric monitoring of bacterial blight of coffee. Precis. Agric. 2018, 20, 959–982.

20. Oliveira, H.C.; Guizilini, V.C.; Nunes, I.P.; Souza, J.R. Failure Detection in Row Crops From UAV Images Using Morphological Operators. IEEE Geosci. Remote Sens. Lett. 2018, 15, 991–995.

21. Carrijo, G.L.A.; Oliveira, D.E.; De Assis, G.A.; Carneiro, M.G.; Guizilini, V.C.; Souza, J.R. Automatic detection of fruits in coffee crops from aerial images. In Proceedings of the 2017 Latin American Robotics Symposium (LARS) and 2017 Brazilian Symposium on Robotics (SBR), Institute of Electrical and Electronics Engineers (IEEE), Curitiba, PR, Brazil, 9–11 November 2017; pp. 1–6.

22. Cunha, J.P.; Neto, S.; Matheus, A.; Hurtado, S. Estimating vegetation volume of coffee crops using images from unmanned aerial vehicles. Eng. Agríc. 2019, 39, 41–47.

23. Oliveira, A.J.; Assis, G.A.; Guizilini, V.; Faria, E.R.; Souza, J.R. Segmenting and Detecting Nematode in Coffee Crops Using Aerial Images. In Transactions on Petri Nets and Other Models of Concurrency XV; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2019; pp. 274–283.

24. Dos Santos, L.M.; Ferraz, G.A.E.S.; Barbosa, B.D.D.S.; Diotto, A.V.; Maciel, D.T.; Xavier, L.A.G. Biophysical parameters of coffee crop estimated by UAV RGB images. Precis. Agric. 2020, 21, 1227–1241.

25. Barbosa, B.D.S.; Ferraz, G.A.S.; Gonçalves, L.M.; Marin, D.B.; Maciel, D.T.; Ferraz, P.F.P.; Rossi, G. RGB vegetation indices applied to grass monitoring: A qualitative analysis. Agron. Res. 2019, 17, 349–357.

26. Silva, V.A.; de Rezende, J.C.; de Carvalho, A.M.; Carvalho, G.R.; Rezende, T.; Ferreira, A.D. Recovery of coffee cultivars under the ‘skeleton cut’ pruning after 4.5 years of age. Coffee Sci. 2016, 11, 55–64.

27. Zhang, M.; Zhou, J.; Sudduth, K.A.; Kitchen, N.R. Estimation of maize yield and effects of variable-rate nitrogen application using UAV-based RGB imagery. Biosyst. Eng. 2020, 189, 24–35.

28. Mesas-Carrascosa, F.J.; Rumbao, I.C.; Torres-Sánchez, J.; García-Ferrer, A.; Peña, J.M.; Granados, F.L. Accurate ortho-mosaicked six-band multispectral UAV images as affected by mission planning for precision agriculture proposes. Int. J. Remote Sens. 2017, 38, 2161–2176.

29. Bater, C.W.; Coops, N.; Wulder, M.A.; Hilker, T.; Nielsen, S.E.; McDermid, G.; Stenhouse, G.B. Using digital time-lapse cameras to monitor species-specific understorey and overstorey phenology in support of wildlife habitat assessment. Environ. Monit. Assess. 2010, 180, 1–13.

30. Torres-Sánchez, J.; López-Granados, F.; De Castro, A.I.; Peña-Barragán, J.M. Configuration and Specifications of an Unmanned Aerial Vehicle (UAV) for Early Site Specific Weed Management. PLoS ONE 2013, 8, e58210.

31. Hobart, M.; Pflanz, M.; Weltzien, C.; Schirrmann, M. Growth Height Determination of Tree Walls for Precise Monitoring in Apple Fruit Production Using UAV Photogrammetry. Remote Sens. 2020, 12, 1656.

32. Gómez-Candón, D.; De Castro, A.I.; López-Granados, F. Assessing the accuracy of mosaics from unmanned aerial vehicle (UAV) imagery for precision agriculture purposes in wheat. Precis. Agric. 2014, 15, 44–56.

33. Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

34. Xiang, H.; Tian, L. Method for automatic georeferencing aerial remote sensing (RS) images from an unmanned aerial vehicle (UAV) platform. Biosyst. Eng. 2011, 108, 104–113.

35. Ferraz, G.A.E.S.; Da Silva, F.M.; De Oliveira, M.S.; Custódio, A.A.P.; Ferraz, P. Spatial variability of plant attributes in a coffee plantation. Revis. Ciênc. Agron. 2017, 48, 81–91.

36. Hasan, U.; Sawut, M.; Chen, S. Estimating the Leaf Area Index of Winter Wheat Based on Unmanned Aerial Vehicle RGB-Image Parameters. Sustainability 2019, 11, 6829.

37. Costa, J.D.O.; Coelho, R.; Barros, T.H.D.S.; Júnior, E.F.F.; Fernandes, A.L.T. Leaf area index and radiation extinction coefficient of a coffee canopy under variable drip irrigation levels. Acta Sci. Agron. 2019, 41, e42703.

38. Favarin, J.L.; Neto, D.D.; García, A.G.Y.; Nova, N.A.V.; Favarin, M.D.G.G.V. Equações para a estimativa do índice de área foliar do cafeeiro. Pesquisa Agropecuária Brasileira 2002, 37, 769–773.

39. Panagiotidis, D.; Abdollahnejad, A.; Surový, P.; Chiteculo, V. Determining tree height and crown diameter from high-resolution UAV imagery. Int. J. Remote Sens. 2017, 38, 2392–2410.

40. Surový, P.; Ribeiro, N.A.; Panagiotidis, D. Estimation of positions and heights from UAV-sensed imagery in tree plantations in agrosilvopastoral systems. Int. J. Remote Sens. 2018, 39, 4786–4800.

41. Torres-Sánchez, J.; Peña, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

42. Saberioon, M.; Amin, M.; Anuar, A.; Gholizadeh, A.; Wayayok, A.; Khairunniza-Bejo, S. Assessment of rice leaf chlorophyll content using visible bands at different growth stages at both the leaf and canopy scale. Int. J. Appl. Earth Obs. Geoinform. 2014, 32, 35–45.

43. Zhou, X.; Zheng, H.B.; Xu, X.Q.; He, J.Y.; Ge, X.K.; Yao, X.; Tian, Y.C. Predicting grain yield in rice using multi-temporal vegetation indices from UAV-based multispectral and digital imagery. ISPRS J. Photogramm. Remote Sens. 2017, 130, 246–255.

44. Zhang, J.; Virk, S.; Porter, W.; Kenworthy, K.; Sullivan, D.; Schwartz, B. Applications of Unmanned Aerial Vehicle Based Imagery in Turfgrass Field Trials. Front. Plant Sci. 2019, 10, 279.

45. Peña-Barragán, J.M.; Ngugi, M.K.; Plant, R.E.; Six, J. Object-based crop identification using multiple vegetation indices, textural features and crop phenology. Remote Sens. Environ. 2011, 115, 1301–1316.

46. De Castro, A.; Ehsani, R.; Ploetz, R.; Crane, J.; Abdulridha, J. Optimum spectral and geometric parameters for early detection of laurel wilt disease in avocado. Remote Sens. Environ. 2015, 171, 33–44.

47. Camargo Neto, J. A combined statistical-soft computing approach for classification and mapping weed species in minimum-tillage systems. Ph.D. Thesis, University of Nebraska–Lincoln, Lincoln, NE, USA, 2004. Available online: http://digitalcommons.unl.edu/dissertations/AAI3147135/ (accessed on 5 May 2021).

48. Torres-Sánchez, J.; López-Granados, F.; Peña, J. An automatic object-based method for optimal thresholding in UAV images: Application for vegetation detection in herbaceous crops. Comput. Electron. Agric. 2015, 114, 43–52.

49. Bareth, G.; Bolten, A.; Hollberg, J.; Aasen, H.; Burkart, A.; Schellberg, J. Feasibility study of using non-calibrated UAV-based RGB imagery for grassland monitoring: Case study at the Rengen Long-term Grassland Experiment (RGE), Germany. DGPF Tagungsband 2015, 24, 1–7.

50. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinform. 2015, 39, 79–87.

51. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially Located Platform and Aerial Photography for Documentation of Grazing Impacts on Wheat. Geocarto Int. 2001, 16, 65–70.

52. Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X.; et al. Unmanned Aerial Vehicle Remote Sensing for Field-Based Crop Phenotyping: Current Status and Perspectives. Front. Plant Sci. 2017, 8, 1111.

53. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color Indices for Weed Identification Under Various Soil, Residue, and Lighting Conditions. Trans. ASAE 1995, 38, 259–269.

54. Hague, T.; Tillett, N.D.; Wheeler, H. Automated Crop and Weed Monitoring in Widely Spaced Cereals. Precis. Agric. 2006, 7, 21–32.

55. Meyer, G.E.; Hindman, T.W.; Laksmi, K. Machine vision detection parameters for plant species identification. Photonics East 1999, 3543, 327–335.

56. Gitelson, A.A.; Stark, R.; Grits, U.; Rundquist, D.; Kaufman, Y.; Derry, D. Vegetation and soil lines in visible spectral space: A concept and technique for remote estimation of vegetation fraction. Int. J. Remote Sens. 2002, 23, 2537–2562.

57. De Camargo, Â.P.; De Camargo, M.B.P. Definição e esquematização das fases fenológicas do cafeeiro arábica nas condições tropicais do Brasil. Bragantia 2001, 60, 65–68.

58. López-Granados, F.; Torres-Sánchez, J.; Jiménez-Brenes, F.M.; Arquero, O.; Lovera, M.; De Castro, A.I. An efficient RGB-UAV-based platform for field almond tree phenotyping: 3-D architecture and flowering traits. Plant Methods 2019, 15, 1–16.

59. Torres-Sánchez, J.; de Castro, A.I.; Peña, J.M.; Jiménez-Brenes, F.M.; Arquero, O.; Lovera, M.; López-Granados, F. Mapping the 3D structure of almond trees using UAV acquired photogrammetric point clouds and object-based image analysis. Biosyst. Eng. 2018, 176, 172–184.

60. Bréda, N.J.J. Ground-based measurements of leaf area index: A review of methods, instruments and current controversies. J. Exp. Bot. 2003, 54, 2403–2417.

61. Rezende, F.C.; Caldas, A.L.D.; Scalco, M.S.; Faria, M.A.D. Leaf area index, plant density and water management of coffee. Coffee Sci. 2014, 9, 374–384.

62. Zheng, G.; Moskal, L.M. Retrieving leaf area index (LAI) using remote sensing: Theories, methods and sensors. Sensors 2009, 9, 2719–2745.

63. Glenn, E.P.; Huete, A.R.; Nagler, P.L.; Nelson, S.G. Relationship Between Remotely-sensed Vegetation Indices, Canopy Attributes and Plant Physiological Processes: What Vegetation Indices Can and Cannot Tell Us About the Landscape. Sensors 2008, 8, 2136–2160.

| VI | Name | Equation | Reference |
|---|---|---|---|
| MGVRI | Modified Green Red Vegetation Index | | [50] |
| GLI | Green Leaf Index | | [51] |
| MPRI | Modified photochemical reflectance index | | [52] |
| RGBVI | Red green blue vegetation index | | [50] |
| ExG | Excess of green | | [53] |
| VEG | Vegetation | | [54] |
| ExR | Excess of red | | [55] |
| ExGR | Excess green-red | | [48] |
| VARI | Visible atmospherically resistant index | | [56] |
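The equation column of the table above did not survive extraction. As a rough illustration only, the sketch below computes the nine indices from red, green, and blue values using the forms in which they are commonly cited in the literature; these are assumptions and may differ from the exact expressions the authors used. The function names `rgb_indices` and `_ratio`, the weight `a = 0.667` for VEG, and the sample pixel values are likewise illustrative, and the inputs are assumed to be band values on a common scale (for example, reflectance or normalized digital numbers).

```python
import math


def _ratio(num: float, den: float) -> float:
    """Return num / den, or NaN when the denominator is zero."""
    return num / den if den != 0 else math.nan


def rgb_indices(r: float, g: float, b: float, a: float = 0.667) -> dict[str, float]:
    """Compute common RGB vegetation indices for one pixel or one plot mean.

    The formulas are the commonly cited forms (an assumption, not recovered
    from the source table). `a` is the VEG weighting constant.
    """
    exg = 2 * g - r - b          # Excess of green
    exr = 1.4 * r - g            # Excess of red
    return {
        "MGVRI": _ratio(g**2 - r**2, g**2 + r**2),
        "GLI": _ratio(2 * g - r - b, 2 * g + r + b),
        "MPRI": _ratio(g - r, g + r),
        "RGBVI": _ratio(g**2 - b * r, g**2 + b * r),
        "ExG": exg,
        "VEG": _ratio(g, (r**a) * (b ** (1 - a))),
        "ExR": exr,
        "ExGR": exg - exr,       # Excess green minus excess red
        "VARI": _ratio(g - r, g + r - b),
    }


# Hypothetical pixel: a green-dominated canopy value.
values = rgb_indices(r=0.2, g=0.5, b=0.1)
for name, value in values.items():
    print(f"{name}: {value:.3f}")
```

The plain-Python arithmetic is used only to keep the sketch dependency-free; apart from the zero check in `_ratio`, the same expressions apply elementwise to image arrays. Under these definitions MGVRI equals 2·MPRI / (1 + MPRI²), a monotonic function of MPRI, which may help explain why the two indices correlate so closely in most of the correlation tables.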

| | VARI | ExGR | RGBVI | VEG | ExG | ExR | GLI | MGVRI | MPRI | LAI |
|---|---|---|---|---|---|---|---|---|---|---|
| VARI | 1.00 | | | | | | | | | |
| ExGR | −0.09 | 1.00 | | | | | | | | |
| RGBVI | 0.60 | 0.11 | 1.00 | | | | | | | |
| VEG | 0.85 | −0.25 | 0.64 | 1.00 | | | | | | |
| ExG | −0.30 | 0.26 | −0.06 | −0.17 | 1.00 | | | | | |
| ExR | 0.05 | −0.99 | −0.12 | 0.24 | −0.12 | 1.00 | | | | |
| GLI | −0.44 | 0.33 | −0.17 | −0.34 | 0.87 | −0.21 | 1.00 | | | |
| MGVRI | −0.14 | −0.45 | −0.16 | −0.01 | 0.62 | 0.55 | 0.52 | 1.00 | | |
| MPRI | −0.14 | −0.45 | −0.16 | −0.01 | 0.62 | 0.55 | 0.52 | 1.00 | 1.00 | |
| LAI | 0.36 | 0.08 | 0.24 | 0.25 | 0.11 | −0.07 | 0.06 | 0.16 | 0.16 | 1.00 |

| | VARI | ExGR | RGBVI | VEG | ExG | ExR | GLI | MGVRI | MPRI | LAI |
|---|---|---|---|---|---|---|---|---|---|---|
| VARI | 1.00 | | | | | | | | | |
| ExGR | 0.95 | 1.00 | | | | | | | | |
| RGBVI | −0.77 | −0.79 | 1.00 | | | | | | | |
| VEG | 0.57 | 0.68 | −0.40 | 1.00 | | | | | | |
| ExG | 0.90 | 0.99 | −0.78 | 0.70 | 1.00 | | | | | |
| ExR | −0.99 | −0.94 | 0.73 | −0.58 | −0.89 | 1.00 | | | | |
| GLI | 0.80 | 0.84 | −0.60 | 0.82 | 0.82 | −0.81 | 1.00 | | | |
| MGVRI | 0.84 | 0.83 | −0.60 | 0.68 | 0.78 | −0.86 | 0.93 | 1.00 | | |
| MPRI | 0.99 | 0.96 | −0.77 | 0.59 | 0.92 | −0.99 | 0.81 | 0.86 | 1.00 | |
| LAI | −0.05 | −0.11 | 0.26 | 0.04 | −0.14 | 0.03 | 0.00 | −0.03 | −0.06 | 1.00 |

| | VARI | ExGR | RGBVI | VEG | ExG | ExR | GLI | MGVRI | MPRI | LAI |
|---|---|---|---|---|---|---|---|---|---|---|
| VARI | 1.00 | | | | | | | | | |
| ExGR | 0.49 | 1.00 | | | | | | | | |
| RGBVI | 0.20 | 0.30 | 1.00 | | | | | | | |
| VEG | 0.29 | 0.24 | 0.78 | 1.00 | | | | | | |
| ExG | 0.54 | 0.43 | 0.89 | 0.74 | 1.00 | | | | | |
| ExR | −0.58 | −0.73 | −0.11 | −0.12 | −0.33 | 1.00 | | | | |
| GLI | 0.68 | 0.36 | 0.66 | 0.58 | 0.77 | −0.20 | 1.00 | | | |
| MGVRI | 0.93 | 0.52 | 0.26 | 0.33 | 0.59 | −0.63 | 0.58 | 1.00 | | |
| MPRI | 0.93 | 0.52 | 0.27 | 0.33 | 0.60 | −0.63 | 0.58 | 1.00 | 1.00 | |
| LAI | 0.34 | 0.22 | 0.03 | 0.07 | 0.16 | −0.38 | 0.15 | 0.39 | 0.39 | 1.00 |

| | VARI | ExGR | RGBVI | VEG | ExG | ExR | GLI | MGVRI | MPRI | LAI |
|---|---|---|---|---|---|---|---|---|---|---|
| VARI | 1.00 | | | | | | | | | |
| ExGR | 0.49 | 1.00 | | | | | | | | |
| RGBVI | 0.74 | 0.26 | 1.00 | | | | | | | |
| VEG | 0.67 | 0.24 | 0.95 | 1.00 | | | | | | |
| ExG | 0.86 | 0.35 | 0.97 | 0.91 | 1.00 | | | | | |
| ExR | 0.46 | 0.93 | 0.25 | 0.21 | 0.31 | 1.00 | | | | |
| GLI | 0.15 | −0.26 | 0.19 | 0.18 | 0.22 | −0.46 | 1.00 | | | |
| MGVRI | 1.00 | 0.50 | 0.71 | 0.66 | 0.85 | 0.45 | 0.16 | 1.00 | | |
| MPRI | 1.00 | 0.50 | 0.71 | 0.66 | 0.85 | 0.45 | 0.16 | 1.00 | 1.00 | |
| LAI | 0.31 | −0.18 | 0.33 | 0.34 | 0.33 | −0.18 | 0.40 | 0.30 | 0.30 | 1.00 |
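The matrices above have the lower-triangular layout of pairwise correlation tables. A minimal sketch of how such a table could be produced is given below, assuming Pearson's r (the extracted text does not state which coefficient was used). The helper names `pearson` and `lower_triangle` and the sample plot values are hypothetical and are not taken from the study.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def lower_triangle(columns: dict[str, list[float]]) -> dict[tuple[str, str], float]:
    """Correlate each variable with itself and with every variable listed before it."""
    names = list(columns)
    table = {}
    for i, row in enumerate(names):
        for col in names[: i + 1]:
            table[(row, col)] = pearson(columns[row], columns[col])
    return table


# Hypothetical per-plot values, for illustration only.
samples = {
    "VARI": [0.10, 0.15, 0.12, 0.20, 0.18],
    "ExG": [0.30, 0.42, 0.35, 0.50, 0.47],
    "LAI": [2.1, 2.6, 2.2, 3.0, 2.7],
}
for (row, col), r_value in lower_triangle(samples).items():
    print(f"{row} x {col}: {r_value:.2f}")
```

Only the lower triangle is computed because the matrix is symmetric, which matches how the tables are laid out.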

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Barbosa, B.D.S.; Araújo e Silva Ferraz, G.; Mendes dos Santos, L.; Santana, L.S.; Bedin Marin, D.; Rossi, G.; Conti, L. Application of RGB Images Obtained by UAV in Coffee Farming. Remote Sens. 2021, 13, 2397. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13122397
