Atmospheric Light Estimation Based Remote Sensing Image Dehazing

Abstract

1. Introduction

- A continuously differentiable function is constructed to learn the optimal parameters of a linear scene depth model, which is then used to estimate the scene depth maps of remote sensing images.

- A framework based on color attenuation and haze-lines is proposed for removing haze from remote sensing images. It achieves effective dehazing with little color distortion.

- A hazy remote sensing image dataset containing both high- and low-resolution images is created as a benchmark. Experimental results on this dataset confirm that the proposed method performs well.
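The first contribution can be illustrated with a minimal sketch. It assumes, following the color attenuation prior [6], that scene depth is linear in the HSV brightness v and saturation s of a pixel, d = θ0 + θ1·v + θ2·s, and it uses the mean squared error as the continuously differentiable objective. The exact function and training data used in this paper are not reproduced here; the names `brightness_saturation`, `predict_depth`, and `fit_linear_depth`, the learning rate, and the epoch count are all hypothetical.

```python
import colorsys
from typing import Iterable, Sequence, Tuple

Theta = Tuple[float, float, float]


def brightness_saturation(rgb: Sequence[float]) -> Tuple[float, float]:
    # HSV value v and saturation s of one RGB pixel with channels in [0, 1].
    _, s, v = colorsys.rgb_to_hsv(*rgb)
    return v, s


def predict_depth(theta: Theta, v: float, s: float) -> float:
    # Assumed linear depth model (color attenuation prior form): d = t0 + t1*v + t2*s.
    t0, t1, t2 = theta
    return t0 + t1 * v + t2 * s


def fit_linear_depth(samples: Iterable[Tuple[float, float, float]],
                     lr: float = 0.5, epochs: int = 2000) -> Theta:
    # Batch gradient descent on the mean squared error, which is continuously
    # differentiable in theta. Each sample is (v, s, reference_depth).
    data = list(samples)
    n = len(data)
    t0 = t1 = t2 = 0.0
    for _ in range(epochs):
        g0 = g1 = g2 = 0.0
        for v, s, d in data:
            err = t0 + t1 * v + t2 * s - d
            g0 += err
            g1 += err * v
            g2 += err * s
        # Gradient of (1/n) * sum(err^2) is (2/n) * sum(err * [1, v, s]).
        t0 -= lr * 2.0 * g0 / n
        t1 -= lr * 2.0 * g1 / n
        t2 -= lr * 2.0 * g2 / n
    return t0, t1, t2


# Usage: recover known (hypothetical) coefficients from noise-free synthetic samples.
true_theta = (0.1, 1.0, -0.8)
grid = [i / 10 for i in range(11)]
samples = [(v, s, predict_depth(true_theta, v, s)) for v in grid for s in grid]
theta = fit_linear_depth(samples)
```

Gradient descent is used here only to make the differentiable objective explicit; for a plain squared-error loss, a closed-form least-squares solve would yield the same coefficients.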

2. The Development of Remote Sensing Image Dehazing

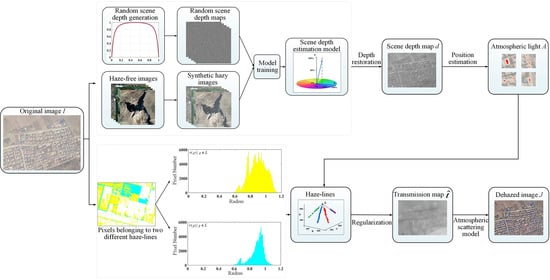

3. The Proposed Dehazing Framework for Remote Sensing Images

3.1. Scene Depth Map Restoration

3.1.1. The Definition of the Linear Model

3.1.2. Training Data Collection

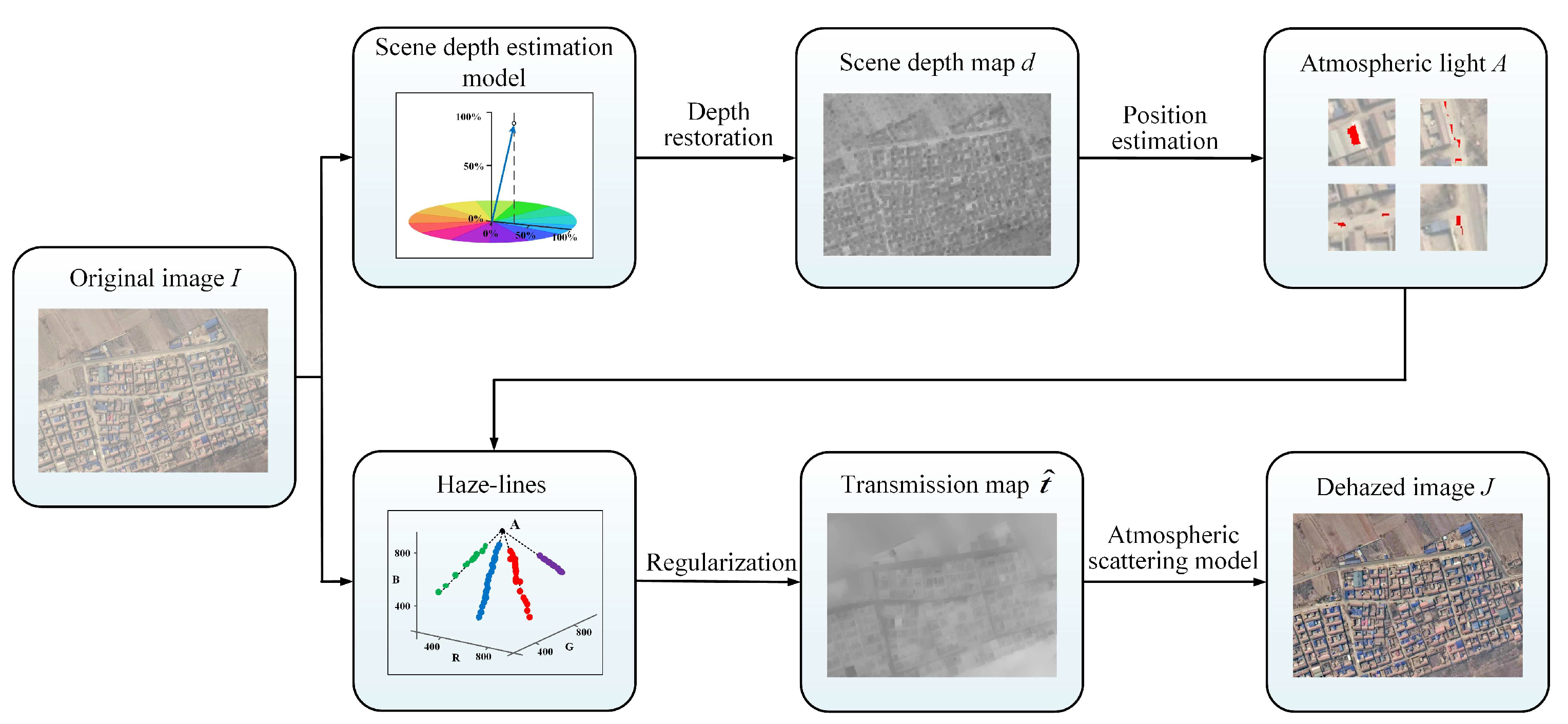

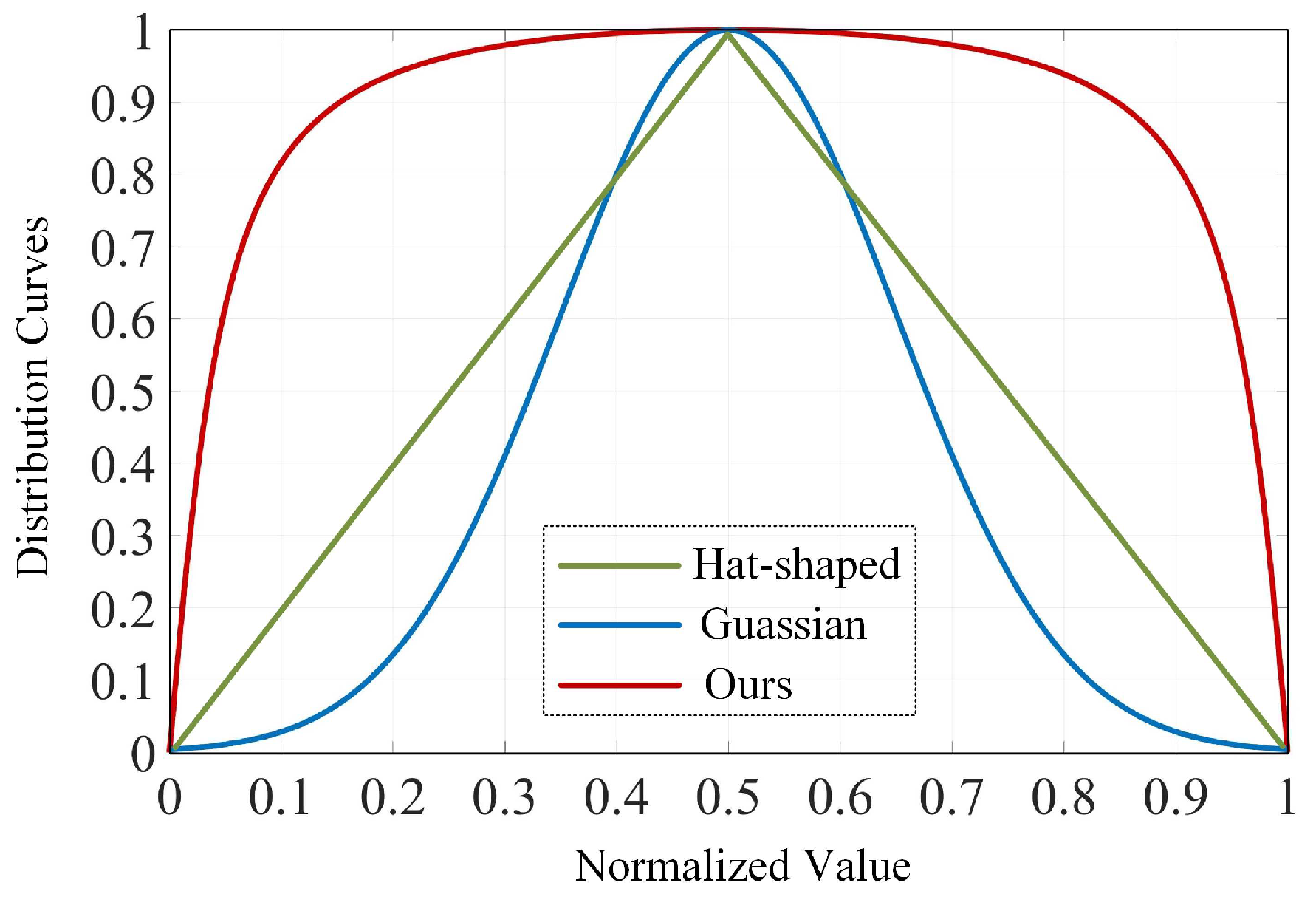

3.1.3. Learning Strategy

3.1.4. Scene Depth Restoration

3.2. Atmospheric Light Estimation

3.3. Transmission Map Estimation

3.4. Haze Removal

| Algorithm 1. The Proposed Dehazing Algorithm for Remote Sensing Images |
|---|
| Input: haze-free remote sensing images; hazy remote sensing image |
| Output: dehazed image; scene depth map; atmospheric light A; transmission map |
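The inputs and outputs of Algorithm 1 can be related through the standard atmospheric scattering model I(x) = J(x)·t(x) + A·(1 - t(x)), with transmission t(x) = exp(-β·d(x)) as in the color attenuation prior [6]. The sketch below rests on several assumptions. The atmospheric light step is a CAP-style placeholder (the brightest hazy pixel among the farthest 0.1% of the depth map), not the haze-lines-based estimate proposed in this paper. The depth map is taken as given, and `beta`, `t_min`, and all function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # RGB, each channel in [0, 1]


def estimate_atmospheric_light(image: Sequence[Pixel], depth: Sequence[float],
                               top_fraction: float = 0.001) -> Pixel:
    # Placeholder in the style of the color attenuation prior, NOT this paper's
    # estimator: among the farthest top_fraction of pixels in the depth map,
    # return the hazy pixel with the highest intensity.
    k = max(1, int(len(depth) * top_fraction))
    farthest = sorted(range(len(depth)), key=lambda i: depth[i], reverse=True)[:k]
    return image[max(farthest, key=lambda i: sum(image[i]))]


def transmission_map(depth: Sequence[float], beta: float = 1.0) -> List[float]:
    # t(x) = exp(-beta * d(x)); beta is a hypothetical scattering coefficient.
    return [math.exp(-beta * d) for d in depth]


def recover_radiance(image: Sequence[Pixel], trans: Sequence[float],
                     airlight: Pixel, t_min: float = 0.1) -> List[Pixel]:
    # Invert I = J * t + A * (1 - t). Clamping t at t_min avoids amplifying
    # noise in dense haze, and each channel is clipped back to [0, 1].
    out = []
    for pixel, t in zip(image, trans):
        t = max(t, t_min)
        out.append(tuple(min(1.0, max(0.0, (c - a) / t + a))
                         for c, a in zip(pixel, airlight)))
    return out


def dehaze(image, depth, beta=1.0, t_min=0.1):
    # Returns the dehazed image, atmospheric light A, and transmission map.
    airlight = estimate_atmospheric_light(image, depth)
    trans = transmission_map(depth, beta)
    return recover_radiance(image, trans, airlight, t_min), airlight, trans


# Usage: synthesize a hazy image from known J, A, and d, then dehaze it.
A_true = (0.9, 0.9, 0.9)
J_true = [(0.2, 0.4, 0.6), (0.5, 0.3, 0.1), (0.7, 0.7, 0.2), (0.1, 0.2, 0.3)]
depth = [0.2, 0.5, 1.0, 20.0]  # the last pixel is far away, so it is almost pure airlight
hazy = [tuple(j * math.exp(-d) + a * (1 - math.exp(-d)) for j, a in zip(px, A_true))
        for px, d in zip(J_true, depth)]
J_est, A_est, t_est = dehaze(hazy, depth)
```

Pixels whose transmission exceeds `t_min` are recovered almost exactly in this toy case. The farthest pixel is not, because its transmission is clamped, which is the intended trade-off of the lower bound.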

4. Comparative Experiments and Analysis

4.1. Experiment Preparation

4.2. Comparative Experiment

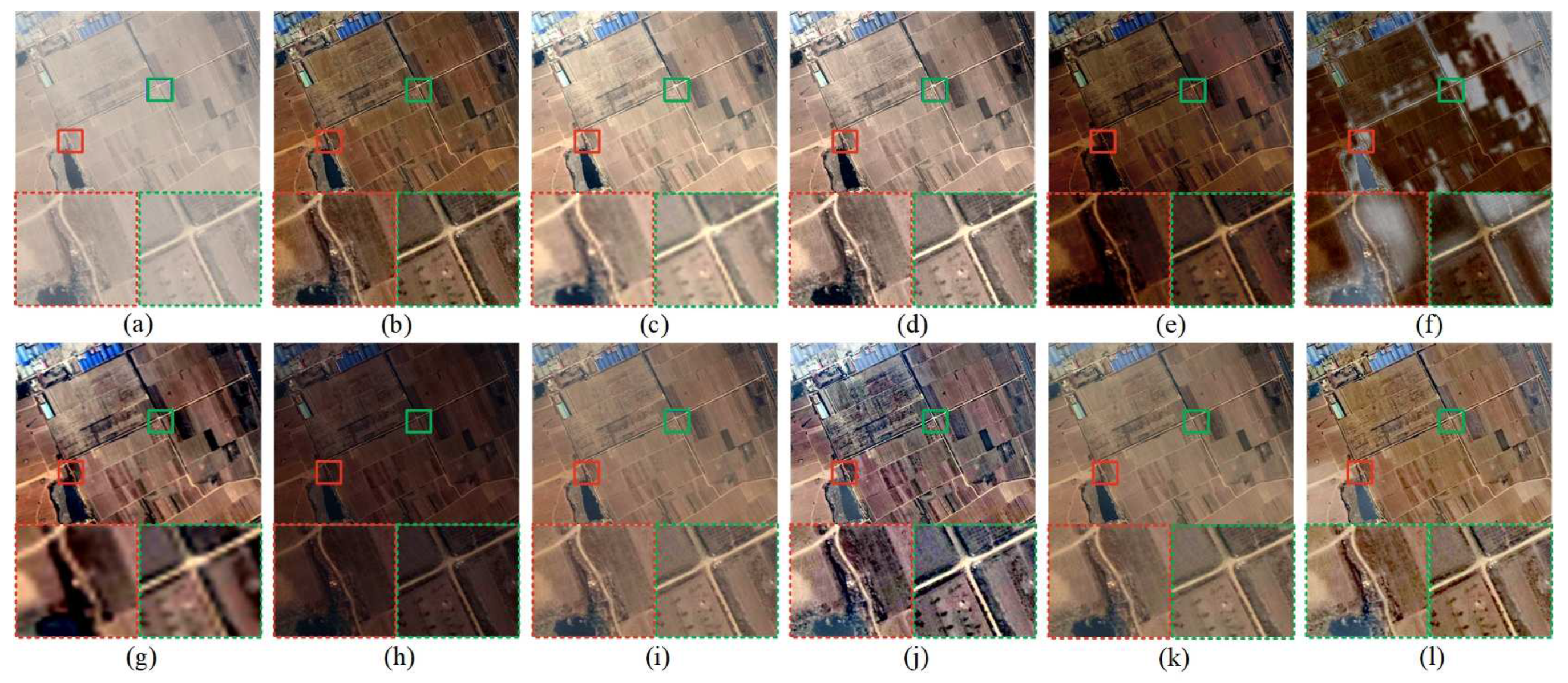

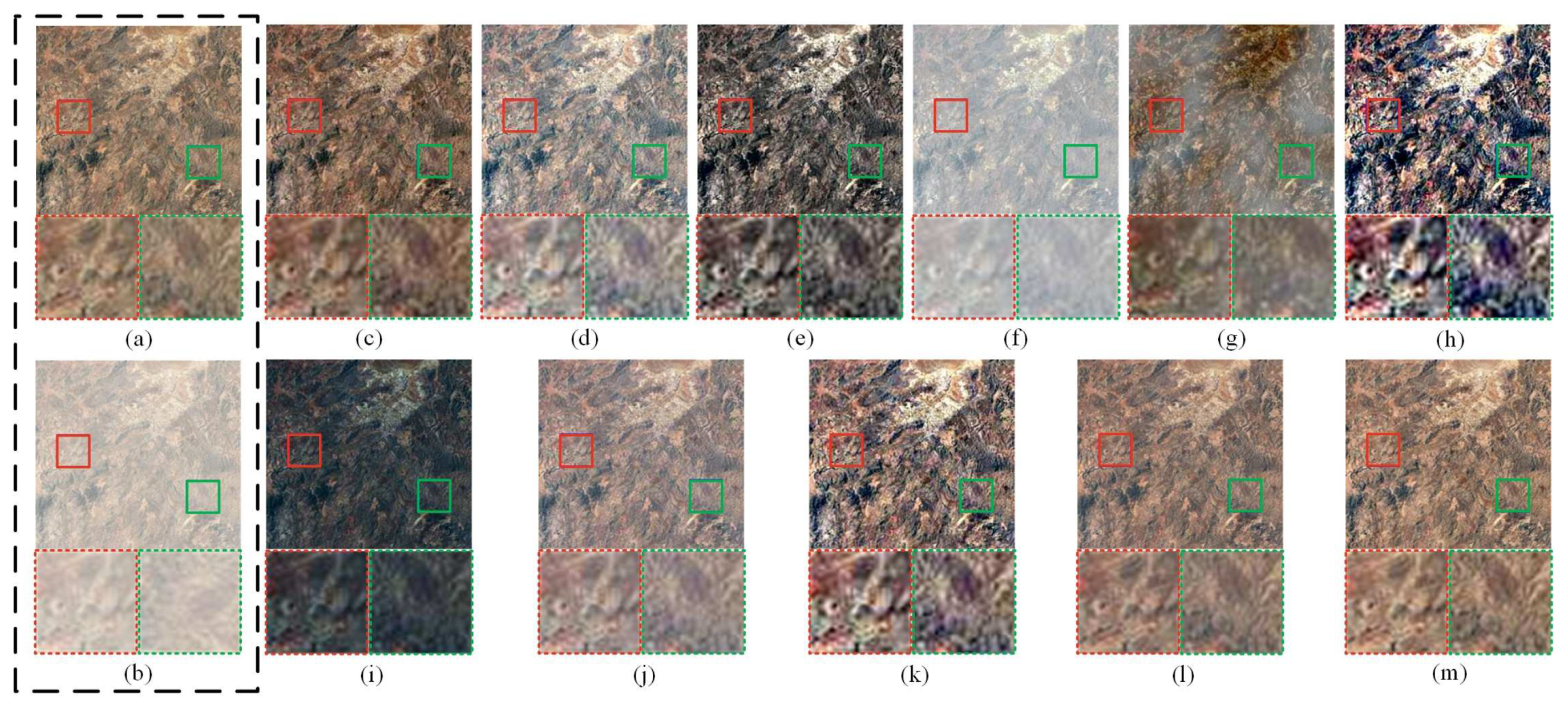

4.2.1. Comparative Experiment in Real Hazy Scenes

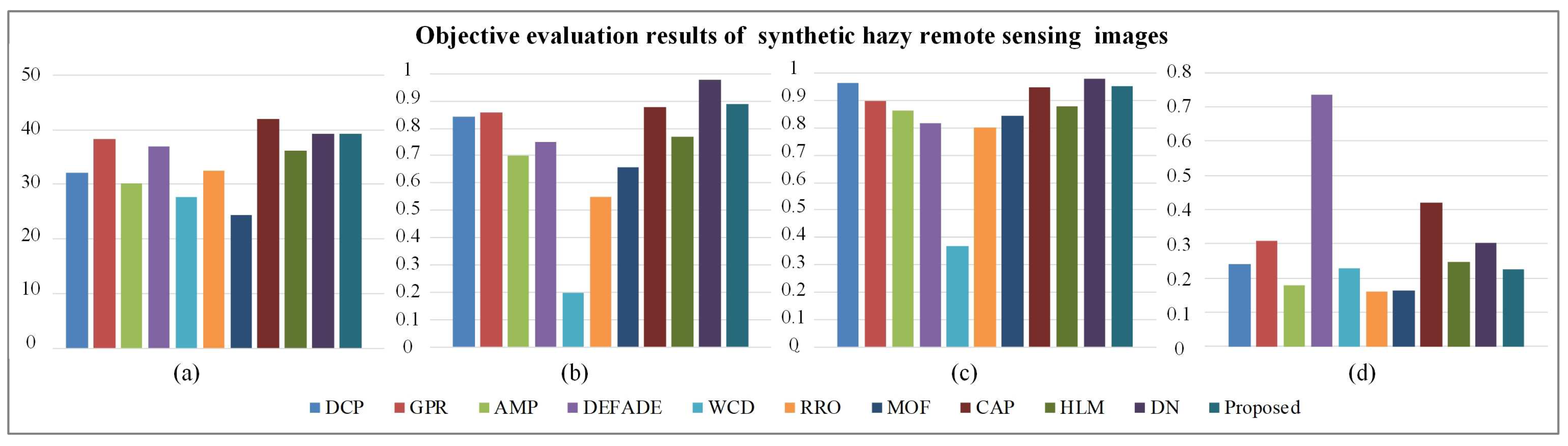

4.2.2. Comparative Experiment in Synthetic Hazy Scenes

4.2.3. Comparison of Average Processing Time

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Yang, Y.; Newsam, S. Geographic image retrieval using local invariant features. IEEE Trans. Geosci. Remote Sens. 2012, 51, 818–832.

2. Liu, Q.; Gao, X.; He, L.; Lu, W. Haze removal for a single visible remote sensing image. Signal Process. 2017, 137, 33–43.

3. Xu, L.; Zhao, D.; Yan, Y.; Kwong, S.; Chen, J.; Duan, L.Y. IDeRs: Iterative dehazing method for single remote sensing image. Inf. Sci. 2019, 489, 50–62.

4. Zheng, M.; Qi, G.; Zhu, Z.; Li, Y.; Wei, H.; Liu, Y. Image Dehazing by An Artificial Image Fusion Method based on Adaptive Structure Decomposition. IEEE Sens. J. 2020, 20, 8062–8072.

5. Israël, H. Die Sichtweite im Nebel und die Möglichkeiten ihrer künstlichen Beeinflussung; Springer: Berlin/Heidelberg, Germany, 2013; Volume 640, pp. 33–55.

6. Zhu, Q.; Mai, J.; Shao, L. A fast single image haze removal algorithm using color attenuation prior. IEEE Trans. Image Process. 2015, 24, 3522–3533.

7. Berman, D.; Treibitz, T.; Avidan, S. Single Image Dehazing Using Haze-Lines. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 720–734.

8. Gao, Y.; Hu, H.M.; Wang, S.; Li, B. A fast image dehazing algorithm based on negative correction. Signal Process. 2014, 103, 380–398.

9. Thomas, G.; Flores-Tapia, D.; Pistorius, S. Histogram specification: A fast and flexible method to process digital images. IEEE Trans. Instrum. Meas. 2011, 60, 1565–1578.

10. Yu, L.; Liu, X.; Liu, G. A new dehazing algorithm based on overlapped sub-block homomorphic filtering. In Proceedings of the Eighth International Conference on Machine Vision (ICMV 2015), Barcelona, Spain, 19–20 November 2015.

11. Li, Y.C.; Du, L.; Liu, S. Image Enhancement by Lift-Wavelet Based Homomorphic Filtering. In Proceedings of the International Conference on Electronics, Communications and Control, Xi’an, China, 23–25 August 2012; pp. 1623–1627.

12. Qi, G.; Chang, L.; Luo, Y.; Chen, Y.; Zhu, Z.; Wang, S. A Precise Multi-Exposure Image Fusion Method Based on Low-level Features. Sensors 2020, 20, 1597.

13. Zhu, Z.; Wei, H.; Hu, G.; Li, Y.; Qi, G.; Mazur, N. A Novel Fast Single Image Dehazing Algorithm Based on Artificial Multiexposure Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 1–23.

14. Zhao, D.; Xu, L.; Yan, Y.; Chen, J.; Duan, L.Y. Multi-scale Optimal Fusion model for single image dehazing. Signal Process. Image Commun. 2019, 74, 253–265.

15. Zhu, Z.; Yin, H.; Chai, Y.; Li, Y.; Qi, G. A novel multi-modality image fusion method based on image decomposition and sparse representation. Inf. Sci. 2018, 432, 516–529.

16. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

17. Chiang, J.; Chen, Y. Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 2011, 21, 1756–1769.

18. Zhang, Y.; Guindon, B.; Cihlar, J. An image transform to characterize and compensate for spatial variations in thin cloud contamination of Landsat images. Remote Sens. Environ. 2002, 82, 173–187.

19. Chen, S.; Chen, X.; Chen, X.; Chen, J.; Cao, X.; Shen, M.; Yang, W.; Cui, X. A novel cloud removal method based on IHOT and the cloud trajectories for Landsat imagery. Remote Sens. 2018, 10, 1040.

20. Ni, W.; Gao, X.; Wang, Y. Single satellite image dehazing via linear intensity transformation and local property analysis. Neurocomputing 2016, 175, 25–39.

21. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

22. Fan, X.; Wang, Y.; Tang, X.; Gao, R.; Luo, Z. Two-layer Gaussian process regression with example selection for image dehazing. IEEE Trans. Circuits Syst. Video Technol. 2016, 27, 2505–2517.

23. Singh, D.; Kumar, V. Image dehazing using Moore neighborhood-based gradient profile prior. Signal Process. Image Commun. 2019, 70, 131–144.

24. Zhu, M.; He, B.; Liu, J.; Yu, J. Boosting dark channel dehazing via weighted local constant assumption. Signal Process. 2020, 171, 107453.

25. Zhan, J.; Gao, Y.; Liu, X. Measuring the optical scattering characteristics of large particles in visible remote sensing. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 4666–4669.

26. Wang, K.; Qi, G.; Zhu, Z.; Chai, Y. A Novel Geometric Dictionary Construction Approach for Sparse Representation Based Image Fusion. Entropy 2017, 19, 306.

27. Pan, X.; Xie, F.; Jiang, Z.; Yin, J. Haze removal for a single remote sensing image based on deformed haze imaging model. IEEE Signal Process. Lett. 2015, 22, 1806–1810.

28. Singh, D.; Kumar, V. Dehazing of remote sensing images using improved restoration model based dark channel prior. Imaging Sci. J. 2017, 65, 282–292.

29. Jiang, H.; Lu, N.; Yao, L.; Zhang, X. Single image dehazing for visible remote sensing based on tagged haze thickness maps. Remote Sens. Lett. 2018, 9, 627–635.

30. Bahat, Y.; Irani, M. Blind dehazing using internal patch recurrence. In Proceedings of the IEEE International Conference on Computational Photography (ICCP) 2016, Evanston, IL, USA, 13–15 May 2016; pp. 1–9.

31. Tang, K.; Yang, J.; Wang, J. Investigating haze-relevant features in a learning framework for image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 24–27 July 2014; pp. 2995–3000.

32. Lee, S.; Yun, S.; Nam, J.H.; Won, C.S.; Jung, S.W. A review on dark channel prior based image dehazing algorithms. EURASIP J. Image Video Process. 2016, 2016, 4.

33. Sun, X.; Li, C.; Liu, L.; Yin, J.; Lei, Y.; Zhao, J. Dynamic Monitoring of Haze Pollution Using Satellite Remote Sensing. IEEE Sens. J. 2020, 20, 11802–11811.

34. Bilal, M.; Nichol, J.E.; Bleiweiss, M.P.; Dubois, D. A Simplified high resolution MODIS Aerosol Retrieval Algorithm (SARA) for use over mixed surfaces. Remote Sens. Environ. 2013, 136, 135–145.

35. Lolli, S.; Alparone, L.; Garzelli, A.; Vivone, G. Haze Correction for Contrast-Based Multispectral Pansharpening. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2255–2259.

36. Chakrabarti, A.; Scharstein, D.; Zickler, T.E. An Empirical Camera Model for Internet Color Vision. In Proceedings of the BMVC, London, UK, 7–10 September 2009; Volume 1, p. 4.

37. Lin, H.; Kim, S.J.; Süsstrunk, S.; Brown, M.S. Revisiting radiometric calibration for color computer vision. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 129–136.

38. Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

39. Yeganeh, H.; Wang, Z. Objective Quality Assessment of Tone-Mapped Images. IEEE Trans. Image Process. 2013, 22, 657–667.

40. Choi, L.; You, J.; Bovik, A. Referenceless prediction of perceptual fog density and perceptual image defogging. IEEE Trans. Image Process. 2015, 24, 3888–3901.

41. Hore, A.; Ziou, D. Image quality metrics: PSNR vs. SSIM. In Proceedings of the International Conference on Pattern Recognition, Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

42. Salazar-Colores, S.; Cruz-Aceves, I.; Ramos-Arreguin, J.M. Single image dehazing using a multilayer perceptron. J. Electron. Imaging 2018, 27, 1–11.

43. Shin, J.; Kim, M.; Paik, J.; Lee, S. Radiance-Reflectance Combined Optimization and Structure-Guided l0-Norm for Single Image Dehazing. IEEE Trans. Multimed. 2019, 22, 30–44.

| Abbreviation | Method |
|---|---|
| DCP | Dark Channel Prior [21] |
| GPR | Gaussian Process Regression [22] |
| AMP | A Multi-Layer Perceptron [42] |
| DEFADE | DEnsity of Fog Assessment based DEfogger [40] |
| WCD | Wavelength Compensation and Dehazing [17] |
| RRO | Radiance-Reflectance Combined Optimization [43] |
| MOF | Multi-scale Optimal Fusion [14] |
| CAP | Color Attenuation Prior [6] |
| HLM | Haze-lines Model [7] |
| DN | DehazeNet [16] |
| Proposed | The proposed method |

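Objective evaluation in real hazy scenes. Higher TMQI and lower FADE are better; the numbers in parentheses give the ranks of the four best methods for each metric.

| Method | TMQI | FADE |
|---|---|---|
| DCP | 0.8602 | 0.3646 (3) |
| GPR | 0.8582 | 0.6290 |
| AMP | 0.9050 (2) | 0.4110 (4) |
| DEFADE | 0.8917 (4) | 0.4560 |
| WCD | 0.5698 | 0.5757 |
| RRO | 0.8749 | 0.5774 |
| MOF | 0.7734 | 0.6401 |
| CAP | 0.8780 | 0.7366 |
| HLM | 0.9044 (3) | 0.3042 (1) |
| DN | 0.8654 | 0.5598 |
| Proposed | 0.9055 (1) | 0.3348 (2) |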

Objective evaluation in synthetic hazy scenes. Higher PSNR, SSIM, and TMQI and lower FADE are better; the numbers in parentheses give the ranks of the four best methods for each metric.

| Method | PSNR (dB) | SSIM | TMQI | FADE |
|---|---|---|---|---|
| DCP | 27.1712 | 0.8423 | 0.9659 (2) | 0.2402 |
| GPR | 33.2397 (4) | 0.8564 (4) | 0.8982 | 0.3089 |
| AMP | 25.1680 | 0.6989 | 0.8646 | 0.1780 (3) |
| DEFADE | 31.9961 | 0.7495 | 0.8180 | 0.7363 |
| WCD | 22.7210 | 0.1972 | 0.3690 | 0.2272 |
| RRO | 27.5368 | 0.5504 | 0.8009 | 0.1606 (1) |
| MOF | 24.4369 | 0.6563 | 0.8442 | 0.1648 (2) |
| CAP | 36.9671 (1) | 0.8764 (3) | 0.9469 (4) | 0.4191 |
| HLM | 31.2391 | 0.7674 | 0.8786 | 0.2466 |
| DN | 34.2353 (3) | 0.9786 (1) | 0.9793 (1) | 0.3032 |
| Proposed | 34.2522 (2) | 0.8903 (2) | 0.9519 (3) | 0.2243 (4) |
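The PSNR values in the table above follow the usual definition PSNR = 10·log10(MAX² / MSE), where MSE is the mean squared error between a dehazed image and its haze-free reference. A minimal sketch over flattened pixel values, assuming intensities in [0, 1] (so `max_value = 1.0`). The peak value and color handling used to produce the reported numbers are not stated here, and `psnr` is a hypothetical helper name.

```python
import math
from typing import Sequence


def psnr(reference: Sequence[float], test: Sequence[float], max_value: float = 1.0) -> float:
    # Peak signal-to-noise ratio in dB between two equally sized, flattened images.
    if len(reference) != len(test) or not reference:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Usage: every pixel is off by 0.1, so the MSE is 0.01 and the PSNR is about 20 dB.
reference = [0.0, 0.5, 1.0, 0.25]
dehazed = [0.1, 0.6, 0.9, 0.35]
score = psnr(reference, dehazed)
```

For 8-bit images, `max_value` would be 255 instead. Using the wrong peak value shifts every score by a constant, so it must match the data range.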

Average processing time of each method.

| | DCP | GPR | AMP | DEFADE | WCD | RRO | MOF | CAP | HLM | DN | Proposed |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Time (s) | 9.3398 | 989.6070 | 4.3291 | 217.9994 | 16.9551 | 5.8158 | 1.4304 | 5.9325 | 25.7111 | 17.5564 | 19.9206 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, Z.; Luo, Y.; Wei, H.; Li, Y.; Qi, G.; Mazur, N.; Li, Y.; Li, P. Atmospheric Light Estimation Based Remote Sensing Image Dehazing. Remote Sens. 2021, 13, 2432. https://doi.org/10.3390/rs13132432


Zhu, Zhiqin, Yaqin Luo, Hongyan Wei, Yong Li, Guanqiu Qi, Neal Mazur, Yuanyuan Li, and Penglong Li. 2021. "Atmospheric Light Estimation Based Remote Sensing Image Dehazing" Remote Sensing 13, no. 13: 2432. https://doi.org/10.3390/rs13132432