A Convolutional Neural Network Based on Grouping Structure for Scene Classification

Abstract

1. Introduction

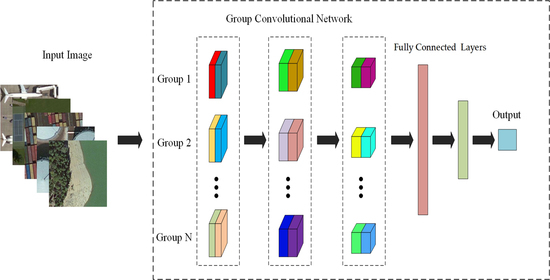

- A convolutional neural network framework, MGCNN, is proposed based on a group convolution scheme. A hyper-parameter C divides the feature extraction path into multiple channels, which improves the efficiency of feature extraction while enriching the feature space.

- The attention mechanism was combined with the group convolution scheme and incorporated into MGCNN, yielding a modified model, MGCNN-A. The influence of grouping and attention on the feature extraction performance of MGCNN-A, and the effect of the hyper-parameter C under a fixed number of feature map channels, were comprehensively investigated. The features extracted by MGCNN and MGCNN-A are also compared and discussed.
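The efficiency argument behind the grouping hyper-parameter C can be illustrated with a weight count. The sketch below assumes a generic grouped convolution, in which each of the C groups maps its own slice of input channels to its own slice of output channels. The function name `conv_params` and the 256-channel, 3 × 3 layer are hypothetical choices for illustration and are not taken from the MGCNN architecture.

```python
def conv_params(in_channels: int, out_channels: int, kernel_size: int, groups: int = 1) -> int:
    """Weight count (bias excluded) of a 2D convolution split into `groups` independent paths."""
    if in_channels % groups or out_channels % groups:
        raise ValueError("channel counts must be divisible by the number of groups")
    # Each group maps in_channels/groups inputs to out_channels/groups outputs.
    per_group = kernel_size * kernel_size * (in_channels // groups) * (out_channels // groups)
    return per_group * groups


# Hypothetical layer: 3 x 3 kernel, 256 input channels, 256 output channels.
# groups=1 is an ordinary convolution; the other values mirror C = 2, 4, 8, 16.
param_counts = {c: conv_params(256, 256, 3, groups=c) for c in (1, 2, 4, 8, 16)}

for c, n in param_counts.items():
    print(f"C={c:2d}: {n:,} weights")
```

With the total channel count held fixed, the number of weights in such a layer falls in proportion to 1/C, which is why a larger C is cheaper but gives each group fewer channels to work with.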

2. Methodology

2.1. Framework of Model

2.2. Grouped Convolution Block

2.3. Grouped Attention Block

2.3.1. Channel Attention

2.3.2. Grouped Attention Block

2.4. Data Augmentation and Cross Validation

2.5. Overall Accuracy and Confusion Matrix
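Assuming the standard definition, overall accuracy is the number of correctly classified samples (the diagonal of the confusion matrix) divided by the total number of samples. A minimal sketch follows; the function name `overall_accuracy` and the 3-class matrix are hypothetical and are not results from the paper.

```python
def overall_accuracy(confusion: list[list[int]]) -> float:
    """Overall accuracy from a square confusion matrix (rows: true class, columns: predicted class)."""
    total = sum(sum(row) for row in confusion)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")
    # Correct predictions sit on the main diagonal.
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


# Hypothetical 3-class confusion matrix, 50 samples per true class.
cm = [
    [48, 1, 1],
    [2, 45, 3],
    [0, 4, 46],
]
oa = overall_accuracy(cm)
print(f"Overall accuracy: {100 * oa:.3f}%")
```

The off-diagonal entries show which classes are confused with each other, which a single overall accuracy value cannot reveal.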

3. Experiments and Result

3.1. Datasets

3.2. Experimental Setup

3.3. Experimental Results

3.3.1. Experiment on RSI-CB Dataset

Data Augmentation Comparative Experiment

MGCNN Experiment

MGCNN-A Experiment

3.3.2. Experiment on UC-Merced Dataset

Data Augmentation Comparative Experiment

MGCNN Experiment

MGCNN-A Experiment

4. Discussions

4.1. Generalization Capability

4.2. Feature Extraction

4.3. Limitations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layers | ResNet-50 | MGCNN | MGCNN-A |
|---|---|---|---|
| Conv1 | 7 × 7, 64, stride 2 | 7 × 7, 64, stride 2 | 7 × 7, 64, stride 2 |
| Conv2 | 3 × 3 max pool, stride 2 | 3 × 3 max pool, stride 2 | 3 × 3 max pool, stride 2 |
| Conv3 | | | |
| Conv4 | | | |
| Conv5 | | | |
| FC | Global average pool, FC, Softmax | Global average pool, FC, Softmax | Global average pool, FC, Softmax |

| Method | Overall Accuracy (%), Without Data Augmentation | Overall Accuracy (%), With Data Augmentation |
|---|---|---|
| VGGNet-16 | 81.831 | 89.849 |
| GoogLeNet-22 | 91.815 | 93.791 |
| ResNet-50 | 93.417 | 94.930 |

| Method | Overall Accuracy (%) |
|---|---|
| ResNet-50 | 94.930 |
| MGCNN-C2 | 96.859 |
| MGCNN-C4 | 96.881 |
| MGCNN-C8 | 96.409 |
| MGCNN-C16 | 96.303 |

| Method | Overall Accuracy (%) |
|---|---|
| ResNet-50 | 94.930 |
| MGCNN-A2 | 95.704 |
| MGCNN-A4 | 96.294 |
| MGCNN-A8 | 95.513 |
| MGCNN-A16 | 95.626 |

| Method | Overall Accuracy (%), Without Data Augmentation | Overall Accuracy (%), With Data Augmentation |
|---|---|---|
| VGGNet-16 | 76.524 | 79.381 |
| GoogLeNet-22 | 77.810 | 85.286 |
| ResNet-50 | 81.524 | 88.857 |

| Method | Overall Accuracy (%) |
|---|---|
| ResNet-50 | 88.857 |
| MGCNN-C2 | 91.190 |
| MGCNN-C4 | 91.905 |
| MGCNN-C8 | 91.096 |
| MGCNN-C16 | 90.143 |

| Method | Overall Accuracy (%) |
|---|---|
| ResNet-50 | 88.857 |
| MGCNN-A2 | 90.286 |
| MGCNN-A4 | 91.524 |
| MGCNN-A8 | 90.667 |
| MGCNN-A16 | 90.429 |

| Model | Accuracy (%), Airplane | Accuracy (%), Parking Lot |
|---|---|---|
| ResNet-50 | 82 | 87 |
| MGCNN-C4 | 84 | 92 |
| MGCNN-A4 | 86 | 95 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, X.; Zhang, Z.; Zhang, W.; Yi, Y.; Zhang, C.; Xu, Q. A Convolutional Neural Network Based on Grouping Structure for Scene Classification. Remote Sens. 2021, 13, 2457. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13132457
