Moving Target Shadow Analysis and Detection for ViSAR Imagery

Abstract

1. Introduction

- (1) A fast factorized back projection (FFBP) based SAR video frame formation method: This method generates closely matched SAR video frames directly from the SAR echo. It is applicable to multi-mode SAR data, needs no additional registration processing, and is flexible, accurate, and computationally efficient.

- (2) Shadow formation mechanism and velocity condition analysis: Based on the SAR imaging mechanism and the radar equation, the relationship between the shadow and the scattering characteristics, illumination time, imaging geometry, target size, and processing parameters is analyzed, and the velocity condition for ground shadow formation under given parameters is obtained. This provides a basis for ViSAR system design and for shadow-based moving target detection.

- (3) ViSAR shadow detection method: Based on an analysis of the shadow features in ViSAR, a moving target shadow detection method that combines background difference and symmetric difference is adopted. The basic idea is to make full use of the temporal information in the ViSAR image sequence together with the shadow features. The method is fast to compute and robust.
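
The combination of background difference and symmetric difference named in contribution (3) can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function name `detect_moving_shadow`, the median background model, the thresholds `bg_ratio` and `diff_ratio`, and the logical-AND fusion rule are all assumptions made for illustration, and the frames are assumed to be co-registered amplitude images.

```python
import numpy as np


def detect_moving_shadow(frames, k, bg_ratio=0.5, diff_ratio=0.2):
    """Return a boolean shadow mask for frame k (1 <= k <= len(frames) - 2).

    frames: array of shape (T, H, W) holding co-registered amplitude images.
    bg_ratio, diff_ratio: hypothetical thresholds, not taken from the paper.
    """
    frames = np.asarray(frames, dtype=float)
    if not 1 <= k <= frames.shape[0] - 2:
        raise ValueError("k needs a previous and a next frame")

    # Background estimate: per-pixel temporal median over the whole sequence.
    background = np.median(frames, axis=0)
    current = frames[k]

    # Background difference: keep pixels clearly darker than the background.
    darker = current < bg_ratio * background

    # Symmetric difference: keep pixels that differ from BOTH neighbours.
    scale = diff_ratio * background
    changed_prev = np.abs(current - frames[k - 1]) > scale
    changed_next = np.abs(current - frames[k + 1]) > scale

    # Assumed fusion rule: a pixel is shadow only if both cues agree.
    return darker & changed_prev & changed_next
```

With the AND rule, a shadow that overlaps its own position in adjacent frames is only partially marked, so a practical pipeline would likely add morphological filling or a different fusion rule.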

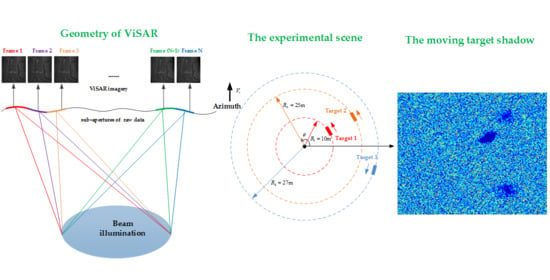

2. ViSAR Formation

2.1. Frame Rate Analysis for ViSAR

2.2. SAR Video Formation Method

3. Moving Target Shadow Formation

3.1. Mechanism of Shadow

3.2. Analysis of Ground Moving Target Shadow

3.3. Shadow-Based Ground Moving Target Detection

4. Experiment Results and Discussion

4.1. Uniform Scene Simulation

4.2. Real Data Simulation

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Symbol | Term Name |
|---|---|
| | carrier wavelength |
| | platform velocity |
| | beam center slant distance |
| | squint angle |
| | equivalent antenna azimuth length |
| | azimuth size of the antenna |
| | synthetic aperture time of the sub-aperture image |
| | azimuth bandwidth of the sub-aperture image |
| | equivalent azimuth resolution of the sub-aperture image |
| | non-overlap frame rate |
| | overlap frame rate |
| | overlap ratio of the SAR image |
| | transmitted signal bandwidth |
| | speed of light |
| | slant range from the antenna phase center to the point target |
| | the set of obstacle surfaces vector |
| | obstacle surface |
| | radar position vector |
| | instantaneous vector from the radar to the obstacle surface |
| | shadow projected on the ground |
| | signal-to-noise ratio (SNR) of a static point target |
| | effective RCS |
| | azimuth resolution |
| | range resolution |
| | SNR of the area targets |
| | threshold that can clearly distinguish the shadow and background |
| | range velocity of moving target |
| | azimuth velocity of moving target |
| | the size of target along the range |
| | the size of target along the range |
| | sheltering time of scatterer |


| Experiment | Parameter | Value |
|---|---|---|
| All experiments | Center Frequency | 35 GHz |
| | Bandwidth | 600 MHz |
| | Working Mode | Spotlight |
| | Radii | [10 m, 25 m, 27 m] |
| | Scene Size | 120 m × 120 m |
| | Moving Target Size | 5 m × 2 m |
| | Pulse Width | 10 μs |
| Experiment 1 | Platform Velocity | 100 m/s |
| | Platform Height | 9 km |
| | Scene Center Slant Range | 30 km |
| | Angular Rate | 0.06222 rad/s |
| | PRF | 500 Hz |
| Experiment 2 | Platform Velocity | 100 m/s |
| | Platform Height | 1 km |
| | Scene Center Slant Range | 3 km |
| | Angular Rate | 0.6222 rad/s |
| | PRF | 2200 Hz |
| Experiment 3 | Platform Velocity | 200 m/s |
| | Platform Height | 9 km |
| | Scene Center Slant Range | 30 km |
| | Angular Rate | 0.12444 rad/s |
| | PRF | 500 Hz |
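
A few derived quantities follow directly from the simulation parameters above. The sketch below uses only textbook relations: wavelength c/f, nominal range resolution c/(2B) with no window broadening, and a flat-earth grazing angle asin(H/R). The function names are hypothetical and the flat-earth geometry is an assumption, not something stated in the table.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def wavelength(center_freq_hz):
    """Carrier wavelength in metres."""
    return C / center_freq_hz


def range_resolution(bandwidth_hz):
    """Nominal slant-range resolution c / (2B), ignoring window broadening."""
    return C / (2.0 * bandwidth_hz)


def grazing_angle_deg(height_m, slant_range_m):
    """Grazing angle at scene center, assuming flat-earth geometry."""
    return math.degrees(math.asin(height_m / slant_range_m))


if __name__ == "__main__":
    print(f"wavelength at 35 GHz: {wavelength(35e9) * 1e3:.2f} mm")
    print(f"range resolution at 600 MHz: {range_resolution(600e6):.3f} m")
    # (platform height, scene center slant range) for Experiments 1 and 2
    for name, h, r in [("Experiment 1", 9e3, 30e3), ("Experiment 2", 1e3, 3e3)]:
        print(f"{name}: grazing angle {grazing_angle_deg(h, r):.2f} deg")
```

Experiments 1 and 3 share the same height and slant range, so under this assumption they share the same grazing angle as well.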

| Parameter | Value |
|---|---|
| Center Frequency | 10/35 GHz |
| Bandwidth | 600 MHz |
| Working Mode | Spotlight |
| Moving Target Size | 5 m × 2 m |
| Number of Moving Targets | 2 |
| Pulse Width | 10 μs |
| Platform Velocity | 100 m/s |
| Platform Height | 8 km |
| Scene Center Slant Range | 12 km |
| PRF | 800 Hz |
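
The two center frequencies in this table can be compared in terms of achievable frame rate. The sketch below assumes the common broadside spotlight approximation ρ_a ≈ λR/(2vT), so that the non-overlap frame rate is 1/T and the overlap frame rate is 1/((1 − α)T) for overlap ratio α. The azimuth resolution of 0.25 m and the overlap ratio of 0.9 are illustrative values, not taken from the source, and the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def non_overlap_frame_rate(center_freq_hz, slant_range_m, velocity_mps, az_res_m):
    """Frame rate 1/T with T = lambda * R / (2 * v * rho_a) (broadside assumption)."""
    lam = C / center_freq_hz
    sub_aperture_time = lam * slant_range_m / (2.0 * velocity_mps * az_res_m)
    return 1.0 / sub_aperture_time


def overlap_frame_rate(frame_rate_hz, overlap_ratio):
    """Frame rate when consecutive sub-apertures share a fraction overlap_ratio."""
    if not 0.0 <= overlap_ratio < 1.0:
        raise ValueError("overlap_ratio must lie in [0, 1)")
    return frame_rate_hz / (1.0 - overlap_ratio)


if __name__ == "__main__":
    R, V = 12e3, 100.0      # slant range and platform velocity from the table
    RHO_A, ALPHA = 0.25, 0.9  # assumed azimuth resolution (m) and overlap ratio
    for fc in (10e9, 35e9):
        f0 = non_overlap_frame_rate(fc, R, V, RHO_A)
        f1 = overlap_frame_rate(f0, ALPHA)
        print(f"{fc / 1e9:.0f} GHz: {f0:.3f} Hz non-overlap, {f1:.2f} Hz with overlap")
```

Under these assumptions the frame rate scales linearly with carrier frequency, so the 35 GHz case is 3.5 times faster than the 10 GHz case at the same azimuth resolution.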

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

He, Z.; Chen, X.; Yi, T.; He, F.; Dong, Z.; Zhang, Y. Moving Target Shadow Analysis and Detection for ViSAR Imagery. Remote Sens. 2021, 13, 3012. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13153012

He Z, Chen X, Yi T, He F, Dong Z, Zhang Y. Moving Target Shadow Analysis and Detection for ViSAR Imagery. Remote Sensing. 2021; 13(15):3012. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13153012

Chicago/Turabian Style: He, Zhihua, Xing Chen, Tianzhu Yi, Feng He, Zhen Dong, and Yue Zhang. 2021. "Moving Target Shadow Analysis and Detection for ViSAR Imagery" Remote Sensing 13, no. 15: 3012. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13153012