Hyperspectral and Multispectral Image Fusion by Deep Neural Network in a Self-Supervised Manner

Abstract

1. Introduction

- We introduce a strategy for self-supervised fusion of a low-resolution hyperspectral image (LR HSI) and a high-resolution multispectral image (HR MSI). Unlike conventional deep learning methods, the proposed strategy does not depend on the size, or even the existence, of a training dataset.

- A simple diffusion process is introduced as a reference to constrain the spatial accuracy of the fusion results. Two simple but effective optimization terms are proposed as constraints on the spectral and spatial accuracy of the fusion results.

- Several simulated and real-data experiments are conducted on popular hyperspectral datasets. When no training dataset is available, our method outperforms all comparison methods, demonstrating the superiority of the proposed strategy.
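The contributions above mention two optimization terms that constrain spectral and spatial accuracy without any training dataset. The sketch below is not the authors' formulation and does not reproduce the diffusion-based reference; it only illustrates, under stated assumptions, the generic idea of a training-free consistency loss that is common in HSI and MSI fusion: a candidate fused cube is degraded spatially and compared with the LR HSI, then degraded spectrally and compared with the HR MSI. The block-averaging downsampler, the spectral response matrix `srf`, the `weight` parameter and all function names are hypothetical choices made for this example.

```python
import numpy as np


def spatial_degrade(cube, ratio):
    """Block-average an (H, W, C) cube by `ratio` along both spatial axes.

    Assumption: plain block averaging stands in for the sensor's blur
    and downsampling, which the text above does not specify.
    """
    h, w, c = cube.shape
    if h % ratio or w % ratio:
        raise ValueError("spatial size must be divisible by ratio")
    blocks = cube.reshape(h // ratio, ratio, w // ratio, ratio, c)
    return blocks.mean(axis=(1, 3))


def spectral_degrade(cube, srf):
    """Map C hyperspectral bands to c multispectral bands.

    `srf` is a hypothetical spectral response matrix of shape (c, C).
    """
    return cube @ srf.T


def consistency_loss(fused, lr_hsi, hr_msi, ratio, srf, weight=1.0):
    """Sum of two mean-squared consistency terms (illustrative only)."""
    # Spectral term: the fused cube, once downsampled, should match the LR HSI.
    spectral_term = np.mean((spatial_degrade(fused, ratio) - lr_hsi) ** 2)
    # Spatial term: the fused cube, once band-reduced, should match the HR MSI.
    spatial_term = np.mean((spectral_degrade(fused, srf) - hr_msi) ** 2)
    return float(spectral_term + weight * spatial_term)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    truth = rng.random((8, 8, 6))          # synthetic HR HSI
    srf = rng.random((3, 6))
    srf /= srf.sum(axis=1, keepdims=True)  # each MSI band is a weighted average
    lr_hsi = spatial_degrade(truth, 4)
    hr_msi = spectral_degrade(truth, srf)
    print(consistency_loss(truth, lr_hsi, hr_msi, 4, srf))
```

Both terms depend only on the two observed images, so a loss of this kind can be minimized for a single image pair without ground truth. In a real pipeline the fused cube would be the output of a network and the loss would be written in an autodiff framework rather than NumPy.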

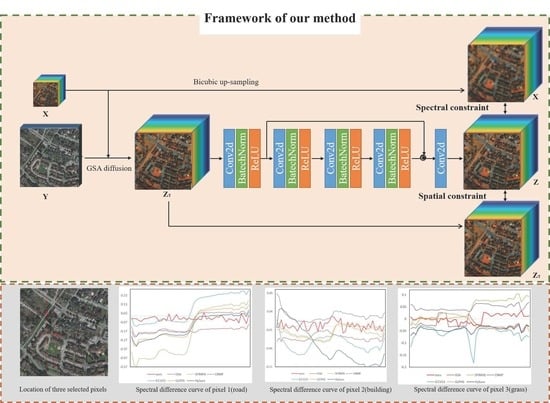

2. Methods

2.1. Problem Formulation

2.2. Fusion Process

2.3. Network Structure

3. Experiments

3.1. Experiment Settings

3.1.1. Datasets

3.1.2. Comparison Methods

3.1.3. Evaluation Methods
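The result tables in this article report four metrics: PSNR, SSIM, SAM and CC. As a rough illustration only, the sketch below shows one common way to compute PSNR, SAM and CC with NumPy for image cubes of shape (height, width, bands). The function names, the per-band averaging, the `data_range` parameter and the use of degrees for SAM are assumptions made for this example, not details taken from the article. SSIM is left out because it needs a windowed implementation.

```python
import numpy as np


def psnr(ref, est, data_range=1.0):
    """Peak signal-to-noise ratio in dB, averaged over bands.

    Assumption: images are scaled so that `data_range` is the peak value.
    """
    mse = np.mean((ref - est) ** 2, axis=(0, 1))  # one MSE per band
    mse = np.maximum(mse, 1e-12)                  # avoid log of zero
    return float(np.mean(10.0 * np.log10(data_range ** 2 / mse)))


def sam(ref, est, eps=1e-12):
    """Spectral angle mapper: mean angle between pixel spectra, in degrees."""
    dot = np.sum(ref * est, axis=-1)
    norms = np.linalg.norm(ref, axis=-1) * np.linalg.norm(est, axis=-1)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.mean(np.arccos(cos))))


def cc(ref, est):
    """Pearson correlation coefficient, averaged over bands."""
    r = ref.reshape(-1, ref.shape[-1])
    e = est.reshape(-1, est.shape[-1])
    r = r - r.mean(axis=0)
    e = e - e.mean(axis=0)
    num = np.sum(r * e, axis=0)
    den = np.sqrt(np.sum(r ** 2, axis=0) * np.sum(e ** 2, axis=0))
    return float(np.mean(num / den))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ref = rng.random((16, 16, 8)) + 0.1           # synthetic reference cube
    est = ref + 0.01 * rng.standard_normal(ref.shape)
    print(psnr(ref, est), sam(ref, est), cc(ref, est))
```

Higher PSNR, SSIM and CC indicate a closer match to the reference, while a lower SAM indicates less spectral distortion.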

3.1.4. Implementation Details

3.2. Experiment with Simulated Multispectral Images

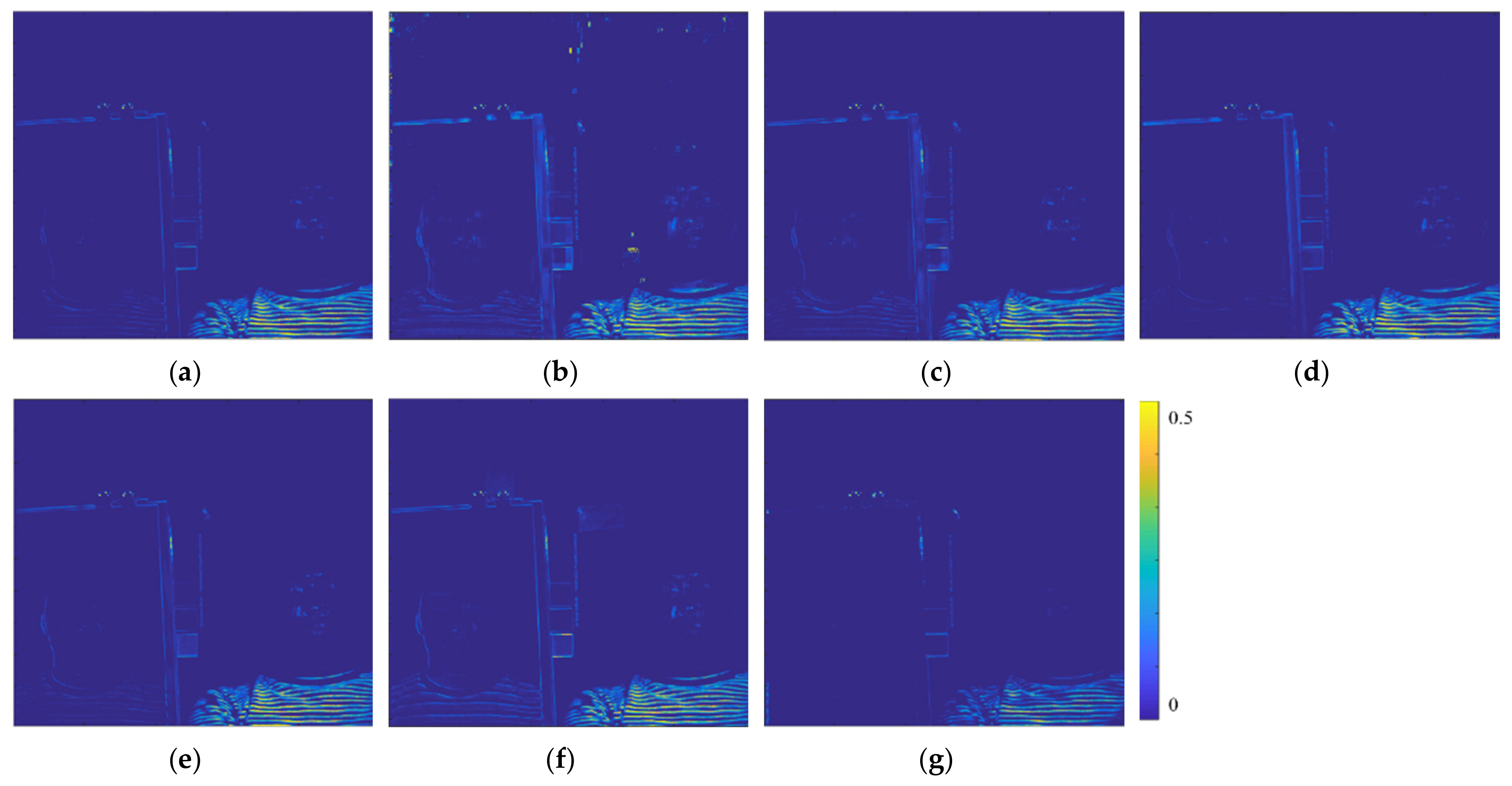

3.2.1. CAVE Dataset

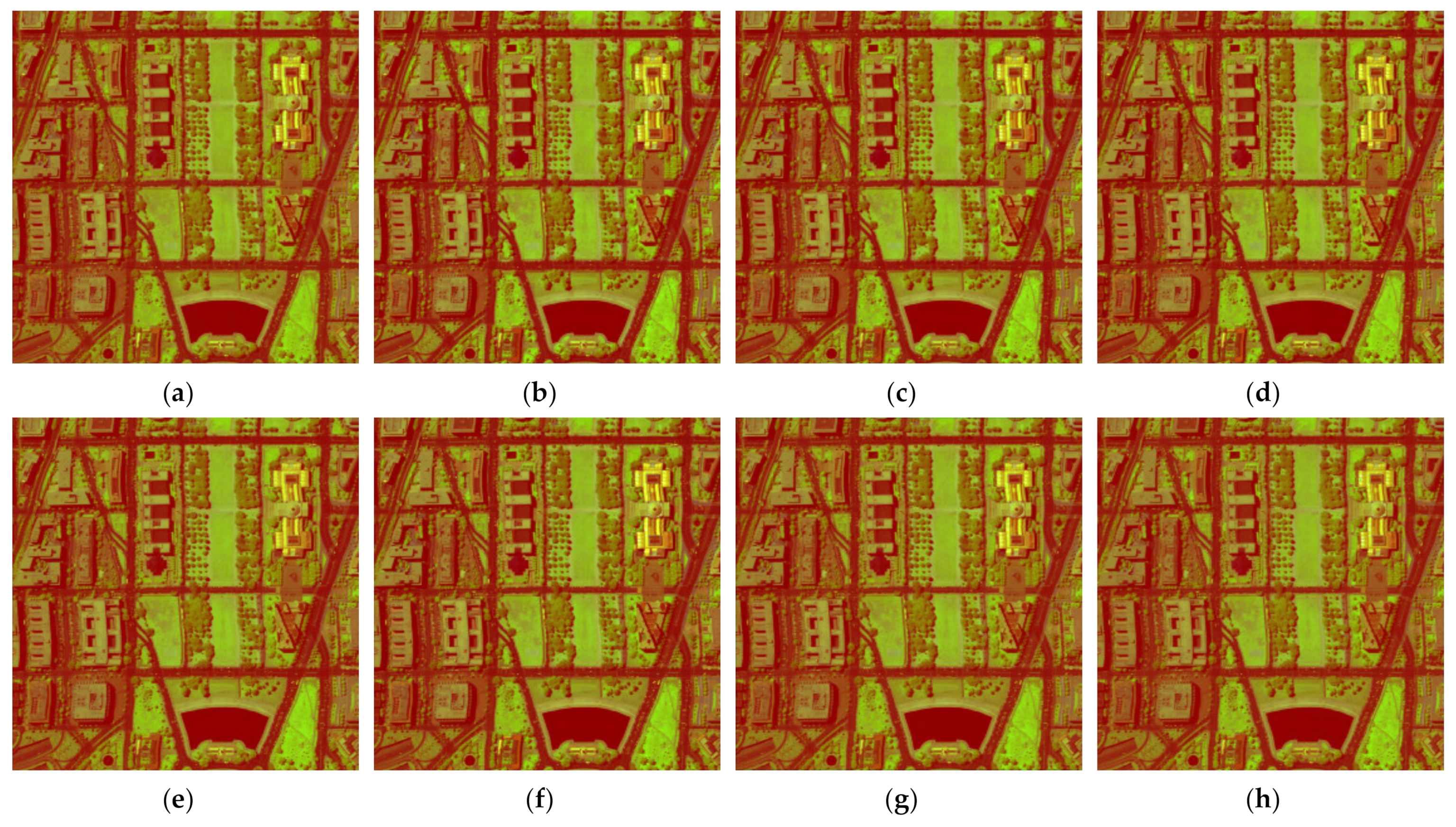

3.2.2. Pavia University Dataset

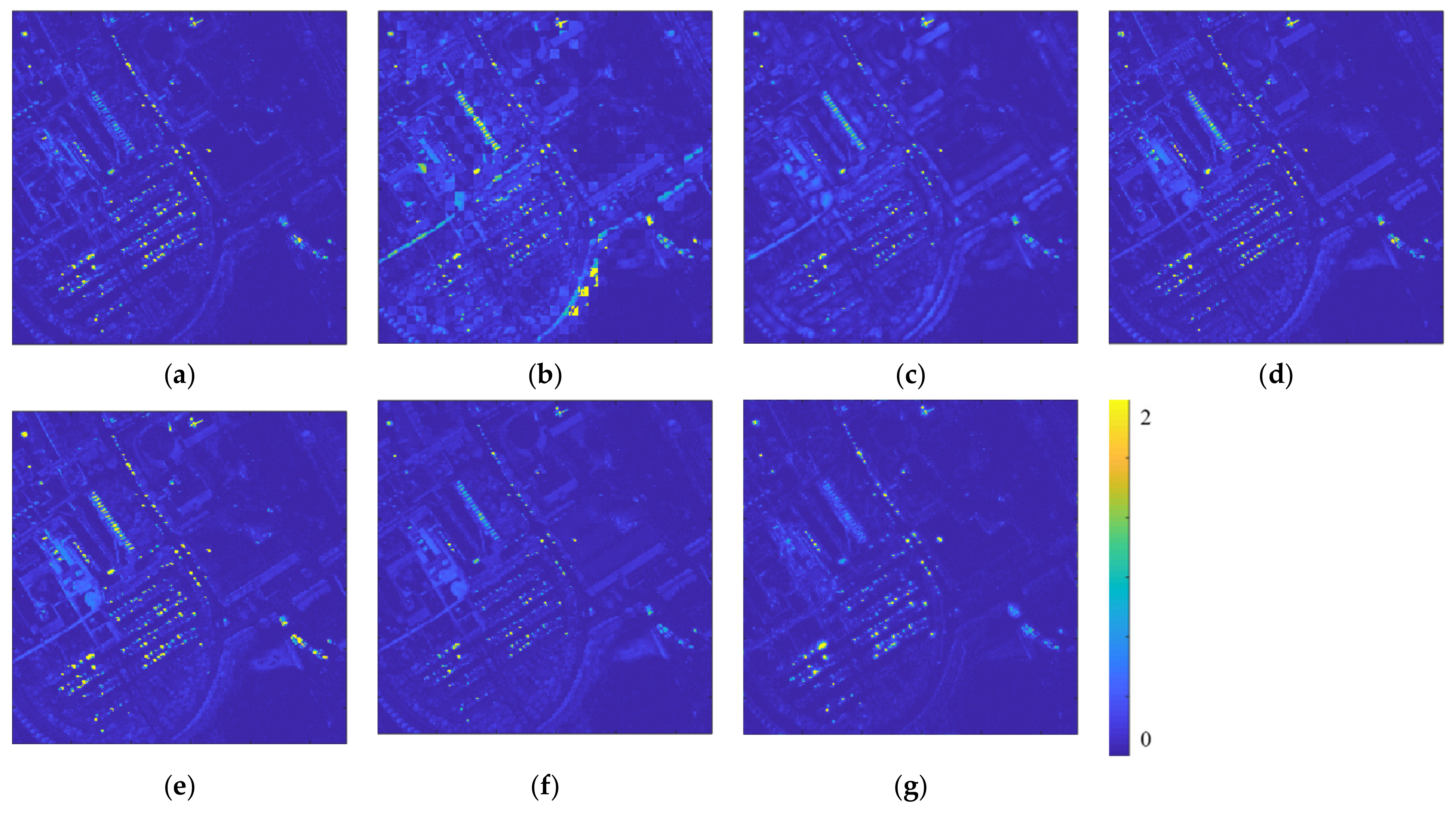

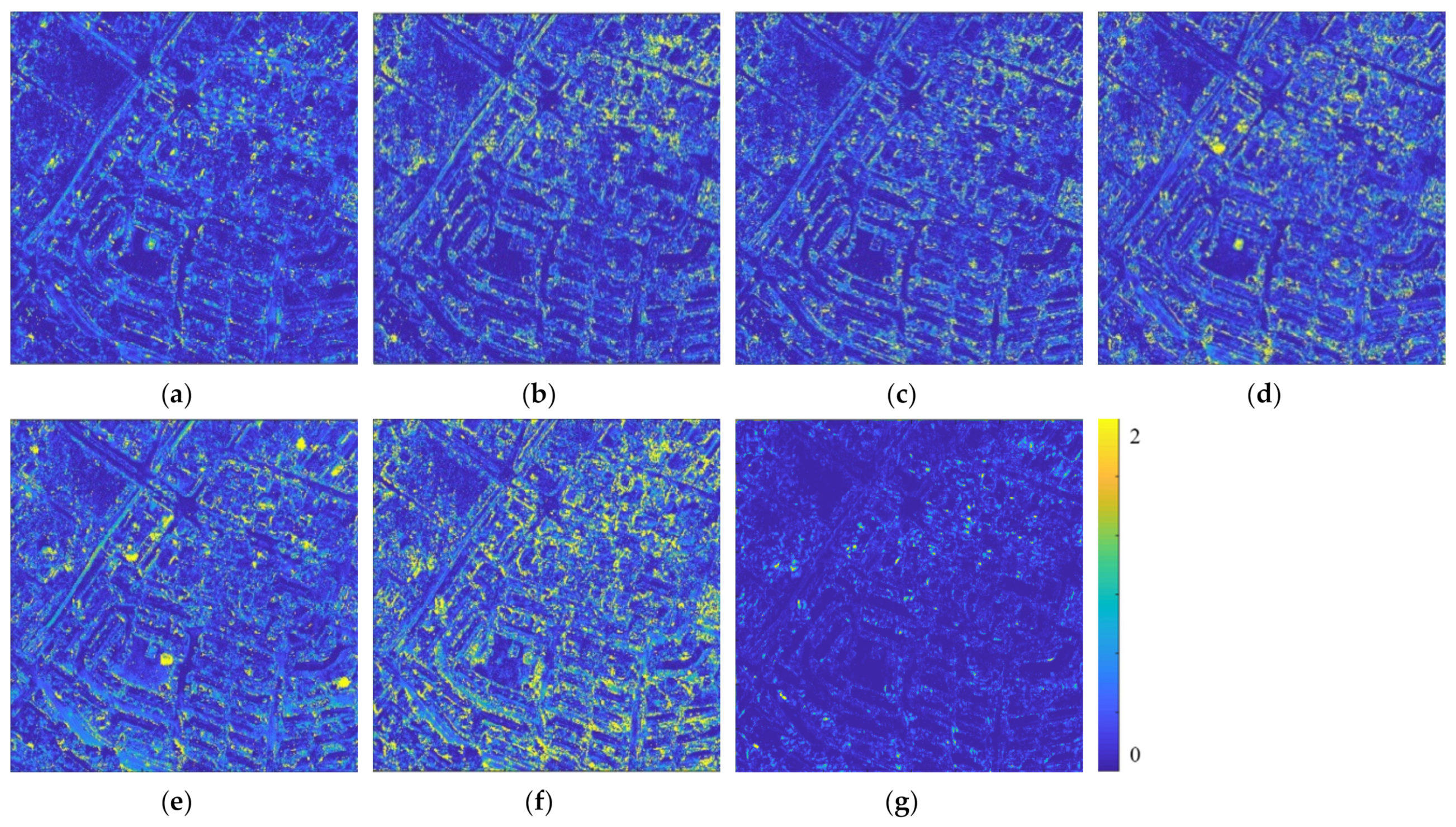

3.2.3. Washington DC Dataset

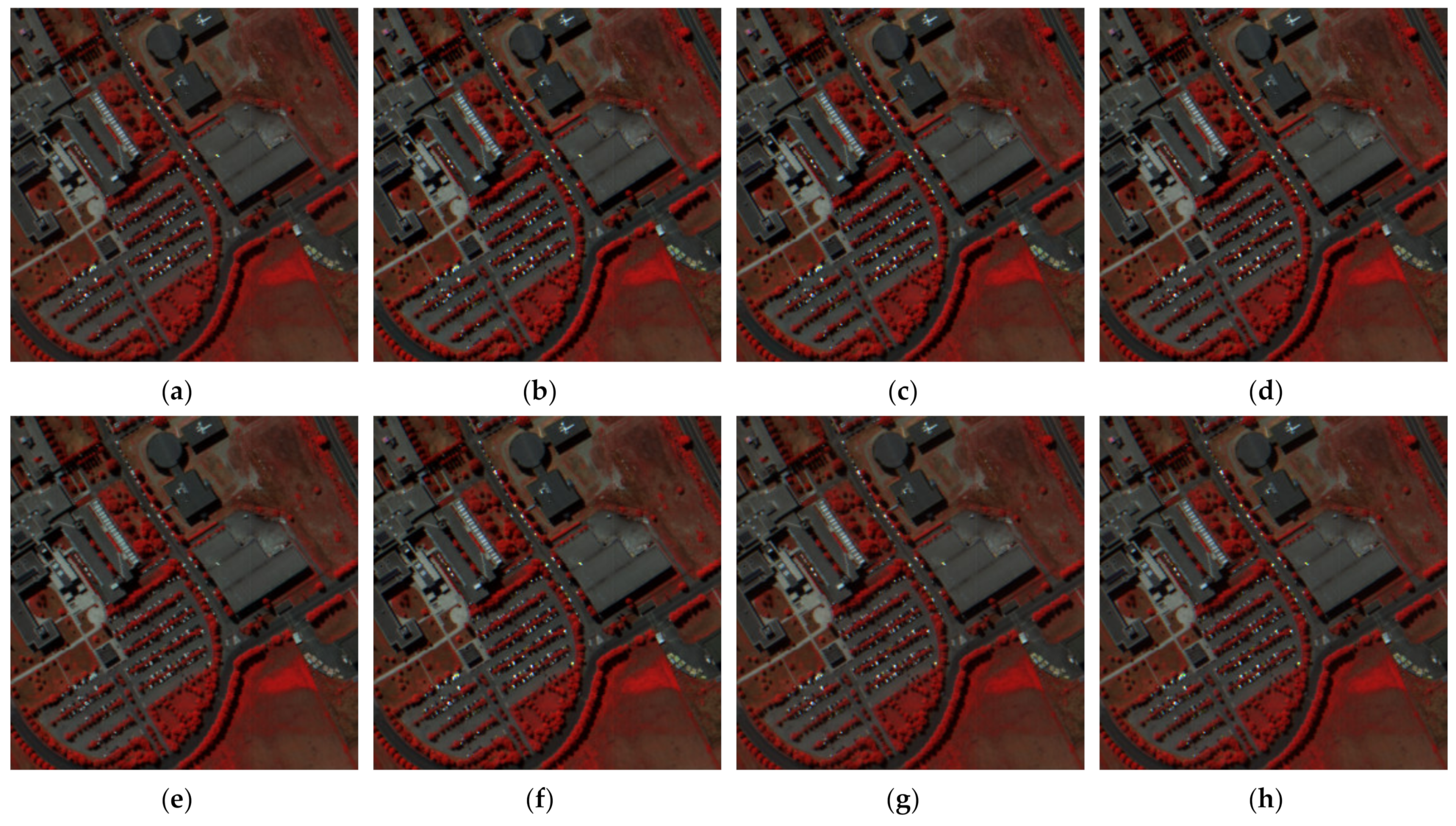

3.3. Experiment with Real Multispectral Images

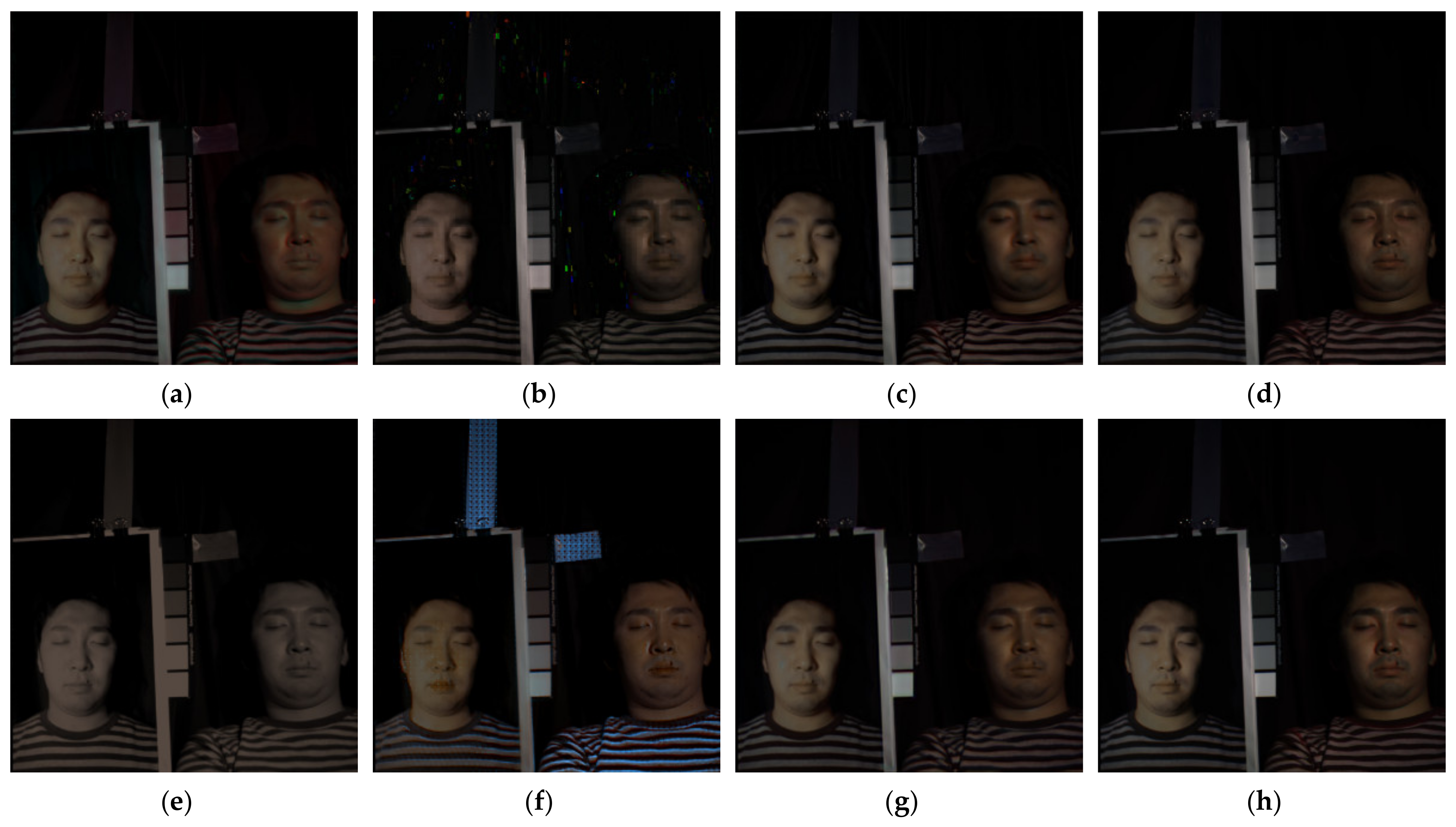

3.3.1. CAVE Dataset

3.3.2. Houston 2018 Dataset

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Method | PSNR | SSIM | SAM | CC |
|---|---|---|---|---|
| GSA | 38.4838 | 0.9742 | 4.5940 | 0.9745 |
| SFIMHS | 33.8042 | 0.9396 | 8.7843 | 0.9641 |
| CNMF | 34.8185 | 0.9506 | 7.6047 | 0.9109 |
| ICCV15 | 36.5875 | 0.9652 | 6.0231 | 0.9766 |
| GLPHS | 37.4164 | 0.9606 | 5.5818 | 0.9769 |
| HySure | 37.3821 | 0.9585 | 6.7477 | 0.9641 |
| Ours | 40.1032 | 0.9864 | 4.2902 | 0.9832 |

| Method | PSNR | SSIM | SAM | CC |
|---|---|---|---|---|
| GSA | 38.0679 | 0.9721 | 3.6394 | 0.9337 |
| SFIMHS | 35.8753 | 0.9651 | 4.0605 | 0.9343 |
| CNMF | 37.6781 | 0.9720 | 3.6576 | 0.9335 |
| ICCV15 | 38.3872 | 0.9737 | 3.3430 | 0.9220 |
| GLPHS | 37.6992 | 0.9742 | 3.3215 | 0.9178 |
| HySure | 35.7145 | 0.9676 | 3.6405 | 0.9215 |
| Ours | 38.7403 | 0.9751 | 3.2625 | 0.9345 |

| Method | PSNR | SSIM | SAM | CC |
|---|---|---|---|---|
| GSA | 38.6088 | 0.9839 | 1.9482 | 0.9932 |
| SFIMHS | 36.4287 | 0.9817 | 2.2033 | 0.9924 |
| CNMF | 38.3836 | 0.9857 | 1.8832 | 0.9929 |
| ICCV15 | 36.9171 | 0.9696 | 2.2603 | 0.9767 |
| GLPHS | 37.5585 | 0.9763 | 2.1159 | 0.9750 |
| HySure | 37.5309 | 0.9805 | 1.9879 | 0.9901 |
| Ours | 39.1805 | 0.9873 | 1.6875 | 0.9937 |

| Method | PSNR | SSIM | SAM | CC |
|---|---|---|---|---|
| GSA | 30.3058 | 0.8591 | 13.8931 | 0.9727 |
| SFIMHS | 25.2277 | 0.8126 | 22.3912 | 0.9244 |
| CNMF | 30.6975 | 0.8908 | 10.7764 | 0.9496 |
| ICCV15 | 27.1515 | 0.8811 | 12.9924 | 0.9412 |
| GLPHS | 35.0865 | 0.9262 | 8.4063 | 0.9829 |
| HySure | 27.7137 | 0.8312 | 14.7039 | 0.9482 |
| Ours | 36.0586 | 0.9601 | 6.6032 | 0.9863 |

| Method | PSNR | SSIM | SAM | CC |
|---|---|---|---|---|
| GSA | 24.2119 | 0.5682 | 9.4216 | 0.9995 |
| SFIMHS | 22.6962 | 0.5431 | 11.0776 | 0.9996 |
| CNMF | 24.4170 | 0.5633 | 8.0521 | 0.9996 |
| ICCV15 | 18.8145 | 0.3710 | 18.2986 | 0.9961 |
| GLPHS | 24.6457 | 0.5935 | 8.5538 | 0.9990 |
| HySure | 23.6509 | 0.5699 | 8.0248 | 0.9994 |
| Ours | 27.1543 | 0.6772 | 7.4625 | 0.9997 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Gao, J.; Li, J.; Jiang, M. Hyperspectral and Multispectral Image Fusion by Deep Neural Network in a Self-Supervised Manner. Remote Sens. 2021, 13, 3226. https://0-doi-org.brum.beds.ac.uk/10.3390/rs13163226